By MARTIN HÄGERDAL,

President,

Ericsson Power Modules,

www.ericsson.com

Energy efficiency is now a key requirement in most computing applications. The massive growth of data centre usage around the world, now responsible for a tenth of the world’s electricity consumption, has sharpened that focus even further. The focus now extends into areas such as telecommunications, where concepts such as software-defined networking have taken hold. For example, the move to 5G will bring a shift to much more energy-efficient basestation architectures, and these systems will most likely be accompanied by compute servers that act as the main gateway to the cloud for the Internet of Things (IoT) and provide advanced, programmable services for network users.

Traditionally, server and telecom switch architectures have been optimised for power efficiency under peak loads. Today’s switched-mode converters can deliver efficiencies of more than 95 percent when operated at full load. But the push for system-level energy efficiency means that systems will operate at peak load only for short periods. Much of the time they will be idling or running at lower clock speeds to conserve energy. This demands a different approach to power delivery, one that extends from the front-end converter down to the individual microprocessors themselves.

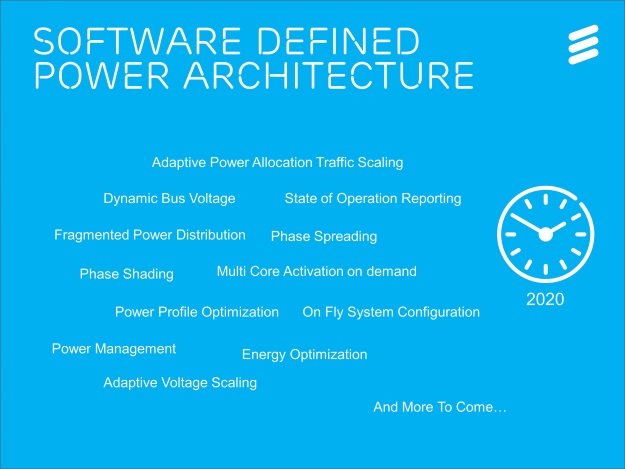

In parallel with the move towards software-defined architectures that increasingly underpin the digital world, power delivery calls for an increasingly software-defined approach. The software-defined power architecture (SDPA) has the potential to bring truly energy-efficient capabilities to advanced network and server applications.

Fig. 1: Software-defined power architecture (SDPA) can bring many energy-efficient capabilities including adaptive power allocation, dynamic bus voltage, phase spreading, on-the-fly system configuration, and many more functions to many applications.

SDPA recognizes the need for the power flexibility that advanced microprocessors, and the field-programmable gate arrays (FPGAs) that often support them, now require. These devices frequently shift between power and voltage states as they adapt dynamically to the compute workload.

At the level of the point-of-load (PoL) regulator, adaptive voltage scaling (AVS) is a powerful technique for optimising supply voltages and minimising energy consumption in modern high-performance microprocessors. The technique exploits the quadratic relationship between supply voltage and dynamic power consumption in the CMOS circuitry used in digital logic, so even small changes in voltage can have a dramatic effect on overall power consumption. Higher voltages allow CMOS logic to switch more quickly, so the supply only needs to be high enough to support the clock frequency in use. When workloads are light, the processor’s clock can be slowed down and the voltage reduced to the lowest level that still supports that frequency.
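As a rough illustration of the quadratic relationship, the sketch below uses the standard first-order model for CMOS dynamic power, P = a * C * V^2 * f, and ignores leakage. The capacitance and the operating points are hypothetical values chosen only to show the scaling, not figures for any real processor.

```python
def dynamic_power(c_eff: float, v: float, f: float, activity: float = 1.0) -> float:
    """First-order CMOS dynamic (switching) power, P = a * C * V^2 * f.
    Leakage power is ignored."""
    return activity * c_eff * v ** 2 * f


C_EFF = 1e-9  # hypothetical effective switched capacitance, farads

p_full = dynamic_power(C_EFF, v=1.0, f=2.0e9)        # assumed: 1.0 V at 2 GHz
p_half_clock = dynamic_power(C_EFF, v=1.0, f=1.0e9)  # clock halved, rail unchanged
p_avs = dynamic_power(C_EFF, v=0.8, f=1.0e9)         # clock halved, rail cut to 0.8 V
print(f"{p_full:.2f} W -> {p_half_clock:.2f} W (clock only) -> {p_avs:.2f} W (clock + voltage)")
```

Halving the clock alone halves dynamic power in this model; also lowering the rail from 1.0 V to 0.8 V brings it to roughly a third of the original, which is why AVS pairs frequency changes with voltage changes.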

AVS also compensates automatically for process and temperature variations in the processor. To do so, it uses real-time closed-loop control to provide a supply voltage that lets the processor run at an appropriate clock frequency for its current workload. Leading-edge high-performance microprocessors can change workload and operating conditions within nanoseconds, so real-time regulation of the processor supply places high demands on control-loop bandwidth and requires close monitoring of the computing hardware’s performance.
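The closed-loop behaviour can be sketched as a discrete-time integral controller: an on-chip monitor reports how much timing margin (slack) the processor has at its current clock, and the regulator nudges its set-point to hold that margin at a target. Everything here is an assumption for illustration: the `AvsController` class, the linear `simulated_slack` monitor model, the gain and the voltage limits. A real AVS loop runs in hardware at far higher bandwidth than a software loop like this.

```python
from dataclasses import dataclass


@dataclass
class AvsController:
    """Hypothetical integral controller for adaptive voltage scaling."""
    target_slack: float  # desired timing margin from the monitor (arbitrary units)
    gain: float          # integral gain, volts per unit of slack error
    v_min: float         # lowest allowed rail voltage, volts
    v_max: float         # highest allowed rail voltage, volts
    v_out: float         # present rail set-point, volts

    def update(self, measured_slack: float) -> float:
        # Too little slack -> raise the rail; surplus slack -> lower it to save energy.
        error = self.target_slack - measured_slack
        self.v_out = min(self.v_max, max(self.v_min, self.v_out + self.gain * error))
        return self.v_out


def simulated_slack(v: float, v_crit: float) -> float:
    """Assumed monitor model: slack grows linearly above v_crit, the lowest
    voltage at which the present clock frequency still meets timing."""
    return 10.0 * (v - v_crit)


def settle(avs: AvsController, v_crit: float, steps: int = 40) -> float:
    """Run the closed loop for a fixed number of control updates."""
    for _ in range(steps):
        avs.update(simulated_slack(avs.v_out, v_crit))
    return avs.v_out


avs = AvsController(target_slack=0.5, gain=0.05, v_min=0.7, v_max=1.1, v_out=1.0)
v_cool = settle(avs, v_crit=0.85)  # assumed: fast silicon at low temperature
v_hot = settle(avs, v_crit=0.88)   # assumed: die heats up, logic slows down
print(f"cool: {v_cool:.3f} V, hot: {v_hot:.3f} V")
```

When the simulated die heats up and `v_crit` rises, the same loop raises the rail by 30 mV without any change to its settings, which is the kind of temperature compensation described above.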

The granularity of power control is likely to become finer over time. One enhancement of AVS will be multicore activation on demand, in which individual cores within a multicore processor are deactivated at times, on the instruction of software running on the processor itself or of an overall system controller, to optimise power at the system or board level.
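A controller’s activation decision could, in its simplest form, look like the policy below: power up just enough cores to carry the workload without running any of them too hard, and gate the rest. The function name, the 80 percent utilisation ceiling and the demand figures are hypothetical; a production scheduler would likely also weigh wake-up latency and thermal limits.

```python
import math


def cores_needed(demand: float, core_capacity: float, n_cores: int,
                 max_utilisation: float = 0.8) -> int:
    """Hypothetical policy: power up just enough cores that none runs above
    max_utilisation of its capacity; always keep at least one core active."""
    if demand <= 0:
        return 1
    needed = math.ceil(demand / (core_capacity * max_utilisation))
    return max(1, min(n_cores, needed))


N_CORES = 8
for demand in (0.5, 3.0, 10.0):  # workload in units of one core's capacity
    active = cores_needed(demand, core_capacity=1.0, n_cores=N_CORES)
    gated = list(range(active, N_CORES))  # cores a controller could power-gate
    print(f"demand {demand}: {active} active, gate cores {gated}")
```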

Although local AVS control will be a key attribute of SDPA, the requirements of these advanced processors ripple through the entire power-distribution infrastructure of the server, basestation or switch rack, and the way power reaches the PoL converters has to change. The intermediate bus architecture (IBA) has been a key part of datacom and large server designs for a number of years. The IBA distributes power to the PoL regulators at a static 12 to 14 Vdc. The bus is fed by intermediate bus converters (IBCs), which may be supplied from 48 Vdc in typical datacom systems or from a higher-voltage ac supply in compute servers. A 12 or 14 Vdc intermediate bus is high enough to deliver all the power the load requires in times of high data traffic, with comparatively low losses over the interconnect from the IBC to the tree of PoL regulators.
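The case for a relatively high bus voltage follows from conduction loss: for a given power P, the bus current is I = P / V, so the loss in the interconnect resistance R is I^2 * R and scales with 1 / V^2. The sketch below assumes board powers of 600 W and 150 W and a 2 milliohm distribution resistance; these are illustrative figures, not measured values.

```python
def distribution_loss(power_w: float, bus_v: float, r_ohm: float) -> float:
    """Conduction loss in the bus interconnect: I = P / V, loss = I^2 * R."""
    current_a = power_w / bus_v
    return current_a ** 2 * r_ohm


R_BUS = 0.002  # assumed 2 milliohm interconnect resistance (illustrative)
for load_w in (600.0, 150.0):  # assumed high-traffic and light-traffic board power
    for bus_v in (8.0, 12.0, 14.0):
        loss_w = distribution_loss(load_w, bus_v, R_BUS)
        print(f"{load_w:5.0f} W at {bus_v:4.1f} V: {load_w / bus_v:5.1f} A, {loss_w:5.2f} W lost")
```

At 600 W, moving from an 8 V to a 12 V bus cuts the conduction loss in this example from 11.25 W to 5 W, while at 150 W even an 8 V bus loses well under 1 W.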

The traditional IBA approach loses efficiency as compute or data-traffic levels fall and the power converters see lighter loads. A shift to dynamic bus voltage (DBV) control makes it possible to adapt the power envelope to changing load conditions. DBV adjusts the intermediate bus voltage in response to load changes, combining advanced digital power control and optimised hardware with a set of software algorithms to deliver higher conversion efficiencies.
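One way to see why an adjustable bus voltage helps is a toy loss model with two opposing terms: conduction loss R * (P / V)^2, which falls as the bus voltage V rises, and a term k_sw * V standing in for converter losses that grow with input voltage. The model, its coefficients and the function names are assumptions for illustration, not Ericsson’s DBV algorithm; the 8 to 14 V limits are taken from the BMR465 operating range quoted in Fig. 2.

```python
def bus_loss(power_w: float, bus_v: float, r_ohm: float, k_sw: float) -> float:
    """Toy loss model: k_sw * V (losses rising with bus voltage)
    plus R * (P / V)^2 (conduction loss falling with bus voltage)."""
    return k_sw * bus_v + r_ohm * (power_w / bus_v) ** 2


def optimal_bus_voltage(power_w: float, r_ohm: float = 0.002, k_sw: float = 0.5,
                        v_lo: float = 8.0, v_hi: float = 14.0) -> float:
    """Minimise bus_loss over [v_lo, v_hi]. Setting d(loss)/dV = k_sw - 2*R*P^2/V^3
    to zero gives V* = (2*R*P^2 / k_sw)^(1/3); the loss is convex for V > 0,
    so clamping V* to the allowed range gives the constrained optimum."""
    v_star = (2.0 * r_ohm * power_w ** 2 / k_sw) ** (1.0 / 3.0)
    return min(v_hi, max(v_lo, v_star))


for load_w in (150.0, 300.0, 600.0):  # assumed board power at three traffic levels
    v = optimal_bus_voltage(load_w)
    print(f"{load_w:5.0f} W -> bus {v:5.2f} V, loss {bus_loss(load_w, v, 0.002, 0.5):.2f} W "
          f"(vs {bus_loss(load_w, 12.0, 0.002, 0.5):.2f} W at a fixed 12 V)")
```

In this toy model the optimum bus voltage rises with load, so a controller would run the bus low at light traffic and push it toward the top of the range at peak traffic. A practical implementation would more likely work from measured efficiency data than from a closed-form model.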

Fig. 2: Ericsson’s BMR465 PoL converter can be operated as a standalone unit delivering 90 A or connected in parallel to deliver up to 360 A. Offering a high level of software control, the converter operates across intermediate bus voltages from 8 to 14 V, making it compatible with the Dynamic Bus Voltage scheme expected to be implemented in SDPA.

According to research conducted by Ericsson, a shift to DBV can reduce board-level power consumption by between 3 and 10 percent. This cuts direct energy consumption and, because less heat is produced, also reduces the amount of forced-air cooling required. As a result, the energy consumption of the system’s infrastructure is lowered as well.

A third key technology for SDPA is phase spreading. This technique uses multiple switching converter circuits (phases), each phase-shifted from the others. The approach allows the high peak currents needed by high-performance processors operating from supply rails of 1 V or less to be drawn without the high peaks of electromagnetic interference that can occur with single-phase switching architectures. Phase spreading also improves efficiency at lower loads, because phases can be switched off as current requirements fall during periods of low system activity.
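The interleaving and phase-shedding logic can be sketched in a few lines: spread the active phases evenly across the switching period, and keep only as many phases running as the load current needs. The per-phase current threshold and the six-phase example are hypothetical, and practical controllers typically add hysteresis so that phases do not toggle on and off around a threshold.

```python
import math


def active_phases(load_a: float, n_phases: int, amps_per_phase: float) -> int:
    """Hypothetical shedding rule: run just enough phases that none carries
    more than amps_per_phase, with at least one phase always on."""
    if load_a <= 0:
        return 1
    return max(1, min(n_phases, math.ceil(load_a / amps_per_phase)))


def phase_offsets_deg(n_active: int) -> list[float]:
    """Interleave the active phases evenly across one switching period."""
    return [i * 360.0 / n_active for i in range(n_active)]


for load_a in (20.0, 150.0, 400.0):  # assumed processor rail currents
    n = active_phases(load_a, n_phases=6, amps_per_phase=30.0)
    print(f"{load_a:5.0f} A: {n} phase(s) at {phase_offsets_deg(n)} degrees")
```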

As the power density of servers increases, enabled by the integration of processors and support logic, architectures are needed that can handle multi-kilowatt system boards. One possible solution is fragmented power distribution, which builds on digital power monitoring and control capabilities. The approach distributes multiple dc/dc converters around the board to create power islands. The converters communicate over an internal bus, which could be PMBus or a bus dedicated to current sharing, and operate in concert to share and optimise the delivery of power to the loads.
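At its core, current sharing means each converter regulates toward a fair share of the total load. The sketch below only computes those per-converter targets, in proportion to each unit’s rating; the function is hypothetical and leaves out the control loop and the bus messaging. The 90 A rating echoes the BMR465 figure in Fig. 2, while the mixed power island is an invented example.

```python
def share_current(total_a: float, ratings_a: list[float]) -> list[float]:
    """Target output current for each paralleled converter, proportional
    to its rating, so all units run at the same fraction of capacity."""
    capacity_a = sum(ratings_a)
    if total_a > capacity_a:
        raise ValueError(f"load {total_a} A exceeds combined rating {capacity_a} A")
    return [total_a * r / capacity_a for r in ratings_a]


print(share_current(300.0, [90.0] * 4))          # -> [75.0, 75.0, 75.0, 75.0]
print(share_current(110.0, [90.0, 90.0, 40.0]))  # -> [45.0, 45.0, 20.0]
```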

SDPA will also be supported by other technologies, such as adaptive power allocation and traffic scaling, in which voltage levels are allocated across highly complex board-level systems to meet changes in data-traffic demand. System-level reporting of traffic levels will allow a system controller to decide how best to allocate resources, and the power delivery associated with them, on a second-by-second basis. In this framework, boards may be preconfigured for different application scenarios, with power-profile optimisation software selecting the profile best suited to the situation.
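The profile-selection step can be sketched as choosing, at each reporting interval, the lowest-power preconfigured profile whose traffic capacity covers current demand. The `PowerProfile` type, the three profiles and their traffic and power figures are all hypothetical, chosen only to make the selection logic concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerProfile:
    name: str
    max_traffic_gbps: float  # traffic the board can carry in this profile
    board_power_w: float     # expected board power in this profile


PROFILES = (  # hypothetical values, for illustration only
    PowerProfile("idle", 10.0, 120.0),
    PowerProfile("normal", 100.0, 300.0),
    PowerProfile("peak", 400.0, 650.0),
)


def select_profile(traffic_gbps: float,
                   profiles: tuple[PowerProfile, ...] = PROFILES) -> PowerProfile:
    """Pick the lowest-power profile that can carry the reported traffic;
    fall back to the highest-capacity profile if none can."""
    capable = [p for p in profiles if p.max_traffic_gbps >= traffic_gbps]
    if not capable:
        return max(profiles, key=lambda p: p.max_traffic_gbps)
    return min(capable, key=lambda p: p.board_power_w)


for traffic in (4.0, 60.0, 250.0, 900.0):  # e.g. one traffic report per second
    print(traffic, "Gbit/s ->", select_profile(traffic).name)
```

A real controller would probably add headroom and hysteresis before switching profiles, so that brief traffic spikes do not cause constant reconfiguration.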

Through the changes proposed by SDPA, fine-grained control over power delivery will become an integral part of datacom and other server architectures, as it provides the best way to achieve aggressive energy-efficiency targets.
