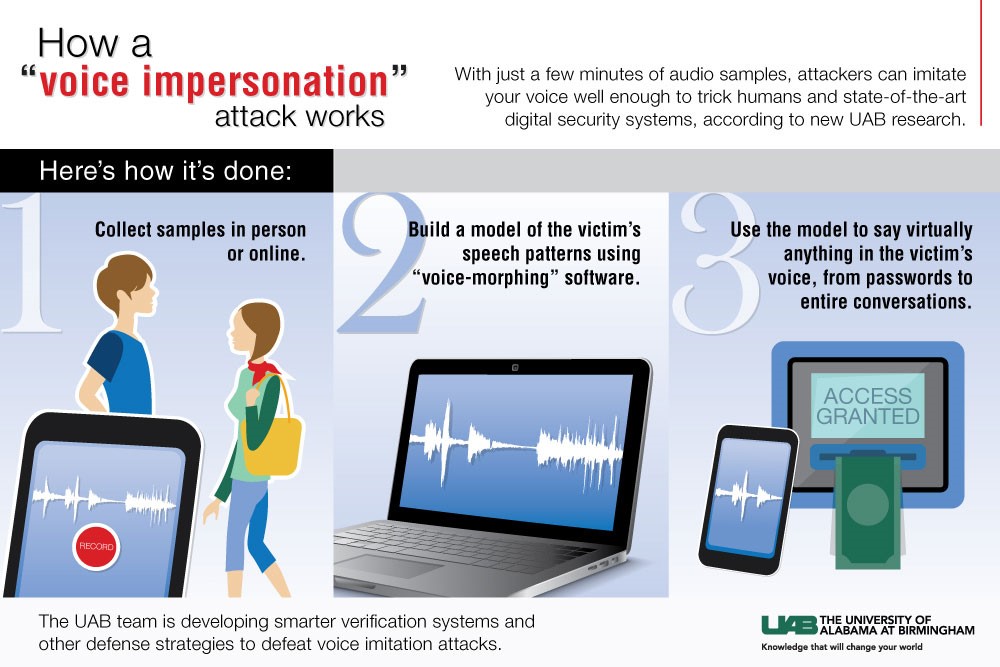

As if hacking someone’s fingerprints from a mere image wasn’t bad enough, the latest developments in the world of cybersecurity highlight a new potential threat: cracking into your bank account using an artificial impersonation of your voice.

After successfully penetrating automated and human voice verification systems using a widely available voice-morphing tool, researchers from the University of Alabama at Birmingham discovered that voice-based user authentication systems are vulnerable to voice impersonations.

Their findings were presented at the European Symposium on Research in Computer Security (ESORICS) earlier this month, and illustrated how advances in automated speech synthesis enable attackers to create models that very closely resemble the victim’s voice, which can then be used to speak any select message in that voice.

“The consequences of such a clone can be grave. Because voice is a characteristic unique to each person, it forms the basis of the authentication of the person, giving the attacker the keys to that person’s privacy,” explains Nitesh Saxena, the director of the Security and Privacy In Emerging computing and networking Systems (SPIES) lab and associate professor of computer and information sciences at UAB.

Worse, the cloning process requires only a few minutes of audio, meaning that attackers can gather samples from online videos posted on YouTube, podcasts, or phone calls, or even by recording the victim in person with a microphone.

“People often leave traces of their voices in many different scenarios. They may talk out loud while socializing in restaurants, giving public presentations or making phone calls, or leave voice samples online,” Saxena adds.

Testing their theory in the wild, the researchers achieved an 80 to 90% success rate fooling the automated “state-of-the-art” voice biometric systems employed by major banks and credit card companies. Voice recognition technology is also spreading beyond hassle-free banking: it is becoming widely accepted as a substitute for PINs on locked phones, and even for the keys used to access many government buildings.

Beyond unbridled access to users’ private data and the accompanying damage, morphed voice samples can also be used for character assassination or to create false testimony in court.

When testing the mimicked audio samples against humans, the team still managed to convince the authenticators 50% of the time, and this rate is likely to increase as voice-morphing technology improves.

So, if contemporary verification algorithms are this vulnerable, what are the probable solutions? Since preventing audio clips from being stolen is unrealistic in this day and age, the best defense requires the development of voice verification systems that test for the live presence of the speaker. The University of Alabama at Birmingham researchers are now working to answer this question in the most logical and economical way possible.

Source: UAB.edu
