Because it engages visual, echoic, and episodic memory, video has become a primary educational tool for skill acquisition, and it has proliferated with the growth of user-created content platforms like YouTube. These days, employers even use instructional videos to indoctrinate their workers with corporate HR policies or to teach them how to perform a job. The question is, what happens when the majority of your staff is made up of robots?

Researchers from Cornell University are attempting to automate this process by developing a form of machine learning that permits robots to learn new skills simply by watching a series of step-by-step videos. Best of all, there’s no Blu-ray or DVD necessary: the robot looks up what it needs the same way a human would, on YouTube. This may sound a bit high-tech and futuristic, but it’s a necessary part of establishing a foundation for the machine learning that will make robotic butlers a reality, allowing them to wash dishes, do the laundry, vacuum, and handle other household duties.

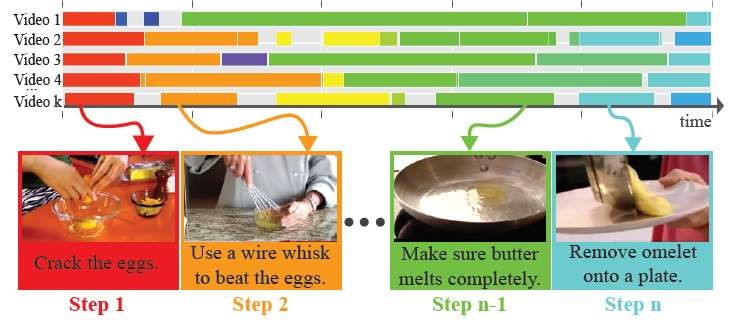

The project, which is called “RoboWatch,” is made possible by the common structure found in most of the millions of “how-to” videos available on YouTube. A quick search reveals that there are 47,400 videos on how to vacuum a carpet and 837,000 videos on how to feed cats. By scanning through multiple videos, the RoboWatch software can establish a pattern and transform it into step-by-step instructions in natural language.

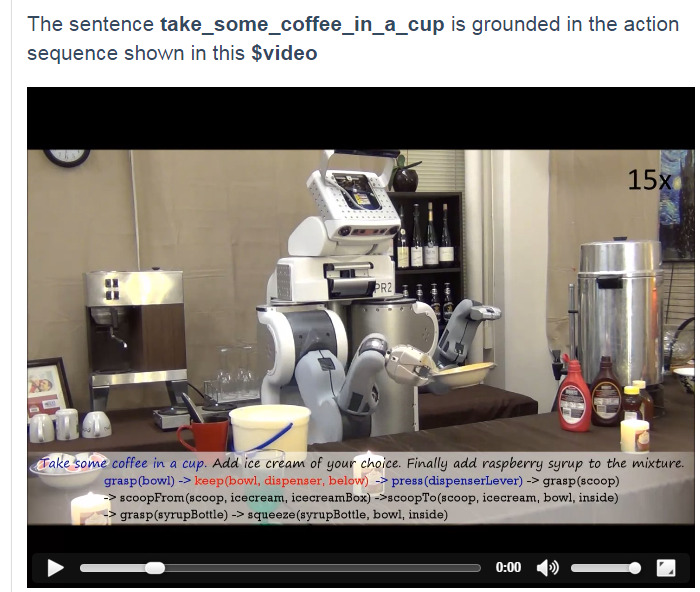

According to Ozan Sener, lead author of a video-parsing paper presented on Dec. 16 at the International Conference on Computer Vision in Santiago, Chile, the system functions unsupervised. That is a departure from most machine learning endeavors, which require a human to translate what the robot sees into a language that the robot will understand. For example, Google’s Machine Vision can draw an image of dogs after being taught that the image in question is that of a “dog.” In RoboWatch’s case, the system begins by sending a query to YouTube to find a collection of how-to videos on the task that it is being asked to do.

Its algorithm includes routines to omit any “outliers,” or videos that fit the keyword criteria but are not instructional; for example, a query about cooking spaghetti will omit videos pertaining to kitchen appliance ads or cartoons. This is accomplished by scanning every single video frame for repeating objects and scanning the subtitle narration for repeating words. The software then matches the similar segments in multiple videos and orders them into a single sequence, before using the subtitles to produce written instructions.
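To make the idea concrete, here is a minimal Python sketch of that pipeline. It is not the RoboWatch code: it works on subtitle text only (the real system also looks for repeating objects in the video frames), and the function names, stopword list, thresholds, and sample clips are all assumptions made up for illustration. It finds words that recur across videos, drops videos that share almost none of them, and orders the recurring words by where they tend to appear.

```python
from collections import Counter

# Hypothetical stopword list; a real system would use a far larger one.
STOPWORDS = {"the", "a", "an", "and", "to", "of", "in", "it", "on", "with", "our"}

def tokens(segment):
    """Lowercase a subtitle segment and return its non-stopword words as a set."""
    words = (w.strip(".,!?").lower() for w in segment.split())
    return {w for w in words if w and w not in STOPWORDS}

def video_words(segments):
    """All non-stopword words used anywhere in one video."""
    return set().union(*(tokens(s) for s in segments))

def recurring_words(videos, min_share=0.5):
    """Words that occur in at least `min_share` of the videos."""
    counts = Counter()
    for segments in videos.values():
        counts.update(video_words(segments))
    needed = min_share * len(videos)
    return {w for w, c in counts.items() if c >= needed}

def drop_outliers(videos, common, min_hits=2):
    """Keep only videos that mention at least `min_hits` recurring words."""
    return {name: segs for name, segs in videos.items()
            if len(video_words(segs) & common) >= min_hits}

def order_steps(videos, common):
    """Order recurring words by their average relative position in the videos."""
    positions = {}
    for segments in videos.values():
        last = max(len(segments) - 1, 1)
        for i, seg in enumerate(segments):
            for w in tokens(seg) & common:
                positions.setdefault(w, []).append(i / last)
    # Ties are broken alphabetically so the result is deterministic.
    return sorted(positions, key=lambda w: (sum(positions[w]) / len(positions[w]), w))

# Made-up subtitle segments standing in for search results on "cook spaghetti".
videos = {
    "clip_a": ["Boil water in a large pot", "Add the spaghetti",
               "Drain the spaghetti", "Serve with sauce"],
    "clip_b": ["First boil the water", "Add spaghetti and salt",
               "Drain it", "Serve hot"],
    "clip_c": ["Boil water", "Add spaghetti", "Drain well", "Serve"],
    "ad": ["Buy our new blender today", "Only 49 dollars"],
}

common = recurring_words(videos)
kept = drop_outliers(videos, common)
steps = order_steps(kept, common)
print(sorted(kept))
print(steps)
```

In this toy run the blender ad shares no recurring words with the other clips, so it is discarded, and the remaining words line up in roughly the order a cook would follow. Averaging relative positions is a deliberately crude stand-in for the segment matching the article describes.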

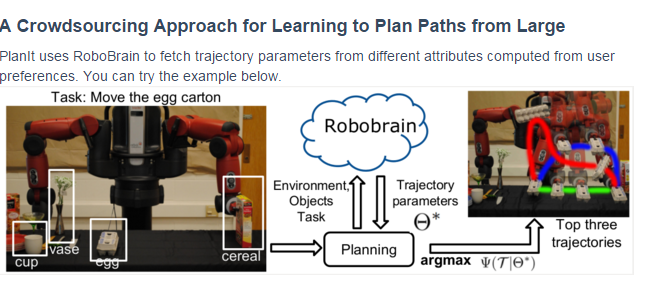

The fruit of the labor is available on RoboBrain, an online database of instructions made by robots, for robots.

Source: Cornell Chronicle
