As the National Highway Traffic Safety Administration (NHTSA) launched an investigation into the first reported fatality involving the Tesla Model S Autopilot feature, the Internet filled with doomsayers heralding our extinction at the hands of “self-driving cars.” Make no mistake: the accident did not involve a “self-driving” car killing its driver, but rather a human behind the wheel of a semi-autonomous vehicle.

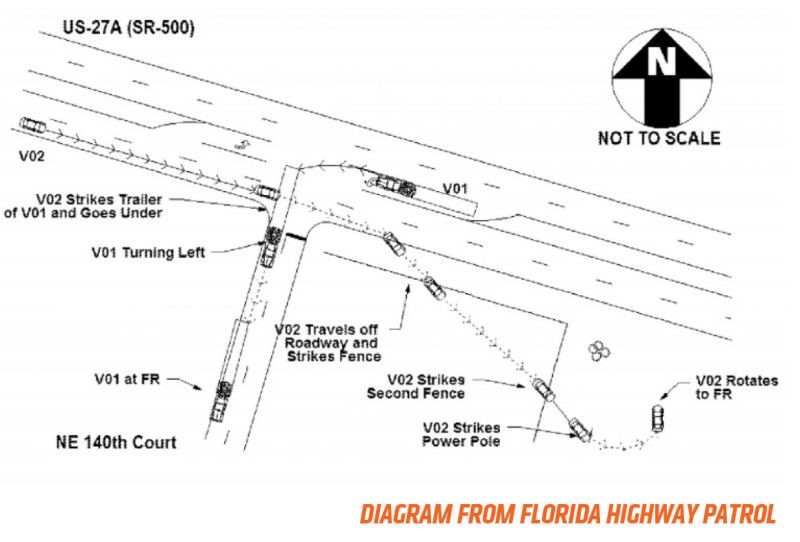

The Tesla driver, identified as Joshua D. Brown of Canton, Ohio, was a former Navy SEAL turned tech entrepreneur who recorded many videos documenting his Tesla’s Autopilot experience. He was killed on May 7 when his Tesla slid beneath an 18-wheeler that was turning at a highway intersection with no stoplight.

At this moment it’s unclear whether the accident stemmed from Brown overriding the Tesla’s Autopilot feature or whether the vehicle itself was at fault. All that’s known is that Brown didn’t notice the white trailer of the tractor-trailer and crashed into it.

Tesla’s Autopilot feature is not autonomous in the same way that the Google Car is self-driving. Rather, it aims to supplement drivers’ existing capabilities, using sensors and cameras to automatically steer and adjust speed on the highway. Tesla makes no pretense that the feature is fully functional, emphasizing that it remains in a beta state.

Using the feature requires that drivers “maintain control and responsibility” for their vehicle by consenting to a number of rules. The mode can only be activated and maintained so long as the vehicle detects the driver’s hands on the steering wheel. If grip is not detected, the car provides a series of visual and audio cues and then begins to slow gradually until the driver’s hands are returned to the wheel. In that sense, Tesla encourages the driver to remain alert at all times, ready to take control should the need arise.
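The hands-on-wheel rule described above can be pictured as a simple escalation timer. The sketch below is purely illustrative and is not Tesla’s implementation: the stage names, the ordering of visual before audio cues, and the threshold values are all assumptions made for the example.

```python
from enum import Enum


class Alert(Enum):
    NONE = "none"
    VISUAL = "visual"
    AUDIO = "audio"
    SLOWING = "slowing"


# Hypothetical thresholds (seconds without detected grip); not Tesla's values.
VISUAL_AFTER_S = 5.0
AUDIO_AFTER_S = 10.0
SLOW_AFTER_S = 15.0


def alert_level(seconds_without_grip: float) -> Alert:
    """Map the time since grip was last detected to an escalation stage."""
    if seconds_without_grip >= SLOW_AFTER_S:
        return Alert.SLOWING
    if seconds_without_grip >= AUDIO_AFTER_S:
        return Alert.AUDIO
    if seconds_without_grip >= VISUAL_AFTER_S:
        return Alert.VISUAL
    return Alert.NONE


def simulate(grip_samples, dt_s=1.0):
    """Return the alert stage after each grip sample.

    Each sample is True when hands are detected on the wheel.
    Detecting grip resets the timer, which clears any active alert.
    """
    elapsed = 0.0
    stages = []
    for hands_on in grip_samples:
        elapsed = 0.0 if hands_on else elapsed + dt_s
        stages.append(alert_level(elapsed))
    return stages
```

Calling `simulate([True] + [False] * 15 + [True])` walks through every stage in order and then returns to `Alert.NONE` once the driver’s hands are back on the wheel, which mirrors the behavior the article describes.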

Unlike its competitors in the space, Tesla crowdsources data feeds directly from its vehicles to obtain real-world performance information, then uses machine learning to improve the software and pushes the patches back to the vehicles’ computers. The same feeds also give it access to accident reports, as in the case of Joshua Brown’s accident. A few weeks before the NHTSA investigation was made public, Tesla provided the Department of Transportation with million miles worth of Autopilot data to assist with the investigation amid impending autonomous-vehicle regulation.

In the coming months, NHTSA Administrator Mark Rosekind is expected to release guidelines defining the role of the federal government versus the states in regulating self-driving technology. Foreseeing the arrival of semi-autonomous cars in the next five years and fully autonomous cars in the next 10 to 20 years, the agency hopes to avoid any bureaucratic tangle of oversight that might hinder the technology’s deployment.

Tesla announced that Joshua Brown’s death was the first reported death in over 130 million miles of Autopilot operation. According to the company, “when used in conjunction with driver oversight, the data is unequivocal that Autopilot reduces driver workload and results in a statistically significant improvement in safety.” Considering that driver error is responsible for 94 percent of crashes, this is a huge step forward.
