Last year, when Google unveiled Project Soli, the company presented the idea as a way to create gesture controls for future technology. Soli’s miniature radars are small enough to fit into a smartwatch and can detect movements with sub-millimeter accuracy, letting you control the volume of a speaker by twiddling an imaginary dial, for example. Now, a team of researchers from the University of St. Andrews in Scotland has used one of the first Project Soli developer kits to teach Google’s technology how to recognize objects using radar.

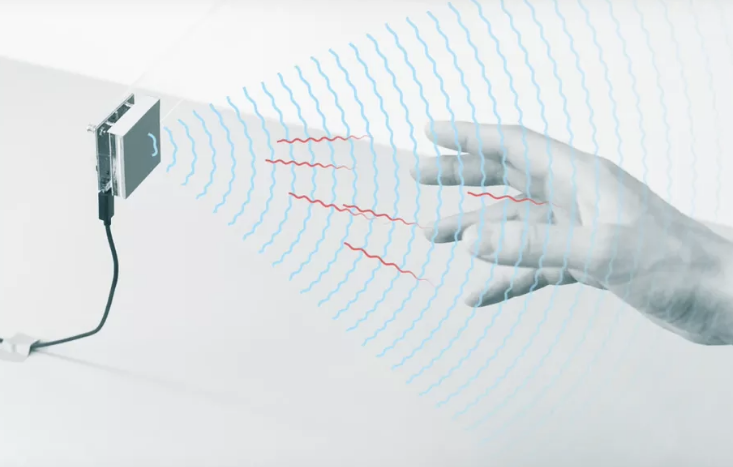

Dubbed RadarCat (short for Radar Categorization for Input and Interaction), the device works like a traditional radar system. A base unit fires electromagnetic waves at a target, some of which bounce off and return. By timing how long it takes for the waves to come back, the system works out the shape of the object and how far away it is. Because Google’s Soli radars are so precise, they can also pick up reflections from an object’s internal structure and its rear surface.
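The timing step can be illustrated with the textbook radar range relation: the wave travels out and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is only that general relation. The function names are made up for illustration, and nothing here describes how Soli or RadarCat actually process their signals.

```python
# Illustrative sketch of basic radar ranging (not Soli's actual processing).
# Assumption: the wave travels at the speed of light in a vacuum.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance to the target in meters.

    The wave covers the distance twice (out and back), hence the division by 2.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An echo that returns after 2 nanoseconds comes from roughly 30 cm away.
    print(f"{range_from_round_trip(2e-9):.3f} m")
```

The numbers show why precision matters at this scale: an object a few centimeters from the sensor returns its echo in a fraction of a nanosecond.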

Together, these signals act as a unique fingerprint for each object. RadarCat is accurate enough to tell the front of a smartphone from the back, or a full glass from an empty one.

Although the system is accurate, it has limitations. For example, RadarCat occasionally confuses objects with similar material properties, such as the aluminum case of a MacBook and an aluminum weighing scale. It works best on solid objects with flat surfaces and takes longer to get a clear signal from objects that are hollow or oddly shaped. The system must also be taught what an object looks like before it can recognize it. This may seem troublesome, but according to the researchers, it isn’t as big an issue as it appears because each object only has to be introduced once.
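The teach-once, recognize-later idea can be sketched with a nearest-centroid classifier, a deliberately simple stand-in chosen for illustration. The article does not say which learning method the researchers use, and the class name, labels, and signal values below are all hypothetical.

```python
# Illustrative sketch only: a nearest-centroid classifier standing in for
# whatever method RadarCat really uses. All signal values are made up.
import math


class FingerprintClassifier:
    """Stores an average signal per object and matches new readings to it."""

    def __init__(self) -> None:
        self._sums: dict[str, list[float]] = {}
        self._counts: dict[str, int] = {}
        self._length: int | None = None

    def teach(self, label: str, signal: list[float]) -> None:
        """Add one example reading for an object (the 'introduce once' step)."""
        if self._length is None:
            self._length = len(signal)
        if len(signal) != self._length:
            raise ValueError("all signals must have the same length")
        sums = self._sums.setdefault(label, [0.0] * self._length)
        self._sums[label] = [s + x for s, x in zip(sums, signal)]
        self._counts[label] = self._counts.get(label, 0) + 1

    def recognize(self, signal: list[float]) -> str:
        """Return the label whose average signal is closest to this reading."""
        if not self._sums:
            raise RuntimeError("no objects have been taught yet")
        if len(signal) != self._length:
            raise ValueError("signal length does not match taught signals")
        best_label, best_distance = "", math.inf
        for label, sums in self._sums.items():
            centroid = [s / self._counts[label] for s in sums]
            distance = math.dist(centroid, signal)  # Euclidean distance
            if distance < best_distance:
                best_label, best_distance = label, distance
        return best_label


if __name__ == "__main__":
    classifier = FingerprintClassifier()
    classifier.teach("empty glass", [0.9, 0.2, 0.1])
    classifier.teach("empty glass", [0.8, 0.3, 0.1])
    classifier.teach("full glass", [0.4, 0.7, 0.6])
    print(classifier.recognize([0.85, 0.25, 0.12]))
```

A scheme like this also hints at the limitation described above: two objects made of similar material would produce averages that sit close together, so a noisy reading could land nearer the wrong one.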

So what’s the whole point of RadarCat, anyway? One of the most obvious applications of this research is to create a dictionary of things. Visually impaired individuals could use it to identify objects that feel similar in shape or size, and it could even deliver more detailed information, such as identifying a phone model and quickly bringing up a list of specs and a user manual.

If RadarCat’s abilities were built into electronics, users could trigger specific functions based on context. If you hold your RadarCat-enabled phone in a gloved hand, for example, it could switch to a simpler interface with large icons.

Compared to the Internet of Things (IoT), RadarCat’s approach has the advantage of being unobtrusive. You wouldn’t need to attach extra information to an object for it to be recognized, and you wouldn’t have to give it a Wi-Fi connection.

According to RadarCat’s creators, next steps include improving the system’s ability to distinguish between similar objects. They suggest it could be used not only to say whether a glass is full or empty but also to classify its contents. If this technology goes mainstream, it could be used in military systems to detect ships and airplanes, or in consumer devices that tell you exactly what you’re about to eat or drink.

Source: The Verge
