By Brian Santo, contributing writer

Scientists at IBM Research and ETH, both in Zurich, have been working together on a combined power-delivery and cooling mechanism that, if they can successfully commercialize it, would yield a leap in data center efficiency. Recent refinements bring the approach closer to fruition. The technology is based on redox flow cells (RFCs).

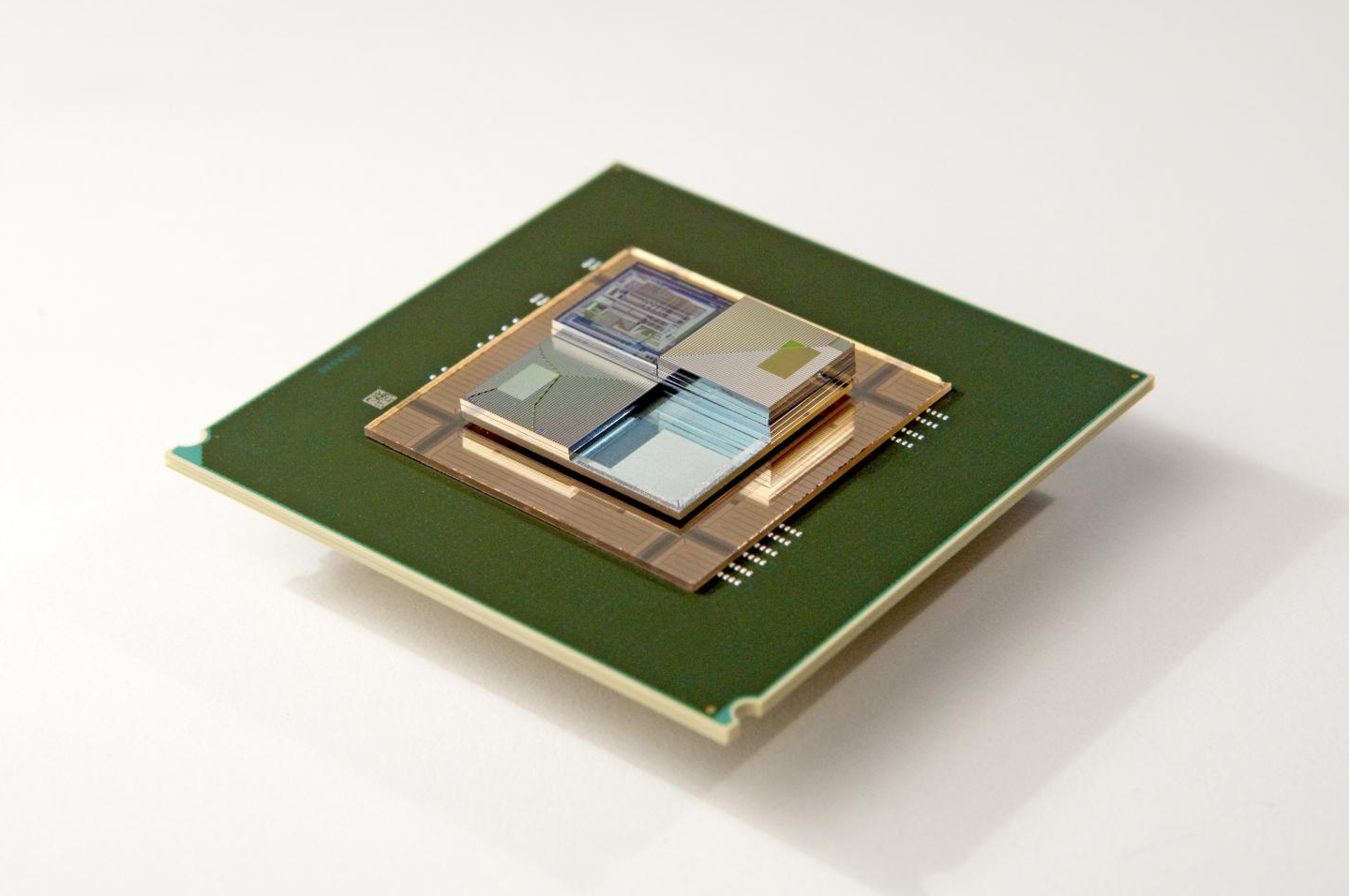

IBM and ETH have devised a way to build small tubes, or channels, into computer chips and pump fluids through them that serve two purposes simultaneously: these fluids are the conduit (figuratively and literally) for electrical energy while also drawing off waste heat.

Image Credit: IBM Research Zurich

Conceptually, RFC technology is deliciously simple, yet it’s proven devilishly difficult to implement. The two labs have been working on RFCs since at least 2011, when they published their first paper speculating on how they could use the technology to increase the efficiency of computing systems.

Computer chips draw power and dissipate heat. The problem of dealing with that excess heat is familiar to anyone who has ever used a laptop and felt their thighs cooking — bad for you; worse for the computer. If the heat isn’t drawn off, the performance of the chips is compromised. Heat stress can also cut into the life of both ICs and their packaging. That’s why PCs have fans.

“Today, air-cooled microprocessors use more packaging pins for power delivery than for communication, and their cooler volume is about a million times greater than that of their active elements,” IBM researcher Patrick Ruch wrote in a recent blog post. Ruch has been a consistent contributor to a series of papers on RFCs co-authored by IBM and ETH scientists.

Now aggregate a lot of chips, and the problem scales up proportionally. That’s why data centers drink deeply from cool rivers or are built to maximize airflow.

Several trends are conspiring to make computing in general, and the data center business in particular, harder. Internet traffic is increasing, so data centers need to get bigger and denser. Data centers, by and large, are the “cloud” in cloud computing; as demand for cloud computing grows, the need for bigger, denser data centers becomes all the more acute.

Meanwhile, silicon-based circuitry is nearing its physical limits on speed. It may be possible to use other materials, but down that path lie challenges that are technical, economic, or both. IBM and many other companies are pursuing that path, too. But it would be easier and cheaper to stick with silicon as long as possible.

Speed, don’t forget, is a rate: the distance covered in a given amount of time. Signals passing between processors have to travel, so in situations where a very, very large number of processors are employed, it would be possible to eke out a significant margin of additional performance simply by cramming them physically closer to each other, stacking them several deep. Data centers would get their boost in both speed and density.

…If only the processors wouldn’t figuratively melt each other into slag. Neither air cooling nor water cooling would be able to draw off heat efficiently enough to prevent that.
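The payoff from packing processors closer together comes from the time signals spend in transit. The short Python sketch below makes that arithmetic concrete. It assumes, hypothetically, that signals travel at about half the speed of light, a common rule of thumb for copper interconnects; the 30 cm and 1 mm distances are illustrative choices, not figures from IBM or ETH.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# Assumption: signals propagate at roughly half the speed of light.
# Real values depend on the interconnect material and geometry.
PROPAGATION_FRACTION = 0.5


def propagation_delay_ns(distance_m: float,
                         fraction: float = PROPAGATION_FRACTION) -> float:
    """Return the time, in nanoseconds, for a signal to cross distance_m
    while traveling at fraction * c."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S * fraction
    return distance_m / speed_m_per_s * 1e9


# Hypothetical comparison: a 30 cm board-to-board link versus a
# 1 mm hop between dies stacked on top of each other.
for label, distance_m in [("30 cm link", 0.30), ("1 mm stacked hop", 0.001)]:
    print(f"{label}: {propagation_delay_ns(distance_m):.4f} ns")
```

Under these assumptions the 30 cm link costs about 2 ns per crossing, several clock cycles for a multi-gigahertz processor, while the stacked hop costs a tiny fraction of that. The delay scales linearly with distance, which is why stacking helps.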

In 2011, IBM Research and ETH’s Laboratory of Thermodynamics in Emerging Technologies, part of the Department of Mechanical and Process Engineering, speculated that one option might be to build microchannels directly into silicon chips through which coolant could be delivered. The two labs have been making gradual progress ever since.

Simply finding materials that would flow properly was a challenge at first, but the researchers eventually identified a pair of electrolytes that, used together, could not only draw heat away but also deliver power. That combination of power delivery and heat dissipation could lead to extraordinary leaps in both speed and density in data centers.

At the end of January, IBM and ETH reported new advances in their technology. They are now manufacturing RFCs using 3D-printing processes. If they can’t make these structures cheaply enough, there’s no point in trying, so that’s an important step. They have also increased power density to 1.4 W/cm², which should be adequate for powering microprocessors, lasers (also used in vast numbers in data center fiber optics) and LEDs.
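To put the 1.4 W/cm² figure in perspective, the power a cell can supply is its power density multiplied by its area. The Python sketch below does that multiplication for two hypothetical cell footprints. It assumes the reported density is normalized to the cell’s active area and scales linearly with it; neither assumption comes from the article, and real cells may not scale that cleanly.

```python
# Power density reported by IBM and ETH for their redox flow cells.
REPORTED_DENSITY_W_PER_CM2 = 1.4


def deliverable_power_w(area_cm2: float,
                        density_w_per_cm2: float = REPORTED_DENSITY_W_PER_CM2) -> float:
    """Return the power in watts from a cell of the given active area,
    assuming (hypothetically) that power scales linearly with area."""
    if area_cm2 < 0:
        raise ValueError("area must be non-negative")
    return area_cm2 * density_w_per_cm2


# Hypothetical footprints: a 1 cm x 1 cm cell and a 2 cm x 2 cm cell.
for area_cm2 in (1.0, 4.0):
    print(f"{area_cm2:.1f} cm^2 -> {deliverable_power_w(area_cm2):.1f} W")
```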

The approach, the researchers say, would eliminate the traditional power-delivery network, including all those extra packaging pins dedicated solely to power delivery that Ruch mentioned in the quote above. Simplifying processor packaging might sound inconsequential, but the exact opposite is true: it would be a tremendous side benefit.

In the abstract of their most recent paper, IBM and ETH researchers conclude “that rational tailoring of fluidic networks in RFCs is key for the development of devices effectively combining power delivery and thermal management.”

IBM positions RFCs as just one component of its green-computing strategy. The information and communications technology (ICT) industry now consumes 2% of the world’s energy, IBM researcher Bruno Michel writes in another blog post. Michel is another frequent co-author on the RFC papers.

“In the future, the desirability of energy savings will become the driving factor to adopting digital processes,” Michel wrote. “This trend will be challenged, however, as the energy consumed by data center infrastructures supporting these digital processes soars to unacceptable levels. Future overall energy efficiency gains from digital processes, therefore, must rely on improved ICT efficiency and energy management in cloud data centers, computer terminals, and mobile devices, as well as greater effectiveness of the total software stack and computing applications. Important steps in this direction have been taken by our zero-emission data center efforts.”

Learn more about Electronic Products Magazine