BY BOB CANTRELL

Senior Application Engineer

Ericsson Power Modules

www.ericsson.com/powermodules

Energy consumed by data centers around the world has increased dramatically over the past decade or so, growing from almost nothing to more than 2% of the global electricity supply. Data centers also account for approximately 2% of worldwide greenhouse gas emissions. Current predictions suggest that data center energy consumption will triple over the next decade, rivaling and even overtaking the electricity consumed by entire countries with moderately large economies.

Cooling concerns

One major contributor to energy consumption is cooling, which is delivered to the equipment by massive air-conditioning units and systems. There are two ongoing approaches to reducing consumption in this area. The first, which many major data center providers are pursuing, is to raise the maximum ambient temperature for servers and other equipment marginally above the typically accepted limits, but not far enough to introduce significant reliability issues. The second is to take advantage of climates with significantly lower average temperatures by locating data centers in cooler parts of the world, such as the Scandinavian/Nordic countries; major companies such as Facebook, for example, are already establishing facilities in Denmark and Sweden. In addition, these countries, among others, are increasingly turning to renewable energy sources, including hydroelectric power, to supply low-carbon electricity. Of course, both approaches can be combined to reduce energy consumption further.

Power architecture today

The second major contributor to data center energy consumption is, of course, the power consumed by servers, mass storage systems, and data communications and networking equipment. Here, the industry is seeking new and innovative power techniques to reduce energy consumption. Historically, going back at least to the 1980s, telecoms and datacoms applications have been dominated by the distributed power architecture, in which the AC line voltage is rectified, typically to 48 Vdc, and distributed to server cabinets and then to individual onboard, tightly regulated point-of-load (PoL) converters.

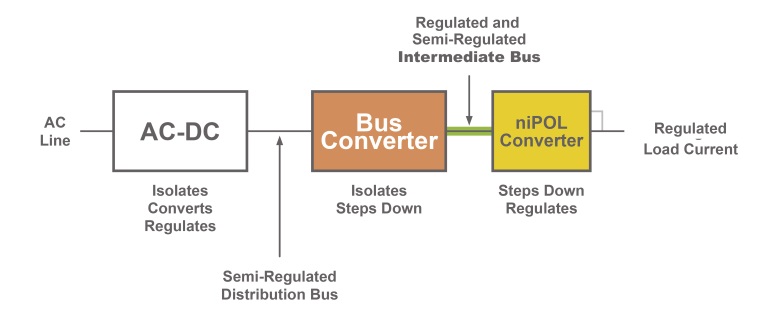

Over the past decade or so, however, this architecture has evolved into the intermediate bus architecture (IBA), in which intermediate bus converters (IBCs) step the 48-V bus down to an intermediate level, typically 12 V. The intermediate bus was introduced primarily to save the cost and bulk of multiple isolated DC/DC converters. Downstream of the IBCs, onboard non-isolated PoL converters step the 12-Vdc intermediate bus down further to the voltages required by board-level components such as processors, ASICs, FPGAs, memory ICs, and other logic devices. These voltages are often below 1 V, as needed to power processor or FPGA core logic.

Using two down-conversion stages strikes an optimal balance between the intermediate bus voltage feeding the PoL converters and the load currents the PoLs supply, which is important for maximizing power-conversion efficiency at the system level. The 12-Vdc level was chosen because it is high enough to deliver all of the power the load, or board, requires during periods of heavy network traffic, while also keeping distribution losses low. However, the approach can result in low efficiency, especially when traffic demand is light. An overview of the intermediate bus architecture is shown in Fig. 1.

Fig. 1: Voltage conversion stages in typical data center or datacoms facility.

Overall, the efficiency of this entire chain from the AC line is approximately 85%, which means that up to 15% of the power entering the system is dissipated as heat by the various power devices. This heat must be removed to maintain high system reliability, or at least to keep temperatures within well-defined acceptable limits.
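As a quick worked check using only the 85% figure above, the heat produced by the conversion chain can be expressed relative to the power that actually reaches the loads, since the output power is the input power times the efficiency:

```latex
P_{\text{heat}} = P_{\text{in}}\,(1-\eta), \qquad
\frac{P_{\text{heat}}}{P_{\text{out}}} = \frac{1-\eta}{\eta}
  = \frac{1-0.85}{0.85} \approx 0.18
```

In other words, for roughly every 100 W delivered to the boards, about 18 W of heat must also be removed by the cooling system.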

Trends

Several ongoing trends in the industry seek to maximize efficiency, reduce power consumption, and minimize costs. They include the move toward advanced software-based architectures, such as software-defined networking (SDN) and network function virtualization (NFV); the increasing use of digital power, together with its extension, the emerging software-defined power architecture; and the re-emergence of direct-conversion technology, which has been around for 35 years or so, to down-convert the traditional 48-Vdc bus directly to the board-level voltages required by processors and other logic ICs.

Addressing the first of these: SDN is essentially about separating the control plane from the data plane, or the software from the hardware. Application software no longer needs to run on dedicated networking hardware; it can instead run on, for example, servers in a data center. NFV, in turn, offers significant economies of scale and standardization, because multiple functions or applications can be consolidated on “virtual machines” running on commercially available hardware. Virtualization can certainly help reduce energy consumption, but software control at the board level is also critical.

Digital power

The second trend is digital power. Traditional analog switched-mode power converters generally reach peak efficiency just below maximum load. Efficiency is only slightly lower at maximum load, but it is significantly lower at half-load or during periods of low demand. This is largely because analog designs use fixed-value capacitors, chosen to keep the supply stable over a wide range of operating conditions. The downside is a lack of flexibility and the inability to deliver high, uniform efficiency across the full range of loads. Digital power can overcome this limitation: the digital control loop can be changed and optimized easily and quickly through digital monitoring and control. In addition, digital power converters require fewer external components, which improves reliability and saves board space.
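The shape of the efficiency curve described above can be illustrated with a generic first-order loss model. The model is an assumption for illustration only, not taken from the article or from any Ericsson product: it lumps losses into a fixed, load-independent term and an I²R conduction term, and every parameter value is hypothetical. With these values, efficiency peaks just below full load and falls away as the load drops.

```python
# Hypothetical loss-model parameters for a PoL converter (illustration only).
V_OUT = 1.0     # output voltage, V
I_MAX = 40.0    # rated output current, A
P_FIXED = 1.2   # load-independent losses, W
R_EFF = 0.001   # effective resistance giving I^2*R conduction loss, ohms


def efficiency(i_out: float) -> float:
    """Efficiency under the assumed model: P_out / (P_out + P_FIXED + R_EFF * I^2)."""
    p_out = V_OUT * i_out
    p_loss = P_FIXED + R_EFF * i_out ** 2
    return p_out / (p_out + p_loss)


# Setting d(efficiency)/dI = 0 gives P_FIXED = R_EFF * I^2, so the peak is here.
I_PEAK = (P_FIXED / R_EFF) ** 0.5

if __name__ == "__main__":
    for fraction in (0.1, 0.25, 0.5, 0.75, 1.0):
        print(f"{fraction:5.0%} load: {efficiency(fraction * I_MAX):.1%}")
    print(f"peak at {I_PEAK:.1f} A ({I_PEAK / I_MAX:.0%} of rated current)")
```

With these numbers the peak falls at about 35 A, roughly 87% of the hypothetical 40-A rating, and efficiency drops steeply at 10% load, where the fixed losses dominate.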

Early adopters of digital power, including those setting up data center facilities, have seen the many advantages of its greater flexibility. For example, the simulation capability of the Ericsson Power Designer software can quickly determine the optimal number of capacitors on each rail to ensure stability over a wide range of transients, which saves considerable design time and can significantly shorten time-to-market. Tools are also available to apply tracking and sequencing to the multiple power rails that supply a given piece of silicon. In addition, output voltages can be changed on the fly, and voltages, currents, and temperatures can be monitored.
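Rail sequencing of the kind these tools configure can be sketched as a small timing calculation. The example is hypothetical: the rail names, the delay and rise-time values, and the simple "start after another rail is in regulation" rule are assumptions, loosely modeled on the turn-on delay and rise-time parameters that PMBus devices expose (TON_DELAY, TON_RISE). It computes when each rail starts ramping and when it reaches regulation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rail:
    name: str
    ton_delay_ms: float          # wait after the trigger before ramping (hypothetical)
    ton_rise_ms: float           # ramp time from 0 V to regulation (hypothetical)
    after: Optional[str] = None  # rail that must be in regulation first, if any


def sequence(rails: list[Rail]) -> dict[str, tuple[float, float]]:
    """Return (ramp_start_ms, in_regulation_ms) for each rail."""
    by_name = {rail.name: rail for rail in rails}
    times: dict[str, tuple[float, float]] = {}

    def resolve(name: str, visiting: frozenset = frozenset()) -> tuple[float, float]:
        if name in times:
            return times[name]
        if name in visiting:
            raise ValueError(f"circular sequencing dependency at {name!r}")
        rail = by_name[name]
        # A rail with no predecessor is triggered at t = 0; otherwise it is
        # triggered when its predecessor reaches regulation.
        trigger = 0.0 if rail.after is None else resolve(rail.after, visiting | {name})[1]
        start = trigger + rail.ton_delay_ms
        times[name] = (start, start + rail.ton_rise_ms)
        return times[name]

    for rail in rails:
        resolve(rail.name)
    return times


if __name__ == "__main__":
    # Hypothetical rails for one processor: core first, then I/O, then memory.
    rails = [
        Rail("VCORE", ton_delay_ms=0.0, ton_rise_ms=2.0),
        Rail("VIO", ton_delay_ms=1.0, ton_rise_ms=3.0, after="VCORE"),
        Rail("VDDR", ton_delay_ms=0.5, ton_rise_ms=1.0, after="VIO"),
    ]
    for name, (start, ready) in sequence(rails).items():
        print(f"{name}: ramp starts {start:.1f} ms, in regulation at {ready:.1f} ms")
```

Tracking, where rails ramp together with a fixed relationship, would need a different model; the sketch covers ordered sequencing only.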

In digital-power-based systems, communication with the IBCs and PoL converters is carried out over PMBus, an industry standard derived from SMBus that defines both the physical connection and the data exchange protocol between a central controller and the power modules.
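PMBus telemetry and set points are commonly exchanged as 16-bit words in the standard's LINEAR11 and LINEAR16 data formats. The sketch below decodes and encodes those formats. It covers only the numeric conversion, assumes the device reports linear mode in VOUT_MODE (only the low 5 exponent bits are read), and leaves out the SMBus transaction itself (addressing, read/write word, PEC).

```python
def twos_complement(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of `value` as a signed two's-complement number."""
    value &= (1 << bits) - 1
    if value & (1 << (bits - 1)):
        value -= 1 << bits
    return value


def decode_linear11(word: int) -> float:
    """LINEAR11: bits 15:11 = signed exponent N, bits 10:0 = signed mantissa Y; value = Y * 2**N."""
    exponent = twos_complement(word >> 11, 5)
    mantissa = twos_complement(word, 11)
    return mantissa * 2.0 ** exponent


def vout_exponent(vout_mode: int) -> int:
    """Signed exponent N from the low 5 bits of VOUT_MODE (assumes linear mode)."""
    return twos_complement(vout_mode, 5)


def decode_linear16(word: int, vout_mode: int) -> float:
    """LINEAR16 output voltage: unsigned 16-bit mantissa V; value = V * 2**N."""
    return (word & 0xFFFF) * 2.0 ** vout_exponent(vout_mode)


def encode_linear16(volts: float, vout_mode: int) -> int:
    """Nearest LINEAR16 word for a requested output voltage (e.g. for VOUT_COMMAND)."""
    word = round(volts / 2.0 ** vout_exponent(vout_mode))
    if not 0 <= word <= 0xFFFF:
        raise ValueError("voltage out of range for this VOUT_MODE exponent")
    return word


if __name__ == "__main__":
    print(decode_linear11(0xE864))         # exponent -3, mantissa 100
    print(decode_linear16(0x0200, 0x17))   # VOUT_MODE exponent -9
    print(hex(encode_linear16(0.85, 0x17)))
```

For example, a READ_VOUT (0x8B) word is decoded with `decode_linear16` using the exponent from VOUT_MODE (0x20), while READ_IOUT (0x8C) and READ_TEMPERATURE_1 (0x8D) are typically returned in LINEAR11. Devices may also use other formats, such as DIRECT, so the module data sheet remains the authority.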

Software-defined power

An important next evolutionary step extends the digital power trend further: the software-defined power architecture (SDPA). It adds real-time adaptability to digital power supplies and has the potential to bring truly energy-efficient, power-optimized board-level capabilities to advanced network applications. In an SDPA, advanced processors can use software commands to raise output voltages to increase processor performance, or to lower them at times of low load demand.
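Why lowering the voltage at light load pays off can be seen from the standard first-order expression for CMOS dynamic power, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. This relation is general background rather than something stated here by the author, and the 1.0-V and 0.9-V values are illustrative:

```latex
P_{\text{dyn}} = \alpha\, C\, V^{2} f
\quad\Rightarrow\quad
\frac{P_{\text{dyn}}(0.9\ \text{V})}{P_{\text{dyn}}(1.0\ \text{V})}
  = \left(\frac{0.9}{1.0}\right)^{2} = 0.81
```

At fixed α, C, and f, a 10% reduction in supply voltage cuts dynamic power by about 19%; lowering the clock frequency at the same time reduces it further.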

The SDPA is essentially a scheme that implements many advanced techniques, including dynamic bus voltage (DBV) and adaptive voltage scaling (AVS), as well as others such as fragmented power distribution and phase spreading. An evolution of the IBA, DBV dynamically adjusts the intermediate bus voltage produced by the IBC as a function of load current to achieve higher power-conversion efficiency. AVS is a technique for optimizing supply voltages and minimizing energy consumption in modern high-performance microprocessors; it uses a real-time closed-loop approach to adjust the output voltage to optimize the performance of each individual processor.
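The DBV idea can be sketched as picking, for each load level, the intermediate bus voltage that minimizes a loss estimate. Everything in this example is an assumption for illustration: the candidate set points, the coefficients, and the two-term loss model, in which one term grows with bus voltage (a stand-in for downstream converter switching losses) and an I²R distribution term shrinks as the bus voltage rises and the current falls. Load is expressed here as power rather than current for simplicity. A real implementation would need loss data for the specific IBC and PoL converters involved.

```python
# All values below are hypothetical, chosen only to illustrate the trade-off.
CANDIDATE_BUS_V = (8.0, 9.0, 10.0, 11.0, 12.0)  # selectable IBC output set points, V
K_SW = 0.05     # loss that grows with bus voltage, W per V (stand-in for switching loss)
R_DIST = 0.002  # intermediate bus distribution resistance, ohms


def bus_loss(v_bus: float, p_load: float) -> float:
    """Estimated loss in W at a given bus voltage and load power (assumed model)."""
    i_bus = p_load / v_bus
    return K_SW * v_bus + R_DIST * i_bus ** 2


def choose_bus_voltage(p_load: float) -> float:
    """DBV-style selection: the candidate set point with the lowest estimated loss."""
    return min(CANDIDATE_BUS_V, key=lambda v: bus_loss(v, p_load))


if __name__ == "__main__":
    for p_load in (30.0, 100.0, 200.0, 400.0):
        v = choose_bus_voltage(p_load)
        print(f"{p_load:5.0f} W load -> {v:4.1f} V bus, est. loss {bus_loss(v, p_load):.2f} W")
```

With these numbers, a light 30-W load selects the lowest 8-V set point and a heavy 400-W load selects 12 V, matching the intent of lowering the bus voltage when demand is low.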

Direct conversion

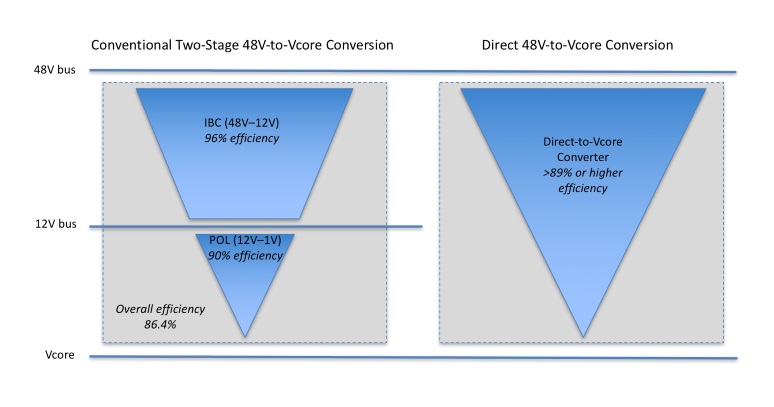

The third trend, direct conversion, is likely to offer significant advantages to many data center operators. The principle is to bypass the IBC and PoL converter stages and instead deploy a new, highly efficient type of power module that converts the 48-V bus voltage typically used in datacoms applications directly down to PoL board-level voltages, which can be below 1 V, in a single power stage (see Fig. 2).

Fig. 2: Conventional two-stage IBC + PoL converter versus a direct-conversion solution.

The latest IBCs typically offer efficiencies of 95–96%, and PoL converters can typically exceed 90%. Because the losses of the two stages compound, however, overall efficiency can fall to approximately 86.4% (96% × 90%), whereas a single direct converter can deliver 89% or more for the same load.
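The 86.4% figure follows from multiplying the stage efficiencies, because each stage's losses apply to the power delivered by the stage before it. The sketch below uses the efficiencies quoted above; the 100-W output load is a hypothetical value chosen only to express the difference in watts of loss.

```python
from math import prod


def cascaded_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of converters in series: the product of the stage efficiencies."""
    return prod(stage_efficiencies)


def loss_watts(p_out: float, efficiency: float) -> float:
    """Power dissipated in the conversion chain for a given output power."""
    return p_out / efficiency - p_out


if __name__ == "__main__":
    P_OUT = 100.0  # hypothetical load power, W
    options = {
        "IBC (96%) + PoL (90%)": cascaded_efficiency([0.96, 0.90]),
        "Direct conversion (89%)": 0.89,
    }
    for label, eta in options.items():
        print(f"{label}: overall {eta:.1%}, loss {loss_watts(P_OUT, eta):.1f} W")
```

At 100 W delivered, the two-stage chain dissipates about 15.7 W versus about 12.4 W for the 89% single-stage converter.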

As mentioned previously, direct conversion is not a new concept, but the shrinking size and competitive pricing of direct-conversion DC/DC modules are bringing the architecture back to the forefront. The most significant reason for the shift is simpler current distribution: the power requirements of modern data centers keep increasing, and I²R distribution losses must be reduced. Distributing 12 V at the high currents drawn by some of today's leading-edge data center server cabinets is prohibitive and impractical. Direct-conversion architectures, however, can distribute power at 48 V rather than 12 V. The bus supplying the converter then carries just 25% of the current required to deliver the same power at 12 V, and because I²R loss scales with the square of the current, the loss in a given conductor falls to one-sixteenth. The lower current also means less copper in bus bars and cables.
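The current and I²R comparison between a 12-V and a 48-V bus can be made concrete with a short calculation. The 1-kW load and 1-mΩ distribution-path resistance are hypothetical; only the ratios between the two buses carry over to other values.

```python
def distribution(p_load: float, v_bus: float, r_path: float) -> tuple[float, float]:
    """Return (bus current in A, I^2*R loss in W) for a load fed over a resistive path."""
    current = p_load / v_bus
    return current, current ** 2 * r_path


if __name__ == "__main__":
    P_LOAD = 1000.0  # hypothetical board load, W
    R_PATH = 0.001   # hypothetical bus bar + cable resistance, ohms
    for v_bus in (12.0, 48.0):
        current, loss = distribution(P_LOAD, v_bus, R_PATH)
        print(f"{v_bus:4.0f} V bus: {current:6.1f} A, {loss:5.2f} W lost in distribution")
```

With these values the 12-V bus carries about 83.3 A and loses about 6.94 W, while the 48-V bus carries about 20.8 A and loses about 0.43 W.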

Conclusion

Energy consumption is a major issue for data center operators. New and advanced power architectures and techniques will be required to increase system efficiency and reduce both costs and carbon emissions. Alongside advanced cooling strategies to deal with heat dissipation, important technologies will include hardware virtualization, digital power and its SDPA extension, and, in many applications, direct-conversion architectures.
