By Jean-Jacques DeLisle, contributing writer

The efficiency and consistency of robots and robotic control have become enabling features of the world's most advanced economies. Robotic systems build everything from cars and toys to food packaging, and they are now even driving cars themselves. Despite the impressive reaction times and sensor systems that advanced robots now use, they have traditionally been unable to anticipate what comes next or to cope with new environments. Researchers at UC Berkeley are working to remove this limitation by augmenting robotic systems with predictive functions based on robotic learning technology.

The researchers at UC Berkeley have implemented a system called visual foresight. It mimics what humans do when we imagine what will happen before we take an action. The difference is that the robot predicts what its cameras will see if it carries out a given course of action.
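
The article does not describe the planner itself, so what follows is only a minimal sketch of the general idea, under loudly stated assumptions: the robot imagines the outcome of many candidate action sequences and picks the one whose predicted result lands closest to its goal. A hypothetical `predict_outcome` function stands in for the learned camera-prediction model and reduces the "imagined view" to a single object's (x, y) position. The names `plan` and `cost` and all parameter values are illustrative, and plain random sampling is a simple substitute for whatever optimizer the Berkeley system actually uses.

```python
import random

# HYPOTHETICAL stand-in for a learned video-prediction model: the "imagined"
# camera view is reduced to one object's (x, y) position, and each action is
# a small push (dx, dy) that moves the object directly.
def predict_outcome(position, actions):
    x, y = position
    for dx, dy in actions:
        x, y = x + dx, y + dy
    return (x, y)


def cost(predicted, goal):
    # Squared distance between the imagined object position and the goal.
    return (predicted[0] - goal[0]) ** 2 + (predicted[1] - goal[1]) ** 2


def plan(position, goal, horizon=3, num_samples=500, max_push=1.0, seed=0):
    """Random-shooting planner: imagine many candidate action sequences and
    keep the one whose predicted outcome lands closest to the goal."""
    rng = random.Random(seed)
    best_actions, best_cost = None, float("inf")
    for _ in range(num_samples):
        actions = [
            (rng.uniform(-max_push, max_push), rng.uniform(-max_push, max_push))
            for _ in range(horizon)
        ]
        c = cost(predict_outcome(position, actions), goal)
        if c < best_cost:
            best_actions, best_cost = actions, c
    return best_actions, best_cost


if __name__ == "__main__":
    actions, c = plan(position=(0.0, 0.0), goal=(2.0, -1.0))
    print("first action:", actions[0], "predicted cost:", round(c, 3))
```

In a real system the robot would typically execute only the first action, observe the new camera frame, and plan again, so errors in the imagined future do not accumulate.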

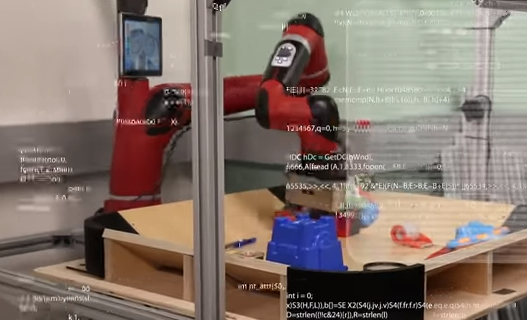

Similar to how children learn to manipulate objects and explore their surroundings, UC Berkeley researchers are exploring giving the same capabilities to robots. Image source: UC Berkeley.

“In the same way that we can imagine how our actions will move the objects in our environment, this method can enable a robot to visualize how different behaviors will affect the world around it,” said Sergey Levine, an assistant professor in Berkeley’s Department of Electrical Engineering and Computer Sciences, who heads a lab that developed the technology. “This can enable intelligent planning of highly flexible skills in complex real-world situations.”

This predictive exploration is very different from the rudimentary training systems used previously, which limited robots to the actions they had already been trained on. The technology also has broader implications: What happens if robots begin to consider scenarios outside their experience?

“In the past, robots have learned skills with a human supervisor helping and providing feedback,” said Chelsea Finn, a doctoral student in Levine’s lab. “What makes this work exciting is that the robots can learn a range of visual object manipulation skills entirely on their own.”

At the base of the system is a deep-learning technology called dynamic neural advection (DNA), which predicts how the pixels in an image will change from one frame to the next as the robot moves. Although these tools and models are advancing, the field is still new and has much untapped potential. The current visual foresight system is still somewhat simplistic and can predict only several seconds into the future. Even so, robots can use it to push objects on a table, slide toys around obstacles, and otherwise reposition multiple objects to reach desired goals.
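
To give a feel for what "advection" of pixels means, here is a minimal sketch, not the researchers' code. As we understand DNA-style models, a neural network predicts, for every pixel of the next frame, a small set of weights over nearby pixels in the previous frame, and the new pixel is the weighted sum of those neighbors. In this illustration the weights are hard-coded rather than learned, and the function name `advect` and the dictionary-of-offsets kernel format are hypothetical choices made for readability.

```python
def advect(frame, kernels):
    """Predict the next frame from the previous one.

    frame[i][j] is a pixel intensity. kernels[i][j] maps an offset (di, dj)
    to a weight: output pixel (i, j) is the weighted sum of previous-frame
    pixels at (i + di, j + dj). In a DNA-style model a network would predict
    these weights (normalized to sum to 1); here they are supplied by hand.
    """
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for (di, dj), weight in kernels[i][j].items():
                si, sj = i + di, j + dj
                if 0 <= si < h and 0 <= sj < w:  # pixels outside the image count as 0
                    total += weight * frame[si][sj]
            out[i][j] = total
    return out


# A single bright "object" pixel in a 3x4 image.
frame = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

# Every pixel copies its left neighbor, so the object moves one pixel right.
shift_right = [[{(0, -1): 1.0} for _ in range(4)] for _ in range(3)]
print(advect(frame, shift_right))
```

Because each output pixel is built from pixels the camera has already seen, a model of this kind only has to predict where content moves rather than redraw it from scratch, which is one reason such predictions stay sharp for short horizons.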

Learn more about Electronic Products Magazine