BY KURT SCHULER

VP of Marketing, Arteris IP

www.arteris.com

Advanced driver assistance systems (ADAS) and autonomous cars are driving an explosion of new silicon, and at the center of it all is the system-on-chip (SoC), a powerful semiconductor device that runs complex algorithms and includes many hardware accelerator “brains.” In fact, automotive SoCs, which many call marvels of advanced engineering, are the unsung heroes of the autonomous-car gold rush.

How can engineers efficiently design these superchips to perform billions of operations per second and identify and classify objects on the road in near-real time? What are the actual challenges in designing SoCs that process information from multiple sensors, such as radars and cameras, to carry out object recognition, distance estimation, and 3D mapping?

Here are three trends that are driving the design of these supercomputer-like processing devices, whether it’s ADAS today or autonomous driving tomorrow.

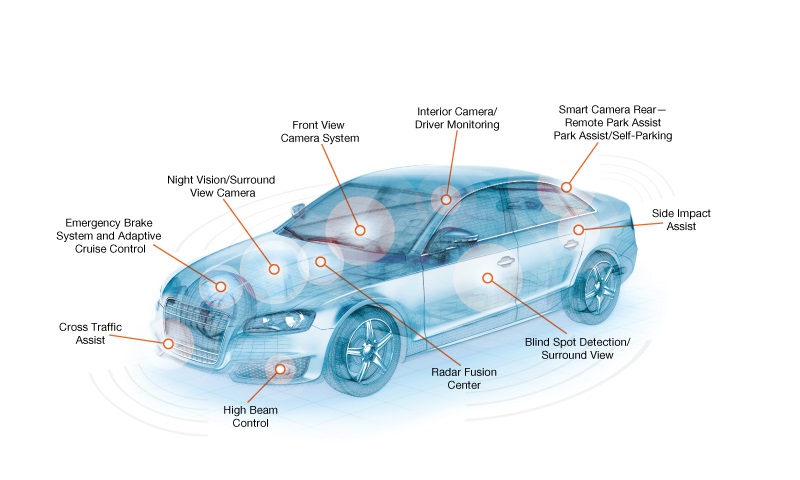

Fig. 1: An automotive SoC serves as the nerve center for ADAS applications. Image Source: Bain and Company.

1. Managing complexity

Automotive SoCs integrate ever more CPUs, DSPs, memories, clocks, and other blocks to carry out a diverse range of tasks such as vision processing, sensor fusion, and infotainment. They also have to respond to changing conditions in the physical world in near-real time, which adds further to design complexity.

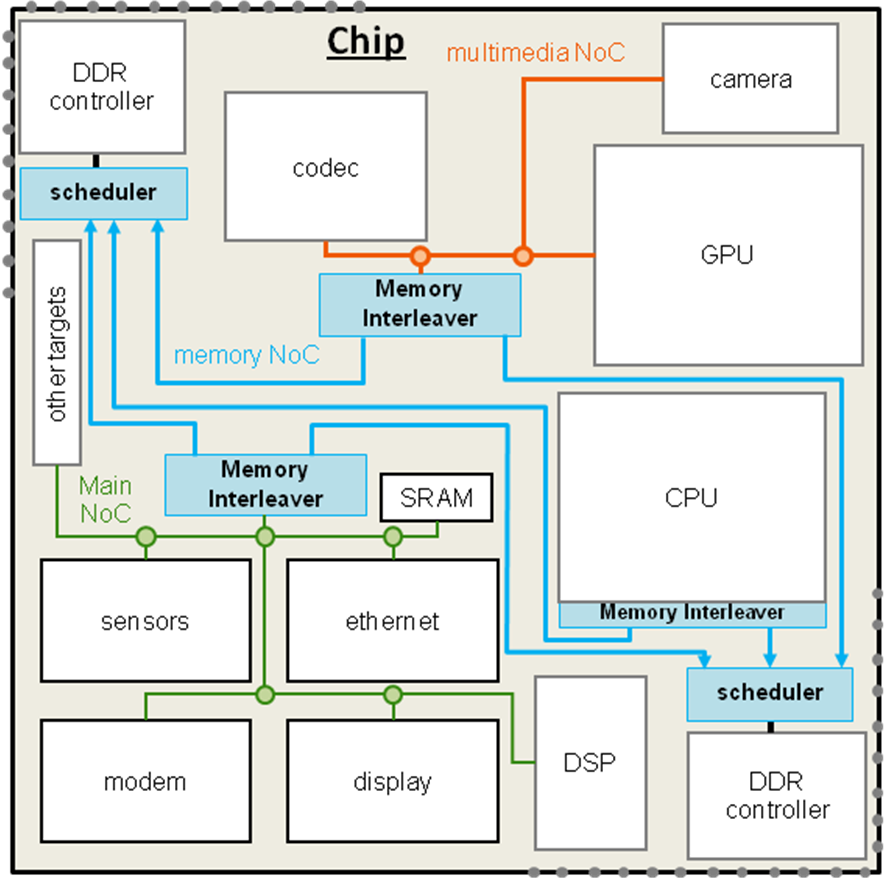

Fig. 2: Automotive SoC designs are becoming larger and more complex with a continuous increase in the number of highly specialized IP accelerators. Image Source: Dream Chip Technologies GmbH.

These new automotive SoCs use multiple specialized processing units on a single chip to perform tasks such as camera vision, body control, and information display simultaneously. The on-chip communications infrastructure is key to efficient data flow across the chip, and as the types and numbers of processing elements increase, the interconnect that links them and the memory architecture that feeds them become crucial.

To avoid slow, power-hungry off-chip DRAM accesses, automotive SoCs increasingly adopt memory techniques that keep data close to where it will be used. Memories that are closely coupled to a single processing element are often implemented as internal SRAMs and are usually transparent to the running software. This approach works well for smaller systems, but each additional processing element needs its own closely coupled memory, so the total amount of such memory grows with the number of processing elements.

Another approach is to use RAM buffers that are shared among multiple processing elements. In this case, however, access must be managed in software, which becomes increasingly complex as the system scales up. That complexity can introduce systematic faults that affect ISO 26262 safety goals.
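To make that software burden concrete, here is a minimal Python sketch, assuming a hypothetical `SharedBuffer` accessed by three simulated processing elements (modeled as threads). Nothing in this model arbitrates access in hardware, so the software must serialize every read-modify-write with a lock; omitting the lock in any one element is exactly the kind of systematic fault described in the paragraph above.

```python
import threading


class SharedBuffer:
    """Hypothetical RAM buffer shared by several processing elements.

    No hardware mechanism prevents two elements from updating the same
    slot at once, so software must serialize access; the lock stands in
    for that software-level arbitration.
    """

    def __init__(self, size: int) -> None:
        self.data = [0] * size
        self.lock = threading.Lock()

    def accumulate(self, index: int, value: int) -> None:
        # The read-modify-write must be atomic; without the lock, concurrent
        # updates from different elements could be lost.
        with self.lock:
            self.data[index] += value


def processing_element(buf: SharedBuffer, pe_id: int, iterations: int) -> None:
    # Each simulated element adds its ID to every slot of the shared buffer.
    for _ in range(iterations):
        for i in range(len(buf.data)):
            buf.accumulate(i, pe_id)


buf = SharedBuffer(size=4)
pes = [threading.Thread(target=processing_element, args=(buf, pe, 1000))
       for pe in range(1, 4)]
for t in pes:
    t.start()
for t in pes:
    t.join()

print(buf.data)  # [6000, 6000, 6000, 6000], i.e. (1 + 2 + 3) * 1000 per slot
```

Every element that touches the buffer must follow the same locking discipline, and that discipline has to be re-verified whenever another element is added.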

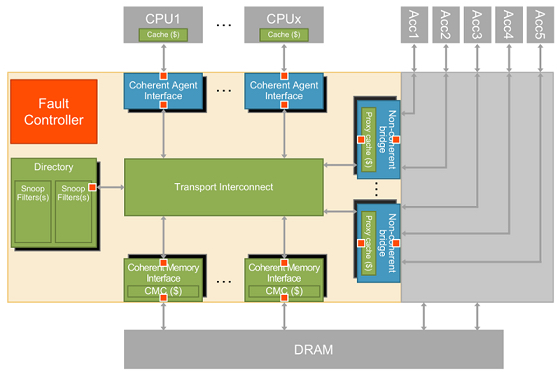

Finally, as systems become larger, it is often useful to implement hardware cache coherence, which allows processing elements to share data without the overhead of software management. A newer cache-coherence technology, now widely implemented in automotive SoCs, uses a specialized configurable cache called a proxy cache to let processing elements share data efficiently with each other and participate as peers in the coherent system.
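For readers unfamiliar with hardware coherence, the following Python sketch models a textbook MSI (Modified/Shared/Invalid) snooping protocol for a single cache line. It is a generic illustration, not the proxy-cache technology described above, and the `Cache` and `Bus` classes are hypothetical. The point is that a consumer never reads stale data, yet no application code manages the sharing: the snooping logic writes back dirty copies and invalidates stale ones.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"  # only valid copy, newer than memory
    SHARED = "S"    # clean copy, possibly also held by peers
    INVALID = "I"   # no usable copy


class Cache:
    """One processing element's cache, tracking a single shared line."""

    def __init__(self, name: str, bus: "Bus") -> None:
        self.name, self.bus = name, bus
        self.state, self.value = State.INVALID, None
        bus.caches.append(self)

    def read(self) -> int:
        if self.state is State.INVALID:
            self.value = self.bus.read_miss(self)  # may pull data from a peer
            self.state = State.SHARED
        return self.value

    def write(self, value: int) -> None:
        if self.state is not State.MODIFIED:
            self.bus.invalidate_others(self)  # peers drop their stale copies
            self.state = State.MODIFIED
        self.value = value


class Bus:
    """Snooping interconnect: hardware, not software, keeps copies consistent."""

    def __init__(self, memory_value: int = 0) -> None:
        self.memory = memory_value
        self.caches = []

    def read_miss(self, requester: Cache) -> int:
        for c in self.caches:
            if c is not requester and c.state is State.MODIFIED:
                self.memory = c.value   # write back the dirty copy
                c.state = State.SHARED  # owner keeps a clean shared copy
        return self.memory

    def invalidate_others(self, requester: Cache) -> None:
        for c in self.caches:
            if c is not requester and c.state is not State.INVALID:
                if c.state is State.MODIFIED:
                    self.memory = c.value  # preserve data before dropping it
                c.state, c.value = State.INVALID, None


bus = Bus(memory_value=0)
cpu = Cache("cpu", bus)
accel = Cache("nn_accel", bus)

print(accel.read())           # 0: accel now holds a Shared copy
cpu.write(42)                 # cpu takes Modified ownership; accel is invalidated
print(accel.state)            # State.INVALID
print(accel.read())           # 42: cpu's dirty copy is written back and shared
print(cpu.state, bus.memory)  # State.SHARED 42
```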

Beyond the memory architecture, whether it achieves data locality with buffers or with cache coherence, the on-chip interconnect also matters. It optimizes the overall data flow and enforces quality of service (QoS), ensuring that automotive SoCs meet their bandwidth and latency requirements.

Bandwidth allocation and latency are critical factors in mission-critical automotive designs, especially when some of the processing, such as neural-network and deep-learning processing, may be non-deterministic. Here, the on-chip interconnect plays a vital role in implementing an SoC architecture that delivers near-real-time performance while preventing processing elements from being starved of data.
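One simple way an interconnect can allocate bandwidth among initiators is weighted arbitration. The Python sketch below uses smooth weighted round-robin, a well-known scheduling algorithm chosen here purely for illustration; the initiator names and weights are hypothetical, and a real interconnect may also use priorities, rate regulators, or other QoS mechanisms.

```python
from collections import Counter


def smooth_wrr(weights: dict, cycles: int) -> list:
    """Grant one transfer slot per cycle using smooth weighted round-robin."""
    total = sum(weights.values())
    credit = {name: 0 for name in weights}
    grants = []
    for _ in range(cycles):
        # Every initiator earns credit equal to its weight each cycle.
        for name, weight in weights.items():
            credit[name] += weight
        # The initiator with the most credit wins (ties go to the earlier entry)
        # and pays back the total, so over sum(weights) cycles each initiator
        # is granted exactly `weight` slots.
        winner = max(credit, key=credit.get)
        credit[winner] -= total
        grants.append(winner)
    return grants


# Hypothetical initiators and QoS weights.
weights = {"camera_isp": 4, "nn_accel": 3, "cpu": 1}
grants = smooth_wrr(weights, cycles=80)
share = {name: n / len(grants) for name, n in Counter(grants).items()}

print(grants[:8])
# ['camera_isp', 'nn_accel', 'camera_isp', 'nn_accel', 'cpu', 'camera_isp', 'nn_accel', 'camera_isp']
print(share)  # {'camera_isp': 0.5, 'nn_accel': 0.375, 'cpu': 0.125}
```

Each initiator receives bandwidth in proportion to its weight, and because grants are interleaved rather than bunched, even the low-weight CPU is served once in every eight cycles instead of waiting behind long bursts.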

2. New technologies

Automotive designs are also providing the impetus for adopting new technologies like artificial intelligence (AI), because it is impossible to manually write “if-then-else” rules that cover complex, real-world scenarios. AI algorithms that can handle highly complex tasks are being incorporated into automated driving systems and other life-critical systems that must make decisions in near-real time. Machine learning, a subset of AI, is the most publicly visible of these new technologies in self-driving cars.

By learning from experience, machine learning enables complex ADAS and automated-driving tasks that would be nearly impossible to implement with rule-based programming. But machine learning requires hardware customization, both to accelerate the algorithms and to optimize data flow.

Therefore, in machine-learning-based SoC designs, ADAS and autonomous-car architects are slicing the algorithms more finely by adding more types of hardware accelerators. These custom accelerators act as heterogeneous processing elements that handle specialized algorithms for functions such as real-time 3D mapping and LiDAR point-cloud mapping.

These highly specialized IP accelerators can send and receive data within near-real-time latency bounds and deliver the huge bandwidth required to identify and classify objects, meeting stringent and often conflicting QoS demands. Here, chip designers can compete and differentiate by choosing what to accelerate, how to accelerate it, and how to connect that functionality to the rest of the SoC.

Among these new technologies, neural networks have become the most common way to implement machine learning. In autonomous driving systems, neural networks implement deep learning on specialized hardware accelerators to classify objects such as pedestrians and road signs.

Fig. 3: The neural network implements specialized processing functions as well as data flow functions in an automotive SoC. Image Source: Arteris IP.

Deep learning is a branch of machine learning that layers algorithms to gain a deeper understanding of the data. It takes raw, uninterpreted data and builds hierarchical representations from which insights about other vehicles, pedestrians, and overall roadside conditions can be drawn.

3. Functional safety

Safety is non-negotiable in the automotive industry, which makes safety verification a crucial part of SoC design for mission-critical automotive applications. Functional safety and compliance with the ISO 26262 standard are therefore imperative for SoCs serving ADAS and automated driving applications.

ISO 26262 defines four Automotive Safety Integrity Levels (ASILs), A through D, plus a QM (quality management) classification for hazards that need only standard quality-management measures. ASIL D provides the most stringent safety protection against malfunctions that could lead to life-threatening injuries.
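An ASIL is assigned during hazard analysis from three ratings: severity (S0 to S3), probability of exposure (E0 to E4), and controllability (C0 to C3). The Python sketch below encodes that mapping with a widely used shortcut: when no rating is class 0, the ISO 26262-3 determination table is equivalent to summing the three class indices, where a sum of 10 gives D, 9 gives C, 8 gives B, 7 gives A, and anything lower gives QM. The helper name `determine_asil` is hypothetical.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S/E/C class indices to 'QM' or an ASIL letter (hypothetical helper).

    severity: 0..3 (S0-S3), exposure: 0..4 (E0-E4), controllability: 0..3 (C0-C3).
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("rating out of range")
    # A class-0 rating in any dimension means no ASIL is assigned.
    if 0 in (severity, exposure, controllability):
        return "QM"
    # Sum shortcut that reproduces the ISO 26262-3 ASIL determination table.
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(
        severity + exposure + controllability, "QM")


print(determine_asil(3, 4, 3))  # D: life-threatening, high exposure, hard to control
print(determine_asil(2, 3, 2))  # A
print(determine_asil(1, 2, 1))  # QM
```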

Several safety mechanisms are relevant to automotive functional safety, including error-correcting code (ECC), hardware duplication of components, fault safety controllers, and built-in self-test (BIST). These mechanisms help automotive SoCs comply with the ISO 26262 standard.
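As an illustration of how ECC can both detect and correct errors, here is a minimal Python sketch of an extended Hamming code that protects 4 data bits with 4 check bits, giving single-error correction and double-error detection (SECDED). The toy width is chosen only for readability; memory ECC commonly applies the same idea to wider words, for example 8 check bits per 64-bit word. The `encode` and `decode` helpers are hypothetical, not any particular IP's implementation.

```python
DATA_POSITIONS = (3, 5, 6, 7)  # codeword positions that carry the 4 data bits


def encode(nibble: int) -> list:
    """Encode 4 data bits into an 8-bit extended Hamming (SECDED) codeword."""
    code = [0] * 8
    for k, pos in enumerate(DATA_POSITIONS):
        code[pos] = (nibble >> k) & 1
    # Parity bit p (at position 1, 2, or 4) covers every position whose index
    # has bit p set, so a valid codeword produces a zero syndrome.
    for p in (1, 2, 4):
        code[p] = sum(code[i] for i in range(1, 8) if i & p and i != p) % 2
    # Position 0 holds overall parity, which distinguishes 1-bit from 2-bit errors.
    code[0] = sum(code[1:]) % 2
    return code


def decode(code: list) -> tuple:
    """Return (data, status); status is 'ok', 'corrected', or 'uncorrectable'."""
    code = list(code)  # work on a copy
    syndrome = 0
    for i in range(1, 8):
        if code[i]:
            syndrome ^= i
    overall = sum(code) % 2
    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1:
        # Odd number of flips: treat it as one error at position `syndrome`
        # (syndrome 0 means the overall parity bit itself flipped).
        code[syndrome] ^= 1
        status = "corrected"
    else:
        # Even number of flips with a nonzero syndrome: a double-bit error.
        return None, "uncorrectable"
    data = sum(code[pos] << k for k, pos in enumerate(DATA_POSITIONS))
    return data, status


cw = encode(0b1011)
cw[5] ^= 1         # single-bit upset
print(decode(cw))  # (11, 'corrected')
cw[6] ^= 1         # a second upset in the same word
print(decode(cw))  # (None, 'uncorrectable')
```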

Implementing functional safety mechanisms in silicon offers many advantages over software-centric approaches. First and foremost, it reduces overall complexity and gives chipmakers more control over system-wide safety features.

At the same time, as automotive SoCs grow larger and more complex with an increasing number of IP blocks, on-chip communication is becoming fundamental not only for meeting QoS requirements but also for designing SoCs that comply with the ISO 26262 functional safety standard. The on-chip interconnect plays a vital role in diagnostic coverage because it sees all of the data transferred within the chip. That allows the interconnect IP to detect and, in some cases, correct errors, which helps meet the requirements of ISO 26262.
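The following Python sketch shows, under simplifying assumptions, how a checker on an interconnect link might detect corruption in transit: the sending side attaches a parity bit to each data word, and the receiving side recomputes it and reports any mismatch to a fault controller. `ProtectedLink` and `FaultController` are hypothetical names. A single parity bit detects only an odd number of flipped bits; a link that must also correct errors would use ECC instead.

```python
from dataclasses import dataclass, field
from typing import Optional


def parity(word: int) -> int:
    """Return 1 if `word` has an odd number of set bits (even-parity bit)."""
    return bin(word).count("1") % 2


@dataclass
class FaultController:
    """Hypothetical block that collects error reports from safety checkers."""
    errors: list = field(default_factory=list)

    def report(self, message: str) -> None:
        self.errors.append(message)


@dataclass
class ProtectedLink:
    """Toy interconnect link with parity generation and checking."""
    fault_ctrl: FaultController

    def send(self, word: int) -> tuple:
        # Sender side: attach the parity bit to the payload.
        return word, parity(word)

    def receive(self, word: int, par: int, source: str) -> Optional[int]:
        # Receiver side: recompute parity and flag any mismatch.
        if parity(word) != par:
            self.fault_ctrl.report(f"parity error on transfer from {source}")
            return None  # do not deliver corrupted data
        return word


fc = FaultController()
link = ProtectedLink(fc)
word, par = link.send(0xCAFE)
print(link.receive(word, par, "cpu"))        # 51966
print(link.receive(word ^ 0x4, par, "cpu"))  # None (single-bit upset detected)
print(fc.errors)                             # ['parity error on transfer from cpu']
```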

Fig. 4: How the on-chip interconnect protects data flow for functional safety. Image Source: Arteris IP.

Conclusion

A better understanding of the issues outlined in this article will allow chip designers to quickly implement the complex features required in ADAS and autonomous driving applications. It will also help designers to tailor the SoC hardware architecture according to specific application requirements.
