By Alix Paultre, contributing editor

One of the biggest debates in society is over electric vehicle (EV) development and deployment. Because it is a highly charged issue (no pun intended), the argument spills over into electronic design, where the discussion is not only mirrored but layered, as engineers debate every aspect of the issue rather than just the most apparent ones. The good thing about engineers is that their arguments are grounded in reality and in what can actually be accomplished.

Unfortunately, perception often defines reality, so the engineering community is, in one sense, held hostage to public opinion when it comes to the rate of adoption and the success of EVs in the marketplace. However, one aspect of next-generation vehicles that is not in dispute is that the car of the future will be fully or partially autonomous, regardless of the motive force driving the wheels.

The autonomous vehicle of the near future relies on a network of integrated high-performance sensors and intelligence to control its navigation by sensing the direction in which the vehicle is traveling, surrounding traffic locations, speed of the vehicle, and the location of the vehicle in relation to everything around it. (Source: ACEINNA)

No matter how a vehicle is powered to reach its destination, advanced navigation systems are critical to successful and safe performance. This is important not only for vehicles but, frankly, for any remote autonomous system, whether based in water, on the ground, or in the air. Even advanced augmented reality systems require precise and accurate location-determination capabilities to function properly. Creating a navigation system that empowers true autonomy demands extensive simulation and in-field testing with data collection and analysis systems.

Self-awareness

“Know thyself” is a mantra for people, but an autonomous system must also have some sense of where (and sometimes when and how) it is in real space. This awareness is created by coupling an array of sensors that tell the device about its environment to a logic system that can turn that raw data into usable information and guidance.

In systems in which human safety is not a critical issue, a little slop in the information is OK. People playing Pokémon Go don’t have to worry about how accurate their position information is, and some actually game the system (pun intended), using their phone’s wandering GPS to capture targets outside of their true spatial location. This kind of inaccuracy is obviously not tolerable when an error can result in an accident that costs human lives as well as the possible loss of the vehicle.

This is where sensor fusion takes place. A properly designed autonomous system will use an array of sensors with overlapping and complementary capabilities to ensure complete coverage of the vehicle’s local environment. The more comprehensive a system’s awareness of its environment, the more accurately it can determine its position and the path to its goal.
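As a rough illustration of why overlapping sensors help, the sketch below fuses two independent readings of the same quantity by inverse-variance weighting. This is only one simple fusion rule, chosen here for illustration, and the sensor names, readings, and variances are hypothetical rather than taken from any real system.

```python
def fuse(estimates):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Each estimate is weighted by how much it can be trusted (1 / variance),
    so the more precise sensor dominates without the other being ignored.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance-to-obstacle readings in meters: (value, variance).
radar = (25.4, 0.25)   # assumed to be the more precise sensor
camera = (24.8, 1.0)   # assumed to be the noisier sensor

fused_value, fused_variance = fuse([radar, camera])
print(f"fused distance: {fused_value:.2f} m, variance: {fused_variance:.2f}")
```

Under this rule, the fused variance is always smaller than the variance of the best individual sensor, which is the basic argument for overlapping coverage.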

Sensor integration

There are several sensor types that must be considered when creating a system to determine position and direction. First are “body” sensors that provide precise basic information such as roll, pitch, and yaw. Once you know how you are oriented, location is the next important thing.

GPS is a very good way to get basic positional information, but it isn’t accurate enough for geopositioning without a secondary source of feedback to “lock” the rough GPS data into the real world. When car-based GPS systems first came out, several manufacturers put wheel sensors on the cars to track the vehicle’s physical position against local maps held in memory.
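To make the wheel-sensor idea concrete, here is a minimal dead-reckoning sketch. It assumes a simple differential odometry model in which the distances rolled by the left and right wheels update a planar pose. The function name, the track width, and the wheel increments are illustrative assumptions, not a description of how any particular manufacturer implemented it.

```python
import math


def dead_reckon(x, y, heading, d_left, d_right, track_width):
    """Advance a planar pose from left/right wheel travel (meters).

    heading is in radians and is not wrapped to a fixed interval.
    """
    d_center = (d_left + d_right) / 2.0            # distance moved by the vehicle center
    d_heading = (d_right - d_left) / track_width   # change in heading from the wheel difference
    mid_heading = heading + d_heading / 2.0        # use the average heading over the step
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    return x, y, heading + d_heading


# Hypothetical wheel increments: straight, then a gentle left turn.
pose = (0.0, 0.0, 0.0)
for d_left, d_right in [(1.0, 1.0), (1.0, 1.0), (0.9, 1.1), (0.9, 1.1)]:
    pose = dead_reckon(*pose, d_left, d_right, track_width=1.5)

print("x=%.2f m, y=%.2f m, heading=%.3f rad" % pose)
```

Because each step builds on the last, small wheel errors accumulate over distance, which is why this kind of estimate is paired with GPS and map data rather than used alone.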

This kind of sensing used to require a battery of separate sensors (accelerometers, magnetometers, and gyroscopes) working in unison, because each type has weaknesses that must be corrected by input from the others.

Accelerometers can determine static roll and pitch like a carpenter’s level, but the data will be wrong when accelerating or decelerating because an accelerometer can’t distinguish gravity from linear acceleration on its own. Magnetometers can determine a heading but cannot determine roll and pitch, which is important to orientation. That is why you used to also need three gyros and a filter to combine the information to get good roll, pitch, and yaw/heading. Yaw can be achieved without magnetometers from gyros, but gyroscopes will drift. A magnetometer in a car doesn’t work so well because of all the metal in and around the vehicle, so you need info from a gyro and GPS.
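The interplay described above can be sketched in a few lines. The example below computes static roll and pitch from an accelerometer and then blends a drifting gyro with that reference using a complementary filter, which is one simple choice of combining filter and not necessarily the one used in any given product. The axis convention (a level, stationary sensor reads +1 g on z), the gyro bias, and the blend factor are all assumptions made for illustration.

```python
import math


def accel_roll_pitch(ax, ay, az):
    """Static roll and pitch (radians) from accelerometer readings in g.

    Only valid when the sensor is not accelerating, since gravity and
    linear acceleration are indistinguishable in the raw readings.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_step(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Trust the integrated gyro short-term and the accelerometer long-term."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


# A stationary, level vehicle whose gyro has a hypothetical 0.01 rad/s bias.
dt = 0.01
gyro_bias = 0.01
accel_roll, _ = accel_roll_pitch(0.0, 0.0, 1.0)

gyro_only = 0.0
fused = 0.0
for _ in range(1000):  # 10 seconds of samples
    gyro_only += gyro_bias * dt  # plain integration: drift grows without bound
    fused = complementary_step(fused, gyro_bias, accel_roll, dt)

print(f"gyro-only roll: {gyro_only:.4f} rad, fused roll: {fused:.4f} rad")
```

In this sketch, the gyro-only estimate keeps drifting for as long as it runs, while the fused estimate settles at a small bounded error because the accelerometer keeps pulling it back toward level.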

This kind of brute-force solution using a battery of sensors has now been supplanted by using the latest in integrated inertial sensing modules to provide this important feedback.

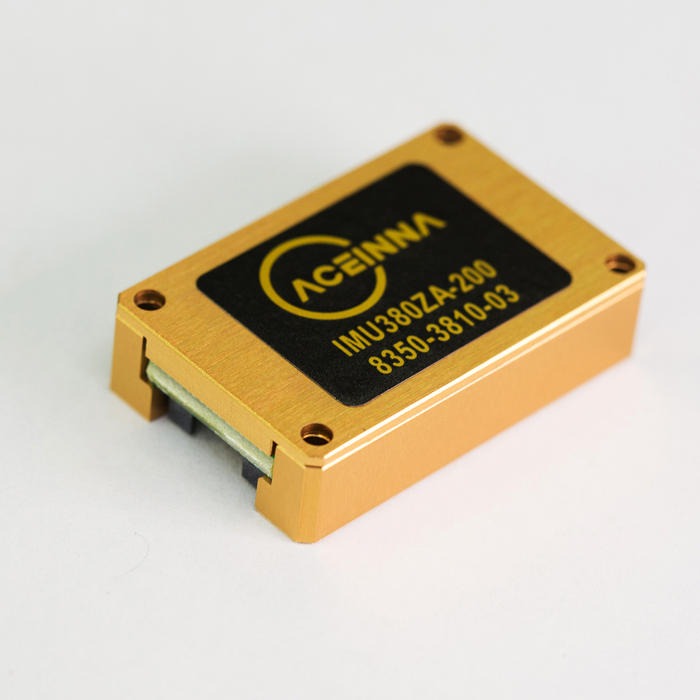

A single IMU device (like the ACEINNA IMU380ZA-209) can replace the functions of a variety of other sensors in one component. (Source: ACEINNA)

A solution like ACEINNA’s IMU380ZA-209 small-scale fully calibrated inertial measurement unit (IMU) can serve demanding embedded applications that require a complete dynamic measurement solution in a robust low-profile package. By integrating all of the functions previously available only separately, an IMU not only saves space and components but also development time and cost. To simplify connections to the system, the IMU380ZA-209 provides a standard serial peripheral interface (SPI) bus for cost-effective digital board-to-board communications.

The 9-axis IMU380ZA-209 can measure acceleration of up to 4 g and accepts a supply voltage from 3 V to 5.5 V. This wide input range eases system integration, reducing the amount of power-conversion electronics required. A wide operating temperature range of –40°C to 85°C ensures that it will function in a variety of ambient environments, and a bandwidth of 5 Hz to 50 Hz and an angular rate range of 200 degrees/second mean that it can handle fast-changing situations.

The ACEINNA 9-axis IMU380ZA-209, measuring only 24.15 x 37.7 x 9.5 mm, can handle a wide range of operating temperatures and requires minimal power. (Source: ACEINNA)

These performance aspects underscore the ability of an IMU to not only reduce space and cost but also provide a higher level of performance and reliability. By replacing the legacy suite of sensors with an integrated system using newer technology, you will achieve a better result than that ensemble of sensors could achieve together.

Integration removes cables and wires, bulky connectors, and separate housings, increasing robustness and reliability, while the integration of functionality increases accuracy and precision. These advantages cascade throughout the design, from less complex power electronics to better vehicle packaging made possible by reduced and simplified internal space requirements.

Putting it together

When developing a typical design, dynamic trajectory and modeled sensor readings (with errors) are simulated to create an Extended Kalman Filter or Particle Filter navigation algorithm. Once a given simulation shows promising results, that algorithm is implemented with real-time code on embedded hardware. The resulting prototype is then tested in the field, where data is logged (often into the terabytes) and then analyzed against predicted results.
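The simulation step of that workflow can be illustrated with a deliberately small example. The sketch below generates a straight-line trajectory, adds Gaussian noise to model a position sensor, and runs a scalar linear Kalman filter over the result. It is a simpler cousin of the Extended Kalman Filter named above, not an EKF itself, and the velocity, noise levels, and tuning values are hypothetical.

```python
import random


def simulate(n=200, velocity=10.0, dt=0.1, noise_std=2.0, seed=1):
    """Return (true positions, noisy measured positions) for constant-velocity motion."""
    rng = random.Random(seed)
    truth = [velocity * dt * (i + 1) for i in range(n)]
    measured = [t + rng.gauss(0.0, noise_std) for t in truth]
    return truth, measured


def kalman_1d(measurements, velocity, dt, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter: predict with the known velocity, correct with each measurement.

    q is the process noise variance added per step; r is the measurement noise variance.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: move the state forward and grow the uncertainty.
        x += velocity * dt
        p += q
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + r)
        x += k * (z - x)
        p *= 1.0 - k
        estimates.append(x)
    return estimates


def rmse(a, b):
    """Root-mean-square error between two equal-length sequences."""
    return (sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)) ** 0.5


truth, measured = simulate()
estimates = kalman_1d(measured, velocity=10.0, dt=0.1, q=0.01, r=4.0)
print(f"raw sensor RMSE: {rmse(measured, truth):.2f} m")
print(f"filtered RMSE:   {rmse(estimates, truth):.2f} m")
```

Comparing the two error figures against the simulated ground truth is the same kind of check that is later repeated with logged field data, where the truth has to come from a reference system instead of the simulator.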

To facilitate this process, developers of navigation systems typically select and use different software for each step. Surprisingly, open-source “web technologies” can form the basis of a highly efficient software stack for the development of navigation systems. As a real-world example, ACEINNA has traditionally used a stack with many “classic” proprietary embedded programming and simulation tools, including MATLAB for simulation, IAR Systems for compiling embedded C/C++ code, and a combination of C# with National Instruments tools to build configuration, graphing, and data logging graphical user interfaces.

At the end of the day, all of this information is put into large data files that are stored on in-house servers. At ACEINNA, the decision was made to consider a new approach based on “web technologies.”

In one example, a web technologies-centric stack uses Python for simulation, Microsoft’s open-source VS Code or GitHub’s Atom as the editor with Arm’s GNU Compiler Collection (GCC) toolchain in place of a proprietary IDE, and JavaScript to build the user interface and logging engines. Native user interfaces for desktop and mobile can even be deployed with compatible projects such as Electron and React Native.

Cloud services such as Azure or AWS make it easy to catalog and manage large datasets collected from the field and to share those datasets both inside and outside the organization. Both JavaScript and Python are extremely well-supported by cloud vendors, allowing straightforward integration of their compute and storage services into the stack. The license fees per developer are zero, and there is a vast selection of libraries, from numerical analysis in Python to JavaScript-based graphing.

The biggest issue is the upfront work required to make the embedded tool chain work. Professionally licensed tools can, at times, be more user-friendly out of the box. However, the large community of web developers and the many forums that support them largely negate this advantage. With many specialized libraries now targeted at helping embedded developers use open-source tools, the path is rapidly becoming far easier to follow.

Driving forward

The creation of a truly autonomous vehicle that serves the user safely and effectively must use a full palette of sensors integrated into a system that is not only intelligent in its own right but also web-enabled to leverage the external functionalities available to maximize system performance. Proper systems integration can mean the difference between success and failure in the highly competitive and unforgiving autonomous vehicle marketplace.
