BY DEEPAK BOPPANA, Senior Director of Product and Segment Marketing

Lattice Semiconductor

www.latticesemi.com

From smart homes to self-driving cars to medical devices, artificial intelligence (AI) and edge computing have taken center stage, and there’s no doubt that AI is spreading rapidly across multiple industries. The continued development of the internet of things (IoT) and connected devices is driving shifts in system architectures and enabling new applications, welcoming not only the rise of AI but also increased intelligence at the edge.

Designers building computing solutions at the edge are challenged to deliver designs that are flexible, low-power, small-form-factor, and low-cost, all without compromising performance. These new applications will require AI and machine-learning-based computing solutions that sit closer to the source of IoT sensor data than the cloud does.

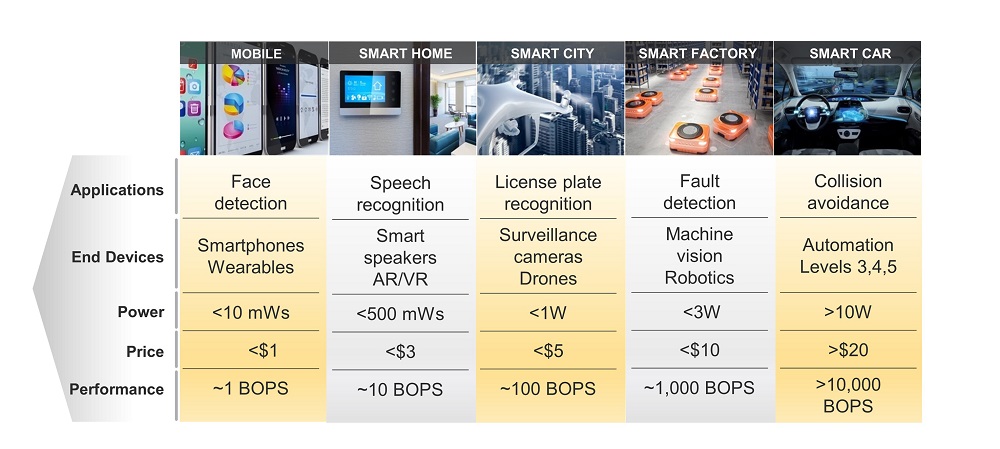

Fig. 1: A new generation of AI-based edge-computing applications demands a unique mix of requirements.

So how can developers build edge solutions that consume little power at a low cost without compromising performance? First and foremost, designers need to keep costs low so that the solution can compete with high-volume edge solutions. Second, they need field-programmable gate arrays (FPGAs) that offer maximum design flexibility and support a wide range of I/O interfaces in a market where neural-network architectures and algorithms are rapidly evolving. Third, they need solutions that, through custom quantization, allow them to trade off accuracy for power consumption. Lower-density FPGAs optimized for low-power operation have proven able to meet the stringent performance and power limits imposed at the network edge while offering a time-to-market advantage.
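The accuracy side of the quantization trade-off mentioned above can be illustrated with a minimal sketch. It assumes a simple symmetric, uniform quantization scheme and randomly generated weights; the function names are hypothetical and this is not a description of any particular vendor tool. Narrower bit widths generally allow smaller multipliers and less memory traffic, which is where power savings tend to come from, but the sketch measures only the rounding error, not power.

```python
import random


def quantize(weights, bits):
    """Map floats to signed integers of the given width (symmetric, uniform)."""
    qmax = 2 ** (bits - 1) - 1          # e.g. 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in q]


def mean_abs_error(weights, bits):
    """Average rounding error introduced by quantizing to `bits` bits."""
    q, scale = quantize(weights, bits)
    restored = dequantize(q, scale)
    return sum(abs(a - b) for a, b in zip(weights, restored)) / len(weights)


# Hypothetical weights, for illustration only.
random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]

for bits in (16, 8, 4, 2):
    print(f"{bits:2d}-bit weights: mean abs error = {mean_abs_error(weights, bits):.6f}")
```

The error grows as the bit width shrinks, so a designer would pick the narrowest width that still gives acceptable accuracy for the application.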

Designers also need FPGAs that allow them to build compact AI devices that deliver high performance without violating footprint or thermal-management constraints. Finally, those who bring solutions to market first reap a tremendous advantage. Any prospective solution must therefore come with the resources needed to customize designs and shorten the development cycle, whether those are demonstrations, reference designs, or design services.

Given this, what role do FPGAs play in edge computing? Machine learning typically involves two types of computing workloads. In training, a system learns a new capability from existing data. A facial-detection function, for example, learns to recognize a human face by collecting and analyzing tens of thousands of images. This early training phase is, as one might imagine, highly compute-intensive. In these cases, developers typically employ high-performance hardware in the data center that can process large amounts of data.

Inferencing, the second phase of machine learning, applies the system’s capabilities to new data by identifying patterns and performing tasks. Continuing the example above, the facial-detection function would continue to refine its ability to correctly identify a human face. In this phase, the system is consistently learning, which increases its intelligence over time. Given the many constraints of operating at the edge, designers cannot afford to perform inferencing in the cloud. Instead, they must extend the system’s intelligence by performing computational tasks at the edge, close to the source of the data.

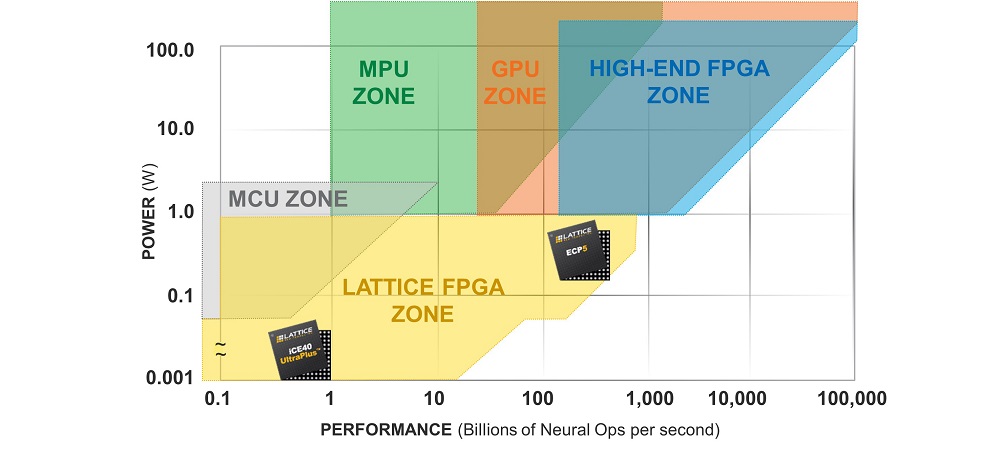

So how can designers replace the vast computational resources available in the cloud to perform inferencing on the edge? An effective solution is to take advantage of the parallel processing capability inherent in FPGAs and use it to accelerate neural-network performance. By using lower-density FPGAs specifically optimized for low-power operation, designers are able to meet the performance and power needs at the network edge. For example, Lattice’s ECP5 FPGA can accelerate neural networks under 1 W, and the iCE40 UltraPlus FPGA can accelerate neural networks in the milliwatt range, both of which allow designers to build efficient, AI-based edge-computing applications.

Fig. 2: An example of an ultra-low-power stack at the edge is the recently launched Lattice sensAI.
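To make the parallel-processing argument above concrete, the sketch below shows why neural-network layers parallelize well: each output of a fully connected layer is an independent dot product, so its multiply-accumulate (MAC) operations can be spread across many hardware units. The cycle estimate is an idealized assumption (one MAC per unit per cycle, no memory or routing overhead), the function names are hypothetical, and the figures do not describe any specific device.

```python
import math


def dense_layer(inputs, weights):
    """Compute a fully connected layer; each row of `weights` yields one output.

    No output depends on any other, so the rows could be evaluated at the same time.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def ideal_cycles(num_inputs, num_outputs, parallel_macs):
    """Idealized cycle count, assuming each MAC unit completes one MAC per cycle."""
    total_macs = num_inputs * num_outputs
    return math.ceil(total_macs / parallel_macs)


# A small layer: 3 inputs, 2 outputs.
print(dense_layer([1, 2, 3], [[1, 0, 0], [0, 1, 1]]))

# A 64-input, 16-output layer on 1, 16, and 64 hypothetical MAC units.
for units in (1, 16, 64):
    print(f"{units:2d} MAC units: {ideal_cycles(64, 16, units)} cycles")
```

Under these assumptions, the cycle count falls in proportion to the number of MAC units, which is the kind of speedup a parallel fabric aims to exploit.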

In addition to access to computational hardware, designers need a variety of IP, tools, reference designs, and design expertise to build effective solutions and enable faster time to market. An example of a comprehensive developmental ecosystem helping to address this challenge is sensAI, based on both the ECP5 and iCE40 UltraPlus FPGA series. Designed to accelerate the deployment of AI into consumer and industrial IoT applications, the Lattice sensAI stack offers flexible inferencing solutions optimized for the edge.

Lattice sensAI meets the requirements of edge computing by:

- Combining modular hardware platforms, neural-network IP cores, software tools, reference designs, and custom design services from ecosystem partners

- Simplifying the task of building flexible inferencing solutions

- Optimizing for low power consumption from 1 mW to 1 W in package sizes starting as small as 5.5 mm² and priced for high-volume production anywhere between $1 and $10

- Providing companies and developers with all the tools needed to quickly and efficiently develop tomorrow’s consumer solutions as machine learning moves closer to the edge

As the IoT continues to advance, the number of connected devices and the amount of data they generate will grow with it. Eventually, the power and bandwidth required to send data to the cloud will be too great, and the space needed to store the data will challenge the resources of even the most powerful cloud companies. This is the start of the revolution for AI and edge computing. As users seek higher levels of intelligence, demand will grow for systems that integrate low-power inferencing close to the source of the IoT data and favor platforms with computational capacity on the edge device. FPGAs are increasingly important in meeting the requirements of this new generation of AI-based edge computing because they provide security, low latency, and design flexibility and enable quick time to market for programming intelligence into AI systems.
