When you hear people refer to cars becoming “data centers on wheels,” they’re usually thinking about how cars can stream a multitude of music services or serve up navigation tips in 75 languages. For consumers, it is about enhancing the driving experience. For automotive OEMs, it promises to be a way to differentiate and potentially generate new revenue streams.

But there’s another important implication lurking behind the statement. To deliver that seamless experience, cars increasingly will need to be built like data centers and adopt a computing architecture that can accommodate new applications and performance demands.

Cars will need more powerful processors, vast storage systems and versatile networks that can transfer data between sensors, cameras and other devices that may not have communicated much before. These computing systems will also have to make decisions in real time, deflect attacks from hackers, operate flawlessly in confined spaces, be impervious to heat and vibration, and consume as little energy as possible.

And these digital capabilities will need to be affordable so they can be integrated across makes and models. Autonomous cars? Futurists say these top-of-the-line vehicles will generate 5 TB or more of data per day, while a typical single automotive camera will generate a few hundred gigabits per hour of uncompressed video. Integrating a system that can absorb this information and turn it into something actionable will be a challenge in an economy vehicle, especially in the low- to middle-income markets internationally that will drive the industry’s future growth.
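To put those volumes in networking terms, the short Python sketch below converts them to average bit rates. The 5 TB figure comes from the text; the 300 Gbits/hour camera value is an assumed stand-in for “a few hundred,” and decimal units (1 TB = 10^12 bytes) are likewise an assumption.

```python
# Convert the quoted data volumes into average sustained bit rates.
# Assumption: decimal units (1 TB = 10**12 bytes, 1 Gbit = 10**9 bits).

SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_HOUR = 60 * 60


def tb_per_day_to_mbit_per_s(tb_per_day: float) -> float:
    """Average rate in Mbit/s for a daily volume given in terabytes."""
    bits_per_day = tb_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6


def gbit_per_hour_to_mbit_per_s(gbit_per_hour: float) -> float:
    """Average rate in Mbit/s for an hourly volume given in gigabits."""
    return gbit_per_hour * 1e9 / SECONDS_PER_HOUR / 1e6


vehicle_mbit_s = tb_per_day_to_mbit_per_s(5.0)       # figure from the text
camera_mbit_s = gbit_per_hour_to_mbit_per_s(300.0)   # assumed "few hundred"

print(f"Vehicle, 5 TB/day:      {vehicle_mbit_s:.0f} Mbit/s")  # 463 Mbit/s
print(f"Camera, 300 Gbits/hour: {camera_mbit_s:.0f} Mbit/s")   # 83 Mbit/s
```

The vehicle figure is an average over a full 24 hours. A car that runs only part of the day would have to move the same volume at a proportionally higher rate while it is operating.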

The digitization of the car, of course, is already underway. Cars contain 3× to 4× more chips than they did a decade ago, according to some experts, and the value of those chips in higher-end models has grown from about $350 to $2,000. (Electric vehicles, meanwhile, can contain 3× as many chips as internal-combustion-engine [ICE] vehicles, or more, depending on the complexity of the vehicle.)

To eke out further performance gains and differentiate their products, many carmakers are collaborating with chip designers on customized semiconductors optimized for specific vehicle performance goals and/or software applications. Software development groups inside automakers, meanwhile, have been growing. Many manufacturers have also begun to roll out software-defined services that weren’t available a few years ago.

Still, we have a long way to go. Here are some of the next steps necessary for making that vision a reality.

Going zonal

One of the first and most important changes underway revolves around the architecture for organizing the computing resources in a car. Fifteen years ago, the architecture was fairly rudimentary. The “network” consisted of a link between a sensor, a microcontroller (MCU) and a dashboard warning light. Manufacturers would sometimes brag about having 70 to 100 processors in one of their cars, but most of those processors were simple, fixed-function devices.

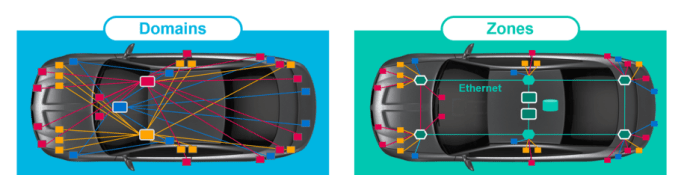

Since then, we’ve seen the rise of domain architectures, wherein sensors and MCUs are grouped into networks by function. The infotainment domain, for instance, might include the radio, driver’s console and navigation system, while the body domain would include the brakes, and the advanced driver-assistance system (ADAS) domain would include cameras.

Domain architectures represent an improvement, but they also mean multiple networks made up of numerous localized point-to-point connections crisscrossing the body of the car. It doesn’t scale well.

What if surrounding cameras are on the infotainment network and need to transfer data to the ADAS network? Or what if the manufacturer wants to introduce a predictive maintenance service that requires sending data from the engine to an external 5G link? It means more hops and travel time: Unnecessary complexity and degraded performance are the result.

The extra wiring also adds quite a bit of heft. The cable harness is typically the third-heaviest component in a car and, if stretched end-to-end, can measure over 1.5 km. Cable harnesses are also typically installed by hand in the factory, adding production costs. Robots aren’t sensitive enough for this work, and the crevices and voids available for cables can change across car models.

Enter zonal architectures. In zonal architectures, all of the devices in a particular physical zone of a vehicle are linked to a local zonal aggregation switch with short cables (Figure 1). The right front zonal switch would link to the brakes, headlight, tire sensors and other devices close to each other, even though they once lived in separate domains.

Zonal aggregation switches—and there will be four to six zones in cars by the second half of the decade—then link to central switches that coordinate and organize traffic flow between the zones, central computing and storage, and external services.

Figure 1: Communication breakdown—communication between the rear right camera (red) and rear left camera requires a trip to the front of the car in domain architectures, and connection to the right front brake (yellow) is impossible. In zones, it is a direct connection or short loop through nearby zonal routers (green hexagon). (Source: Marvell Technology Inc.)
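The rear-camera example in Figure 1 can be sketched as a shortest-path comparison. The node names, link layout and cable lengths below are hypothetical, chosen only to mirror the figure’s description; they are not measurements from any vehicle.

```python
import heapq


def shortest_path(links, src, dst):
    """Dijkstra over undirected links given as (node_a, node_b, metres)."""
    graph = {}
    for a, b, metres in links:
        graph.setdefault(a, []).append((b, metres))
        graph.setdefault(b, []).append((a, metres))

    queue = [(0.0, src, [src])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == dst:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, metres in graph.get(node, []):
            if nxt not in visited:
                heapq.heappush(queue, (dist + metres, nxt, path + [nxt]))
    raise ValueError(f"no route from {src} to {dst}")


# Hypothetical domain layout: each camera is wired to its own domain
# controller at the front of the car, and the controllers meet at a gateway.
domain_links = [
    ("rear_right_cam", "infotainment_ctrl", 4.0),
    ("infotainment_ctrl", "gateway", 0.5),
    ("gateway", "adas_ctrl", 0.5),
    ("adas_ctrl", "rear_left_cam", 4.0),
]

# Hypothetical zonal layout: each camera has a short run to its nearest
# zonal switch; zonal switches link to each other and to a central switch.
zonal_links = [
    ("rear_right_cam", "rear_right_zone", 0.5),
    ("rear_left_cam", "rear_left_zone", 0.5),
    ("rear_right_zone", "rear_left_zone", 1.5),
    ("rear_right_zone", "central_switch", 2.5),
    ("rear_left_zone", "central_switch", 2.5),
]

for name, links in (("domain", domain_links), ("zonal", zonal_links)):
    metres, path = shortest_path(links, "rear_right_cam", "rear_left_cam")
    print(f"{name}: {metres} m over {len(path) - 1} links via {path}")
```

With these assumed lengths, the domain route covers 9.0 m over four links, while the zonal route covers 2.5 m over three. The exact numbers depend entirely on the made-up layout; the point is that the zonal path stays at the rear of the car.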

The advantages are numerous, as you can see in Figure 1. First, the amount of cable needed to connect everything is drastically reduced: Less weight means greater mileage in EVs and ICEs. Second, and arguably more important, communication is typically faster, a key consideration for the real-time computing problems inherent in driving. Third, it provides a way to use computing resources more effectively.

With zonal architectures, manufacturers can replace dozens of fixed-function MCUs with powerful, centralized, programmable CPUs that can perform predictive diagnostics or other complex tasks that would have been impractical or impossible with the old web of distributed devices. Storage can be enhanced through centralization as well.

If a hierarchical architecture like this sounds familiar, it should: It’s similar to the architecture you see in enterprises and data centers, with edge switches managing the devices in their territory and then linking to their kindred switches through a central aggregation switch.

Technology transfer

There is another big advantage: Zonal architectures pave the way for automakers to move from their current industry-specific technologies, which tend to evolve slowly, to technology being propelled forward by other industries.

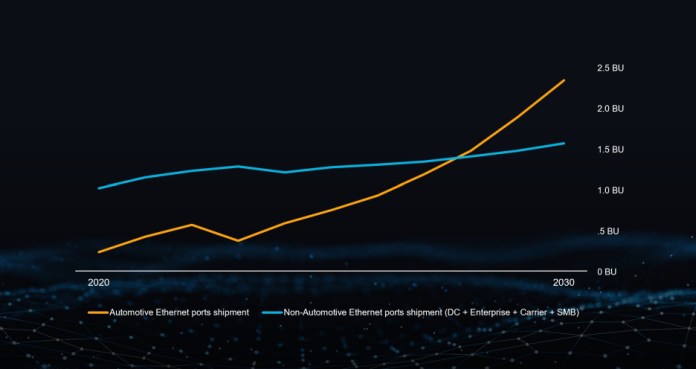

The default choice for zonal networking is Ethernet, the standard for IT networking for over 50 years. The number of Ethernet ports shipped annually to the automotive industry will likely pass 1 billion over the next few years, more than double today’s rate and 10× the number shipped in 2018. Toward the end of the decade, the number of Ethernet ports shipped to automotive may even pass the number shipped to data centers (Figure 2). Relying on established standards reduces development costs, reduces the risk of vendor lock-in and helps accelerate the pace of innovation, addressing three problems the auto industry has faced in electronics.

Figure 2: Auto Ethernet ports won’t be as fast, but annual shipments could soon reach over 1 billion. (Source: Marvell Technology Inc. and industry analyst estimates)

Additionally, it will make it easier to scale. Current domain switches in cars are capable of transferring data at about 1–10 Gbits/s. And that’s at the high end. After 2028, zonal switches will reach up to 25 Gbits/s, with central switches topping 90 Gbits/s. If history is any indication, bandwidth will continue to double every three to four years.
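To see what that doubling rate implies, here is a minimal Python sketch of the compounding. It assumes the 25-Gbits/s and 90-Gbits/s figures as starting points and a 12-year horizon picked purely for illustration; the results are an extrapolation of the stated rule of thumb, not a forecast.

```python
def projected_gbits(base_gbits: float, years_elapsed: float,
                    doubling_period_years: float) -> float:
    """Bandwidth after compounding one doubling per doubling period."""
    return base_gbits * 2 ** (years_elapsed / doubling_period_years)


HORIZON_YEARS = 12  # assumed horizon, chosen only for illustration

for label, base in (("zonal", 25.0), ("central", 90.0)):
    fast = projected_gbits(base, HORIZON_YEARS, 3)  # doubling every 3 years
    slow = projected_gbits(base, HORIZON_YEARS, 4)  # doubling every 4 years
    print(f"{label}: {slow:.0f} to {fast:.0f} Gbits/s after {HORIZON_YEARS} years")
```

Over 12 years, a three-year doubling period gives four doublings (16×) and a four-year period gives three (8×), so the 90-Gbits/s central switch would land between 720 and 1,440 Gbits/s under this rule.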

Why would anyone need a 90-Gbits/s switch in a car? Managing cameras, for one. The number of devices capturing visual data for improved safety will spike dramatically in the coming years. LiDAR and thermal cameras will also become more prevalent to capture other types of data that can complement video streams or body sensors. Camera bridges, which can streamline the process of managing the large volumes of real-time data generated by cameras, will also be developed to ensure networks can seamlessly handle the surge in traffic.

Security will also potentially improve rapidly: Cloud and security companies already invest deeply in improving security technology. AI-enhanced technology to identify vulnerabilities, contain attacks and secure data, if hackers can even get that far, will be standard features of cars. And again, taking advantage of technologies built for data centers will enhance the level of protection.

The technology in cars, of course, won’t be identical to what you find in data centers. In some ways, it will be more sophisticated. Most automotive processors and Ethernet devices, for instance, contain redundant, parallel cores. In case one fails, the other takes over. That’s because cars can’t tolerate a temporary outage like data centers can.
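The takeover behavior can be illustrated with a small software analogy in Python. The `RedundantPair` class and the two core functions are hypothetical names invented for this sketch, and it says nothing about how any particular chip implements redundancy.

```python
from typing import Callable

Core = Callable[[float], float]


class RedundantPair:
    """Run work on an active core; switch to the other core if it fails."""

    def __init__(self, primary: Core, backup: Core) -> None:
        self._cores = [primary, backup]
        self._active = 0  # index of the core currently in use

    @property
    def active_index(self) -> int:
        return self._active

    def run(self, reading: float) -> float:
        try:
            return self._cores[self._active](reading)
        except Exception:
            # The active core failed: hand over to the other core and retry.
            # If that one fails too, the error propagates to the caller.
            self._active = 1 - self._active
            return self._cores[self._active](reading)


def faulty_core(reading: float) -> float:
    raise RuntimeError("simulated core fault")


def healthy_core(reading: float) -> float:
    return reading * 2.0  # stand-in for real processing


pair = RedundantPair(primary=faulty_core, backup=healthy_core)
print(pair.run(21.0), "computed on core", pair.active_index)
```

Here the first call fails on the primary, the pair switches to the backup, and later calls go straight to the backup without retrying the faulty core.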

In many ways, we’re at the start of a new journey for the automotive industry. We don’t know exactly where the road will go, but we have a pretty good sense. And by leveraging the technology and lessons learned from data centers, we can get there faster.
