Advanced driver-assistance systems (ADAS) are a major driver of growth and innovation in the sensor market. According to Yole Développement (Yole), the sensor market for ADAS vehicles is expected to reach $22.4 billion in 2025, led by radar. Radar revenue is expected to reach $9.1 billion in 2025, and LiDAR revenue, though relatively small today, is forecast to grow to $1.7 billion. Although ADAS accounted for only 1.5% of the automotive and industrial LiDAR market in 2020, Yole expects that share to reach 41% in 2026.

In general, radar works by transmitting a signal that bounces off an object, revealing the object’s presence and range. The system transmits a signal at a known frequency and then analyzes the frequency of the return. For ADAS, the difference between the two, including any Doppler shift, determines the position, distance, and speed of obstacles.
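The article does not say which modulation scheme a given radar uses. Many automotive radars use linear frequency-modulated continuous-wave (FMCW) chirps, for which the frequency difference between the transmitted and received signals (the beat frequency f_b) is proportional to range: R = c·f_b / (2S), where S is the chirp slope. The sketch below assumes FMCW and uses hypothetical chirp parameters; it ignores the Doppler contribution to f_b, which practical systems separate out by processing many chirps.

```python
# Minimal FMCW range sketch. Assumes a linear chirp; the bandwidth and
# duration used below are hypothetical, not taken from any product.
C = 299_792_458.0  # speed of light, m/s


def chirp_slope(bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Sweep rate S of a linear chirp, in Hz per second."""
    return bandwidth_hz / chirp_duration_s


def range_from_beat(beat_hz: float, slope_hz_per_s: float) -> float:
    """Target range R = c * f_b / (2 * S); ignores any Doppler shift."""
    return C * beat_hz / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    # Hypothetical chirp: 1 GHz swept in 50 microseconds (S = 2e13 Hz/s).
    s = chirp_slope(bandwidth_hz=1e9, chirp_duration_s=50e-6)
    print(f"{range_from_beat(beat_hz=2e6, slope_hz_per_s=s):.2f} m")  # prints "14.99 m"
```

With these assumed numbers, a 2 MHz beat tone corresponds to a target about 15 m away; doubling the beat frequency doubles the range.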

Radar can also scan the surrounding environment. Because it works in the dark and in adverse weather and is relatively inexpensive, it has become a critical sensor for applications such as collision avoidance.

Similarly, LiDAR is a sensing technology whose main task is to detect objects and map their distances. This is achieved by illuminating a target with an optical pulse (whose width ranges from a few nanoseconds to several microseconds) and measuring the characteristics of the reflected return signal.
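Pulse width matters because it limits how close together two targets along the same beam can be and still produce separate echoes. A common first-order rule is ΔR = c·τ / 2. The sketch below evaluates it for two example widths at either end of the span the text mentions (5 ns and 5 µs are illustrative choices); it ignores detector bandwidth and pulse-shape processing, which can change the practical figure.

```python
# First-order range resolution of a pulsed LiDAR: two echoes closer than
# c * tau / 2 overlap in time and cannot be separated. Ignores detector
# bandwidth and any pulse-shape processing.
C = 299_792_458.0  # speed of light, m/s


def pulse_range_resolution(pulse_width_s: float) -> float:
    """Minimum resolvable separation (m) between two targets along one beam."""
    return C * pulse_width_s / 2.0


if __name__ == "__main__":
    # Example widths at the short and long ends of the span in the text.
    for tau in (5e-9, 5e-6):
        print(f"{tau * 1e9:g} ns -> {pulse_range_resolution(tau):.2f} m")
```

A 5 ns pulse resolves targets about 0.75 m apart, while a 5 µs pulse smears echoes over roughly 750 m, which is why short pulses are attractive when fine range detail is needed.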

Key factors for extracting useful information from the returned light are pulse power, round-trip time, phase shift, and pulse width. Although several types of LiDAR systems are available, they can be grouped by beam-steering type into two categories: mechanical and optical (solid-state) LiDARs.
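Of the factors listed, round-trip time is the basis of pulsed ranging, while phase shift is used by continuous-wave systems that modulate the amplitude of the emitted light. The sketch below illustrates the textbook phase-shift relation d = c·Δφ / (4π·f_mod) and its unambiguous-range limit. It is a generic example, not a description of any product in this article, and the 10 MHz modulation frequency is a hypothetical choice.

```python
import math

# Phase-shift ranging sketch for an amplitude-modulated continuous-wave
# (AMCW) LiDAR. The 10 MHz modulation frequency used below is hypothetical.
C = 299_792_458.0  # speed of light, m/s


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond c / (2 * f_mod) the phase wraps and distances alias."""
    return C / (2.0 * mod_freq_hz)


def range_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance d = c * dphi / (4 * pi * f_mod), with dphi wrapped to [0, 2*pi)."""
    wrapped = phase_rad % (2.0 * math.pi)
    return C * wrapped / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    f_mod = 10e6
    print(f"unambiguous range: {unambiguous_range(f_mod):.2f} m")
    print(f"quarter-cycle shift: {range_from_phase(math.pi / 2, f_mod):.2f} m")
```

The design trade-off is visible in `unambiguous_range`: raising the modulation frequency improves distance precision per radian of phase but shrinks the range before the phase wraps.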

A mechanical LiDAR relies on high-grade optics and a rotating assembly to create a wide field of view (FoV), up to 360°. Its signal-to-noise ratio is excellent across the FoV, but the solution is bulky and heavy. Solid-state LiDARs, on the other hand, have no spinning mechanical parts, which makes them highly reliable. Their FoV is narrower, but this limitation can be overcome.

Yole analysts expect the ADAS market to exceed $60 billion in 2026, growing at a 6.5% compound annual growth rate (CAGR) from 2020 to 2026. ADAS is part of the automotive connectivity, autonomous, sharing/subscription, and electrification (CASE) industry, which is forecast to reach $318 billion by 2035.
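The forecast gives an end value and a growth rate but not the 2020 starting point. Back-calculating with start = end / (1 + r)^years shows what base the quoted figures imply. This is simple arithmetic on Yole’s numbers, not a figure Yole reported, and because the 2026 forecast is “more than” $60 billion, the result is a lower bound.

```python
# Back-calculates the starting value implied by an end value and a compound
# annual growth rate (CAGR): start = end / (1 + r) ** years.


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    return end_value / (1.0 + cagr) ** years


if __name__ == "__main__":
    # Yole's figures: more than $60 billion in 2026, 6.5% CAGR over 2020-2026.
    start_2020 = implied_start_value(end_value=60.0, cagr=0.065, years=6)
    print(f"Implied 2020 ADAS market: about ${start_2020:.1f} billion")
```

Six years at 6.5% multiplies the base by about 1.46, so the forecast implies a 2020 ADAS market of roughly $41 billion or more.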

A key driver of this growth is continuous innovation and the integration of new functionality. For example, Yole said that more than 80 LiDAR companies have been founded since Velodyne introduced its 3D real-time LiDAR in 2005, many of them based on new technologies.

(Source: Yole)

True solid-state LiDAR

One of those innovations is a solid-state LiDAR from XenomatiX, designed for ADAS and autonomous driving. It takes a fundamentally different approach from conventional optical LiDARs, which measure sequentially: they send laser light in one direction, take a measurement, move on to the next position, and so build up the surrounding scene step by step.

With XenomatiX’s approach, the whole scene is captured in “one flash” without being limited to short range or requiring high power: range exceeds 200 meters with normal power consumption. Unlike those from scanning LiDARs, the high-resolution point clouds need no post-processing for time-space correction, which allows a much higher frame rate and yields more accurate data.

As a result, XenomatiX’s LiDAR does not need to sweep a beam rapidly, as conventional “point and measure” optical systems must. Because the scene is measured by sending all the beams at the same time, without any scanning, the system has more time to process the high-resolution grid of measurement points.

Today, many LiDAR systems are still mechanical, though there is a strong trend toward solid-state technologies. Mechanical systems use spinning heads, which makes them bulky, heavy, and expensive. Technologies such as oscillating mirrors have been adopted to shrink the solution, but the result is still a partly mechanical device.

XenomatiX, founded in 2013 and headquartered in Leuven, Belgium, introduced the term “true” to identify solid-state LiDAR systems that are built with a semiconductor-based laser source and detector and have no scanning or moving parts. The company’s approach addresses the problem of measuring while in motion, because it removes the lag introduced by scanning sensors as they sweep through their scan pattern.

This concept is well suited to automotive applications because it eliminates the need to compensate for motion: all the beams are sent out at exactly the same time, and all the points are acquired simultaneously via a global shutter.
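The motion-compensation problem can be made concrete by tracking when each point in a frame is captured. A scanning sensor captures points one after another across the frame period, so by the last point the vehicle has moved; a flash sensor with a global shutter captures every point at the same instant. The frame period, speed, and point count below are hypothetical, and real scanning LiDARs use a variety of scan patterns.

```python
# Compares when each point in a frame is captured by a scanning LiDAR
# versus a flash (global-shutter) LiDAR, and how far the vehicle has moved
# by then. Scan period, speed, and point count are hypothetical examples.


def ego_travel_at_capture(n_points: int, frame_period_s: float,
                          speed_mps: float, flash: bool) -> list[float]:
    """Vehicle travel (m) since the first point of the frame, per point.

    A scanning sensor captures points one after another across the frame
    period; a flash sensor captures them all at the same instant.
    """
    if flash:
        return [0.0] * n_points
    dt = frame_period_s / n_points  # time between consecutive points
    return [speed_mps * i * dt for i in range(n_points)]


if __name__ == "__main__":
    # 10 Hz scan (100 ms per frame) at 30 m/s (108 km/h).
    scan = ego_travel_at_capture(1000, 0.1, 30.0, flash=False)
    flash = ego_travel_at_capture(1000, 0.1, 30.0, flash=True)
    print(f"scanning: vehicle moved {scan[-1]:.2f} m during the frame")
    print(f"flash:    vehicle moved {flash[-1]:.2f} m during the frame")
```

Under these assumptions the scanning frame spans about 3 m of vehicle travel, so each point must be shifted by its own offset before the cloud is geometrically consistent; the flash frame needs no such per-point correction.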

The XenoLidar-X true-solid-state LiDAR is designed to be effective in scenarios in which lighting and weather conditions vary widely. XenomatiX’s next-generation solid-state solution projects 15,000 laser beams simultaneously, improving resolution to 0.15° horizontally and vertically, in line with today’s most demanding market requirements.
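An angular resolution translates into a linear spacing between measurement points that grows with distance: s = 2·d·tan(θ/2). The sketch evaluates this for the quoted 0.15° pitch, assuming a uniform grid and ignoring beam divergence and spot size.

```python
import math


def spot_spacing_m(distance_m: float, angular_resolution_deg: float) -> float:
    """Linear spacing between adjacent measurement points at a given distance,
    for a uniform grid with the given angular pitch: 2 * d * tan(theta / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(angular_resolution_deg) / 2.0)


if __name__ == "__main__":
    for d in (50, 100, 200):
        print(f"{d:>3} m: {100 * spot_spacing_m(d, 0.15):.1f} cm between points")
```

At 0.15°, neighboring points are about 13 cm apart at 50 m and about 52 cm apart at 200 m, which gives a feel for how large an object must be to be hit by several points at long range.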

XenomatiX’s XenoLidar-X LiDAR (Source: XenomatiX)

In its solid-state LiDARs, XenomatiX uses vertical-cavity surface-emitting lasers (VCSELs), low-power laser sources with very good durability and a much longer lifetime than conventional edge-emitting diode lasers.

The company calls its solutions 6D LiDARs because they provide two types of output with perfect overlay. The first is a point cloud, the 3D geometry of all the detected laser spots. The second is a visual 2D camera image. The sensor can thus be seen as a LiDAR with a built-in camera, or as a camera with LiDAR performance and no parallax error. This redundant data enables sensor fusion, providing complementary information that supports safety applications. Together, the 3D point cloud and the 2D image account for five dimensions; the sixth is the reflectivity of objects, based on the amount of laser light returned.
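One way to picture the 6D output is as a per-point record: x, y, and z from the point cloud, a pixel position in the overlaid 2D image, and reflectivity. The article does not describe XenomatiX’s data format, so the `Point6D` fields and the `attach_image_values` helper below are hypothetical. The sketch shows why perfect overlay simplifies fusion: pairing a 3D point with its image value becomes a direct index rather than a calibrated projection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point6D:
    """One measurement combining the three kinds of output in the text.

    Hypothetical layout for illustration; not XenomatiX's data format.
    """
    x: float             # 3D position from the point cloud, meters
    y: float
    z: float
    row: int             # pixel in the co-registered 2D camera image
    col: int
    reflectivity: float  # share of emitted laser light returned, 0..1


def attach_image_values(points, image):
    """Pair each 3D point with the gray level of the image pixel it overlays.

    Because the point cloud and the image come from the same pixels (no
    parallax), the lookup is a direct index; a separate camera would need a
    calibrated projection first.
    """
    return [(p, image[p.row][p.col]) for p in points]


if __name__ == "__main__":
    image = [[10, 20], [30, 40]]  # tiny 2x2 grayscale frame
    p = Point6D(x=12.0, y=-1.5, z=0.4, row=1, col=0, reflectivity=0.35)
    print(attach_image_values([p], image))
```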

The specially designed CMOS detector can operate in 2D or 3D mode, and proprietary AI algorithms process the resulting visual image or point cloud.

XenomatiX calls this four-dimensional AI: pattern recognition in a 4D space that combines the x, y, and z coordinates with the intensity of the reflected laser beam. The sensor is also designed to work as a 2D detector when the laser is off. When the laser is on, the system uses the same pixels to make 3D measurements and generate the point cloud.

Solid-state LiDARs also offer excellent reliability, a key factor in automotive applications. Their mean time between failures (MTBF) is long thanks to the absence of moving parts, the long lifetime of VCSELs, and the maturity of CMOS technology.
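The link between fewer parts and a longer MTBF follows from a simple series-system model: if any component failure disables the sensor and failure rates are constant, the rates add and MTBF is the reciprocal of the total. The FIT values (failures per 10⁹ device-hours) below are invented for illustration and do not describe any real sensor.

```python
# Series-system reliability under a constant-failure-rate assumption:
# failure rates add, and MTBF is the reciprocal of the total rate.
# FIT = failures per 1e9 device-hours. All rates below are hypothetical.


def mtbf_hours(fit_by_component: dict[str, float]) -> float:
    total_fit = sum(fit_by_component.values())
    return 1e9 / total_fit


if __name__ == "__main__":
    solid_state = {"laser": 50.0, "detector": 20.0, "electronics": 100.0}
    mechanical = {**solid_state, "motor_and_bearings": 500.0}
    print(f"solid-state: {mtbf_hours(solid_state):,.0f} h")
    print(f"mechanical:  {mtbf_hours(mechanical):,.0f} h")
```

Because rates add, a single wear-prone component with a high failure rate can dominate the total, which is why removing the moving assembly has an outsized effect in this model.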

In addition to automotive applications, this LiDAR technology can be used for 3D aerial and geographic mapping, factory safety systems, smart ammunition, and gas analysis.

4D imaging radar chip

Improving the “eyes” of ADAS extends beyond LiDAR to new sensors capable of handling the complex driving scenarios of Level 4, or high automation.

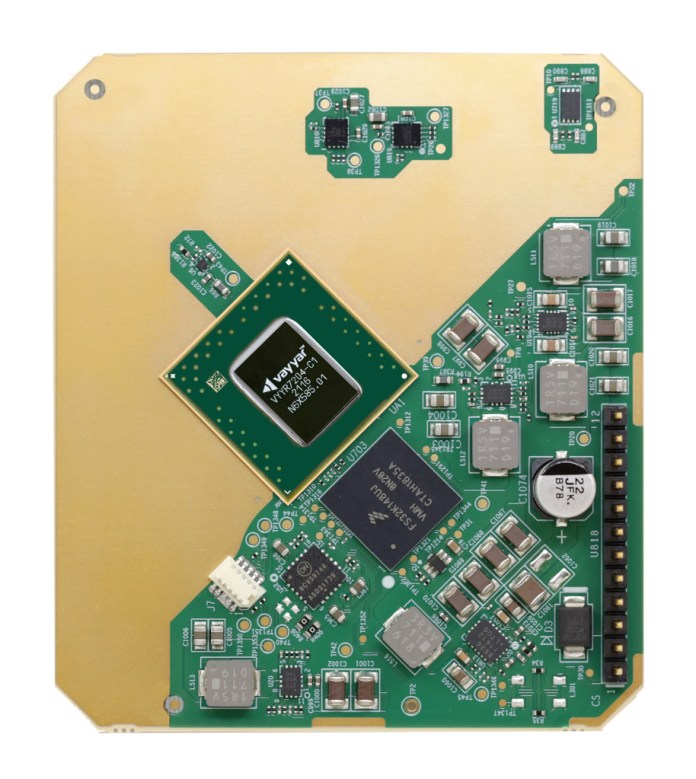

Among the developers is Vayyar Imaging, an Israeli sensor specialist. The company’s XRR platform for ADAS is a single 4D imaging radar chip with a range of up to 300 meters and a 180° FoV, and it operates without an external processor.

The 4D feature refers to the chip’s ability to measure distance and relative velocity along with the azimuth of objects and their height relative to the road level.
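The four quantities map naturally onto a per-detection record, and a standard spherical-to-Cartesian conversion turns range, azimuth, and elevation into a position with a height above the road. The record layout, sign conventions, and mounting height below are assumptions for illustration, not Vayyar’s interface; the sketch also assumes a level sensor and a flat road.

```python
import math
from typing import NamedTuple


class Detection4D(NamedTuple):
    """One radar detection; field names and sign convention are assumptions."""
    range_m: float
    azimuth_deg: float          # left/right of boresight, positive to the left
    elevation_deg: float        # above the sensor's horizontal plane
    radial_velocity_mps: float  # rate of change of range; negative = approaching


def position_in_vehicle_frame(d: Detection4D, mount_height_m: float):
    """Return (forward, left, height above road) in meters.

    Assumes a level sensor at mount_height_m above a flat road.
    """
    az = math.radians(d.azimuth_deg)
    el = math.radians(d.elevation_deg)
    ground_range = d.range_m * math.cos(el)  # horizontal component of range
    forward = ground_range * math.cos(az)
    left = ground_range * math.sin(az)
    height = mount_height_m + d.range_m * math.sin(el)
    return forward, left, height


if __name__ == "__main__":
    # A detection 80 m away, 5 degrees left, slightly above the sensor.
    det = Detection4D(80.0, 5.0, 2.0, -3.0)
    print(position_in_vehicle_frame(det, mount_height_m=0.5))
```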

The new platform is built around a 48-antenna MIMO array and is AEC-Q100-qualified and ASIL-B-compliant. Vayyar says the RFIC eliminates the need for external devices such as LiDAR sensors, reducing cabling costs, power consumption, and integration effort.
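In a MIMO radar, each transmitter-receiver pair acts as one virtual channel, so n_tx transmitters and n_rx receivers yield n_tx × n_rx channels, provided the receivers can separate the transmitters’ signals. The article does not say how Vayyar divides its 48 antennas between transmit and receive, so the splits below are purely illustrative; they show how 48 physical antennas can produce hundreds of virtual channels, the same order as the nearly 500 cited for the chip.

```python
# MIMO virtual array: each transmitter-receiver pair acts as one virtual
# channel, so n_tx transmitters and n_rx receivers give n_tx * n_rx channels.
# The transmit/receive splits below are hypothetical.


def virtual_channels(n_tx: int, n_rx: int) -> int:
    return n_tx * n_rx


def best_split(total_antennas: int) -> tuple[int, int]:
    """Transmit/receive split of a fixed antenna count that maximizes channels."""
    return max(
        ((n_tx, total_antennas - n_tx) for n_tx in range(1, total_antennas)),
        key=lambda split: virtual_channels(*split),
    )


if __name__ == "__main__":
    for n_tx, n_rx in [(12, 36), (16, 32), best_split(48)]:
        print(f"{n_tx} Tx x {n_rx} Rx = {virtual_channels(n_tx, n_rx)} virtual channels")
```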

The multi-range XRR chip operates in the 76- to 81-GHz radar bands and can differentiate among static obstacles (such as dividers, curbs, and parked vehicles), moving vehicles, and other hazards.
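For a chirped radar, the swept bandwidth B sets range resolution: ΔR = c / (2B). The 76-81 GHz allocation offers up to 5 GHz, which is one reason it suits fine-grained imaging. The article does not give the chip’s actual chirp bandwidth, so 5 GHz here is the band’s full width (an upper bound), and 1 GHz is included only for comparison.

```python
# Range resolution of a chirped radar is set by the swept bandwidth B:
# delta_R = c / (2 * B). Whether a given chip sweeps the full band in one
# chirp is an assumption here.
C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    return C / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    full_band_hz = 81e9 - 76e9  # 5 GHz, the width of the 76-81 GHz band
    for bw in (1e9, full_band_hz):
        print(f"{bw / 1e9:.0f} GHz sweep -> {100 * range_resolution_m(bw):.1f} cm")
```

A full 5 GHz sweep would separate reflectors about 3 cm apart in range, versus about 15 cm for a 1 GHz sweep.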

In low-speed environments such as parking lots, the chip scans the surroundings for pedestrians and obstacles using ultra-short and short-range radar imaging detection. At longer ranges, the radar chip enables ADAS applications such as adaptive cruise control, blind-spot detection, collision warning, cross-traffic alerts, and autonomous emergency braking.

Board with Vayyar’s XRR chip (Source: Vayyar Imaging)

The 4D imaging radar provides nearly 500 virtual channels (far more than traditional radar). Unlike cameras and LiDARs, 4D imaging radar works in all conditions, including fog, heavy rain, and darkness. Its longer range meets the requirements of higher levels of vehicle automation. Radar also captures Doppler shifts, which reveal whether an object is moving toward or away from the vehicle.
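For a monostatic radar, the Doppler shift is f_d = 2·v·f_c / c, where v is the closing speed and f_c the carrier frequency; the sign of f_d indicates approach or recession, and its magnitude gives the speed. The sketch uses 77 GHz as an example carrier inside the 76-81 GHz band and adopts the convention that positive means approaching; both choices are assumptions for illustration.

```python
# Doppler relation for a monostatic radar: f_d = 2 * v_r * f_c / c, where
# v_r is the closing speed (positive = approaching in this sketch).
C = 299_792_458.0  # speed of light, m/s


def doppler_shift_hz(closing_speed_mps: float, carrier_hz: float) -> float:
    return 2.0 * closing_speed_mps * carrier_hz / C


def closing_speed_mps(doppler_hz: float, carrier_hz: float) -> float:
    """Inverse relation; a negative result means the object is moving away."""
    return doppler_hz * C / (2.0 * carrier_hz)


if __name__ == "__main__":
    fc = 77e9  # a carrier inside the 76-81 GHz automotive band
    for v in (30.0, -10.0):
        print(f"{v:+.0f} m/s -> {doppler_shift_hz(v, fc):+.0f} Hz")
```

At 77 GHz, an object closing at 30 m/s shifts the return by roughly 15 kHz, while a receding object produces a negative shift.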

The 4D imaging radar scans its surroundings using the echo principle, measuring the time of flight of reflected radio waves. In addition to its 300-meter range, the radar performs well in snowstorms, when optical imaging is very difficult.
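Time-of-flight ranging reduces to one relation: the echo travels out and back, so range = c·t / 2. The sketch computes the round-trip time for the quoted 300-meter maximum range; it is the generic relation, not a description of Vayyar’s waveform.

```python
# Time-of-flight ranging: the echo travels out and back, so
# range = c * t / 2 and t = 2 * range / c.
C = 299_792_458.0  # speed of light, m/s


def round_trip_time_s(range_m: float) -> float:
    return 2.0 * range_m / C


def range_from_round_trip_m(time_s: float) -> float:
    return C * time_s / 2.0


if __name__ == "__main__":
    t = round_trip_time_s(300.0)  # the radar's quoted maximum range
    print(f"300 m echo returns after {t * 1e6:.3f} microseconds")
```

An echo from 300 m returns after about 2 µs, so timing errors of a few nanoseconds translate into range errors of under a meter.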

The 4D sensor combines its time-based measurements with elevation to analyze the 3D environment. This helps it detect and identify stationary objects along the roadway.
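A stationary object’s Doppler is determined entirely by the vehicle’s own motion, so comparing each detection’s measured range rate with the value ego motion predicts is one common way to flag stationary objects. The article does not describe Vayyar’s algorithm; this is an illustration that assumes a forward-facing sensor, straight-line driving with no yaw rate, a range-rate sign convention where negative means closing, and a hypothetical 0.5 m/s tolerance. The elevation term uses the height information a 4D sensor provides.

```python
import math

# Ego-motion check for stationary objects: if the vehicle drives straight
# ahead at v_ego, a stationary object seen at azimuth az and elevation el
# shows a range rate of -v_ego * cos(az) * cos(el) (negative = closing).
# Assumes a forward-facing sensor, no yaw rate, and a hypothetical tolerance.


def expected_static_range_rate(ego_speed_mps: float, azimuth_deg: float,
                               elevation_deg: float = 0.0) -> float:
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return -ego_speed_mps * math.cos(az) * math.cos(el)


def is_stationary(range_rate_mps: float, ego_speed_mps: float,
                  azimuth_deg: float, elevation_deg: float = 0.0,
                  tol_mps: float = 0.5) -> bool:
    expected = expected_static_range_rate(ego_speed_mps, azimuth_deg, elevation_deg)
    return abs(range_rate_mps - expected) <= tol_mps


if __name__ == "__main__":
    ego = 20.0  # m/s
    print(is_stationary(-20.0, ego, 0.0))   # True: parked car straight ahead
    print(is_stationary(-10.0, ego, 60.0))  # True: stationary object off to the side
    print(is_stationary(0.0, ego, 0.0))     # False: car ahead matching our speed
```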

Scanning the environment around the vehicle with increasing precision and definition gives the on-board electronics more data to interpret, which in turn demands higher processing speeds for ADAS applications. The result, Vayyar said, is greater reliability.

The company’s radar-on-chip also incorporates an internal DSP and MCU for real-time signal processing without the need for an external CPU.
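The article does not describe the chip’s processing chain. As an illustration of the kind of work an on-chip DSP performs, the sketch below assumes an FMCW radar: it transforms a sampled beat signal to the frequency domain, finds the peak, and converts that frequency to range with R = c·f_b / (2S). All radar parameters are hypothetical, and a naive DFT is used to keep the example dependency-free where a real DSP would use an FFT.

```python
import cmath
import math

# Range processing of the kind an on-chip DSP performs for an FMCW radar:
# transform the sampled beat signal to the frequency domain, find the peak,
# and convert its frequency to range. A naive DFT keeps this dependency-free;
# real DSPs use an FFT. All radar parameters here are hypothetical.
C = 299_792_458.0  # speed of light, m/s


def dft_magnitudes(samples: list[complex]) -> list[float]:
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples)))
        for k in range(n)
    ]


def estimate_range_m(samples: list[complex], sample_rate_hz: float,
                     slope_hz_per_s: float) -> float:
    n = len(samples)
    mags = dft_magnitudes(samples)
    # Complex baseband: bins 0..n/2-1 hold the positive beat frequencies.
    peak_bin = max(range(n // 2), key=lambda k: mags[k])
    beat_hz = peak_bin * sample_rate_hz / n
    return C * beat_hz / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    fs, n, slope = 10e6, 64, 2e13   # sample rate, samples, chirp slope
    beat = 1.25e6                   # simulated target's beat frequency
    samples = [cmath.exp(2j * math.pi * beat * i / fs) for i in range(n)]
    print(f"estimated range: {estimate_range_m(samples, fs, slope):.2f} m")
```

Here the simulated 1.25 MHz beat tone lands exactly on DFT bin 8, and the estimate comes out at about 9.37 m; real targets fall between bins, which is one reason production chains add windowing and interpolation.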
