Sometime in 1972 a digital item was created.

Let’s say an employee worked 8 hours that day, so the number 8 was carefully placed into 16 bits of core memory. This core memory was somewhere inside a mainframe or minicomputer with an IBM, DEC, or Honeywell label on its front panel. Each bit of core memory was held by a tiny donut-shaped ferrite core with wires threaded through it. Transistors in a TTL logic IC pulled current through the wires, and a single card full of core held 32 Kbytes.
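To make that concrete, here is a minimal Python sketch of what "the number 8 in 16 bits" looks like, one bit per core. The function name `to_core_bits` is made up for illustration, and the core count assumes 1 Kbyte = 1024 bytes and one core per bit with no parity or spare cores, which a real card may well have had.

```python
def to_core_bits(value, width=16):
    """Return the bits of value, most significant first, as a list of 0s and 1s."""
    if not 0 <= value < 2 ** width:
        raise ValueError("value does not fit in the given word width")
    return [(value >> i) & 1 for i in reversed(range(width))]


# The 8 hours worked, as it would sit in one 16-bit word (one core per bit).
bits = to_core_bits(8)
print("".join(str(b) for b in bits))

# Assumption: 1 Kbyte = 1024 bytes, 8 bits per byte, one core per bit.
cores_per_card = 32 * 1024 * 8
print(cores_per_card, "cores on a 32 Kbyte card")
```

Only one of the 16 cores in that word is set; the other 15 are there to hold zeros.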

There were millions of digital items created that day in 1972. The program that made the digital item was written in COBOL, or Fortran, or maybe the recently released Smalltalk language. That one digital item was later moved from core memory to an IBM 3330 disk storage unit with a capacity of 200 Mbytes and a 30 ms average access time. The 8-inch floppy disk from IBM was emerging just about then, with a capacity of about 80 Kbytes.

And now look at what we have done. People are making digital items by the zillions.

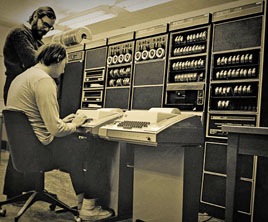

Figure 1: Ken Thompson (seated) and Dennis Ritchie at a PDP-11 in 1972.

I’m sure you have heard all the IoT talk. The annual global IP traffic is predicted to pass a zettabyte (zetta = 10^21) by the end of 2016. But that’s just part of it. Think about how many items are created every day that never get sent on the web, like all those photos you took on the trip to Yellowstone last year and then transferred to your desktop or laptop, never to be seen again.

All that stuff, plus all the traffic, is at some point in memory. It’s in RAM, it’s in flash, it’s on a disk. These days it’s mostly in flash. Along with the huge processor ICs we have today, memory is the key to many advances in technology. If only I had a penny for every byte of memory in use right now...

Samsung recently said it is shipping a 32 Gbyte 3D V-NAND flash memory chip. This is not a module of some kind; it is a standard memory chip. In the device’s Charge Trap Flash (CTF) structure, cell arrays are stacked vertically to form a 48-story mass connected through some 1.8 billion channel holes punched through the arrays. So, back in 1972, we had 32 Kbytes on a board, and now we have 32 Gbytes on a chip.
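For a rough sense of that jump, here is a back-of-the-envelope Python sketch. It assumes binary prefixes (1 Kbyte = 1024 bytes, 1 Gbyte = 1024^3 bytes); with decimal prefixes the ratio comes out to an even million instead.

```python
# Assumption: binary prefixes for both figures.
KBYTE = 1024
GBYTE = 1024 ** 3

core_card_1972 = 32 * KBYTE   # one card full of core memory
vnand_chip = 32 * GBYTE       # one 3D V-NAND flash chip

# How many 1972 core cards it would take to match one chip.
ratio = vnand_chip // core_card_1972
print(f"One chip holds as much as {ratio:,} core cards")
```

That is roughly a million boards of core for every one of today's chips.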

And now Intel and Micron have jointly introduced 3D XPoint memory. They have not given out the details yet (they modestly call it a revolutionary breakthrough in memory technology). It is nonvolatile (NV) memory and is said to be up to 1,000 times faster than NAND flash and to offer “unique material compounds and a cross-point architecture” with density 10 times that of “conventional memory.” It is also said to have “up to” 1,000 times the endurance of NAND flash.

The other side of the revolution is programming languages, which have not changed a heck of a lot since 1972. Software engineers and coders are writing code for applications or embedded stuff using C, C++, Objective-C, Java, Python, or PHP, and a little Lisp here and there. You can still find job openings for COBOL and Fortran programmers. Crazy. Didn’t see any for PL/I or Pascal, at least.

Bloomberg Businessweek devoted its entire June 15, 2015 issue to the topic of “What Is Code?,” written by Paul Ford. It was well done. On pages 66 and 67 Mr. Ford attempts to list most languages. A good try, with nearly 500. We certainly have too many languages. Please stop. By the way, what is the best programming language?

If you have comments, tweet me at @jharris99 or email me at jharrison@aspencore.com.
