By Patrick Mannion, contributing editor

Artificial intelligence (AI), electrification, and in-cabin entertainment are just some of the revolutionary changes underway for automobiles, forcing a complete rethink of how an automobile should be designed and used. They are also prompting designers to rethink their own role in the automotive design chain.

From the standpoint of the environmental performance of semiconductors and components, the same rules apply: AEC-Q100, which has been around since 1994, defines the temperature, humidity, and other reliability requirements. Since 1994, however, much has changed, and soon, “auto” mobiles will start living up to their name, thanks in large part to advances in sensor integration, AI, and Moore’s Law, and to people in remote regions who make a living tagging images to make smart systems more accurate.

For example, accurate labeling can determine whether a system correctly distinguishes the sky from the side of a truck. Mighty AI is one company focused on ensuring accurate tagging using teams of humans spread around the globe. According to its founder, S. “Soma” Somasegar, humans will have a large role in this loop for a long time to come. “We’re not building a system to play a game; we’re building a system to save lives,” said Mighty AI CEO Daryn Nakhuda.

Getting to the point at which autonomous vehicles can be considered relatively safe for everyday use is an interesting challenge that has captured the imagination of automobile OEMs and electronic system designers and spurred innovations in sensors, processing, and communications.

For some time, it was believed that LiDAR would be the critical breakthrough technology for autonomous vehicles, but developers have since realized that it takes a combination of every available sensor input, including sonar, high-definition cameras, LiDAR, and radar, to ensure accurate ranging and identification of objects. According to GM, the autonomous version of its Chevy Bolt electric vehicle (EV) has 40 more sensors and 40% more hardware.

Lowering the cost and power consumption of that hardware, especially for advanced image processing, is one of many critical enabling factors for autonomous vehicles. To that end, Dream Chip Technologies announced an advanced driver assistance system (ADAS) system-on-chip (SoC) for computer vision at Mobile World Congress (MWC) that greatly increases performance while lowering power consumption.

The ADAS SoC was developed in collaboration with Arm, ArterisIP, Cadence, GlobalFoundries (GF), and Invecas as part of the European Commission’s ENIAC THINGS2DO reference development platform. It was developed on GF’s 22FDX technology to lower the power required for AI and neural network (NN) processing so that it can be embedded into a vehicle without the need for active cooling techniques, which can add weight, size, and cost while increasing the probability of failure.

The SoC combines Dream Chip’s image signal-processing pipeline, Cadence Tensilica P6 DSPs, and a quad-cluster of Arm Cortex-A53 processors to reach 1 tera-operations per second (TOPS) with power consumption “in the single digits.”
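To put that claim in context, compute efficiency is often quoted in TOPS per watt. The short Python sketch below assumes that “single digits” means 1 W to 9 W; the unit and exact figure are not stated, so the numbers are illustrative only.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return compute efficiency in TOPS/W (tera-operations per second per watt)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


# Assumption: "single digits" is read as 1 W to 9 W; the exact figure is not given.
for watts in (1.0, 5.0, 9.0):
    print(f"1 TOPS at {watts:.0f} W -> {tops_per_watt(1.0, watts):.2f} TOPS/W")
```

Anywhere in that assumed range, the efficiency works out to roughly 0.1 to 1 TOPS/W.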

Distributed vs. centralized sensor processing

The high image-processing performance that Dream Chip Technologies’ SoC delivers at low power is critical, given that latency must be minimized to avoid incidents. The farther a vehicle can see, and the sooner it can process what it sees, the safer it becomes. However, as mentioned, reasonably intelligent decision-making requires many sensors, which raises the question of where and how all of those sensor inputs should be processed.
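The link between latency and safety comes down to simple arithmetic: a vehicle keeps moving while its sensor data is being processed. The sketch below computes how far a car travels before a perception result is available; the speed and latency values are hypothetical examples, not measured figures for the Dream Chip SoC.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (m) traveled while the perception pipeline is still processing."""
    speed_mps = speed_kmh / 3.6               # convert km/h to m/s
    return speed_mps * (latency_ms / 1000.0)  # convert ms to s


# Hypothetical processing latencies at a highway speed of 100 km/h.
for latency_ms in (50, 100, 200):
    d = distance_during_latency_m(100.0, latency_ms)
    print(f"{latency_ms:>3} ms at 100 km/h -> {d:.2f} m traveled")
```

At 100 km/h, every 100 ms of processing latency means nearly 3 m of travel before the system can react.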

Sensor fusion techniques are well-known in applications such as drones, in which data from gyroscopes, accelerometers, and magnetometers is combined so that the strengths of each sensor are accentuated and its weaknesses attenuated. How can this be done for autonomous vehicles with so many varied sensors?
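A classic drone example is the complementary filter, which blends a gyroscope (smooth, but drifting when its rate is integrated) with an accelerometer (noisy, but drift-free when used as a gravity reference). The Python sketch below is a simplified single-axis version; the 0.98 blend factor, sample rate, and gyro bias are illustrative assumptions, and production flight controllers typically use more elaborate estimators.

```python
import math


def complementary_filter(gyro_rates, accel_samples, dt, alpha=0.98):
    """Fuse gyro rates (rad/s) with accelerometer (ax, az) readings into a tilt angle (rad).

    Integrating the gyro is smooth but drifts with bias; the accelerometer's
    gravity-based angle is noisy but does not drift. alpha weights the gyro path
    (acting as a high-pass) and 1 - alpha the accelerometer path (a low-pass).
    """
    ax0, az0 = accel_samples[0]
    angle = math.atan2(ax0, az0)  # initialize from the accelerometer
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accel_samples):
        accel_angle = math.atan2(ax, az)
        angle = alpha * (angle + rate * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Simulated stationary tilt of 0.1 rad; the gyro has a 0.01 rad/s bias.
true_angle, dt, n = 0.1, 0.01, 1000   # 100 Hz for 10 s
gyro = [0.01] * n                     # true rate is zero, so this is pure bias
# Accelerometer is noise-free here for simplicity.
accel = [(math.sin(true_angle), math.cos(true_angle))] * n
fused = complementary_filter(gyro, accel, dt)
gyro_only = true_angle + sum(rate * dt for rate in gyro)
print(f"gyro only: {gyro_only:.3f} rad, fused: {fused[-1]:.3f} rad")
```

Integrating the biased gyro alone drifts to about 0.2 rad after 10 s, while the fused estimate settles within about 5 mrad of the true 0.1 rad.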

To tackle this, Mentor Graphics decided to work backward, starting with Level 5 autonomy in mind. Its approach, called DRS360, fuses raw sensor data from LiDAR, radar, and cameras and processes it to develop a 360-degree real-time view of the vehicle’s surroundings. This centralized approach reduces latency but requires a high level of centralized processing, which Mentor provides using Xilinx Zynq UltraScale+ MPSoC FPGAs running its advanced NN algorithms. The alternative is to do the image, LiDAR, or radar processing locally at each sensor and send the results upstream, but that approach doesn’t scale as efficiently as DRS360, nor does it take full advantage of rapidly evolving algorithms. The downside of centralization is a single point of failure, but built-in redundancy and good design can offset that.
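The benefit of bringing every sensor’s data to one place can be illustrated with a much simpler technique than raw-data fusion: inverse-variance weighting of several sensors’ range estimates for the same object. The sketch below is not Mentor’s DRS360 algorithm; the `RangeMeasurement` type, sensor readings, and noise figures are hypothetical, and it assumes independent, known measurement errors.

```python
from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    sensor: str
    range_m: float   # measured distance to the same object
    sigma_m: float   # 1-sigma noise, assumed known and independent across sensors


def fuse_ranges(measurements):
    """Inverse-variance weighted fusion; returns (fused_range_m, fused_sigma_m)."""
    weights = [1.0 / m.sigma_m ** 2 for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    return fused, (1.0 / total) ** 0.5


# Hypothetical readings of one object, all available at a central processor.
readings = [
    RangeMeasurement("lidar", 50.0, 0.05),
    RangeMeasurement("radar", 50.4, 0.25),
    RangeMeasurement("camera", 48.0, 2.0),
]
fused, sigma = fuse_ranges(readings)
print(f"fused range: {fused:.2f} m (1-sigma {sigma:.3f} m)")
```

The fused uncertainty is smaller than that of any single sensor. A central node that sees every sensor can apply such weighting jointly, whereas a distributed design only receives each sensor’s already-processed result.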

The importance of automotive sensors is not lost on the MIPI Alliance, which is bringing its experience defining sensor physical-layer interfaces for mobile handsets to the rapidly evolving automotive space. On Oct. 7, it announced the formation of the MIPI Automotive Working Group (AWG) to standardize sensor interfaces, with the goals of lowering development costs, reducing testing time, and improving reliability. Given its background in mobile devices, the MIPI Alliance is also confident that it can help ensure that highly sensitive, mission-critical automotive applications suffer minimally from EMI.

Electrification of vehicles accelerating

Though electrification is often considered synonymous with autonomous vehicles, the move to replace the internal combustion engine (ICE) is proceeding at its own pace, with different drivers. Primarily, electric vehicles are seen as a more sustainable form of transportation. However, their large batteries add weight: up to 32% more than an equivalent ICE vehicle, according to Dan Scott, market director for integrated electrical systems at Mentor Graphics.

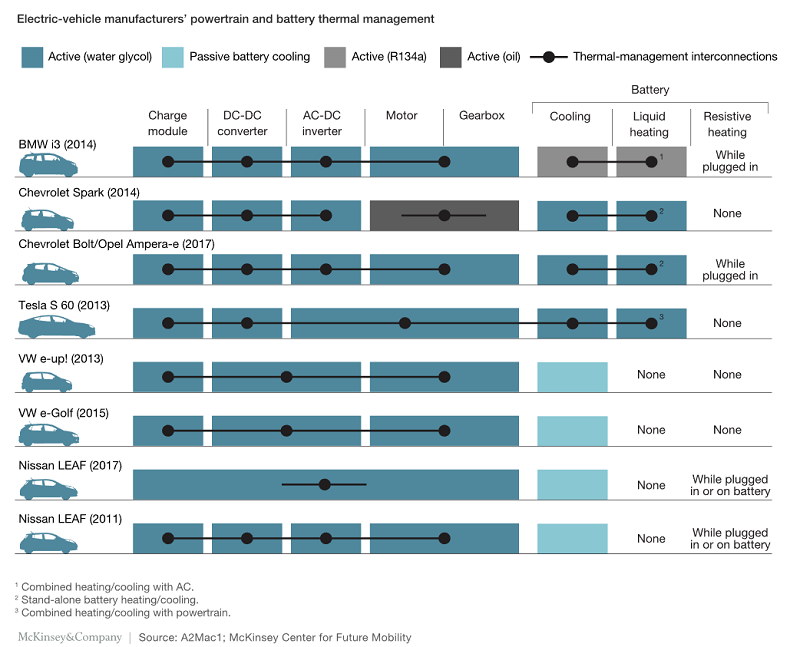

The issue, then, is to minimize weight, which points directly to cables, harnesses, and connectors. The other issue with electric vehicles is their higher power requirements, with currents of up to 250 A at 400 V for a typical 100-kW implementation. Such high currents increase resistive (I²R) losses to heat, which is driving a move to 800-V power for EVs. The higher voltage will also reduce cable weight, as will other techniques, such as removing shielding from high-power cabling. McKinsey & Company tore down eight EVs to see how they were differentiated, and power and thermal management proved to be an interesting factor, with no convergence on power-train design approaches yet (Fig. 1).
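The benefit of a higher bus voltage follows from P = V × I and the resistive loss P_loss = I²R. The sketch below holds delivered power fixed at the 100 kW implied by 250 A at 400 V; the 10-mΩ cable resistance is a hypothetical value chosen only for illustration.

```python
def current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn for a given power at a given bus voltage (I = P / V)."""
    return power_w / voltage_v


def conduction_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive heating in the cabling (P_loss = I^2 * R)."""
    return current_a(power_w, voltage_v) ** 2 * resistance_ohm


POWER_W = 100_000      # 250 A x 400 V = 100 kW
CABLE_R_OHM = 0.010    # hypothetical 10-milliohm round-trip cable resistance

for volts in (400, 800):
    amps = current_a(POWER_W, volts)
    loss = conduction_loss_w(POWER_W, volts, CABLE_R_OHM)
    print(f"{volts} V: {amps:.0f} A, {loss:.0f} W lost as heat in the cabling")
```

Doubling the voltage halves the current, so the same conductor dissipates one quarter of the heat; alternatively, a thinner and lighter conductor can carry the same power at the original loss, which is where the cable-weight savings come from.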

Fig. 1: A teardown of eight EVs showed large differences in how power trains are designed and how thermals are managed. Image: McKinsey & Company.

However, there are trade-offs associated with that, such as EMC issues, said Scott. “Also, with things like regenerative braking, now you have power electronics, motor controls, and battery chemistry to consider, so you have to share that data between different tools,” said Scott. “This requires a much more holistic design approach.”

For this, Mentor developed its Capital software suite for electrical and wire harness design and layout for automotive and aerospace applications. The goal of Capital is to maintain a seamless data flow from vehicle concept and electrical architecture definition to wire harness manufacture and vehicle maintenance.

“Capital excels at managing complex systems,” said Scott. “We’ve got generative design, where we can take basic architectures and auto-generate wiring diagrams from that.” While Capital doesn’t pick the specific components required, Scott said that Mentor does have a design services team that can help with next-level design decisions.

Comfort and services replacing performance

As vehicles evolve toward autonomy, comfort, entertainment, and services are increasingly seen as more important differentiators than performance and road “feel.” Ford has recognized and championed this migration toward services, and Intel has long promoted a focus on data, both from the vehicle and about its usage.

How vehicles are used is changing the business model, with younger users showing an affinity for subscriptions over vehicle ownership. They also like to be both informed and entertained, which inspired Imagination Technologies to announce its PowerVR Series8XT GT8540 four-cluster GPU IP for high-definition heads-up displays and infotainment. The GPU core can support multiple 4K video streams as well as up to eight applications and services simultaneously.


Learn more about Electronic Products Magazine