Written by Brian Santo, contributing writer

People keep expanding the limits on how much potential intrusion they are willing to tolerate in their homes. With the introduction of the Echo Look, Amazon might become the first corporation to figure out how to successfully de-creepify putting a camera in people’s bedrooms. The company is going to try to accomplish that feat by marketing the device as an enabler for a service called Style Check that tells you whether you’ve managed to dress yourself properly this time.

It started with video monitors as elements of home security systems. Later, webcams became common. More recently, fretful parents have been installing child-monitoring “nanny cams.” As predicted, both webcams and nanny cams have been hacked.

Companies offering home automation services (including cable companies) ran into some initial resistance to cameras that could record both video and audio. Many consumers thought that this constituted too much potential for eavesdropping, which is why some home security cameras still lack audio input.

Cable TV companies and their set-top vendors (and some TV manufacturers) keep trying to build cameras into their equipment. The supposed benefits are that if they can identify who is watching, they can automatically enforce parental controls, create an individual guide for each person in a household based on that person’s viewing habits, or select ads appropriate for each viewer. Most attempts to include cameras in TVs and set-tops have failed thus far because of privacy concerns, including a strong distaste for having someone else control cameras installed in bedrooms. That’s about where the line is today, and that’s the line Amazon is attempting to obliterate with Echo Look.

The Echo is a voice-activated device that is backed by Alexa, Amazon’s AI-based personal assistant. Users can direct Alexa to perform a growing number of functions that include fetching facts (“What time is it?” “How much does the sun weigh?”), playing tunes from Amazon Prime Music, and — if connected to home automation systems — changing settings on those systems. She will not open the pod bay doors (I have asked).

The Look is a $200 version of the Echo that incorporates a camera. With a voice command, it will take a still image or video. If you take two stills of two different outfits and invoke Style Check, Alexa will recommend one over the other based on algorithmic evaluations of several factors, such as how well each outfit conforms to current styles. Amazon promises that Style Check will be backed both by advice from human fashionistas and by machine learning.

The line of acceptable intrusion typically shifts on security concerns, but in attempting to get Look into people’s bedrooms, Amazon will be playing on a different concern: insecurity (“Do I look okay?”).

Echo Look has some built-in privacy safeguards. Voice activation is one: the device does nothing until it hears you say “Alexa” first, so it won’t take pictures unless you instruct it to. Amazon also assures users that they can delete their photos at any time.

Echo Look will also restrict its analysis to the user; Amazon told Wired that it will not analyze anything in the background.

Personal assistants such as Siri (Apple), Cortana (Microsoft), Hey Google (must I?), and Alexa exist to do one thing: sell you to advertisers. Amazon insists that it will not sell Alexa/Look data to third parties, apparently meaning that you will only get those ads from Amazon itself (“That outfit would look really smart with a pair of spinach-colored espadrilles. Would you like to see a selection?”).

What other privacy concerns might there be? This is speculative, but so were warnings about nanny cams getting hacked. Any technology that gets used eventually gets misused as well.

Images have always been information-rich. A pregnancy, for example, can be easy to spot. But now? The range of information that can be extracted is eye-popping. Researchers at Stanford have trained an AI to identify and diagnose skin cancers as effectively as human dermatologists, and any number of other ailments and conditions might be surmised from photographs. Amazon is encouraging people to use Style Check daily, which would build up a day-by-day photographic record of each user. Rapid weight gain or weight loss can be indicative of an ailment. Do we want Amazon to have that record?

Do we want anyone to have that record? No U.S. government agency is supposed to indiscriminately monitor citizens’ communications. But communications companies keep records, and various government agencies can easily access them, sometimes without having to make their requests public. What additional records of ourselves are we willing to risk falling into the hands of organizations that should not have that information?

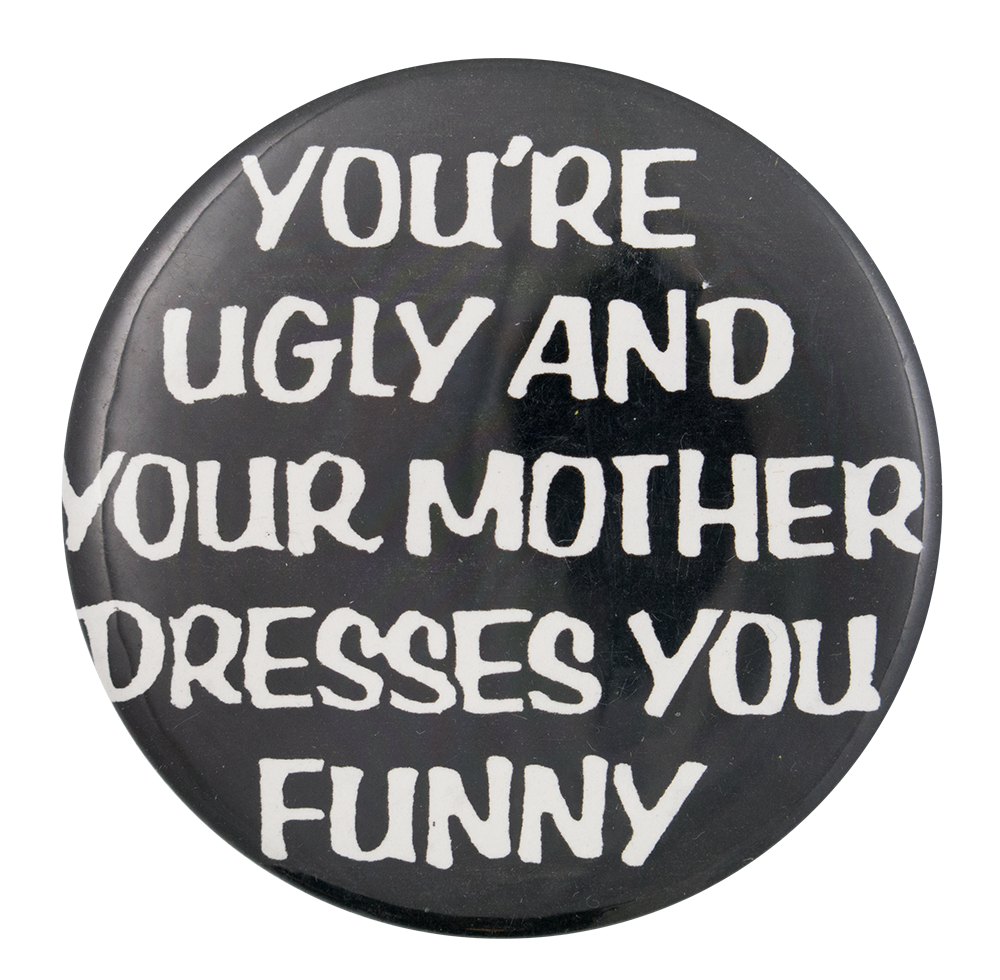

Image Source: Button Museum


Learn more about Electronic Products Magazine