By Gina Roos, editor-in-chief

When artificial intelligence (AI) processing moves from the cloud to the edge of the network, it challenges battery-powered and deeply embedded devices to perform AI functions such as computer vision and voice recognition, said Microchip Technology Inc. The company’s Silicon Storage Technology (SST) subsidiary said it has solved this challenge by significantly reducing power with a new analog memory technology called memBrain.

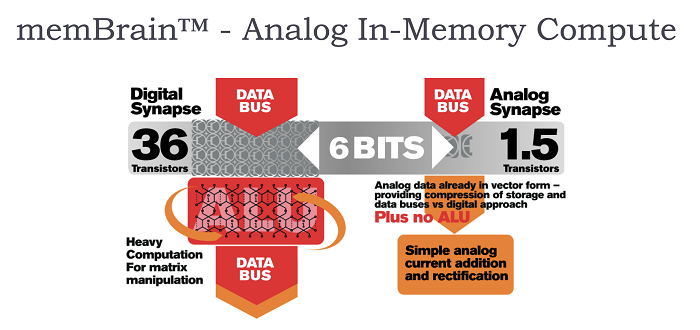

The analog memory technology is a neuromorphic memory solution based on SST’s SuperFlash technology and optimized to perform vector matrix multiplication (VMM) for neural networks. It improves system architecture implementation of VMM through an analog in-memory compute approach, which enhances AI inference at the edge.
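For readers unfamiliar with VMM, the operation can be sketched in a few lines of Python. This is only a digital illustration of the arithmetic a neural network layer performs, not SST's implementation: in an analog in-memory design, the multiply-and-accumulate steps would take place inside the memory array that holds the weights rather than in a software loop. The function name `vmm` and the sample values are hypothetical.

```python
def vmm(inputs, weights):
    """Multiply an input vector by a weight matrix.

    weights[i][j] is the synaptic weight connecting input i to output j.
    """
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    for i, x in enumerate(inputs):
        for j in range(n_out):
            # Each weight scales its input; contributions accumulate per output.
            outputs[j] += x * weights[i][j]
    return outputs


# Illustrative values only: three inputs feeding two output neurons.
activations = [1.0, 0.5, -2.0]
synaptic_weights = [
    [0.2, -0.1],
    [0.4, 0.3],
    [0.1, 0.5],
]
result = vmm(activations, synaptic_weights)
```

Every output requires one multiply-accumulate per input, so every weight must be read once per inference. That is why where the weights are stored matters so much for power and latency.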

Current neural network models can require 50 million or more synapses (weights) for processing. Providing enough bandwidth to off-chip DRAM to fetch those weights is a challenge, creating a bottleneck for neural network computing and increasing overall compute power, said Microchip.
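A rough back-of-the-envelope calculation shows how the weight count translates into memory traffic. Only the 50M figure comes from Microchip; the 1 byte per weight, the single read of each weight per inference, and the rate of 30 inferences per second are assumptions chosen purely for illustration, and the function name is hypothetical.

```python
def weight_traffic_bytes_per_second(num_weights, bytes_per_weight, inferences_per_second):
    """Estimate memory traffic if every weight is fetched once per inference."""
    return num_weights * bytes_per_weight * inferences_per_second


NUM_WEIGHTS = 50_000_000       # from the article: 50M or more synapses
BYTES_PER_WEIGHT = 1           # assumption: 8-bit weights
INFERENCES_PER_SECOND = 30     # assumption: e.g. a video-rate vision task

traffic = weight_traffic_bytes_per_second(
    NUM_WEIGHTS, BYTES_PER_WEIGHT, INFERENCES_PER_SECOND
)
print(f"{traffic / 1e9:.1f} GB/s of weight traffic")
```

Under these assumptions, the weights alone account for 1.5 GB/s of sustained off-chip reads, before any activations or intermediate results are moved.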

The memBrain solution stores synaptic weights in the on-chip floating gate, which improves system latency. Microchip said it offers 10 to 20 times lower power and a reduced overall bill of materials when compared to digital DSP and SRAM/DRAM-based solutions.

The analog embedded memory can be used in any AI application, including automotive, industrial, and consumer electronics. SST offers design services and a software toolkit for neural network model analysis.
