By Sally Ward-Foxton, contributing writer, EE Times

Arm has unveiled two new IP cores designed to power machine learning in endpoint devices, IoT devices, and other low-power, cost-sensitive applications. The Cortex-M55 microcontroller core is the first to use Arm’s Helium vector-processing technology, while the Ethos-U55 machine-learning accelerator is a micro-version of the company’s existing Ethos neural processing unit (NPU) family. The two cores are designed to be used together, though they can also be used separately.

Running AI and machine-learning applications on microcontrollers and other cost-sensitive, low-power, resource-constrained devices is known as tinyML. With the rise of 5G driving a trend toward more intelligence in endpoint devices, tinyML is expected to grow exponentially into a market that encompasses billions of consumer and industrial systems.

“When we look back five years from now, we may all agree that this time marked a true paradigm change in computing,” said Thomas Ensergueix, senior director for IoT and Embedded, Arm. “We have seen within a few years how AI has revolutionized how data analytics runs in the cloud, most of us have an AI-augmented smartphone in our pockets, and now, here is the next step: getting ready for AI everywhere.”

Machine learning, including voice-recognition and computer-vision applications, will increasingly take place in the microcontroller. A range of microcontroller alternatives is springing up, based on Arm cores and others, which Arm is targeting with these two new cores.

“We know all this data at the endpoint level cannot go back to the cloud,” said Ensergueix. “Video cameras in the home or smart city create literally gigabytes a day of data, and the infrastructure is not built for this upstream dataflow. We are convinced that what we need to scale toward billions or trillions of IoT endpoints, we will need AI inferencing capability directly in the IoT endpoint. And it needs to be secure.”

Cortex-M55

The latest addition to Arm’s well-known Cortex-M series for microcontrollers, the Cortex-M55 is designed to be Arm’s most AI-capable Cortex-M core. The M55 is the first to use Arm’s new Helium vector-processing technology, which promises 5× faster DSP performance and 15× faster ML performance, compared to previous Cortex-M generations. The core is based on the Armv8.1-M architecture and supports custom instructions, which can be created to optimize the processor for specific workloads, perhaps to squeeze out every last drop of power.

Combining the M55 and U55 takes advantage of the M55’s increased DSP horsepower, which can be used for signal pre-processing. However, the M55 can run neural network workloads itself. It features dedicated instructions for INT8 numbers, including dot product, which is commonly used in machine-learning applications.
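To make the INT8 dot product concrete, the short Python sketch below shows the arithmetic involved: pairs of 8-bit values are multiplied and the products are summed in a wider accumulator. It is an illustration only, not Helium code; the function name `int8_dot` and the sample vectors are hypothetical.

```python
def int8_dot(a, b):
    """Dot product of two equal-length INT8 vectors.

    Plain-Python illustration of the arithmetic; the accumulator is an
    ordinary Python int standing in for a wider hardware accumulator.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    for value in (*a, *b):
        if not -128 <= value <= 127:
            raise ValueError(f"{value} is outside the INT8 range")

    acc = 0  # products of two INT8 values do not fit in 8 bits
    for x, y in zip(a, b):
        acc += x * y
    return acc


# Hypothetical quantized activations and weights.
activations = [12, -7, 127, 0]
weights = [3, 5, -2, 64]
result = int8_dot(activations, weights)  # 36 - 35 - 254 + 0 = -253
```

A single product such as 127 × 127 already exceeds the INT8 range, which is why the sum is kept in a wider accumulator rather than in 8 bits.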

A successful AIoT application “depends on not only good compute performance but also being able to get the right data, the right coefficients, and the right machine-learning weights at the right time, so the memory interface of the processor has been optimized to be able to handle all the data in and out,” said Ensergueix. “It’s much more capable than any other Cortex-M core on this aspect.”

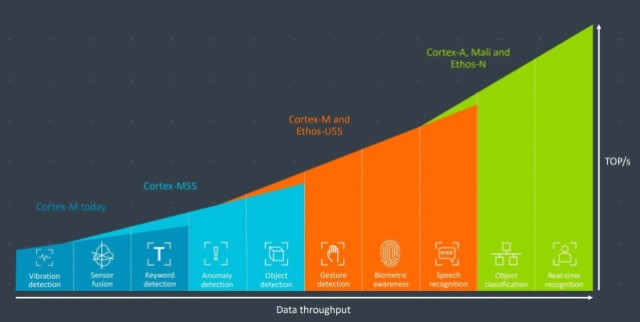

The combination of Cortex-M55 and Ethos-U55 has enough processing power for applications such as gesture recognition, biometrics, and speech recognition. (Image: Arm)

Ethos-U55

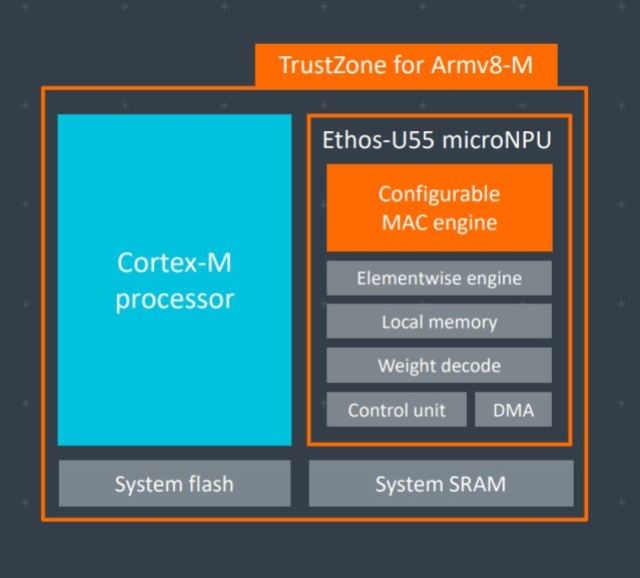

The Ethos-U55 is being billed as Arm’s first “micro-NPU,” offering up to 0.5 TOPS of acceleration (when implemented in smaller process geometries such as 16 nm or 7 nm and running at 1 GHz). Arm has not yet released power-efficiency figures (TOPS/W). It is configurable, with 32 to 256 multiply-accumulate units (MACs), and it has a weight decoder and direct memory access for on-the-fly weight decompression.
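The 0.5-TOPS figure is consistent with simple peak-throughput arithmetic, sketched below. The sketch assumes that each MAC unit completes one multiply-accumulate per clock cycle and that a multiply-accumulate counts as two operations; both are common conventions rather than details stated here, and the helper name `peak_tops` is hypothetical.

```python
def peak_tops(mac_units, clock_hz, ops_per_mac=2):
    """Peak throughput in tera-operations per second.

    Assumes one multiply-accumulate per MAC unit per cycle, with each
    multiply-accumulate counted as two operations (multiply + add).
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


largest = peak_tops(256, 1e9)   # 256 MACs at 1 GHz: about 0.512 TOPS
smallest = peak_tops(32, 1e9)   # 32 MACs at 1 GHz: about 0.064 TOPS
```

Under these assumptions, the largest configuration lands at roughly 0.5 TOPS and the smallest at about one-eighth of that. Real workloads would generally achieve less than this peak.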

The Ethos-U55 joins the Ethos N77, N57, and N37 — which, by comparison, offer 4, 2, and 1 TOPS, respectively. Performance can be scaled up by using multiple Ethos cores.

The Cortex-M55 and Ethos-U55 are designed to be used together but can be used separately as well. (Image: Arm)

Used together, the M55 and U55 can process ML tasks 480× faster than any previous-generation Cortex-M device alone. Arm says that typical figures for an end-to-end voice-assistant application using ML are a 50× speedup compared to using a Cortex-M7 alone and a 25× increase in power efficiency.

“The Cortex-M would run the application system code and then, when processing a neural network workload is required, the command stream for that is placed in SRAM, an interrupt is given to the U55, and it says, here, go work on this command stream,” explained Steve Roddy, vice president of the machine-learning group at Arm. “That could be a single inference of a single model. The U55 runs to completion, puts the results back in SRAM, and then lets the Cortex-M take over. Or it could be the type of situation where you run continuously while you’re doing some sort of processing of streaming data, perhaps audio or video.”
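The handoff Roddy describes can be sketched as a toy simulation: the host writes a command stream to shared memory, signals the accelerator, and reads the results back once the accelerator has run to completion. Everything in the sketch is hypothetical, including the class names, the `("scale", n)` and `("add", n)` commands, and the synchronous call that stands in for the interrupt; it is not Arm's driver API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Sram:
    """Shared memory visible to both the host core and the accelerator."""
    command_stream: list = field(default_factory=list)
    input_data: list = field(default_factory=list)
    results: Optional[list] = None


class ToyNpu:
    """Stand-in accelerator that runs a command stream to completion."""

    def __init__(self, sram):
        self.sram = sram
        self.done = False

    def raise_interrupt(self):
        # Triggered by the host: read commands and data from SRAM.
        data = list(self.sram.input_data)
        for op, operand in self.sram.command_stream:
            if op == "scale":
                data = [v * operand for v in data]
            elif op == "add":
                data = [v + operand for v in data]
            else:
                raise ValueError(f"unknown command: {op}")
        # Write results back to SRAM and signal completion.
        self.sram.results = data
        self.done = True


def run_inference(sram, npu, commands, inputs):
    """Host side: place the work in SRAM, signal the NPU, collect results."""
    sram.command_stream = commands
    sram.input_data = inputs
    sram.results = None
    npu.done = False
    npu.raise_interrupt()
    if not npu.done:
        raise RuntimeError("accelerator did not finish")
    return sram.results  # the host takes over again from here


sram = Sram()
npu = ToyNpu(sram)
out = run_inference(sram, npu, [("scale", 2), ("add", 1)], [1, 2, 3])
# out is [3, 5, 7]
```

For the streaming case Roddy mentions, the host would call `run_inference` repeatedly, once per incoming audio or video frame, instead of once per model.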

Silicon based on these new cores should hit the market in early 2021.

This article originally appeared at sister publication EE Times.
