BY TOM WONG

Director of Business Development, IP Group, Cadence Design Systems Inc.

www.cadence.com

Autonomous driving is a hot topic, and so is anything associated with it. Automotive systems-on-chip (SoCs) will play a critical role in making autonomous driving ubiquitous over the next couple of years. Within automotive SoCs, two major applications are driving the excitement: advanced driver assistance systems (ADAS) and infotainment.

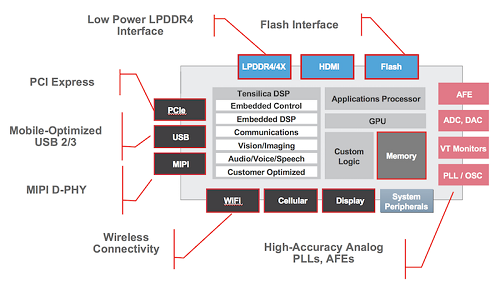

At first glance, this looks like a repeat of the smartphone application-processor evolution, in which a single-chip solution came to dominate as the brain of the smartphone (Fig. 1). One could argue that infotainment SoCs are simply automotive-hardened application processors, since the two share very similar attributes. For ADAS chips, however, the conclusion may be different: ADAS covers a collection of applications that may each require a dedicated solution rather than a one-size-fits-all chip.

Fig. 1: Block diagram of a typical smartphone application processor.

Key ADAS features

Let’s first examine the various driving assistance features found in modern automobiles. The names may vary depending on the manufacturer, but the following list is a good summary of the ADAS use cases available today:

Lane-departure warning system: It detects when the vehicle drifts out of a detected lane without the turn signal on. Flashing and beeping alerts get your attention so you can correct the drift.

Lane-keep assist and road-departure mitigation: When the vehicle begins to stray from the center of the detected lane without signaling, the system adjusts the steering to help bring you back to the lane center. If the vehicle detects that it is drifting too close to the edge of the road without a turn signal activated, the system alerts you with rapid vibrations of the steering wheel and then provides a mild steering input, or applies the brakes, to keep you from leaving the road.

Adaptive cruise control (ACC): This feature helps you maintain a safe distance between your car and the one ahead of you on the highway, so you don’t have to manually change your speed and readjust the cruise control.

Collision-avoidance system: It applies the brakes when it senses an imminent collision with a vehicle detected ahead of you. This type of system is also known as automatic emergency braking (AEB).

Forward-collision warning (FCW): This system uses a camera mounted at the top of the front windshield to detect a vehicle in front of you. If the FCW system determines that you are at risk of colliding with the detected vehicle, it activates audio and visual alerts to warn you. If you fail to act, the collision mitigation braking system (CMBS) can automatically apply brake pressure.

Blind-spot information system: It alerts you when your turn signal is on and a vehicle is detected in the adjacent lane. An indicator blinks and a beep sounds until the area is clear or the turn signal is off. Some systems also display live video on the in-dash screen, giving you a view four times larger than a typical passenger-side mirror provides.

Cross-traffic monitoring system: This feature activates the car’s multi-angle camera when you shift into reverse. A series of beeps and indicators on the in-dash display alert the driver to a vehicle detected approaching from the side.
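FCW and AEB both hinge on estimating how quickly the gap to a detected vehicle is closing. One common way to frame this is time-to-collision (TTC): the gap divided by the closing speed. The Python sketch below shows how a warning-then-braking escalation might be expressed with TTC. It is a simplified illustration only; the thresholds, the function names, and the rule that driver braking suppresses automatic braking are hypothetical assumptions, not taken from any production system.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"    # FCW-style audio and visual alert
    BRAKE = "brake"  # AEB/CMBS-style automatic brake application


# Hypothetical thresholds in seconds, chosen for illustration only.
WARN_TTC_S = 2.5
BRAKE_TTC_S = 1.2


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact if both speeds stay constant; infinity if the gap is not closing."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return float("inf")
    return gap_m / closing_speed


def collision_response(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                       driver_braking: bool = False) -> Action:
    """Escalate from no action, to a warning, to automatic braking as TTC shrinks."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    # Hypothetical simplification: if the driver is already braking, keep warning
    # instead of intervening.
    if ttc <= BRAKE_TTC_S and not driver_braking:
        return Action.BRAKE
    if ttc <= WARN_TTC_S:
        return Action.WARN
    return Action.NONE


if __name__ == "__main__":
    print(collision_response(40.0, 30.0, 10.0))   # TTC 2.0 s -> Action.WARN
    print(collision_response(20.0, 30.0, 10.0))   # TTC 1.0 s -> Action.BRAKE
    print(collision_response(100.0, 30.0, 25.0))  # TTC 20 s  -> Action.NONE
```

Using TTC rather than raw distance lets the same thresholds work across speeds: a 40 m gap is comfortable when the closing speed is 5 m/s but urgent when it is 20 m/s.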

Diverse ADAS requirements

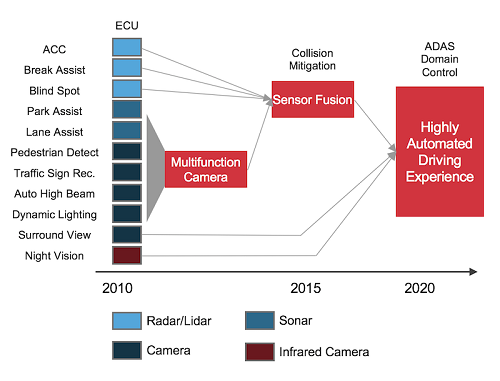

Fig. 2 dissects various ADAS functions and relates each to the type of sensing technology (radar, lidar, sonar, etc.) and the type of hardware required to realize it.

Fig. 2: Spectrum of ADAS control functions and associated sensing technology.

For example, lane assist, pedestrian detection, and traffic-sign recognition require high-resolution cameras. Collision-mitigation systems such as ACC, AEB, and blind-spot detection demand sensor fusion. And ADAS domain controllers will require high-performance compute resources and DSPs for data processing and analysis, and perhaps chips that can meet the computational needs of neural networks for Level 4 and Level 5 autonomous driving.
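At its simplest, sensor fusion combines measurements of the same quantity from different sensors, weighting each by how much it can be trusted. The sketch below fuses a radar range estimate and a camera range estimate by inverse-variance weighting, which is the static form of a Kalman filter measurement update. The sensor variances and the `Measurement`/`fuse` names are hypothetical; production systems typically run tracking filters over time rather than fusing a single pair of readings.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # e.g., range to the lead vehicle in meters
    variance: float  # sensor noise variance in m^2; must be positive


def fuse(measurements):
    """Inverse-variance weighted fusion of independent measurements of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(m.variance <= 0 for m in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is the reciprocal of the summed weights.
    return Measurement(value=value, variance=1.0 / total)


if __name__ == "__main__":
    radar = Measurement(value=50.0, variance=0.25)  # hypothetical: radar ranges precisely
    camera = Measurement(value=52.0, variance=4.0)  # hypothetical: camera range is noisier
    fused = fuse([radar, camera])
    print(f"{fused.value:.2f} m, variance {fused.variance:.3f}")  # 50.12 m, variance 0.235
```

The fused estimate sits close to the more trustworthy radar reading, and its variance is smaller than either sensor’s alone, which is the basic payoff of fusing the two.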

Cameras are used for surround view, parking assist, traffic-sign recognition, and lane-departure warning. Radar is needed for blind-spot detection, cross-traffic alert, and ACC; these applications call for both long-range and ultra-short-range radar. Parking assistance will continue to rely on ultrasonic sensors, as it has for many years. No new invention is needed here.

Aside from ADAS, there are other systems that use semiconductors extensively. These include the instrument cluster, human-machine interface (HMI), head unit, media interface, and premium audio.

Moreover, some systems support in-cabin active noise reduction or sound manipulation. That’s how you can make your in-cabin experience sound like a Mercedes. It is all done with advanced DSP chips providing the “manipulated sound.”
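Active noise reduction of this kind is commonly built on adaptive filtering. The sketch below uses a least-mean-squares (LMS) filter: it takes a reference noise signal, learns how that noise appears at the listener’s position, and subtracts its estimate. It is a simplified illustration that assumes a synthetic tonal “engine” noise and an ideal, delay-free loudspeaker path; real in-cabin systems typically use variants such as filtered-x LMS that model the loudspeaker-to-microphone path. The function name and all signal parameters are hypothetical.

```python
import math


def lms_cancel(reference, primary, taps=8, mu=0.01):
    """Adaptive noise cancellation with an LMS FIR filter.

    reference: noise reference samples (e.g., from a sensor near the noise source)
    primary:   samples at the listener's microphone (the noise as it arrives there)
    Returns the residual error signal, i.e., what remains after cancellation.
    """
    weights = [0.0] * taps
    history = [0.0] * taps  # most recent reference samples, newest first
    residual = []
    for x, d in zip(reference, primary):
        history = [x] + history[:-1]
        y = sum(w * h for w, h in zip(weights, history))  # filter's estimate of the noise
        e = d - y                                          # residual after subtraction
        # LMS update: step each weight along the negative gradient of e^2.
        weights = [w + 2 * mu * e * h for w, h in zip(weights, history)]
        residual.append(e)
    return residual


def rms(samples):
    return math.sqrt(sum(v * v for v in samples) / len(samples))


if __name__ == "__main__":
    n = 4000
    # Hypothetical 100 Hz engine tone sampled at 8 kHz.
    ref = [math.sin(2 * math.pi * 100 * k / 8000) for k in range(n)]
    # At the listener, the tone arrives attenuated and delayed by 3 samples.
    prim = [0.8 * ref[k - 3] if k >= 3 else 0.0 for k in range(n)]
    err = lms_cancel(ref, prim)
    print(f"noise RMS {rms(prim[-500:]):.3f} -> residual RMS {rms(err[-500:]):.4f}")
```

The step size `mu` trades convergence speed against stability; it must stay small relative to the reference signal power times the number of taps, or the weights diverge.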

Each of these applications requires a different kind of SoC, and that is how the market is typically served today. However, moving beyond ADAS to true autonomous driving may require a different approach. Some vendors are centering their designs around a highly integrated autonomous-driving subsystem.

To support native autonomous driving, some companies are designing autonomous-driving SoCs that pack teraflops of compute capability, giving them the capacity to implement true artificial intelligence (AI), as envisioned in Fig. 3. For instance, Nvidia and Mobileye are targeting complete solutions for Level 4 and Level 5 autonomous driving.

Fig. 3: The promise of autonomous driving to be enabled by advanced SoC designs.

You will also find Renesas fielding a family of 40-nm to 16-nm SoCs, R-Car Generation 3, to support infotainment, integrated cockpit, automotive computing, and perhaps even neural networks in the not-too-distant future. TI, meanwhile, has multiple offerings in its TDA family of automotive SoCs.

TDA3x supports front-camera, surround-view, rear-camera, radar, driver-monitoring, and camera-mirror systems. The TDA2Eco family handles surround-view and recording applications. The TDA2x family supports front-camera, surround-view, and sensor-fusion systems. These SoCs can support up to 10 cameras and three video outputs, as well as hardware H.264 support and 3D graphics capabilities.

Then there is Qualcomm, which has recently introduced a cellular vehicle-to-everything (C-V2X) chipset to improve autonomous driving safety. The Qualcomm 9150 C-V2X chipset supports both cellular- and DSRC-based roadside communications.

Summary

As this quick survey shows, a diverse array of chip designs is needed to support the various automotive applications. For ADAS applications in particular, there is no one-size-fits-all automotive SoC. And we have not even started tracking the high-performance neural-network processing chips needed for full Level 4 and Level 5 autonomous driving.

The automotive industry is going through a sea change as we move beyond ADAS to the era of autonomous driving. We can see some of the trends today, but there may be other innovative solutions that have yet to be invented. Looking back over the past 10 years, who would have guessed that automotive electronics would be so amazing today?
