BY MARK PITCHFORD, Technical Specialist

LDRA Software Technology

www.ldra.com

Automotive embedded applications have traditionally been isolated, static, fixed-function, device-specific implementations, and practices and processes have relied on that status. Connecting automobiles to the outside world changes things dramatically because it makes remote access possible while requiring no physical modification to the automobile’s systems. There is now a clear obligation for automotive developers to ensure that insecure application software cannot compromise safety, not just throughout a production lifetime, but for as long as a product is out in the field.

Although the well-known ISO 26262:2012 “Road Vehicles — Functional Safety” standard helps by providing a formalized software development process, it does not explicitly discuss software security. That said, where security vulnerabilities may threaten safety, ISO 26262 does demand safety goals and requirements to deal with them. The action to be taken to deal with each safety-threatening security issue needs to be proportional to the risk, using ISO 26262’s Automotive Safety Integrity Level (ASIL) risk classification scheme.
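As an illustration of how an ASIL can be assigned to a hazard, the sketch below classifies it from its severity (S1 to S3), probability of exposure (E1 to E4), and controllability (C1 to C3). Those three parameters come from the standard’s hazard analysis rather than from this article, and the function uses the widely quoted shortcut of summing the three class numbers as a stand-in for the determination table in ISO 26262-3. The names `asil_t` and `asil_classify` are hypothetical, and the table in the standard remains the authority.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical type: QM means no ASIL is assigned, only quality management. */
typedef enum { ASIL_QM = 0, ASIL_A, ASIL_B, ASIL_C, ASIL_D } asil_t;

/*
 * Classify a hazard from its severity (1..3), exposure (1..4) and
 * controllability (1..3) classes.
 * Assumption: the sum of the three class numbers reproduces the
 * determination table (10 -> D, 9 -> C, 8 -> B, 7 -> A, lower -> QM).
 * Returns false, leaving *out untouched, if any argument is out of range.
 */
bool asil_classify(int severity, int exposure, int controllability,
                   asil_t *out)
{
    if (out == NULL) {
        return false;
    }
    if (severity < 1 || severity > 3 ||
        exposure < 1 || exposure > 4 ||
        controllability < 1 || controllability > 3) {
        return false;
    }

    switch (severity + exposure + controllability) {
    case 10: *out = ASIL_D;  break;
    case 9:  *out = ASIL_C;  break;
    case 8:  *out = ASIL_B;  break;
    case 7:  *out = ASIL_A;  break;
    default: *out = ASIL_QM; break;
    }
    return true;
}
```

Under that assumption, a security vulnerability that could lead to a life-threatening hazard (S3) in a common driving situation (E4) that the driver is unlikely to be able to control (C3) is classified as ASIL D, and the measures taken against it would be expected to be correspondingly rigorous.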

Still, to ensure security, developers of embedded systems must consider that application code is but one vital component and that in developing that code, many of the techniques familiar to safety-critical code developers can be deployed with a stronger security perspective.

Beyond development, however, connectivity also gives system maintenance a new significance with each newly discovered vulnerability, because software requirements and security vulnerabilities have the potential to change after development and for as long as a product is being used out in the field. For automobiles, this can be 10 years or more.

Securing application code

There is a lot more to a secure embedded system than ensuring that the application code is secure. Technologies such as security-hardened operating systems, hypervisors, separation kernels, and boot image integrity verification all have vital parts to play. These technologies not only offer a multi-level defense against aggressors, but they can also be used in a mutually supportive manner.

An example is when an automotive system is deployed with a multi-domain architecture using a separation kernel or hypervisor. The domain-separation approach, which keeps highly critical applications separate from more mundane parts of the system, provides an attractive foundation for a secure system. But there will be strengths and weaknesses in that underlying architecture.

The use of risk analysis can help by systematically exposing weak points, which are likely to be found in such areas as:

- Interfaces between different domains

- Interfaces to files from outside the network

- Backwards-compatible interfaces

- Old protocols, sometimes old code and libraries, and hard-to-maintain and hard-to-test multiple versions

- Custom application programming interfaces (APIs)

- Security code

- Cryptography, authentication, and authorization session management

Employing this focused approach helps ensure that even where existing code is deployed, if high-risk areas are addressed in support of a secure architecture, then the system as a whole can be made adequately secure without resorting to the impractical extreme of rewriting every component part.
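To make the first item in that list concrete, the sketch below shows the kind of defensive check that might sit on an interface between a less critical domain and a more critical one. It is a minimal sketch under stated assumptions: the message layout `domain_msg_t`, the identifier `MSG_ID_SET_CRUISE_SPEED`, the 180 km/h limit, and the function `parse_cruise_speed` are all hypothetical and are not taken from any particular separation kernel or hypervisor API.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical message identifier and limit, for illustration only. */
#define MSG_ID_SET_CRUISE_SPEED 0x21u
#define CRUISE_SPEED_MAX_KPH    180u

/* Hypothetical fixed-size message passed across the domain boundary. */
typedef struct {
    uint8_t id;          /* message type                       */
    uint8_t length;      /* number of valid bytes in payload[] */
    uint8_t payload[8];
} domain_msg_t;

/*
 * Treat everything arriving from the other domain as untrusted:
 * check pointers, message type, length, and value range before use.
 * Writes *speed_kph and returns true only if every check passes.
 */
bool parse_cruise_speed(const domain_msg_t *msg, uint16_t *speed_kph)
{
    if (msg == NULL || speed_kph == NULL) {
        return false;
    }
    if (msg->id != MSG_ID_SET_CRUISE_SPEED) {
        return false;
    }
    if (msg->length != 2u) {            /* exactly one 16-bit value */
        return false;
    }

    /* Big-endian decode of the two payload bytes. */
    uint16_t value = (uint16_t)(((uint16_t)msg->payload[0] << 8) |
                                msg->payload[1]);
    if (value > CRUISE_SPEED_MAX_KPH) {
        return false;
    }

    *speed_kph = value;
    return true;
}
```

The design choice is that a rejected message leaves the output untouched, so the receiving domain never acts on partially validated data.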

Safe and secure application code development

The traditional approach to secure software development has tended to be reactive: Develop the software, and then use penetration, fuzz, and functional testing to expose any weaknesses. Useful though that is, it is not sufficient on its own to comply with a functional safety standard such as ISO 26262. This standard implicitly demands that security factors with a safety implication are considered from the outset because a safety-critical system cannot be safe if it is not secure.

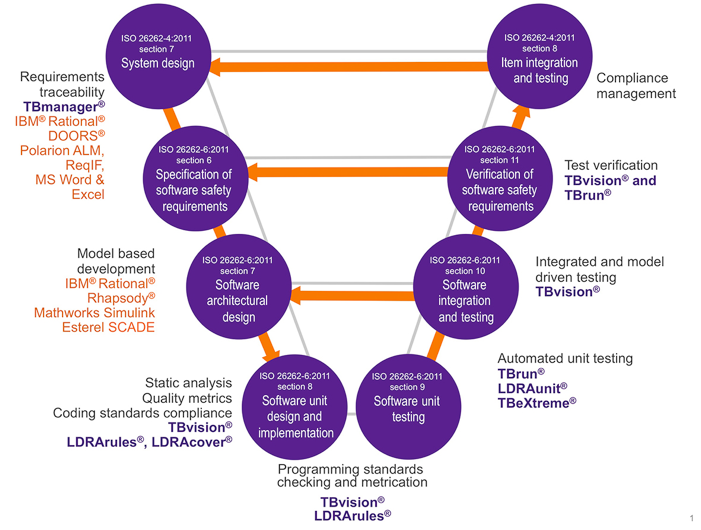

The common V-model is useful in this regard. This model cross-references both the ISO 26262 standard and the tools likely to be deployed at each phase in the development of today’s highly sophisticated and complex automotive software (Fig. 1). For the purposes of this article, the model serves as a reference for how a security perspective affects each phase. Note that other process models such as agile and waterfall can be equally well supported.

Fig. 1: Software-development V-model with cross-references to ISO 26262 and standard development tools.

The products of the system design phase (top left) include technical safety requirements refined and allocated to hardware and software. In a connected system, these will include many security requirements because the action to be taken to deal with each safety-threatening security issue needs to be proportionate to the risk. Maintaining traceability between these requirements and the products of subsequent phases generally causes a project management headache.

The specification of software safety requirements involves their derivation from the system design, isolating the software-specific elements and detailing the process of evolution of lower-level, software-related requirements, including those with a security-related element.

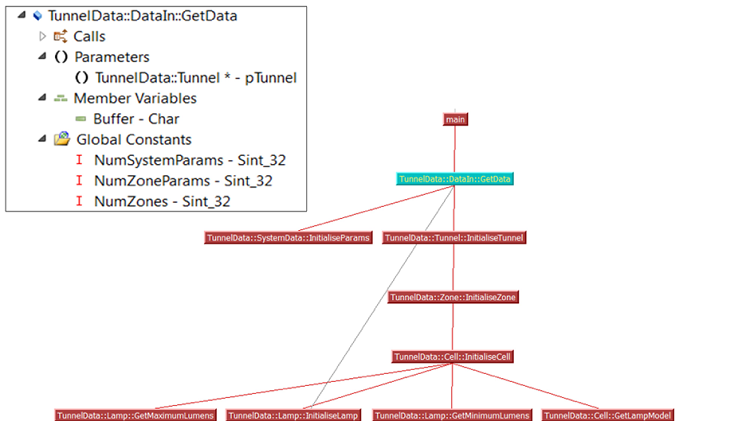

Next comes the software architectural design phase, perhaps using a UML graphical representation. Static analysis tools help here by providing graphical representations of the relationship between code components for comparison with the intended design (Fig. 2).

Fig. 2: Static analysis tools provide graphical representations of the relationship between code components for comparison with the intended design — in this case, between Control and Data Flow.

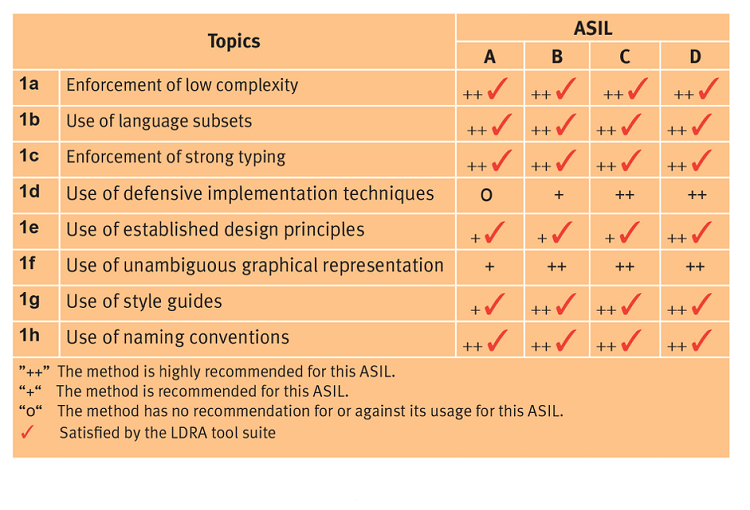

A typical example of a table from ISO 26262-6:2011, relating to software unit design and implementation, is shown in Fig. 3. It shows the coding and modelling guidelines to be enforced during implementation, superimposed with an indication of where compliance can be confirmed with the aid of automated tools.

Fig. 3: ISO 26262 coding and modelling guidelines.

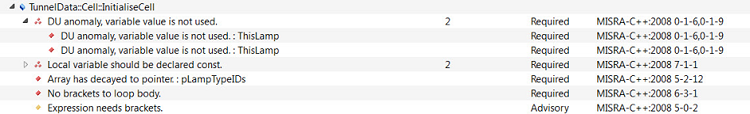

The “use of language subset” (Topic 1b in the table) exemplifies the impact of security considerations on the process. Language subsets have traditionally been viewed as an aid to safety, but security enhancements to the MISRA C:2012 standard and security-specific standards such as Common Weakness Enumeration (CWE) reflect an increasing interest in the role they have to play in combating security issues. These, too, can be checked by means of static analysis (Fig. 4).

Fig. 4: Coding standards violations of security enhancements to the MISRA C:2012 standard and security-specific standards such as Common Weakness Enumeration (CWE) can be checked by means of static analysis.
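A short example may help show the kind of construct that such security-oriented rules target. The sketch below contrasts an unbounded string copy, the classic buffer overflow catalogued as CWE-120, with a bounded alternative that a static analysis tool would be expected to accept. The function `copy_vin` and its handling of a 17-character vehicle identification number are hypothetical and chosen only for illustration; no specific MISRA rule number is implied.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define VIN_LENGTH 17u   /* a vehicle identification number has 17 characters */

/*
 * Non-compliant pattern, shown only as a comment:
 *
 *     strcpy(dst, src);     // no bound on the length of src (CWE-120)
 *
 * Compliant alternative: the source length is measured with a bounded
 * scan and the copy never writes more than VIN_LENGTH + 1 bytes.
 * Returns true only if src is exactly VIN_LENGTH characters long and
 * dst is large enough to hold it with its terminator.
 */
bool copy_vin(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size < VIN_LENGTH + 1u) {
        return false;
    }

    /* Scan at most VIN_LENGTH + 1 characters of the untrusted input. */
    size_t len = 0u;
    while (len <= VIN_LENGTH && src[len] != '\0') {
        len++;
    }
    if (len != VIN_LENGTH) {
        return false;          /* too short or too long */
    }

    memcpy(dst, src, VIN_LENGTH);
    dst[VIN_LENGTH] = '\0';
    return true;
}
```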

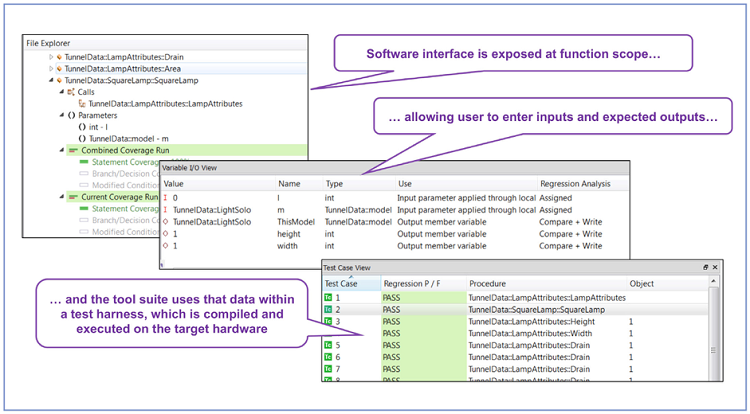

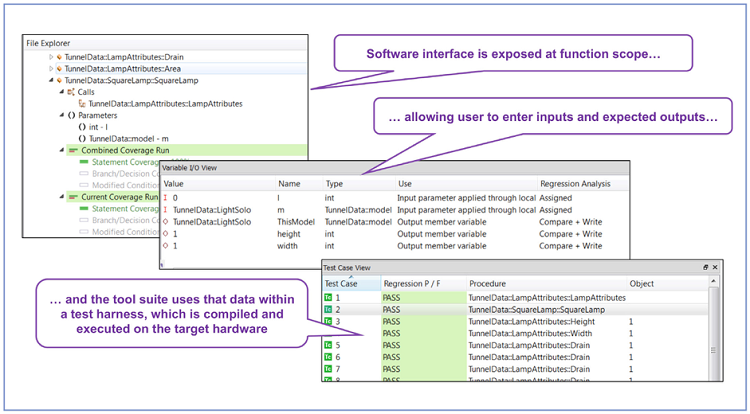

Dynamic analysis techniques (involving the execution of some or all of the code) are applicable to item integration and testing. Item testing is designed to focus on particular software procedures or functions in isolation, whereas integration testing ensures that safety, security, and functional requirements are met when units are working together in accordance with the software architectural design (Fig. 5).

Fig. 5: Item testing interface showing how the software interface is exposed at the function scope, allowing the user to enter inputs and expected outputs to form the basis of a test harness.

In a good test tools suite, the software interface is exposed at the function scope, allowing the user to enter inputs and expected outputs to form the basis of a test harness. That harness is then compiled and executed on the target hardware, and actual and expected outputs are compared. Such a technique is useful not only to show functional correctness in accordance with functional, security, and safety requirements but also to show resilience to issues such as border conditions, null pointers, and default switch cases — all important security considerations.
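The idea can be sketched by hand, without reference to any particular tool. In the example below, a hypothetical unit under test, `max_speed_for_gear`, is exercised by a table of inputs and expected outputs that covers the boundary values, a null pointer, and the default switch case. All names and values are assumptions made for illustration, and a commercial test tool would typically generate the harness rather than require it to be written manually.

```c
#include <stddef.h>
#include <stdio.h>

typedef enum { GEAR_PARK = 0, GEAR_REVERSE, GEAR_NEUTRAL, GEAR_DRIVE } gear_t;

#define SPEED_INVALID (-1)

/* Hypothetical unit under test: maximum permitted speed in km/h. */
int max_speed_for_gear(const gear_t *gear)
{
    if (gear == NULL) {
        return SPEED_INVALID;          /* null pointer is rejected */
    }
    switch (*gear) {
    case GEAR_PARK:
    case GEAR_NEUTRAL:
        return 0;
    case GEAR_REVERSE:
        return 20;
    case GEAR_DRIVE:
        return 250;
    default:
        return SPEED_INVALID;          /* corrupted or unknown value */
    }
}

/* One row of the harness: an input and the output it should produce. */
typedef struct {
    const char   *name;
    const gear_t *input;
    int           expected;
} test_case_t;

/* Runs every case, reports mismatches, and returns the failure count. */
int run_gear_tests(void)
{
    static const gear_t park    = GEAR_PARK;    /* lowest valid value  */
    static const gear_t reverse = GEAR_REVERSE;
    static const gear_t drive   = GEAR_DRIVE;   /* highest valid value */
    static const gear_t corrupt = (gear_t)99;   /* outside the enum    */

    const test_case_t cases[] = {
        { "lowest valid gear",  &park,    0 },
        { "nominal gear",       &reverse, 20 },
        { "highest valid gear", &drive,   250 },
        { "null pointer",       NULL,     SPEED_INVALID },
        { "out-of-range value", &corrupt, SPEED_INVALID },
    };

    int failures = 0;
    for (size_t i = 0; i < sizeof cases / sizeof cases[0]; i++) {
        int actual = max_speed_for_gear(cases[i].input);
        if (actual != cases[i].expected) {
            printf("FAIL %s: expected %d, got %d\n",
                   cases[i].name, cases[i].expected, actual);
            failures++;
        }
    }
    return failures;
}
```

Keeping the cases in a table makes it straightforward to add a row whenever a new requirement, or a newly discovered vulnerability, calls for another check.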

In addition to showing that software functions correctly, dynamic analysis is used to generate structural coverage metrics. The security standard CWE requires that code coverage analysis is used to ensure that there is no hidden functionality designed to potentially increase an application’s attack surface and expose weaknesses.
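How coverage data can expose hidden functionality is easiest to see with a small, hand-instrumented sketch. Here a hypothetical function, `unlock_allowed`, contains an undocumented service code alongside its specified behaviour, and a counter records each branch taken. When only the requirement-based tests are run, one counter stays at zero, which flags code that no requirement accounts for. Real coverage tools insert this instrumentation automatically; the counters, names, and values below are assumptions for illustration only.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One counter per branch of the function under test. */
enum { BR_SERVICE_CODE, BR_PIN_MATCH, BR_PIN_MISMATCH, BR_COUNT };
static unsigned branch_hits[BR_COUNT];

/* Hypothetical function with an undocumented "back door" branch. */
bool unlock_allowed(uint32_t entered, uint32_t stored)
{
    if (entered == 0xC0FFEEu) {        /* not traceable to any requirement */
        branch_hits[BR_SERVICE_CODE]++;
        return true;
    }
    if (entered == stored) {
        branch_hits[BR_PIN_MATCH]++;
        return true;
    }
    branch_hits[BR_PIN_MISMATCH]++;
    return false;
}

/*
 * Runs only the tests derived from the documented requirements
 * (correct PIN accepted, wrong PIN rejected) and returns the number
 * of branches that were never executed.
 */
unsigned uncovered_after_requirement_tests(void)
{
    for (size_t i = 0; i < BR_COUNT; i++) {
        branch_hits[i] = 0u;
    }

    (void)unlock_allowed(1234u, 1234u);   /* requirement: matching PIN   */
    (void)unlock_allowed(1111u, 1234u);   /* requirement: mismatched PIN */

    unsigned uncovered = 0u;
    for (size_t i = 0; i < BR_COUNT; i++) {
        if (branch_hits[i] == 0u) {
            uncovered++;
        }
    }
    return uncovered;
}
```

An uncovered branch does not prove malicious intent, but it does prompt the question of why code exists that no requirement-based test can reach.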

Managing the infinite development lifecycle

The principle of bi-directional traceability runs throughout this ISO 26262 V-model, with each development phase required to accurately reflect the one before it. In theory, if the exact sequence of the standard is adhered to, then the requirements will never change and tests will never throw up a problem. But life’s not like that.

For example, it is easy to imagine these processes as they relate to a “green field” project. But what if there is a need to integrate many different subsystems? What if some of those are pre-existing, with requirements defined in widely different formats? What if some of those systems were written with no security in mind, assuming an isolated system? And what if different subsystems are in different development phases?

Then there is the issue of requirement changes. What if the client has a change of heart? A bright idea? Advice from a lawyer that existing approaches could be problematic?

Should changes become necessary, revised code would need to be re-analyzed statically, and all impacted unit and integration tests would need to be re-run (regression-tested). Although that can result in a project management nightmare at the time, in an isolated application, it lasts little longer than the time that the product is under development.

Connectivity changes all of that. Whenever a new vulnerability is discovered, there is a resulting change of requirement to cater for it, coupled with the additional pressure of knowing that a speedy response could be critically important if products are not to be compromised in the field.

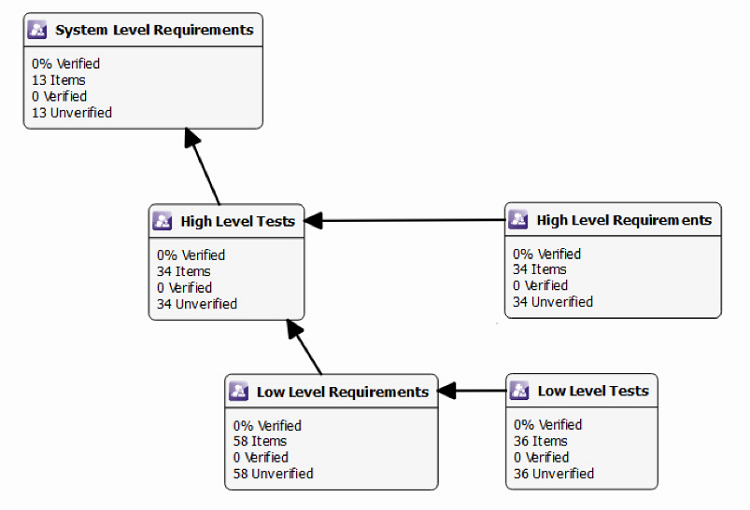

Automated bi-directional traceability links requirements from a host of different sources through to design, code, and test. The impact of any requirement changes, or indeed of failed test cases, can be assessed by means of impact analysis and addressed accordingly. Artifacts can then be automatically regenerated to present evidence of continued compliance with ISO 26262 (Fig. 6).

During the development of a traditional, isolated system, that is clearly useful enough. But connectivity demands the ability to respond to vulnerabilities because each newly discovered vulnerability implies a changed or new requirement, and one to which an immediate response is needed, even though the system itself may not have been touched by development engineers for quite some time. In such circumstances, being able to isolate what is needed and automatically re-test only the affected functions becomes something much more significant.

Fig. 6: Automated bi-directional traceability links requirements from a host of different sources through to design, code, and test.
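The principle behind such impact analysis can be sketched with a simple table of links. In the example below, each row ties a requirement to the function that implements it and to a test that verifies it; a forward trace then lists the tests to re-run when a requirement changes, and a backward trace identifies the requirement behind a failed test. The identifiers, function names, and data are hypothetical, and a real traceability tool would hold far richer information, including design artifacts and requirement sources.

```c
#include <stddef.h>
#include <string.h>

/* One traceability link: requirement -> implementing function -> test. */
typedef struct {
    const char *requirement;
    const char *function;
    const char *test;
} trace_link_t;

/* Hypothetical project data, for illustration only. */
static const trace_link_t trace_links[] = {
    { "REQ-SEC-001", "validate_gateway_frame", "TC-101" },
    { "REQ-SEC-001", "validate_gateway_frame", "TC-102" },
    { "REQ-SAF-007", "limit_vehicle_speed",    "TC-201" },
    { "REQ-SEC-002", "verify_boot_image",      "TC-301" },
};

#define TRACE_LINK_COUNT (sizeof trace_links / sizeof trace_links[0])

/*
 * Forward trace: collect the tests linked to a changed requirement.
 * Writes at most out_cap entries to out[] and returns how many were written.
 */
size_t tests_for_requirement(const char *req, const char *out[], size_t out_cap)
{
    size_t n = 0u;
    if (req == NULL || out == NULL) {
        return 0u;
    }
    for (size_t i = 0; i < TRACE_LINK_COUNT && n < out_cap; i++) {
        if (strcmp(trace_links[i].requirement, req) == 0) {
            out[n++] = trace_links[i].test;
        }
    }
    return n;
}

/*
 * Backward trace: find the requirement that a (failed) test verifies.
 * Returns NULL if the test is not linked to any requirement.
 */
const char *requirement_for_test(const char *test)
{
    if (test == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < TRACE_LINK_COUNT; i++) {
        if (strcmp(trace_links[i].test, test) == 0) {
            return trace_links[i].requirement;
        }
    }
    return NULL;
}
```

With links of this kind in place, a new vulnerability that changes REQ-SEC-001 leads directly to the two tests that need to be re-run, rather than to a full regression of the whole system.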

Conclusion

An embedded automotive system depends on technologies such as security-hardened operating systems, hypervisors, separation kernels, and boot image integrity verification. These technologies not only offer a multi-level defense against aggressors, but they can also be used in a mutually supportive manner to present a level of defense commensurate with the risk involved.

Existing tools and techniques can be used during such a development, sometimes with adaptation, as in the case of secure coding rules that complement those written with a safety emphasis. Some techniques, such as coverage analysis, use exactly the same approach with a subtly different purpose: in this case, ensuring the absence of any additional “back door” code.

Bi-directional traceability between requirements, designs, code, and tests is a fundamental requirement of ISO 26262, and its automation can save considerably on project management overhead. The significance of such automated traceability is heightened when the impact of a security breach can imply new requirements for projects well after product launch. In such circumstances, a speedy response to requirements change has the potential to both save lives and enhance reputations.
