A brain-computer interface developed by scientists at Korea University in South Korea and the Technical University of Berlin (TU Berlin) in Germany could allow users to control a lower-limb exoskeleton by decoding signals from their brains.

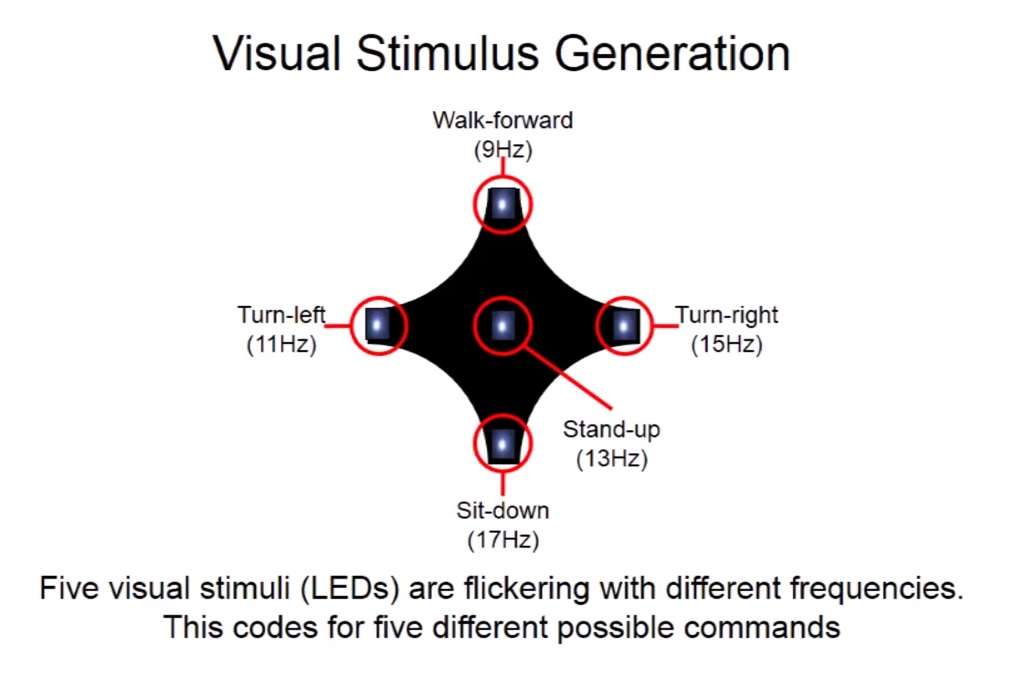

Using an electroencephalogram (EEG) cap, the device lets users walk forward, turn left or right, sit, and stand, all by staring at one of five flashing light-emitting diodes (LEDs). Each LED flickers at a different frequency, and the frequency of the LED the user is staring at shows up in the EEG readout. Once the system decodes the desired command from the EEG signal, it converts the command into instructions and forwards them to the exoskeleton.
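The article does not describe the team's decoding algorithm. The principle it outlines, where each LED's flicker frequency reappears in the EEG (a response generally known as a steady-state visual evoked potential, or SSVEP), can be illustrated with a minimal Python sketch. It is not the KU/TU Berlin method: it simply measures the signal power at each candidate frequency with a single-bin discrete Fourier transform and picks the strongest. The five LED frequencies, the 250 Hz sampling rate, the command labels and the simulated signal are all hypothetical.

```python
import math
import random

# Hypothetical LED flicker frequencies (Hz) mapped to the five commands.
# The real frequencies used by the KU/TU Berlin system are not given here.
COMMANDS = {
    9.0: "walk forward",
    11.0: "turn left",
    13.0: "turn right",
    15.0: "sit",
    17.0: "stand",
}


def power_at(signal, freq, fs):
    """Spectral power of `signal` at `freq` Hz, using a single-bin DFT."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)


def decode_command(signal, fs):
    """Return the command whose LED frequency carries the most power."""
    best_freq = max(COMMANDS, key=lambda f: power_at(signal, f, fs))
    return COMMANDS[best_freq]


if __name__ == "__main__":
    fs = 250  # hypothetical EEG sampling rate in Hz
    rng = random.Random(0)
    # Simulate 2 s of one EEG channel while the user stares at the 13 Hz LED:
    # a 13 Hz component buried in random noise with twice its amplitude.
    samples = [
        1.0 * math.sin(2 * math.pi * 13.0 * n / fs) + rng.gauss(0.0, 2.0)
        for n in range(2 * fs)
    ]
    print(decode_command(samples, fs))
```

Practical SSVEP decoders usually rely on more robust techniques, such as canonical correlation analysis across several electrodes and harmonics; the single-frequency power comparison here only shows the core idea of telling the LEDs apart by frequency.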

This approach could be ideal for people with no voluntary control except eye movements, such as quadriplegics, who are unable to operate a traditional exoskeleton. The KU/TU Berlin team says the system achieves a better signal-to-noise ratio than conventional hard-wired systems by separating the brain control signals from the background noise of ordinary brain activity, which allows more accurate operation of the exoskeleton.

“Exoskeletons create lots of electrical 'noise',” said Professor Klaus Muller, of the TU Berlin Machine Learning Group. “The EEG signal gets buried under all this noise – but our system is able to separate not only the EEG signal, but the frequency of the flickering LED within this signal. People with amyotrophic lateral sclerosis (ALS) [motor neuron disease or Lou Gehrig's disease], or high spinal cord injuries face difficulties communicating or using their limbs. Decoding what they intend from their brain signals could offer means to communicate and walk again.”

Researchers tested the brain-controlled exoskeleton on volunteers, who needed only a few minutes of training to operate it. Because the flickering LEDs could provoke a reaction, people with epilepsy were unable to take part. The results showed that the system was reasonably easy to use; one drawback was ‘visual fatigue’ from staring at the LEDs for long periods, which the researchers are currently working to address.

“We were driven to assist disabled people, and our study shows that this brain control interface can easily and intuitively control an exoskeleton system – despite the highly challenging artifacts from the exoskeleton itself,” said Professor Muller.

The team’s paper was published in the Journal of Neural Engineering.

Source: Gizmag
