On one hand, there are dog people, and on the other, there are bird people. I identify as one of the latter. Not the kind of bird-man who celebrates secret personal victories allotted only to those who record bird sightings in notebooks, but the kind who keeps parrots as companions. I’ve done this for the last 15 years. Naturally, I was excited when I learned that scientists from Queen Mary University of London (QMUL) have developed an automated classification algorithm to understand when birds are talking to each other.

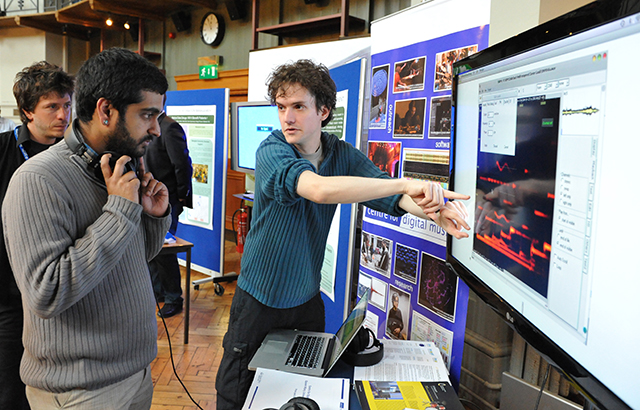

Dr. Dan Stowell from QMUL’s School of Electronic Engineering and Computer Science believes that studying bird songs will give us a clearer understanding of how human languages evolved and of social organization in the animal kingdom. Like humans, songbirds go through stages of vocal learning as they grow. Stowell and his team have created an automated algorithm that analyses and classifies bird songs, and have tested it on recordings taken from the British Library Sound Archive.

“Automatic classification of bird sounds is useful when trying to understand how many, and what type, of birds you might have in one location,” adds Dan Stowell in a statement.

According to MIT linguist Shigeru Miyagawa, this isn’t nearly as far-fetched an endeavor as it may seem on the surface; bird song shares many similarities with human language even though the two evolved independently. In Miyagawa’s hypothesis, human language seemingly sprang out of nowhere nearly 100,000 years ago and represents the merging of the lexical system found in monkey calls with the expressive one used in bird songs.

In his published study, Dr. Stowell explains that automatic classification of bird species by sound requires a computational tool that applies machine learning to acoustic measurements derived from spectrogram-type data, such as Mel-frequency cepstral coefficients (MFCCs), features that are usually designed by hand. He adds: “I'm working on techniques that can transcribe all the bird sounds in an audio scene: not just who is talking, but when, in response to whom, and what relationships are reflected in the sound, for example who is dominating the conversation.”
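
For readers curious what an MFCC actually is, here is a minimal sketch in plain Python of how one short frame of audio could be turned into a handful of coefficients. It is not the QMUL team’s code: the function names, the 8 kHz sample rate, the 256-sample frame, the 12 filters, the 6 coefficients, and the two synthetic test tones are all assumptions chosen for illustration. The idea is to take the frame’s power spectrum, pool it into bands spaced on the mel scale, take logarithms, and compress the result with a discrete cosine transform.

```python
import math

def hz_to_mel(hz):
    # Common mel-scale formula; other variants exist.
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def power_spectrum(frame):
    """Naive DFT power spectrum of one Hamming-windowed frame (bins 0..N/2)."""
    n = len(frame)
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(windowed))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(windowed))
        spectrum.append((re * re + im * im) / n)
    return spectrum

def mel_filterbank(num_filters, n_fft, sample_rate):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0)
    mel_points = [low + (high - low) * i / (num_filters + 1)
                  for i in range(num_filters + 2)]
    bins = [int(math.floor((n_fft + 1) * mel_to_hz(m) / sample_rate))
            for m in mel_points]
    filters = []
    for j in range(1, num_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):       # rising edge of the triangle
            row[k] = (k - left) / (center - left)
        for k in range(center, right):      # peak and falling edge
            row[k] = (right - k) / (right - center)
        filters.append(row)
    return filters

def dct2(values, num_coeffs):
    """Unnormalized DCT-II, keeping only the first num_coeffs outputs."""
    n = len(values)
    return [sum(v * math.cos(math.pi * c * (i + 0.5) / n) for i, v in enumerate(values))
            for c in range(num_coeffs)]

def mfcc_frame(frame, sample_rate, num_filters=12, num_coeffs=6):
    """MFCC-style features for a single frame (illustrative parameters)."""
    spec = power_spectrum(frame)
    bank = mel_filterbank(num_filters, len(frame), sample_rate)
    # A small constant avoids log(0) for bands with no energy.
    log_energies = [math.log(sum(w * p for w, p in zip(row, spec)) + 1e-10)
                    for row in bank]
    return dct2(log_energies, num_coeffs)

# Two synthetic "calls": pure tones standing in for real recordings.
SAMPLE_RATE = 8000
FRAME_LEN = 256
low_tone = [math.sin(2 * math.pi * 500 * t / SAMPLE_RATE) for t in range(FRAME_LEN)]
high_tone = [math.sin(2 * math.pi * 3000 * t / SAMPLE_RATE) for t in range(FRAME_LEN)]

low_features = mfcc_frame(low_tone, SAMPLE_RATE)
high_features = mfcc_frame(high_tone, SAMPLE_RATE)
```

A classifier would then be trained on many such feature vectors, one per frame, labeled by species. In practice a library with a fast Fourier transform would replace the slow hand-written DFT used here for transparency.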

Via Qmul.ac.uk
