Electronics has always been a hotbed of virtuous cycles. In the 1980s, higher-capacity disk drives allowed software companies, such as Microsoft, to create more powerful applications that needed more storage. That demand, in turn, spurred still larger drives, which made possible even more powerful applications that required even more storage. As Intel packed more and more processing into its microprocessors, calculation-intensive applications such as graphics and video appeared to fill the processing headroom. And, of course, there is the Internet, which makes all previous virtuous cycles seem like small stuff.

So it is not surprising that data centers – and more recently, cloud computing – are both profiting from and driving innovations in hardware technology at all levels within the data center. The arrival of cloud computing made faster processing, faster data retrieval, larger-capacity storage, and faster machine-to-machine communications more important than ever.

The term “cloud computing” refers to the amorphous “cloud” shown in textbooks and on whiteboards that represents the complex computing and communications architecture behind the Internet. Cloud computing had a false start in the 1990s when some vendors touted “thin clients,” which were basically dumbed-down PCs that relied on remote networked services. Unfortunately, the infrastructure was slow and not up to the task. This time, conditions have changed: network communications and processing are a couple of orders of magnitude faster.

While the traditional data center generally kept the server, computing resources, applications, and storage in more or less the same physical location, cloud computing introduced a new paradigm of computing on a virtual machine, with parallelization and redundancy paramount. Different threads of a single application can run on multiple computers around the world, retrieving information from multiple network-attached storage (NAS) locations and sharing geographically diverse server resources as well as communications channels.

The prevalence of long-haul fiber-optic communications, to a large extent, made cloud computing feasible. However, the same throughput bottlenecks that have bedeviled engineers for decades – subsystem-to-subsystem interconnects and chip-to-chip interfaces – are still with us. Intel ignited a glimmer of hope in September 2013 by unveiling optical chip-to-chip interconnects, based on “silicon photonics,” in which lasers create the interface link. The first prototype to be made public achieves data rates of 100 gigabits per second, which is about an order of magnitude faster than copper PCIe data cables that connect servers on a rack. Intel’s silicon photonics technology will not be integrated into server motherboards for a few years. In the meantime, engineers designing data center products will have to squeeze as much speed out of copper interconnects as possible.
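
To put the quoted data rate in perspective, the following back-of-the-envelope sketch compares ideal transfer times over the two kinds of link. The 1 TB dataset and the 10 Gbps copper baseline are assumptions chosen for illustration (the text says only that copper is "about an order of magnitude" slower), and protocol overhead is ignored.

```python
def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Ideal time to move size_bytes over a link running at link_gbps.

    Ignores encoding and protocol overhead, so real transfers take longer.
    """
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


# Assumed workload: 1 TB (10**12 bytes). Not a figure from the article.
DATASET_BYTES = 1e12

# 100 Gbps is the prototype rate quoted above; 10 Gbps is an assumed
# copper baseline standing in for "an order of magnitude slower".
optical = transfer_seconds(DATASET_BYTES, 100.0)
copper = transfer_seconds(DATASET_BYTES, 10.0)

print(f"100 Gbps: {optical:.0f} s, 10 Gbps: {copper:.0f} s")
# prints "100 Gbps: 80 s, 10 Gbps: 800 s"
```

Under these assumptions the same dataset moves in a little over a minute rather than more than 13 minutes, which is why interconnect speed matters so much at rack scale.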

Data center technologies

There is plenty of room for hardware innovation in the server part of the data center market, which was $52.8 billion worldwide in 2012, according to the research firm Gartner. Since a tweak in hardware performance can deliver a significant market advantage, not more than a couple of months go by without the introduction of a new, more powerful server family. The rewards for staying ahead of the pack are great, because more than 100,000 servers are typically deployed in each new data center. To a lesser extent, new storage and communications technologies are also contributing to the virtuous cycle.

While servers may be the core technology of data centers, energy consumption, communications, and seemingly mundane technologies such as air conditioning contribute to the overall market size in terms of dollars. A single data center can use as much as 10.5 million watts. In total, data centers account for 2.2 percent of America's total electricity consumption. That percentage is forecast to grow as more data – and in particular more video – is consumed by individuals and corporations. The National Data Center Energy Efficiency Information Program’s factsheet states that the amount of energy consumed by data centers is set to continue to grow at a rate of 12 percent per year.
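
The figures above lend themselves to a quick calculation. The sketch below is only illustrative: it assumes the 10.5-megawatt draw is sustained around the clock and that the 12 percent growth rate compounds annually, neither of which the source states explicitly. The function names are made up for this example.

```python
import math


def annual_energy_mwh(power_mw: float) -> float:
    """Energy used in one year at a constant draw of power_mw megawatts."""
    return power_mw * 24 * 365


def projected_consumption(base: float, annual_rate: float, years: int) -> float:
    """Consumption after `years` of compound growth at `annual_rate`."""
    return base * (1.0 + annual_rate) ** years


def doubling_time_years(annual_rate: float) -> float:
    """Years needed for consumption to double at a compound annual rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)


# A 10.5 MW data center running flat out for a year (assumed constant load).
print(annual_energy_mwh(10.5))                 # 91980.0 MWh

# Growth at 12 percent per year, relative to a baseline of 100.
print(round(projected_consumption(100.0, 0.12, 5), 1))

# How long until consumption doubles at that rate.
print(round(doubling_time_years(0.12), 1))     # about 6.1 years
```

At a compound 12 percent per year, consumption would double roughly every six years, which helps explain the emphasis on energy efficiency in the rest of this article.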

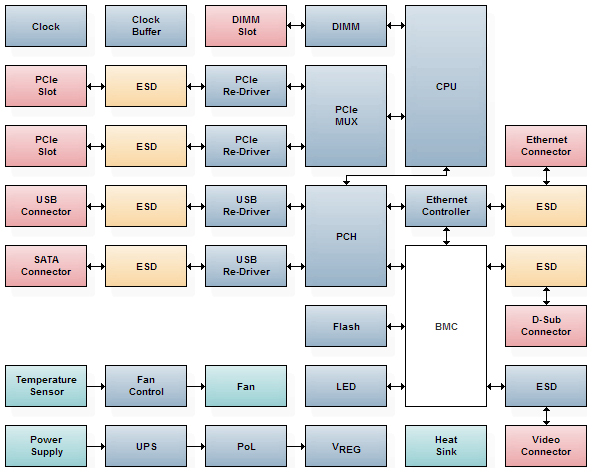

The perennial need for speed, higher density storage, and a stronger emphasis on energy efficiency are making the design of server motherboards among the most challenging in electronics today. Figure 1 shows a block diagram of the main components on a typical server motherboard.

Figure 1: Typical server motherboard architecture.

Microprocessors are the core of server design and typically account for a large percentage of the motherboard’s cost and energy consumption. Doing more with less is the obvious answer. The design challenge continues to be how to implement more efficient multiprocessing and multithreading. Recently, for chips, the term “more efficient” has come to mean not just more MIPS per chip but more MIPS per watt as well. The most common technique for implementing multiprocessing is to design chips with multiple embedded processing cores. This enables the software multithreading that allows the server to execute more than one stream of code simultaneously. Without it, cloud computing probably would not be a reality. Multithreading also reduces energy consumption. In addition to the multicore/multithread enhancements, processors can now execute 128-bit instructions, which results in a significant performance boost.

Intel’s Xeon processors, for example, integrate up to eight CPU cores. The most recent version of the Xeon E5-2600 delivers up to 45 percent greater Java performance and up to 45 percent better energy efficiency. Mouser stocks a broad line of Intel processors suitable for server motherboard design.

Platform Controller Hub (PCH in Figure 1) chipsets, such as Intel’s BD82C604, control data paths and support functions required by the CPU, including the system clock, Flexible Display Interface (FDI), and Direct Media Interface (DMI). Functions and capabilities include PCI Express 2.0, PCI Express Uplink, PCI Local Bus, and an integrated 10/100/1000 Mbps (Gigabit) Ethernet MAC.

Not all data centers need the most powerful processor, but every company that operates a data center wants to save on energy costs. Intel addressed that demand in September 2013 by introducing a portfolio of data center products and technologies, including the second-generation 64-bit Atom C2000 product family of SoC designs, for what it refers to as microservers.

Memory and interconnects

As with any computing system, a spectrum of innovations can be brought to bear on the bottleneck between the processor, memory, and other I/O. Fully buffered DIMMs (FBDIMMs), for example, reduce latency, resulting in shorter read/write access times.

The FBDIMM memory architecture replaces multiple parallel interconnects with a single serial interconnect. To make this work, the architecture includes an advanced memory buffer (AMB) between the memory controller and the memory module. Instead of writing directly to the memory module (the DIMMs in Figure 1), the controller interfaces with the AMB, which compensates for signal deterioration by buffering and resending the signal. The AMB also executes error correction without imposing any additional overhead on the processor or the system's memory controller.

In Figure 1, the orange blocks indicate interface standards. PCI-Express, HyperTransport, Serial ATA, SAS, and USB are high-speed interfaces. The choice of interface depends on the particular use scenario on the server motherboard. The chosen interface must be capable of delivering data fast enough to align with the processing power of the server subsystem.

Signal conditioning with re-driver chips has an important role to play in satisfying the processor’s appetite for data. Because faster signal frequencies allow less signal margin for designing reliable, high-performance systems, re-drivers, which are also known as repeater ICs, mediate the connection between the interface device and the CPU.

Re-drivers regenerate signals to enhance the signal quality using equalization, pre-emphasis, and other signal conditioning technologies. A single re-driver can adjust and correct for known channel losses at the transmitter and restore signal integrity at the receiver. The result is an eye pattern at the receiver with the margins required to deliver reliable communications with low bit-error rates.
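
The idea behind pre-emphasis can be illustrated with a two-tap discrete-time filter. This is a conceptual sketch only: real re-drivers are analog parts, and the tap weight `alpha`, the function name, and the idle-level assumption are all hypothetical choices for illustration rather than anything taken from a vendor datasheet.

```python
def pre_emphasize(bits, alpha=0.25):
    """Apply two-tap pre-emphasis, y[n] = x[n] - alpha * x[n-1], to NRZ data.

    Bits are mapped to +1.0 / -1.0 symbols. Symbols that follow a transition
    are boosted and repeated symbols are attenuated, which pre-compensates
    for a channel that attenuates high frequencies more than low ones.
    """
    symbols = [1.0 if b else -1.0 for b in bits]
    out = []
    # Assumption: before the first bit, the line idled at that bit's level.
    prev = symbols[0] if symbols else 0.0
    for s in symbols:
        out.append(s - alpha * prev)
        prev = s
    return out


print(pre_emphasize([0, 1, 1, 1, 0, 0]))
# prints [-0.75, 1.25, 0.75, 0.75, -1.25, -0.75]
```

In the output, the symbols immediately after each transition have a magnitude of 1.25 while repeated symbols drop to 0.75. That extra transition energy is what helps keep the eye open after the signal has passed through a lossy trace or cable.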

Texas Instruments, Pericom Semiconductor, and Maxim Integrated Circuits are among the leading semiconductor vendors supplying high-speed re-drivers suitable for server architectures. TI’s SN75LVCP601RTJT, for example, is a dual-channel, single-lane SATA re-driver that supports data rates up to 6 Gbps. Pericom’s PI2EQX6804-ANJE re-driver is a two-port, single-lane SAS/SATA re-driver with adjustable equalization. Maxim’s MAX14955ETL+ is a dual-channel IC that can re-drive one full lane of SAS or SATA signals up to 6 Gbps. Out-of-band signaling is supported using high-speed amplitude detection on the inputs and squelch on the corresponding outputs.

Storage

Without readily available data – and lots of it – the cloud does not get very far off the ground. To satisfy that appetite, an evolution toward the globalization and virtualization of storage is well underway. In practice, this means the ongoing convergence of storage area networks (SANs) and network-attached storage (NAS). While either technology will work in many situations, their chief difference lies in their protocols: NAS uses TCP/IP and HTTP, while SAN uses encapsulated SCSI over Fibre Channel.

From a hardware perspective, both use a redundant array of independent disks (RAID), a storage technology that distributes data across the drives according to different RAID levels, depending on the level of redundancy and performance required. Controller chips for data-center-class servers must be RAID compliant and are considered to be a sufficiently “advanced” technology to require an export license to ship outside the United States. One such chip is PLX Technology’s OXUFS936QSE-PBAG, which is a universal interface to quad SATA RAID controllers that supports eSATA, FireWire 800, and USB 2.0 data transfers. Its RAID compliance levels go up to RAID 10 (disk striping with mirroring).
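
As a rough illustration of what "striping with mirroring" means, the sketch below maps a logical block number onto drives in a RAID 10 array. The layout (adjacent drives paired as mirrors, one block per stripe unit) and the function name are assumptions made for this example. Real controllers, including the part named above, may use different stripe sizes and drive orderings.

```python
def raid10_locate(logical_block: int, num_drives: int = 4):
    """Return ((primary_drive, mirror_drive), row) for a logical block.

    Assumed layout: drives 0-1 form mirror pair 0, drives 2-3 form pair 1,
    and so on. Consecutive blocks are striped across the pairs, and each
    block is written to both drives of its pair.
    """
    if num_drives < 4 or num_drives % 2 != 0:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    if logical_block < 0:
        raise ValueError("logical_block must be non-negative")

    pairs = num_drives // 2
    pair = logical_block % pairs      # striping: which mirror pair
    row = logical_block // pairs      # position of the block on those drives
    return (2 * pair, 2 * pair + 1), row


for block in range(4):
    print(block, raid10_locate(block))
# prints:
# 0 ((0, 1), 0)
# 1 ((2, 3), 0)
# 2 ((0, 1), 1)
# 3 ((2, 3), 1)
```

Striping lets consecutive blocks be read from different pairs in parallel, while mirroring means either drive in a pair can fail without losing data.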

Despite their differences, SAN and NAS can be combined into a hybrid system that offers both file-level protocols (NAS) and block-level protocols (SAN). The trend toward storage virtualization, which abstracts logical storage from physical storage, is making the distinction between SAN and NAS less and less important.

Conclusion

As cloud computing becomes pervasive, the traditional data center that located all major components in the same physical space has been evolving toward its next generation, in which redundancy and high-speed communications are more important than ever. Although silicon photonics holds the promise of speed-of-light data transfers between subsystems in the future, today’s design engineers have to optimize every aspect of server, storage, communications, and computing technology.

Advances continue to be made in multicore processors that implement multithreaded computing architectures. Finding solutions to signal integrity issues is an ongoing challenge with technologies such as re-drivers. Meanwhile, cloud computing has initiated major changes in storage technology, both from the point of view of capacity and architecture.

As the cloud continues to grow in importance across the spectrum of computing and communications technologies, it will both drive and benefit from a raft of innovations, enabling applications such as social media and mobile computing.
