By Sally Ward-Foxton, contributing writer

While the acceleration of AI and ML applications is still a relatively new field, there is a variety of processors springing up to accelerate almost any neural network workload. From the processor giants down to some of the newest startups in the industry, all offer something different — whether that’s targeting different vertical markets, application areas, power budgets, or price points. Here is a snapshot of what’s on the market today.

Application processors

Intel Movidius Myriad X

Developed by Irish startup Movidius, which Intel acquired in 2016, the Myriad X is the company’s third-generation vision-processing unit and the first to feature a dedicated neural network compute engine, offering 1 trillion operations per second (TOPS) of dedicated deep neural network (DNN) compute. The neural compute engine interfaces directly with a high-throughput intelligent memory fabric to avoid memory bottlenecks when transferring data. It supports FP16 and INT8 calculations. The Myriad X also features a cluster of 16 proprietary SHAVE cores and upgraded, expanded vision accelerators.
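Supporting both FP16 and INT8 lets developers trade numerical precision for efficiency. The sketch below compares the rounding error of each format on a few made-up weights. The INT8 path assumes simple symmetric per-tensor quantization, a common scheme that is not necessarily what Intel’s tools use.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def quantize_int8(values, scale):
    """Symmetric INT8 quantization: q = round(x / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize_int8(q, scale):
    """Map INT8 codes back to real values."""
    return [qi * scale for qi in q]

weights = [0.7312, -0.0041, 0.2500, -1.2034]  # made-up example weights
# Assumed per-tensor scale: the largest magnitude maps to code 127.
scale = max(abs(w) for w in weights) / 127

fp16 = [to_fp16(w) for w in weights]
q = quantize_int8(weights, scale)
int8 = dequantize_int8(q, scale)

for w, h, i in zip(weights, fp16, int8):
    print(f"{w:+.4f}  fp16 err={abs(w - h):.2e}  int8 err={abs(w - i):.2e}")
```

FP16 keeps a relative error that shrinks with the value, while INT8 error is bounded by half the quantization step, which is why small weights suffer most under INT8.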

The Myriad X is available in Intel’s Neural Compute Stick 2, effectively an evaluation platform in the form of a USB thumb drive. It can be plugged into any workstation to allow AI and computer-vision applications to be up and running on the dedicated Movidius hardware very quickly.

NXP Semiconductors i.MX 8M Plus

The i.MX 8M Plus is a heterogeneous application processor featuring dedicated neural network accelerator IP from VeriSilicon (Vivante VIP8000). It offers 2.3 TOPS of acceleration for inference in endpoint devices in the consumer and industrial internet of things (IIoT), enough for identifying multiple objects, recognizing speech with a 40,000-word vocabulary, or even medical imaging; the accelerator can run MobileNet v1 at 500 images per second.
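A quick sanity check shows how much of the 2.3-TOPS rating the MobileNet v1 figure uses. The sketch assumes the commonly cited cost of about 569 million multiply-accumulates (MACs) per 224 × 224 inference for full-width MobileNet v1, and counts each MAC as two operations. Real utilization also depends on memory bandwidth and layer shapes, so treat the result as a rough estimate.

```python
def required_tops(macs_per_inference: float, inferences_per_second: float) -> float:
    """Sustained TOPS needed, counting one MAC as two operations (multiply + add)."""
    ops_per_second = 2 * macs_per_inference * inferences_per_second
    return ops_per_second / 1e12

# Assumption: ~569 million MACs per inference (MobileNet v1, 1.0 width, 224x224 input).
MOBILENET_V1_MACS = 569e6
NPU_PEAK_TOPS = 2.3  # i.MX 8M Plus accelerator rating from the text

needed = required_tops(MOBILENET_V1_MACS, 500)
print(f"needed: {needed:.3f} TOPS, {needed / NPU_PEAK_TOPS:.0%} of peak")
```

Under these assumptions, 500 images per second needs roughly a quarter of the accelerator’s peak rating.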

In addition to the neural network processor, the i.MX 8M Plus also features a quad-core Arm Cortex-A53 subsystem running at 2 GHz, plus a Cortex-M7 real-time subsystem.

For vision applications, there are two image signal processors that support two high-definition cameras for stereo vision or a single 12-megapixel (MP) camera. For voice, the device includes an 800-MHz HiFi4 audio digital signal processor (DSP) for pre- and post-processing of voice data.

NXP’s i.MX 8M Plus is the company’s first application processor with a dedicated neural network accelerator. It’s designed for IoT applications. (Image: NXP Semiconductors)

XMOS xcore.ai

The xcore.ai is designed to enable voice control in artificial intelligence of things (AIoT) applications. A crossover processor, combining the performance of an application processor with the low-power, real-time operation of a microcontroller, it targets machine-learning inference on voice signals.

It is based on XMOS’s proprietary Xcore architecture, which is built from blocks called logical cores that can be used for I/O, DSP, control functions, or AI acceleration. Each xcore.ai chip has 16 of these cores, and designers can choose how many to allocate to each function. Mapping different functions to the logical cores in firmware creates a “virtual SoC,” written entirely in software. XMOS has added vector pipeline capability to the Xcore for machine-learning workloads.
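The “virtual SoC” idea amounts to budgeting a fixed pool of logical cores across functions. The sketch below models that budget check in plain Python. The function name, the category labels, and the voice-interface split are all hypothetical and are not part of XMOS’s tools or APIs.

```python
TOTAL_LOGICAL_CORES = 16  # logical cores per xcore.ai chip, per the text

def check_allocation(plan: dict[str, int], total: int = TOTAL_LOGICAL_CORES) -> int:
    """Check a hypothetical function-to-core mapping; return the number of spare cores."""
    if any(n < 0 for n in plan.values()):
        raise ValueError("core counts must be non-negative")
    used = sum(plan.values())
    if used > total:
        raise ValueError(f"plan uses {used} cores but only {total} exist")
    return total - used

# Hypothetical voice-interface "virtual SoC"; the split is illustrative only.
voice_plan = {
    "io": 4,       # e.g. microphone and USB interfaces
    "dsp": 5,      # e.g. beamforming and echo cancellation
    "control": 2,  # application logic
    "ai": 4,       # keyword-spotting inference on the vector unit
}
spare = check_allocation(voice_plan)
print(f"{spare} logical core(s) unallocated")
```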

The xcore.ai supports 32-bit, 16-bit, 8-bit, and 1-bit (binarized) networks, delivering 3,200 MIPS, 51.2 GMACCs (billion multiply-accumulates per second), and 1,600 MFLOPS. It has 1 Mbyte of embedded SRAM plus a low-power DDR interface for expansion.
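Binarized (1-bit) networks are attractive on hardware like this because a dot product between vectors of +1/−1 values reduces to bitwise operations. The sketch below shows the standard XNOR-popcount formulation, using Python integers as bit vectors (bit 1 encodes +1, bit 0 encodes −1). It illustrates the general technique, not XMOS’s implementation.

```python
def pack(values):
    """Pack a list of +1/-1 values into an int; bit i is set when values[i] == +1."""
    bits = 0
    for i, v in enumerate(values):
        if v == 1:
            bits |= 1 << i
    return bits

def binary_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two n-element +1/-1 vectors via XNOR and popcount."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask  # XNOR: 1 where the signs match
    matches = bin(agree).count("1")    # popcount
    return 2 * matches - n             # matches add +1, mismatches add -1

a = [1, -1, -1, 1, 1, -1, 1, 1]
b = [1, 1, -1, -1, 1, -1, -1, 1]
print(binary_dot(pack(a), pack(b), len(a)))  # → 2, same as sum(x * y for x, y in zip(a, b))
```

One XOR, one NOT, and one popcount replace n multiplications and additions, which is where the efficiency of binarized networks comes from.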

XMOS’s xcore.ai is based on a proprietary architecture and is designed specifically for AI workloads in voice-processing applications. (Image: XMOS)

Automotive SoC

Texas Instruments Inc. TDA4VM

Part of the Jacinto 7 series for automotive advanced driver-assistance systems (ADAS), the TDA4VM is TI’s first system-on-chip (SoC) with a dedicated on-chip deep-learning accelerator. This block combines the C7x DSP with an in-house-developed matrix multiply accelerator (MMA) and can achieve 8 TOPS.
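Peak TOPS ratings like this one generally come from multiplying the number of MACs an array completes per cycle by its clock frequency, counting each MAC as two operations. The values below (4,096 8-bit MACs per cycle at 1 GHz) are assumptions for illustration, not specifications quoted here, but they show how a figure of about 8 TOPS arises.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float) -> float:
    """Peak throughput in TOPS, counting each multiply-accumulate as two operations."""
    return 2 * macs_per_cycle * clock_hz / 1e12

# Assumed values for illustration only.
print(f"{peak_tops(4096, 1.0e9):.2f} TOPS")
```

Sustained throughput on real networks is lower, because not every layer keeps the whole array busy.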

The SoC can handle a video stream from a front-mounted camera of up to 8 MP, or a combination of four to six 3-MP cameras plus radar, LiDAR, and ultrasonic sensors. The MMA might be used to perform sensor fusion on these inputs in an automated valet parking system, for example. The TDA4VM is designed for ADAS systems with power budgets of 5 to 20 W.

The device is still in pre-production, but development kits are available now.

The TI TDA4VM is intended for complex automotive ADAS systems that allow vehicles to perceive their environments. (Image: Texas Instruments Inc.)

GPU

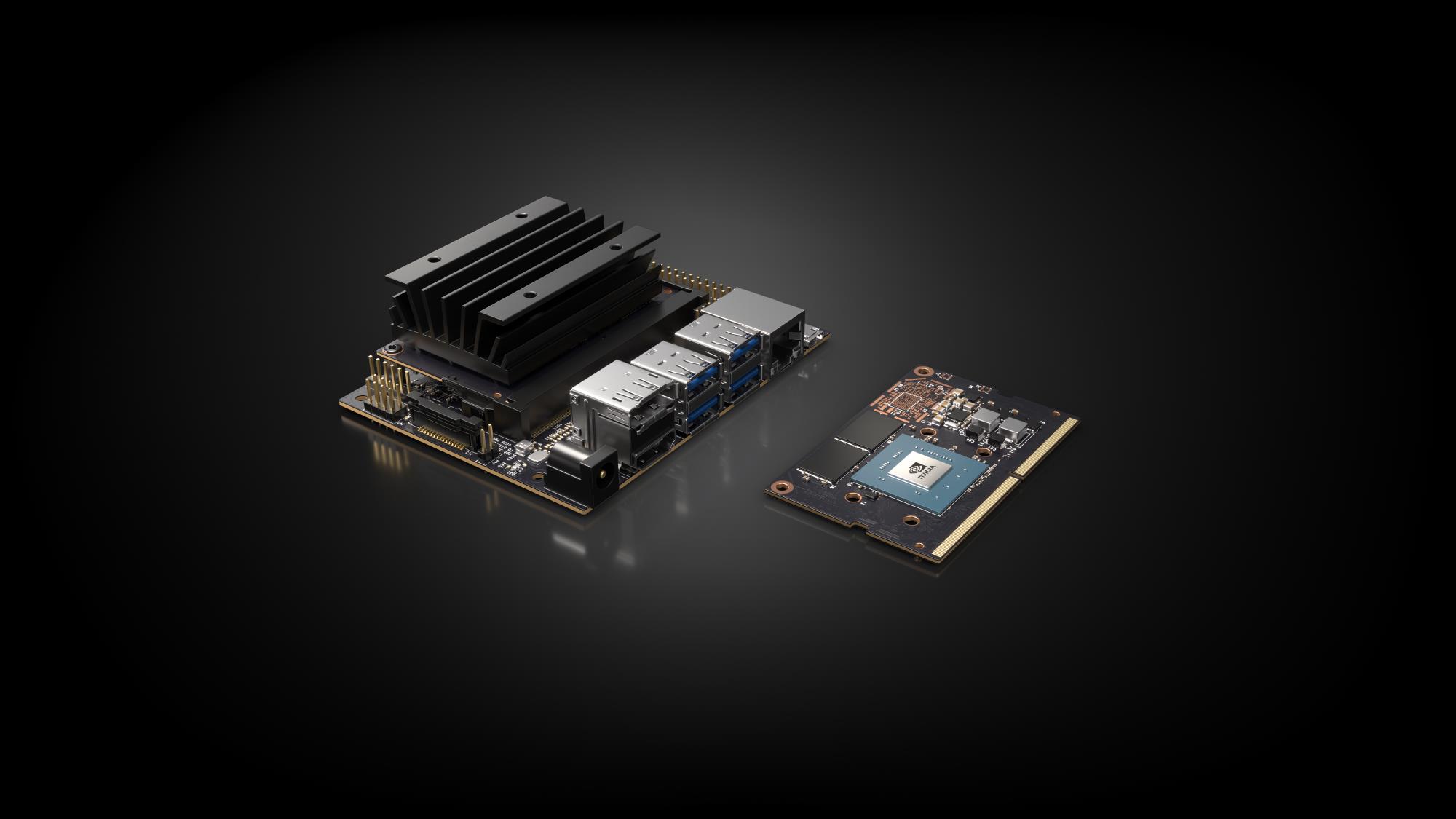

Nvidia Corp. Jetson Nano

Nvidia’s well-known Jetson Nano is a small but powerful module built around a graphics processing unit (GPU) for AI applications in endpoint devices. Its GPU is based on Nvidia’s Maxwell architecture (the larger Jetson TX2 and AGX Xavier use the newer Pascal and Volta architectures, respectively), has 128 cores, and is capable of 0.5 TFLOPS, enough to run multiple neural networks on several streams of data from high-resolution image sensors, according to the company. It consumes as little as 5 W when in use. The module also features a quad-core Arm Cortex-A57 CPU.
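The 0.5-TFLOPS figure can be reproduced with a simple peak calculation. The sketch assumes a maximum GPU clock of 921.6 MHz, one fused multiply-add (two floating-point operations) per core per cycle in FP32, and twice that rate for half-precision (FP16) math; these are assumptions rather than figures from this article.

```python
def gpu_peak_gflops(cores: int, clock_mhz: float, flops_per_core_per_cycle: int) -> float:
    """Peak GFLOPS = cores x clock x floating-point operations per core per cycle."""
    return cores * clock_mhz * 1e6 * flops_per_core_per_cycle / 1e9

CORES = 128        # from the text
CLOCK_MHZ = 921.6  # assumed maximum GPU clock

fp32 = gpu_peak_gflops(CORES, CLOCK_MHZ, 2)  # one FMA = 2 FLOPs per cycle
fp16 = gpu_peak_gflops(CORES, CLOCK_MHZ, 4)  # assumed 2x rate for FP16
print(f"FP32 ~{fp32:.0f} GFLOPS, FP16 ~{fp16:.0f} GFLOPS")
```

Under these assumptions the FP16 peak comes to about 472 GFLOPS, which rounds to the quoted 0.5 TFLOPS.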

Like other parts in Nvidia’s range, the Jetson Nano supports CUDA-X, Nvidia’s collection of acceleration libraries, including libraries for neural networks. Inexpensive Jetson Nano development kits are widely available.

Nvidia’s Jetson Nano module houses a powerful GPU with 128 cores for AI at the edge. (Image: Nvidia Corp.)

Consumer co-processors

Kneron Inc. KL520

The first offering from American-Taiwanese startup Kneron is the KL520 neural network processor, designed for image processing and facial recognition in applications such as smart homes, security systems, and mobile devices. It’s optimized to run convolutional neural networks (CNNs), the type commonly used in image processing today.

The KL520 delivers 0.3 TOPS while consuming 0.5 W (equivalent to 0.6 TOPS/W), which the company said is sufficient for accurate facial recognition because the chip’s MAC efficiency is high (over 90%). The chip architecture is reconfigurable and can be tailored to different CNN models. The company’s complementary compiler also uses compression techniques to fit bigger models within the chip’s resources, helping to save power and cost.
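MAC efficiency matters because a peak TOPS rating assumes every multiply-accumulate unit is busy on every cycle. The sketch below treats effective throughput as peak TOPS multiplied by MAC utilization. The second chip is purely hypothetical, included only to show how a higher peak rating can deliver less in practice.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency figure: throughput divided by power."""
    return tops / watts

def effective_tops(peak_tops: float, mac_efficiency: float) -> float:
    """Throughput delivered when only a fraction of MAC slots do useful work."""
    return peak_tops * mac_efficiency

# KL520 figures from the text; 90% is the lower bound of the quoted MAC efficiency.
print(f"KL520: {tops_per_watt(0.3, 0.5):.1f} TOPS/W, "
      f"~{effective_tops(0.3, 0.90):.2f} effective TOPS")

# Hypothetical comparison chip: higher peak rating, poorer utilization.
print(f"Hypothetical chip: ~{effective_tops(0.5, 0.40):.2f} effective TOPS")
```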

The KL520 is available now and can also be found on an accelerator card from manufacturer AAEON (the M2AI-2280-520).

Kneron’s KL520 uses a reconfigurable architecture and clever compression to run image processing in mobile and consumer devices. (Image: Kneron Inc.)

Gyrfalcon Lightspeeur 5801

Designed for the consumer electronics market, Gyrfalcon’s Lightspeeur 5801 offers 2.8 TOPS at 224-mW power consumption (roughly 12.5 TOPS/W) with 4-ms latency. The company uses a processor-in-memory technique that is particularly power-efficient compared with other architectures. Power consumption can be traded off against performance by varying the clock speed between 50 and 200 MHz. The Lightspeeur 5801 contains 10 MB of memory, so entire models can fit on the chip.
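The quoted figures also give an energy cost per inference. The sketch assumes the 224-mW and 4-ms figures apply together at the 200-MHz maximum clock, and uses a deliberately simple, hypothetical model of clock scaling in which power is proportional to frequency and latency inversely proportional to it, with voltage held fixed.

```python
def tops_per_watt(tops: float, power_mw: float) -> float:
    """Efficiency figure: throughput divided by power in watts."""
    return tops / (power_mw / 1000)

def energy_per_inference_mj(power_mw: float, latency_ms: float) -> float:
    """Energy in millijoules (mW x ms = uJ), assuming constant power during inference."""
    return power_mw * latency_ms / 1000

def scale_with_clock(power_mw: float, latency_ms: float, f_ref_mhz: float, f_new_mhz: float):
    """Hypothetical linear model at fixed voltage: power ~ f, latency ~ 1/f."""
    ratio = f_new_mhz / f_ref_mhz
    return power_mw * ratio, latency_ms / ratio

# Quoted figures; assumed (not stated) to apply at the 200-MHz maximum clock.
P_MW, LAT_MS = 224.0, 4.0

print(f"{tops_per_watt(2.8, P_MW):.1f} TOPS/W")
print(f"200 MHz: {energy_per_inference_mj(P_MW, LAT_MS):.3f} mJ per inference")

p50, lat50 = scale_with_clock(P_MW, LAT_MS, 200, 50)
print(f"50 MHz: {p50:.0f} mW, {lat50:.0f} ms, "
      f"{energy_per_inference_mj(p50, lat50):.3f} mJ per inference")
```

Under this simplified model, dropping the clock to 50 MHz cuts power by a factor of four but leaves energy per inference unchanged, which suits designs with a hard power ceiling. Static leakage, which the model ignores, would make slower operation cost somewhat more energy in practice.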

This part is the company’s fourth production chip and is already found in LG’s Q70 mid-range smartphone, where it handles inference for camera effects. A USB thumb drive development kit, the 5801 Plai Plug, is available now.

Ultra-low-power

Eta Compute ECM3532

Eta Compute’s first production product, the ECM3532, is designed for AI acceleration in battery-powered or energy-harvesting designs for IoT. Always-on applications in image processing and sensor fusion can be achieved with a power budget as low as 100 µW.
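To put a 100-µW budget in perspective, a back-of-the-envelope runtime estimate helps. The sketch assumes a CR2032-class coin cell holding roughly 225 mAh at 3 V nominal, and ignores self-discharge, regulator losses, and every other load in the system, so the result is an optimistic upper bound.

```python
def battery_life_hours(capacity_mah: float, voltage_v: float, load_uw: float) -> float:
    """Ideal runtime: stored energy (mWh) divided by average load (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / (load_uw / 1000)

# Assumed coin cell: ~225 mAh at 3 V nominal.
hours = battery_life_hours(225, 3.0, 100)
print(f"{hours:.0f} h, about {hours / 24:.0f} days")
```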

The chip has two cores: an Arm Cortex-M3 microcontroller core and an NXP CoolFlux DSP. The company uses a proprietary voltage and frequency scaling technique, which adjusts every clock cycle, to minimize the power consumed by both cores. Machine-learning workloads can be processed by either core (some voice workloads, for example, are better suited to the DSP).
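Eta Compute’s per-cycle scheme is proprietary, but the reason voltage and frequency scaling saves power follows from the textbook CMOS dynamic-power relation P = C·V²·f. The sketch below uses made-up capacitance and operating points to show that lowering frequency alone cuts power, while lowering voltage also cuts the energy spent per cycle.

```python
def dynamic_power_w(c_eff_f: float, v: float, f_hz: float) -> float:
    """CMOS switching power, P = C_eff * V^2 * f (activity folded into C_eff)."""
    return c_eff_f * v ** 2 * f_hz

def energy_per_cycle_j(c_eff_f: float, v: float) -> float:
    """Switching energy per clock cycle, E = C_eff * V^2; independent of frequency."""
    return c_eff_f * v ** 2

C_EFF = 1e-10  # hypothetical effective switched capacitance (farads)
points = {"fast": (1.1, 100e6), "slow": (0.6, 25e6)}  # hypothetical (volts, hertz)

for name, (v, f) in points.items():
    p = dynamic_power_w(C_EFF, v, f)
    e = energy_per_cycle_j(C_EFF, v)
    print(f"{name}: {p * 1e3:.2f} mW, {e * 1e12:.0f} pJ/cycle")
```

The slow point takes four times as many seconds per task, yet each cycle costs about a third of the energy, because energy scales with the square of the voltage.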

Samples of the ECM3532 are available now and mass production is expected to start in Q2 2020.

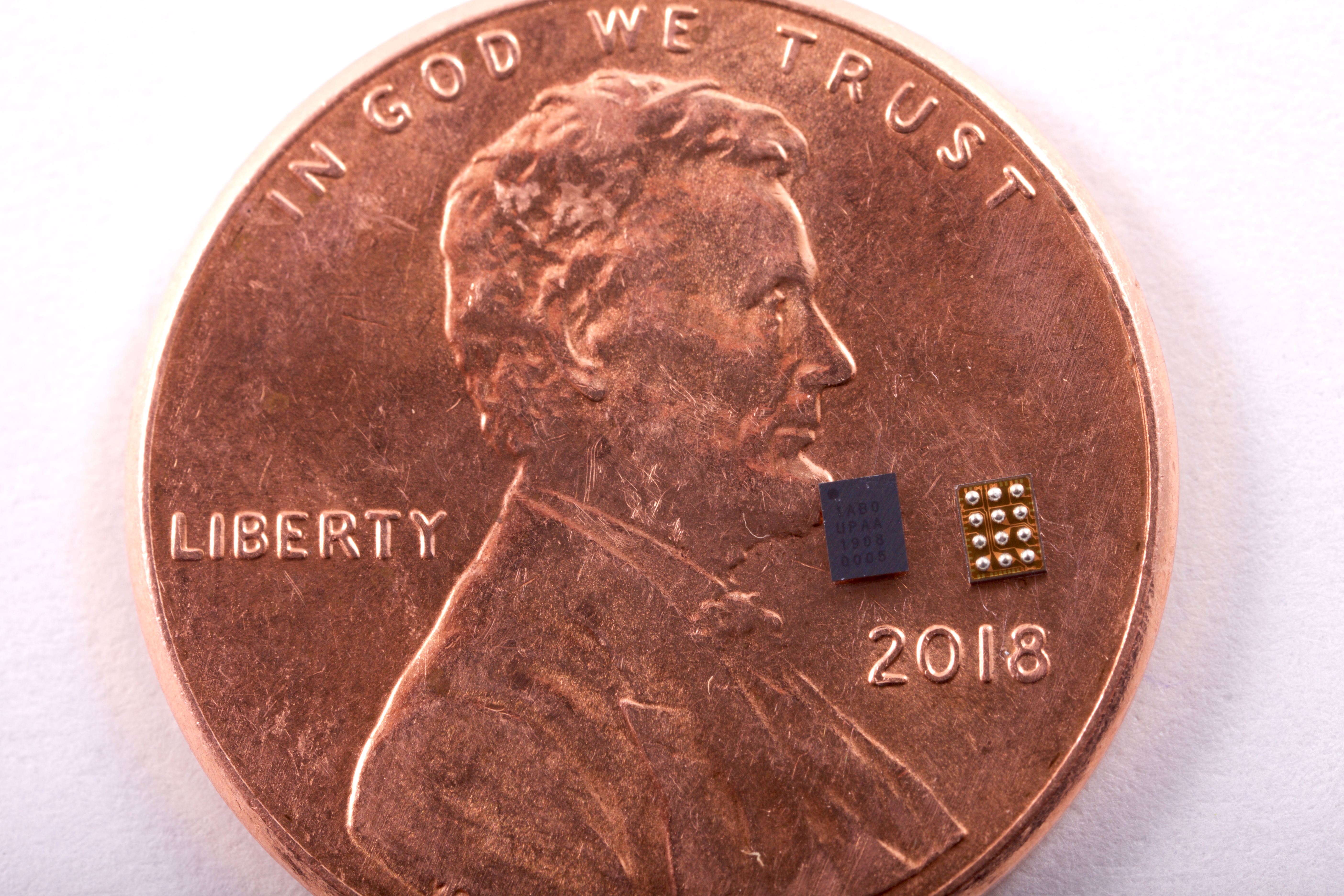

Syntiant Corp. NDP100

U.S. startup Syntiant’s NDP100 processor is designed for machine-learning inference on voice commands in power-constrained applications. Its processor-in-memory-based silicon consumes less than 140 µW of active power and can run models for keyword spotting, wake-word detection, speaker identification, or event classification. The company says that this product will be used to enable hands-free operation of consumer devices such as earbuds, hearing aids, smartwatches, and remote controls. Development kits are available now.
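Keyword spotting and wake-word detection typically pair a small neural network, which scores each short audio frame, with simple post-processing that decides when to fire. The sketch below shows one common post-processing approach: smoothing per-frame scores with a moving average and triggering once per threshold crossing. The window, threshold, and scores are hypothetical, and this is not a description of how the NDP100 works internally.

```python
from collections import deque

def detect_wake_word(posteriors, window: int = 3, threshold: float = 0.8):
    """Return frame indices where the moving-average score first crosses the threshold."""
    recent = deque(maxlen=window)
    triggers = []
    armed = True  # re-arm only after the smoothed score drops below the threshold
    for i, p in enumerate(posteriors):
        recent.append(p)
        avg = sum(recent) / len(recent)
        if armed and len(recent) == window and avg >= threshold:
            triggers.append(i)
            armed = False
        elif avg < threshold:
            armed = True
    return triggers

# Made-up per-frame probabilities that the wake word is present.
scores = [0.05, 0.10, 0.95, 0.20, 0.10, 0.70, 0.90, 0.95, 0.92, 0.30, 0.05]
print(detect_wake_word(scores))  # → [7]
```

Here the isolated spike at frame 2 is ignored, while the sustained run of high scores triggers a single detection at frame 7.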

Syntiant’s NDP100 device is designed for voice processing in ultra-low-power applications. (Image: Syntiant Corp.)

GreenWaves Technologies GAP9

GAP9, the second-generation ultra-low-power application processor from French startup GreenWaves (following GAP8), has a powerful compute cluster of nine RISC-V cores whose instruction set has been heavily customized to minimize power consumption. It features bidirectional multi-channel audio interfaces and 1.6 MB of internal RAM.

GAP9 can handle neural network workloads for images, sounds, and vibration sensing in battery-powered IoT devices. According to GreenWaves, GAP9 runs MobileNet V1 on 160 × 160 images with a channel scaling of 0.25 in just 12 ms, with a power consumption of 806 μW/frame/second (equivalent to 806 μJ per frame).
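Because a power figure per frame-per-second is dimensionally an energy per frame, the average power of a continuous workload scales with frame rate. The sketch below converts GreenWaves’ figures into average inference power at a few example frame rates, assuming the 806 µJ/frame figure holds at every rate and ignoring sleep and idle power.

```python
ENERGY_PER_FRAME_UJ = 806  # 806 uW per (frame/s) is dimensionally 806 uJ per frame
LATENCY_S = 0.012          # 12 ms per inference, from the quoted figures

def average_power_mw(fps: float) -> float:
    """Average inference power at a given frame rate, ignoring idle/sleep power."""
    max_fps = 1 / LATENCY_S
    if fps > max_fps:
        raise ValueError(f"{fps} fps exceeds the ~{max_fps:.0f} fps the latency allows")
    return ENERGY_PER_FRAME_UJ * fps / 1000

for fps in (1, 10, 50):
    print(f"{fps:>2} fps -> {average_power_mw(fps):.2f} mW")
```

The 12-ms latency caps continuous operation at roughly 83 frames per second, so the function rejects faster rates.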

