BY BENJAMIN M. BROSGOL, Senior Technical Staff

AdaCore

www.adacore.com

In a suspenseful scene from the 1976 movie “Marathon Man,” a sadistic Nazi dentist (Sir Laurence Olivier) poses menacingly with his assorted instruments. Referring to a cache of stolen diamonds that he has come to collect, Olivier repeatedly asks his terrified captive (Dustin Hoffman), “Is it safe?” Hoffman has no idea what this means, but his ignorance is far from bliss as he suffers the unanesthetized consequences.

As producers of modern software, we also need to confront the question, “Is it safe?” Not knowing the answer could easily lead to disaster — even if it doesn’t bring dental torture. Actually, the question for software developers is not “Is it safe?” but, rather, “Is it safe enough?” Safety is not a Boolean condition; it’s a continuum reflecting our confidence that the system’s safety properties have been satisfied. And safety implies security, since vulnerabilities can be exploited to produce unacceptable hazards. At the highest assurance levels, we need correspondingly high confidence that our software does what it’s supposed to do and does not do what it’s not supposed to do.

Software safety and security have traditionally received varying degrees of attention in the electronic products arena. Close attention to these requirements is obviously essential for medical devices; nevertheless, significant lapses have sometimes occurred, causing injury or death. In the Therac-25 radiation therapy machine incidents in the 1980s, a “race condition” between concurrent activities caused massive overdoses of radiation to be administered, and several years ago, software defects in insulin infusion pumps also resulted in improper and sometimes lethal dosages.

Safety and security requirements for software are fairly new in the more general area of small electronic products. Previously, the software in such systems was relatively simple, the devices were typically standalone, and safety was enforced through mechanical means. But nowadays, the software is performing increasingly complicated functions, and “smart” devices connected to the internet can be remotely controlled.

This article highlights the major issues that software developers need to confront, with a focus on the DO-178C certification standard (Software Considerations in Airborne Systems and Equipment Certification) as an example of one industry’s approach. The guidance in DO-178C is not specific to aviation, and the article suggests how it can be adapted to other domains in which high assurance is required.

The big picture

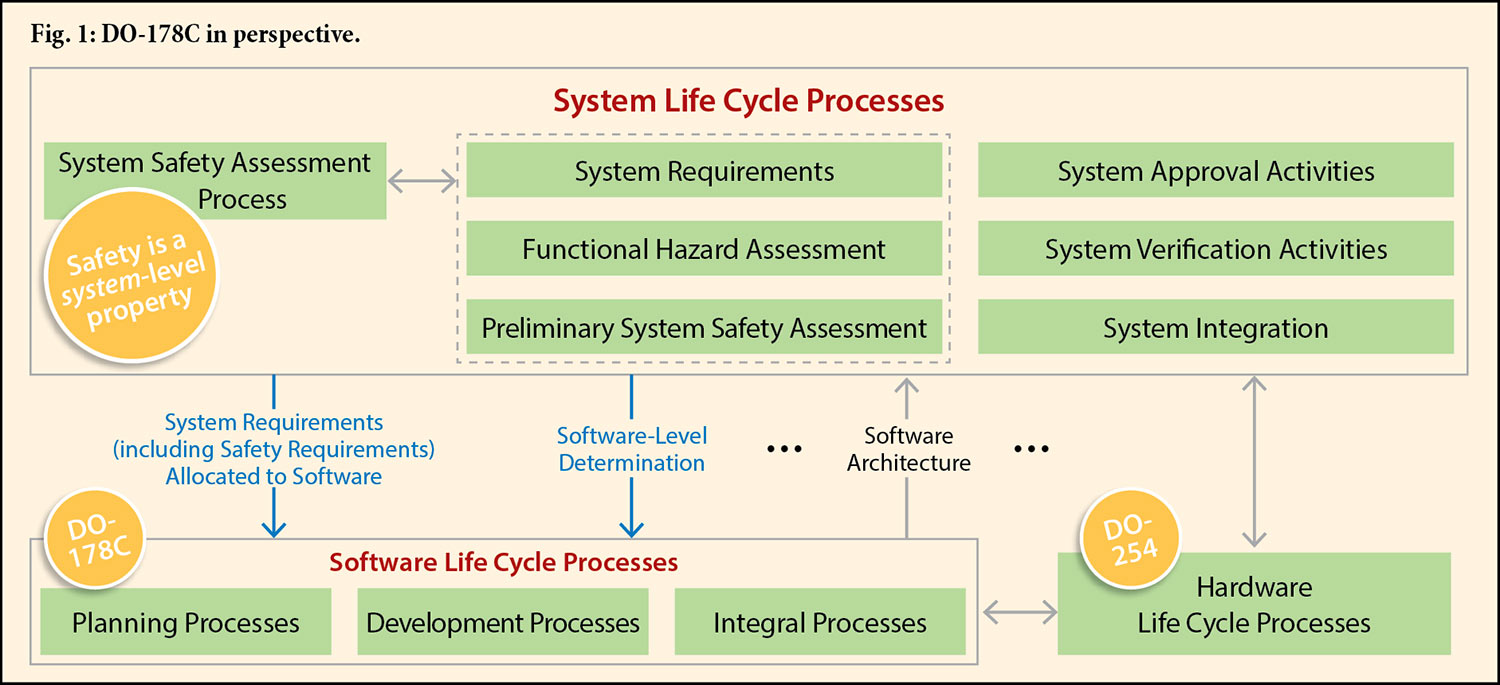

Fig. 1, abstracted from a figure in DO-178C, shows the relationship between the system life cycle processes and the software and hardware life cycle processes. The key takeaway is that safety (and, implicitly, security) requirements and responsibilities must be determined at the system level but with feedback from the other life cycle processes. Each software component has a corresponding software level (also referred to as a Development Assurance Level, or DAL) based on the impact of that component’s anomalous behavior on the continued safe operation of the aircraft. As noted in Rick Hearn’s article on DO-254 in the October 2016 issue of Electronic Products, Level A is the most demanding because anomalous behavior could produce a catastrophic failure with a resulting loss of the aircraft.
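DO-178C defines five software levels, A through E. Each level corresponds to the most severe failure condition that the component's anomalous behavior could cause. As a rough illustration, that mapping can be kept as a lookup table in a project's tooling. The Python sketch below assumes its own names (`Dal`, `dal_for`, `STRUCTURAL_COVERAGE`), which are hypothetical. The coverage column summarizes the structural coverage objectives discussed later in this article.

```python
from enum import Enum
from typing import Dict, Optional


class Dal(Enum):
    """DO-178C software levels, valued by failure condition category."""
    A = "Catastrophic"
    B = "Hazardous/Severe-Major"
    C = "Major"
    D = "Minor"
    E = "No Safety Effect"


# Most demanding structural coverage criterion expected at each level;
# None means no structural coverage objective applies.
STRUCTURAL_COVERAGE: Dict[Dal, Optional[str]] = {
    Dal.A: "MC/DC",
    Dal.B: "decision",
    Dal.C: "statement",
    Dal.D: None,
    Dal.E: None,
}


def dal_for(failure_condition: str) -> Dal:
    """Look up the software level for a failure condition category."""
    wanted = failure_condition.strip().lower()
    for level in Dal:
        if level.value.lower() == wanted:
            return level
    raise ValueError(f"unknown failure condition category: {failure_condition!r}")


print(dal_for("catastrophic").name)           # A
print(STRUCTURAL_COVERAGE[dal_for("Major")])  # statement
```

The level is assigned by the system safety assessment, not by the software team. A table like this only records that decision so that downstream checks can use it.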

A number of standards provide a recommended practice for conducting the system life cycle processes — for example, ARP 4754A and IEC 61508. Hazard and safety assessment approaches include those presented in official standards, such as ARP 4761.

A catalog of security functions and assurance requirements may be found in the Common Criteria. The assurance requirements are grouped into Evaluation Assurance Levels (EALs), with the highest level (EAL 7) calling for formal verification of security functions. More specific to aviation, an international working group has prepared DO-326A (Airworthiness Security Process Specification) to “augment current guidance for aircraft certification to handle the threat of intentional unauthorized electronic interaction to aircraft safety.” Such system-level analyses serve as input to the DO-178C processes but, in turn, may be affected by feedback from the DO-178C activities.

Software engineering common sense

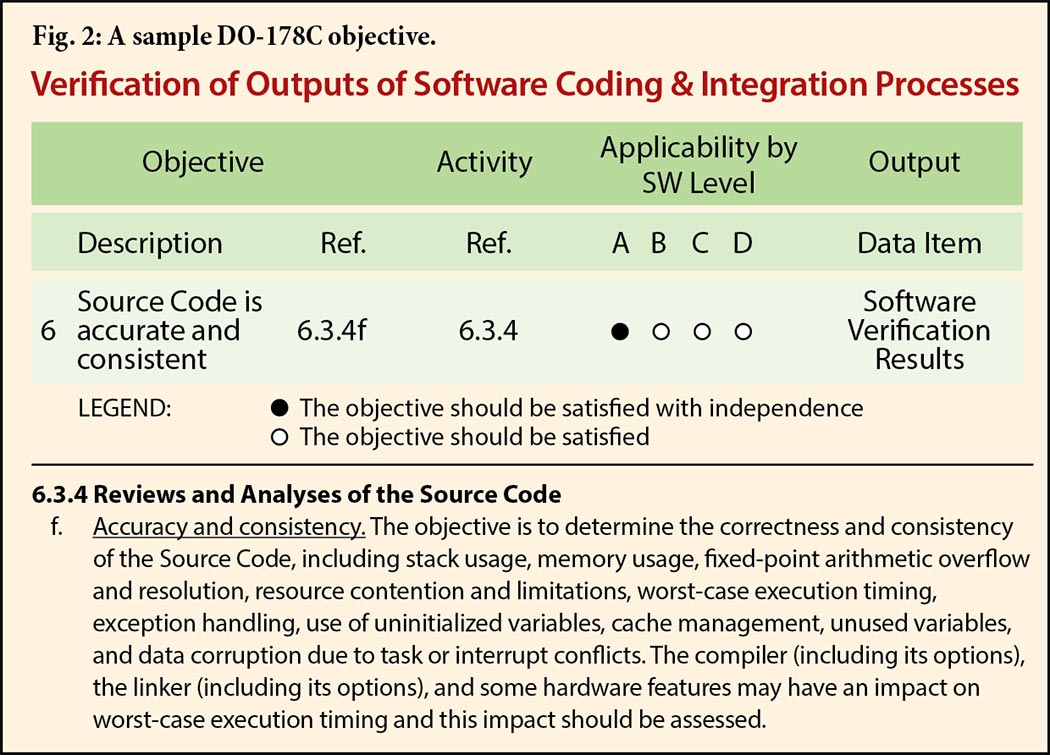

Oriented toward providers of aircraft software, DO-178C contains guidance in the form of specific objectives associated with the various software life cycle processes. A component’s DAL establishes which objectives are relevant, the rigor of the configuration management controls, and whether the parties responsible for meeting an objective and verifying that it has been met need to be independent. The objectives are listed in a set of tables that refer back to the body of the document for details; as an illustration, Fig. 2 shows Objective 6 in Table A-5.

The system provider needs to demonstrate compliance with the objectives by supplying a variety of artifacts (“data items”). These are reviewed by a Designated Engineering Representative (DER) authorized by the national certification authority (in the United States, the FAA). Upon acceptance of the data items, the system is considered to be certified.

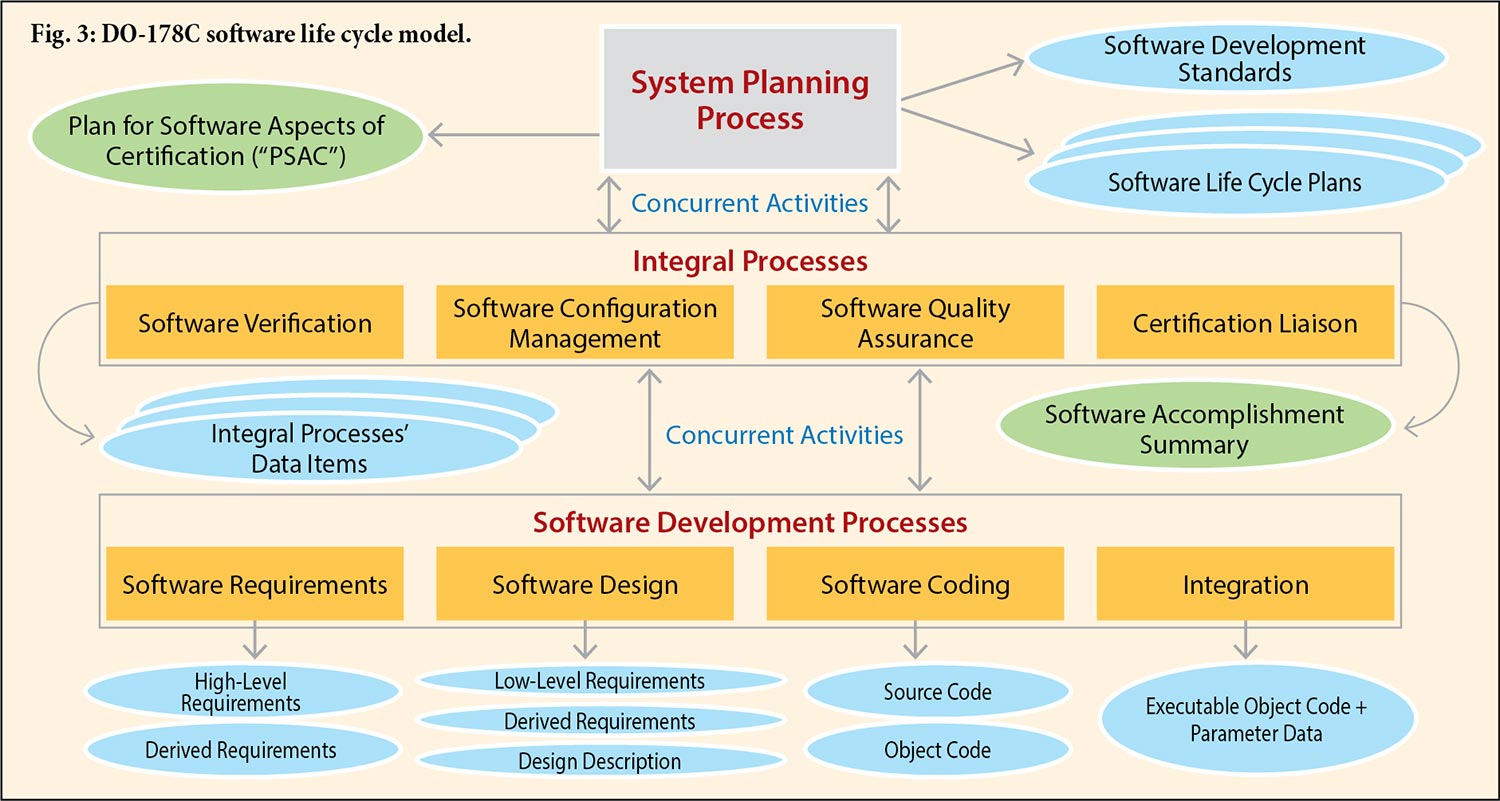

Fig. 3 shows the main elements of DO-178C; namely, the various software life cycle processes comprising planning, development, and several associated “integral” processes. This isn’t rocket science; in essence, the standard is an instantiation of software engineering common sense. Any piece of software is designed and implemented against a set of requirements, so development consists of elaborating the software requirements (both high-level and low-level) from the system requirements and perhaps teasing out additional (“derived”) requirements, specifying the software architecture, and implementing the design. Verification (one of the integral processes) is needed at each step. For example, are the software requirements complete and consistent? Does the implementation satisfy the requirements? Verification involves traceability: each requirement must be traceable to the code that correctly implements it, and in the other direction, each piece of code must be traceable to a requirement.
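Bidirectional traceability lends itself to mechanical checking once requirement identifiers are recorded against code units. The minimal Python sketch below assumes a simple data model: a set of requirement IDs, plus a mapping from each code unit to the IDs it claims to implement. The requirement IDs, function names, and `check_traceability` helper are all hypothetical. The helper reports gaps in both directions, and also trace links that name a requirement which does not exist.

```python
from typing import Dict, Set


def check_traceability(requirements: Set[str],
                       trace: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Check requirement-to-code traceability in both directions.

    requirements: identifiers of all software requirements.
    trace: maps each code unit to the requirement identifiers it implements.
    """
    traced = set().union(*trace.values())
    return {
        # Requirements that no code unit claims to implement.
        "untraced_requirements": requirements - traced,
        # Code units that no requirement calls for.
        "untraced_code": {unit for unit, reqs in trace.items() if not reqs},
        # Links naming a requirement that does not exist.
        "dangling_links": traced - requirements,
    }


requirements = {"LLR-1", "LLR-2", "LLR-3"}
trace = {
    "compute_rate": {"LLR-1"},
    "check_limits": {"LLR-2", "LLR-4"},
    "debug_dump": set(),
}
for kind, items in check_traceability(requirements, trace).items():
    print(kind, sorted(items))
# untraced_requirements ['LLR-3']
# untraced_code ['debug_dump']
# dangling_links ['LLR-4']
```

Running a check like this on every change keeps the trace data honest. Whether each link is actually *correct* still needs human review.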

Complementing the actual development processes are various peripheral activities. The software is not simply a static collection of code, data, and documentation; it evolves in response to detected defects, customer change requests, etc. Thus, the integral processes include Configuration Management and Quality Assurance, and well-defined procedures are critical.

DO-178C is not biased toward or against any particular software development methodology. Although it seems to be oriented toward new development efforts, it’s possible to take an existing system and perform the relevant activities retrospectively to derive the necessary data items. The standard also deals with issues such as incorporation of commercial off-the-shelf (COTS) components and the usage of service history to gain certification credit.

Software verification

The majority of the guidance in DO-178C deals with verification: a combination of reviews, analysis, and testing to demonstrate that the output of each software life cycle process is correct with respect to its input. Of the 71 total objectives for Level A software, 43 apply to verification, and more than half of these concern the source and object code. For this reason, DO-178C is sometimes called a “correctness” standard rather than a safety standard; the principal assurance achieved through certification is confidence that the software meets its requirements. And the emphasis of the code verification objectives is on testing.

But testing has a well-known intrinsic drawback; as quipped by the late computer scientist Edsger Dijkstra, it can show the presence of bugs but never their absence. DO-178C mitigates this issue in several ways:

• Testing is augmented by inspections and analyses to increase the likelihood of detecting errors early.

• Instead of “white box” or unit testing, DO-178C mandates requirements-based testing. Each requirement must have associated tests, exercising both normal processing and error handling, to demonstrate that the requirement is met and that invalid inputs are properly handled. The testing is focused on what the system is supposed to do, not on the overall functionality of each module.

• The source code must be completely covered by the requirements-based tests. “Dead code” (code that is not executed by tests and does not correspond to a requirement) is not permitted.

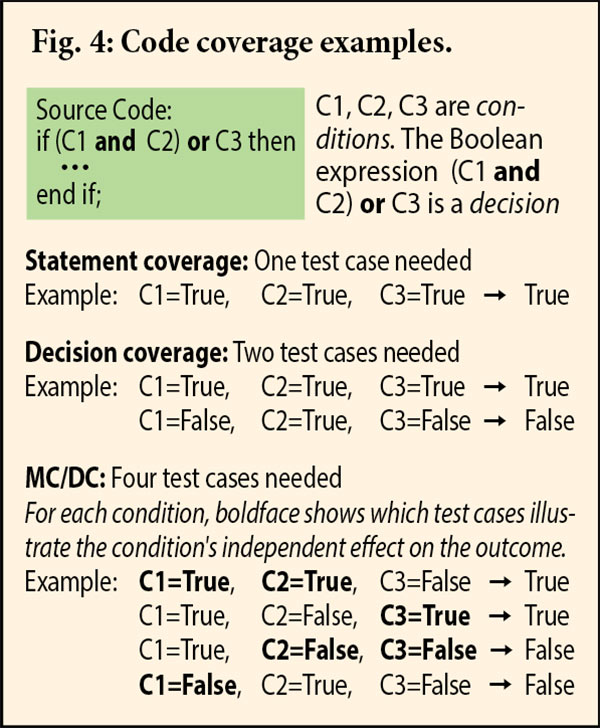

• Code coverage objectives apply at the statement level for DAL C. At higher DALs, finer granularity is required, with coverage also needed at the level of complete Boolean expressions (“decisions”) and their atomic constituents (“conditions”). DAL B requires decision coverage (tests need to exercise both True and False results). DAL A requires modified condition/decision coverage (MC/DC). The set of tests for a given decision must satisfy the following:

o In some test, the decision comes out True, and in some other test, it comes out False;

o For each condition in the decision, the condition is True in some test and False in another; and

o Each condition needs to independently affect the decision’s outcome. That is, for each condition, there must be two tests where:

> the condition is True in one and False in the other,

> the other conditions have the same values in both tests, and

> the result of the decision in the two tests is different.

MC/DC testing detects certain kinds of logic errors that decision coverage alone might miss. Yet it avoids the exponential explosion of tests that would result from requiring every combination of True and False values in a multiple-condition decision: a decision with n conditions needs as few as n + 1 tests rather than 2^n. It also has the benefit of forcing the developer to formulate explicit low-level requirements that will exercise the various conditions.

Fig. 4 shows possible sets of test cases associated with the various levels of source coverage for a sample “if” statement.
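Fig. 4 is not reproduced here. As a stand-in, the following Python sketch checks decision coverage and the MC/DC rules listed above against a sample decision of our own choosing, `A and (B or C)`. The helper names (`decision_covered`, `mcdc_covered`, `sample`) are hypothetical. Each test is a tuple of condition values, and the decision is modeled as a Python function over those values. This is the "unique-cause" form of MC/DC described in the bullets: each independence pair varies exactly one condition.

```python
from itertools import combinations
from typing import Callable, Sequence, Tuple

TestVector = Tuple[bool, ...]
Decision = Callable[..., bool]


def decision_covered(decision: Decision, tests: Sequence[TestVector]) -> bool:
    """Decision coverage: the decision is True in some test and False in another."""
    return {decision(*t) for t in tests} == {True, False}


def mcdc_covered(decision: Decision, n_conditions: int,
                 tests: Sequence[TestVector]) -> bool:
    """Unique-cause MC/DC over the given tests.

    The rule that each condition takes both values is implied by the
    independence rule, because an independence pair flips that condition.
    """
    if not decision_covered(decision, tests):
        return False
    for i in range(n_conditions):
        # Look for two tests that differ only in condition i and
        # produce different decision outcomes.
        has_pair = any(
            a[i] != b[i]
            and all(a[j] == b[j] for j in range(n_conditions) if j != i)
            and decision(*a) != decision(*b)
            for a, b in combinations(tests, 2)
        )
        if not has_pair:
            return False
    return True


def sample(a: bool, b: bool, c: bool) -> bool:
    return a and (b or c)


T, F = True, False
decision_only = [(T, T, F), (F, F, F)]
mcdc_set = [(T, T, F), (F, T, F), (T, F, F), (T, F, T)]

print(decision_covered(sample, decision_only))    # True
print(mcdc_covered(sample, 3, decision_only))     # False
print(mcdc_covered(sample, 3, mcdc_set))          # True
print(len(mcdc_set), "tests instead of", 2 ** 3)  # 4 tests instead of 8
```

The two-test set achieves decision coverage but cannot show that B or C independently affects the outcome. The four-test set can, using n + 1 = 4 tests rather than all 8 combinations.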

Software methodology

Advances in software methodology have presented both opportunities and challenges for verification:

• Model-based development expresses the control logic in a graphical language from which the source code is generated automatically, perhaps mixed with manually produced code. In light of the multiple forms of the program, it’s not clear which objectives apply to which form.

• Object-oriented programming’s principal features — inheritance, polymorphism, and dynamic binding — raise issues with objectives including code coverage and time and space predictability.

• For the highest levels of safety criticality, applying formal methods can help increase assurance (for example, to prove safety properties such as absence of run-time errors) and eliminate the need for some low-level requirements tests. However, it’s not clear how to justify to the certification authorities that formal proofs can replace such tests.

These issues were a major impetus for revising the earlier DO-178B standard, and they are addressed in supplements to DO-178C:

• DO-331 for Model-Based Development and Verification,

• DO-332 for Object-Oriented Technology and Related Techniques, and

• DO-333 for Formal Methods.

Tool qualification

Tools have a range of uses during the software life cycle. Some assist verification, such as checking that the source code complies with a coding standard, computing maximum stack usage, or detecting references to uninitialized variables. Others affect the executable code, such as a code generator for a model-based design. Tools can save significant labor, but we need to have confidence that their output is correct; otherwise, we would have to verify the output manually.

With DO-178C, we gain such confidence through a process called Tool Qualification, which is basically a demonstration that the tool meets its operational requirements. The level of effort that is required — the so-called tool qualification level, or TQL — depends on both the DAL of the software that the tool will be processing and which of several “what-if” scenarios could arise in the presence of a tool defect:

• Criterion 1: The tool output is part of the airborne software, and the tool could insert an error.

• Criterion 2: The tool could fail to detect an error, and its output is used to reduce other development or verification activities.

• Criterion 3: The tool could fail to detect an error.

TQLs range in criticality from 5 (lowest) to 1 (highest). TQL-1 applies to a tool that satisfies Criterion 1 and is used for software at DAL A. Most static code analysis tools will be at TQL-4 or TQL-5.
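The TQL follows from two inputs: which criterion the tool meets, and the DAL of the software it processes. The Python sketch below encodes our reading of the TQL determination table in DO-178C. The helper name `tool_qualification_level` is hypothetical, and the values should be checked against the standard itself before being relied upon. Level E is omitted because DO-178C imposes no objectives on Level E software.

```python
# Our reading of the DO-178C TQL table: keys are the criteria above,
# inner keys are the software level of the code the tool processes.
TQL_TABLE = {
    1: {"A": 1, "B": 2, "C": 3, "D": 4},
    2: {"A": 4, "B": 4, "C": 5, "D": 5},
    3: {"A": 5, "B": 5, "C": 5, "D": 5},
}


def tool_qualification_level(criterion: int, dal: str) -> int:
    """Return the TQL (1 = most rigorous, 5 = least) for a tool."""
    try:
        return TQL_TABLE[criterion][dal.upper()]
    except KeyError:
        raise ValueError(
            f"no TQL defined for criterion {criterion} at DAL {dal!r}"
        ) from None


print(tool_qualification_level(1, "A"))  # 1: e.g. a code generator for DAL A
print(tool_qualification_level(3, "B"))  # 5: a verifier whose output replaces nothing
```

Verification-only tools fall under Criterion 2 or 3 and therefore land at TQL-4 or TQL-5. This is consistent with the observation that most static analysis tools sit at those levels.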

An auxiliary standard, DO-330 (Tool Qualification Considerations), defines the specific objectives, activities, and data items associated with the various TQLs. DO-330 can be used in conjunction with other software standards; it is not specific to DO-178C.

What’s the catch?

Although there have been some close calls, to date there have been no commercial aircraft accidents with loss of life where the software has been found at fault. So DO-178C and its predecessor, DO-178B, appear to be effective. Nevertheless, some questions have arisen.

Does correctness imply safety? As noted above, DO-178C is really a correctness standard, offering confidence that the software meets its requirements. How does that translate into overall system safety and the resulting low probability of failure? A paper by John Rushby argued that the successful track record for airborne systems may be credited not so much to the software standard as to the corporate safety culture in that industry and the relatively infrequent changes in the technology. Use of the DO-178C guidance by other industries might not yield the same benefits.

The relationship between software correctness and system safety is indeed not so straightforward; many factors affect the overall safety of an aircraft or any other computer-based system (for example, and perhaps most obviously, the training and skills of the operators). However, even though code correctness clearly is not sufficient for achieving safety, it is necessary in situations such as fly-by-wire, where there is no backup “Plan B.” Even ignoring safety issues for a moment, faulty software in many industries can lead to expensive recalls that incur a direct financial cost as well as damage to a company’s reputation. The bottom line: principles and practices that give confidence in code correctness are worth following because they can help save lives and money.

Is the certification process unnecessarily heavy? System certification is not cheap. Attention should be focused on the area where the most significant problems appear; namely, at the requirements stage rather than at the coding level. A natural question is whether the advantages of DO-178C can be achieved at a lower cost. This cost versus benefit question has been raised by both systems suppliers and the U.S. Congress because the safety certification process for airborne software has been perceived as being overly complicated for new entrants and yet failing to adequately address how to deal with new methods and technologies. A working group tasked by the FAA to investigate this issue conducted a Streamlining Assurance Processes Workshop in September 2016, where they presented three high-level “overarching properties” that may form the basis of a simpler certification approach:

• Intent — The defined intended functions are correct and complete with respect to the desired system behavior.

• Correctness — The implementation is correct with respect to its defined intended functions under foreseeable operating conditions.

• Necessity — All of the implementation is either required by the defined intended function or is without unacceptable safety impact.

This work in progress may lead to an alternative framework for certifying airborne software.

Lessons learned

In summary, the guidance in DO-178C serves mainly to provide confidence that the software meets its requirements — not to validate that the requirements, in fact, reflect the system’s intended functionality. So getting the code correct is not sufficient, but it is necessary, and many of the benefits of the DO-178C approach can be realized without going through the effort of a formal certification. Here are some suggestions:

• Pay attention to the full software life cycle. Source version control and configuration management might not seem sexy, but they are essential. Implement source check-in “hooks” (for example, invoke a static analysis tool to enforce some aspects of code quality) and regularly schedule full regression test runs. Implement processes for dealing with defect reports and change control, so that software updates can be supplied without introducing new problems. Make sure that known problems have workarounds.

• Review the objectives in DO-178C and decide which ones to aim for. In the absence of official certification, there is no need to produce the various data items, but if an objective is intentionally omitted, make sure that you understand the reasons for the omission and the possible consequences of failing to comply.

• Use a programming language that detects potential problems early. As stated in Section 4.4 of DO-178C:

The basic principle is to choose requirements development and design methods, tools, and programming languages that limit the opportunity for introducing errors and verification methods that ensure that errors introduced are detected.

The Ada programming language is particularly applicable because it was designed to promote sound software engineering practice, and it enforces checks that guard against weaknesses such as “buffer overrun” and integer overflow. The most recent version of the language, Ada 2012, includes an especially relevant feature known as contract-based programming. This feature effectively embeds the low-level software requirements in the source code, where they can be verified either statically (with appropriate tool support) or at run time (with compiler-generated checks).
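In Ada 2012, such contracts are written as `Pre` and `Post` aspects on a subprogram declaration, and the compiler can generate the run-time checks. Python has no equivalent built in. The sketch below only approximates the run-time half of the idea with a decorator, to show how a low-level requirement becomes an executable check. The names `contract`, `ContractViolation`, `limit_dose`, and `MAX_DOSE` are hypothetical. To keep the sketch short, the decorator handles positional arguments only.

```python
import functools
from typing import Any, Callable, Optional


class ContractViolation(Exception):
    """Raised when a precondition or postcondition does not hold."""


def contract(pre: Optional[Callable[..., bool]] = None,
             post: Optional[Callable[..., bool]] = None):
    """Check pre(*args) before the call and post(result, *args) after it.

    Positional arguments only, to keep the sketch short.
    """
    def decorate(func: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:
            if pre is not None and not pre(*args):
                raise ContractViolation(f"precondition of {func.__name__} failed")
            result = func(*args)
            if post is not None and not post(result, *args):
                raise ContractViolation(f"postcondition of {func.__name__} failed")
            return result
        return wrapper
    return decorate


MAX_DOSE = 100  # hypothetical limit taken from a low-level requirement


@contract(pre=lambda requested: requested >= 0,
          post=lambda result, requested: 0 <= result <= min(requested, MAX_DOSE))
def limit_dose(requested: int) -> int:
    """Deliver the requested dose, capped at MAX_DOSE."""
    return min(requested, MAX_DOSE)


print(limit_dose(40))   # 40
print(limit_dose(250))  # 100
try:
    limit_dose(-5)
except ContractViolation as err:
    print(err)          # precondition of limit_dose failed
```

A static prover can go further than this run-time emulation: it shows that the postcondition holds for every input satisfying the precondition, rather than only for the inputs that happen to be exercised.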

• Whichever language is chosen, settle on a subset that is appropriate for the needs of your application. General-purpose languages such as C, C++, Ada, and Java provide features that may be problematic in a high-assurance context. A feature may be error-prone, its semantics might not be completely specified, or it may require complex run-time support. Choose and enforce a suitable subset (or “software code standard,” in DO-178C parlance).

For example, the rules in MISRA-C have been designed to address a variety of the C language’s problematic features. However, some of the MISRA rules cannot be checked automatically (for example, the documentation requirements), and others may be enforced in different ways (for example, the prohibition against dangling references). In Ada, a standard feature known as “pragma Restrictions” allows the programmer to specify the features that are to be prohibited. Relevant subsets can thus be defined in an à la carte fashion, with almost all of the restrictions enforced at compile time rather than by run-time checks. The Ada language standard also defines a particular set of restrictions on the concurrency (tasking) features, known as the Ravenscar profile. The Ravenscar tasking subset is expressive enough to be used in practice but simple enough for safety- or security-certified systems.
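For languages without a built-in mechanism like pragma Restrictions, a coding-standard subset can still be enforced mechanically, for example from a check-in hook. The Python sketch below uses the standard `ast` module to reject three constructs in Python source: `global` statements, calls to `eval`/`exec`, and direct recursion. These three rules and the `check_subset` helper are hypothetical illustrations, not taken from any published standard. A real checker would cover far more and would also need to catch indirect recursion.

```python
import ast
from typing import List, Tuple

# Hypothetical project rules; a real coding standard would be far larger.
FORBIDDEN_CALLS = {"eval", "exec"}


def _calls_named(node: ast.AST, name: str) -> List[ast.Call]:
    """All calls to the bare name `name` anywhere inside `node`."""
    return [n for n in ast.walk(node)
            if isinstance(n, ast.Call)
            and isinstance(n.func, ast.Name) and n.func.id == name]


def check_subset(source: str) -> List[Tuple[int, str]]:
    """Return sorted (line, message) pairs for constructs outside the subset."""
    violations: List[Tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Global):
            violations.append((node.lineno, "global statement not permitted"))
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
              and node.func.id in FORBIDDEN_CALLS):
            violations.append((node.lineno, f"call to {node.func.id}() not permitted"))
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Direct recursion only: a function calling itself by name.
            for call in _calls_named(node, node.name):
                violations.append((call.lineno, f"direct recursion in {node.name}()"))
    return sorted(violations)


SAMPLE = "\n".join([
    "count = 0",
    "def bump():",
    "    global count",
    "    count += 1",
    "def fact(n):",
    "    return 1 if n <= 1 else n * fact(n - 1)",
    "x = eval('1 + 1')",
])

for line, message in check_subset(SAMPLE):
    print(f"line {line}: {message}")
# line 3: global statement not permitted
# line 6: direct recursion in fact()
# line 7: call to eval() not permitted
```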

• Consider formal methods for high-assurance applications. Static analysis tools based on formal methods can prove program properties (including safety and security properties) ranging from absence of run-time errors to compliance with formally specified requirements. Use of such tools provides mathematics-based assurance while also eliminating the need for some of the low-level requirements-based tests.

One example is the Frama-C technology, which can prove properties of C programs, although the pervasive use of pointers in C presents a challenge. SPARK is a formally analyzable subset of Ada; hard-to-analyze features such as pointers and exceptions are absent, and the proof technology can exploit fundamental Ada features such as strong typing, scalar ranges, and contract-based programming.

• Automate manual processes with qualified tools. Static analysis tools are your friend; among other things, they can check compliance with a coding standard, compute complexity metrics, and detect potential errors or latent vulnerabilities (such as references to uninitialized variables, buffer overrun, integer overflow, and concurrent access to a shared variable without protection). An example of a static analysis tool for Ada that identifies such errors is AdaCore’s CodePeer. Practicalities to be considered include soundness (does the tool detect all instances of the error it is looking for?), “false alarm” rate (are the reported problems, in fact, real errors?), and usability (such as scalability to large systems, ability to analyze parts of a system without needing the full code base, and ease of integration into an organization’s software life cycle infrastructure).

For dynamic analysis, a code coverage tool is essential at both source and object level. Typical technology in this area generates special tracing code in the executable, but this means that the real executable is not the same as the one from which the coverage data is derived. Thus, extra work is needed to show that the coverage results obtained for the instrumented and actual executables are equivalent. An alternative approach is non-intrusive, with the compiler generating source coverage obligation data separately from the executable. Using trace data generated from either a target board with appropriate trace capabilities or an instrumented emulation environment, the coverage tool can then identify and report which source constructs and object code instructions have or have not been exercised. An example of such a non-intrusive tool is AdaCore’s GNATcoverage, which can derive coverage data up to MC/DC.
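The non-intrusive approach amounts to joining two independent inputs. The first is coverage obligations that map source constructs to the object code generated for them. The second is a trace of executed instruction addresses from the unmodified executable. The Python sketch below assumes a deliberately simple data format of our own; it is not GNATcoverage's format. The file name `ctrl.c` and the `coverage_report` helper are hypothetical. As a simplification, a statement counts as covered if any of its instructions executed, while object-level coverage requires every instruction.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical coverage obligations, produced separately from the
# executable: each source statement maps to the addresses of the
# machine instructions generated for it.
Obligations = Dict[str, Set[int]]


def coverage_report(obligations: Obligations,
                    trace: Iterable[int]) -> Tuple[List[str], List[int]]:
    """Return (uncovered statements, unexecuted instruction addresses)."""
    executed = set(trace)
    all_instructions = set().union(*obligations.values())
    uncovered_statements = sorted(
        stmt for stmt, addrs in obligations.items() if not addrs & executed)
    uncovered_instructions = sorted(all_instructions - executed)
    return uncovered_statements, uncovered_instructions


obligations = {
    "ctrl.c:10": {0x100, 0x104},
    "ctrl.c:11": {0x108},
    "ctrl.c:13": {0x10C, 0x110},
}
trace = [0x100, 0x104, 0x10C, 0x100]  # e.g. decoded from target trace hardware

stmts, instrs = coverage_report(obligations, trace)
print(stmts)                     # ['ctrl.c:11']
print([hex(a) for a in instrs])  # ['0x108', '0x110']
```

Statement `ctrl.c:13` counts as covered at the source level even though one of its instructions never ran. This illustrates why source and object coverage are both worth reporting.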

For any of these tools, qualification credentials will provide added confidence and also reduce the effort needed to confirm the tool’s results.

Applying the principles of DO-178C to your software life cycle processes might not save you from an evil “Marathon Man” dentist, but when used jointly with system-level safety principles, it will help you give a confident “yes” answer to the question, “Is it safe?”
