BY JEFF VANWASHENOVA

Director of Automotive Market Segment, CEVA

www.ceva-dsp.com

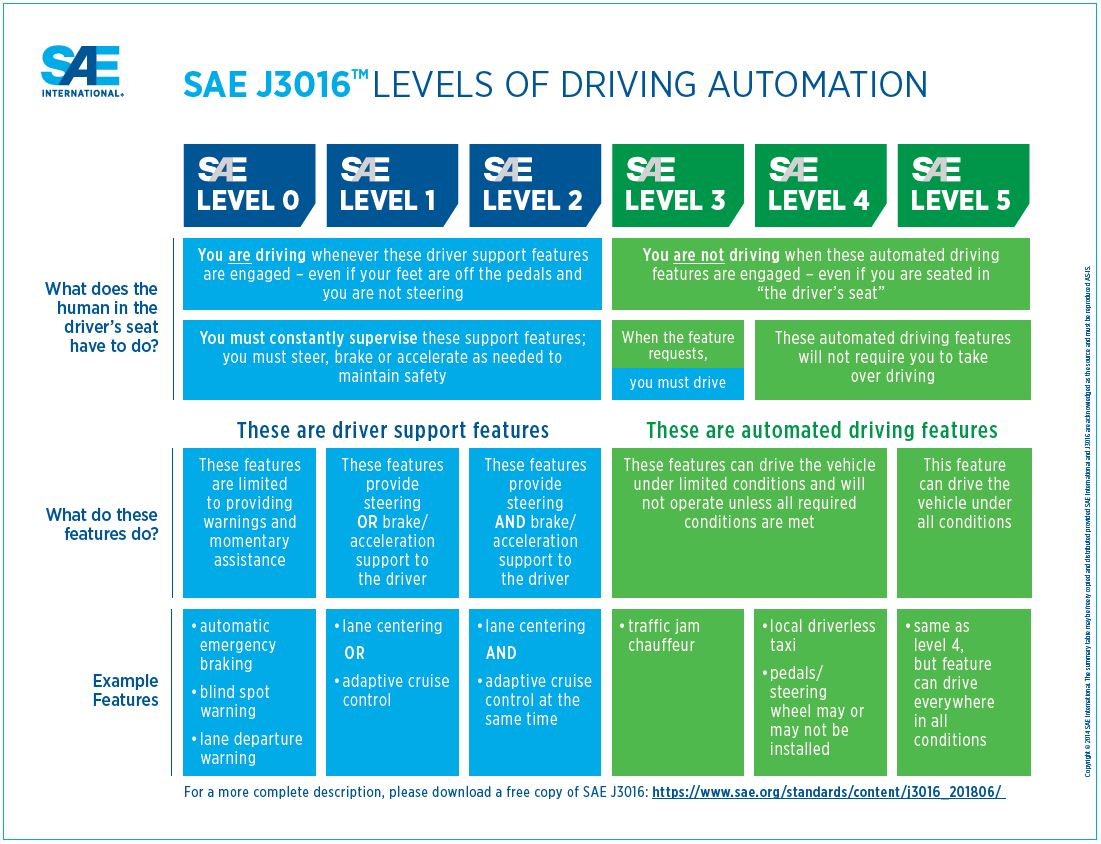

If you bought or rode in a new car recently, you’ll probably agree that what used to be advanced in driving automation, up to SAE Level 2, is now mundane. We’re no longer surprised to see, or at least hear of, driver-assistance features like blind-spot detection, lane-departure warning, lane centering, emergency braking, and adaptive cruise control.

The U.S. Department of Transportation (DOT) uses the six levels of automation for on-road motor vehicles defined in SAE J3016 in its Federal Automated Vehicles Policy. (Image: SAE International)

Now we’re looking forward to true autonomy, represented by Levels 3 through 5 (see figure), which range from partially hands-free to fully hands-free control. What was once pure science fiction now looks much more reachable. The big automakers, who know how to scale to volume, have aggressive programs and partnerships. Proof-of-concept cars have been deployed in Pittsburgh, San Francisco, Phoenix, and other cities. Waymo’s launch in Phoenix goes even further with a self-driving taxi service, staking an early claim to commercial market share while also aiming to recoup development costs.

Challenges

Levels 3 through 5 introduce new challenges, not all of which are technical. Public acceptance is likely the most important factor and can’t be taken for granted in today’s climate, in which technology can appear to some as more of a threat than a benefit. Waymo has already faced some of this hostility, though incidents have been limited and some were obvious behavioral outliers. But we are a fickle lot, so Waymo and others will have to manage social acceptance and inevitable media crises as carefully as they manage the technology.

Naturally, technical challenges also increase. More sensors are needed — optical, radar, LiDAR, and others — together with sensor fusion. It’s not unreasonable to expect a need for 20+ sensors in the upper levels of the SAE standard.
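To make sensor fusion a bit more concrete, here is a minimal Python sketch of one textbook approach, inverse-variance weighting, in which each sensor’s estimate of the distance to an object is weighted by how much we trust it. The sensor names and noise figures are hypothetical, and the sketch assumes unbiased, independent Gaussian errors; production fusion stacks typically rely on tracking filters and much messier data.

```python
from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    sensor: str     # hypothetical label, e.g. "radar", "lidar", "camera"
    range_m: float  # estimated distance to the object, in meters
    std_m: float    # assumed 1-sigma measurement noise, in meters


def fuse_ranges(measurements: list[RangeMeasurement]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent range estimates.

    Returns (fused_range_m, fused_std_m). Assumes unbiased, independent
    Gaussian errors, which real sensors only approximate.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # A sensor with half the noise gets four times the weight.
    weights = [1.0 / (m.std_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    # The fused uncertainty is never larger than the best single sensor's.
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    readings = [
        RangeMeasurement("radar", 40.0, 0.5),
        RangeMeasurement("lidar", 40.2, 0.1),
        RangeMeasurement("camera", 41.5, 2.0),
    ]
    rng, std = fuse_ranges(readings)
    print(f"fused range {rng:.2f} m, std {std:.3f} m")
```

With these hypothetical readings, the fused range lands very close to the LiDAR value and carries a smaller uncertainty than any single sensor, which is the basic payoff of fusing several sensor types.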

A challenge unique to Level 3 is safely managing the handover between self-driving and (human) driver control. Driver monitoring is a big factor here: Are you awake, are you aware that the car wants you to take over, and are you looking ahead? These checks are already possible through driver gaze detection and tracking, either by monitoring movement of the driver’s head or, even more accurately, by pupil center detection. Both methods require object recognition and tracking inside the car.
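Those three questions map naturally onto a simple gate. The Python sketch below is hypothetical: it assumes an upstream in-cabin vision pipeline already reports, per camera frame, whether the driver’s eyes are open, whether the takeover request has been acknowledged (our stand-in for “aware”), and whether the gaze is on the road. The `DriverState` and `HandoverMonitor` names and the 15-frame default are illustrative assumptions, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class DriverState:
    eyes_open: bool     # awake? (assumed output of eyelid/pupil detection)
    acknowledged: bool  # aware? (hypothetical: driver confirmed the takeover request)
    gaze_on_road: bool  # looking ahead? (from head-pose or pupil-center gaze tracking)


class HandoverMonitor:
    """Hypothetical Level 3 takeover gate.

    Handover is allowed only after the driver has been awake, aware, and
    looking ahead for `required_frames` consecutive camera frames.
    """

    def __init__(self, required_frames: int = 15):
        if required_frames < 1:
            raise ValueError("required_frames must be at least 1")
        self.required_frames = required_frames
        self._streak = 0

    def update(self, state: DriverState) -> bool:
        ready = state.eyes_open and state.acknowledged and state.gaze_on_road
        # Any failed check resets the streak, so a glance away restarts the count.
        self._streak = self._streak + 1 if ready else 0
        return self._streak >= self.required_frames


if __name__ == "__main__":
    monitor = HandoverMonitor(required_frames=3)
    attentive = DriverState(eyes_open=True, acknowledged=True, gaze_on_road=True)
    for frame in range(4):
        print(frame, monitor.update(attentive))
```

Requiring a streak rather than a single good frame keeps a momentary glance at the road from counting as readiness to take over.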

Cost is also a challenge. The self-driving cars that we see today are not cheap. Last year, the CEO of Delphi Automotive (now Aptiv PLC) estimated the cost of the self-driving hardware/software stack at between $70,000 and $150,000, on top of the cost of the car itself; he believes the stack cost could come down to about $5,000 by 2025. One reason for the high cost is expensive sensor technology. LiDAR, a critical component in self-driving systems, currently depends on mechanical systems that spin lasers.

Over the past year, some of the best systems have dropped in price. Velodyne LiDAR, for example, cut the price of its VLP-16 Puck in half, from about $8,000 to $4,000. The company also introduced the ultra-high-resolution VLS-128, with 128 laser channels for advanced real-time 3D vision.

Solid-state advances that eliminate the mechanical parts should reduce costs further, by some estimates to as low as $100. At least today, LiDAR plays a big role in Levels 4/5, suggesting that these levels might be limited for a while to commercial/mass-transit applications in which the higher cost can be more effectively offset. Then again, solutions based on phased arrays or MEMS mirrors might appear sooner than we think. Quanergy’s S3 solid-state LiDAR sells for a few hundred dollars today, depending on quantity, but the company expects the price to drop below $100 with higher levels of integration.

Also, RoboSense recently announced that its new RS-IPLS Intelligent Perception LiDAR system, which combines MEMS solid-state LiDAR technology with an AI-based deep-learning algorithm, costs $200, a fraction of the cost of more traditional LiDAR systems.

Another cost driver is the intelligence behind the sensing. Widely used solutions today depend on platforms that run near $1,300 per device. This is already a healthy bite out of a total $5,000 budget if you only need one, but one doesn’t appear to be enough.
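A quick back-of-the-envelope calculation shows why one device per car doesn’t scale. This Python sketch uses the $1,300 unit price and the $5,000 budget above, plus the estimate of 20 sensors in the upper SAE levels; the `ai_budget` parameter, meaning the total you are willing to spend on AI hardware, is a hypothetical knob, not a figure from any OEM.

```python
from dataclasses import dataclass


@dataclass
class AiCostSummary:
    share_of_budget_one_unit: float  # fraction of the vehicle budget one unit consumes
    total_cost_all_nodes: float      # cost if every sensor node gets today's unit
    target_cost_per_node: float      # per-node price that fits within ai_budget
    required_reduction: float        # today's unit cost / target per-node cost


def summarize_ai_cost(unit_cost: float, vehicle_budget: float,
                      num_nodes: int, ai_budget: float) -> AiCostSummary:
    """Hypothetical model: spread a fixed AI hardware budget evenly across nodes."""
    if num_nodes <= 0 or ai_budget <= 0 or vehicle_budget <= 0:
        raise ValueError("budgets and node count must be positive")
    target = ai_budget / num_nodes
    return AiCostSummary(
        share_of_budget_one_unit=unit_cost / vehicle_budget,
        total_cost_all_nodes=unit_cost * num_nodes,
        target_cost_per_node=target,
        required_reduction=unit_cost / target,
    )


if __name__ == "__main__":
    for budget in (1300, 5000):  # hypothetical AI spend caps, in dollars
        s = summarize_ai_cost(1300, 5000, 20, budget)
        print(f"AI budget ${budget}: ${s.target_cost_per_node:.0f}/node, "
              f"{s.required_reduction:.1f}x cheaper than today")
```

One unit takes 26% of the $5,000 budget, and 20 of them would cost $26,000. If the total AI spend stays at today’s single-device price of $1,300, each of 20 nodes must cost $65, a 20x reduction; even if the entire $5,000 went to AI hardware, each node would have to come in at $250, a 5.2x cut.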

To manage response times for critical functions and to avoid network congestion in sending raw data from many sensors to a central artificial intelligence (AI) processor, some level of intelligence must be managed close to the sensors. When you consider the possible need for 20 sensors, AI hardware pricing must be reduced by more than an order of magnitude, inevitably driving OEMs and Tier Ones to ASIC design.
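The network-congestion argument is easy to quantify. The Python sketch below compares the bit rate of an uncompressed camera stream with that of a compact object list produced by processing near the sensor. The 1080p, 24-bit, 30-fps camera and the 64-object, 32-byte-per-object list format are illustrative assumptions, not specifications of any particular sensor or in-vehicle network.

```python
def raw_camera_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bit rate, in bits per second."""
    return width * height * bits_per_pixel * fps


def object_list_bps(objects_per_frame: int, bytes_per_object: int, fps: int) -> int:
    """Bit rate of a per-frame list of detected objects (hypothetical fixed-size records)."""
    return objects_per_frame * bytes_per_object * 8 * fps


if __name__ == "__main__":
    raw = raw_camera_bps(1920, 1080, 24, 30)  # assumed 1080p RGB camera, no compression
    objs = object_list_bps(64, 32, 30)        # assumed output of near-sensor processing
    print(f"raw stream:  {raw / 1e9:.2f} Gbit/s")
    print(f"object list: {objs / 1e6:.2f} Mbit/s")
    print(f"reduction:   {raw / objs:.0f}x")
    print(f"20 raw streams: {20 * raw / 1e9:.1f} Gbit/s")
```

Under these assumptions a single raw stream is about 1.49 Gbit/s while its object list is roughly 0.49 Mbit/s, a reduction of about 3,000x; twenty such raw streams would approach 30 Gbit/s, which is why pushing some intelligence to the sensor is attractive.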

Another challenge is safety and reliability. The baseline requirement is ISO 26262 compliance; however, that standard does not address behaviors such as driver out of the loop, non-determinism, learned behaviors, and others that are routine in Levels 3 through 5. Work is being done in these areas.

It already seems clear that the “millions of miles driven” claims made by practitioners, while necessary, are a far-from-sufficient metric for proving reliable operation. How many driven miles are enough when the underlying AI technologies are non-deterministic? A generally accepted solution is to use cross-checks between multiple systems or within a given system itself. In camera modules, AI-based object recognition can be compared with more conventional computer-vision algorithms to ensure proper operation. The same approach can also be implemented across different sensor modules through sensor fusion between multiple classes of object recognition (camera, radar, LiDAR). In addition to these safety measures, redundancy will play a critical role in safety assurance.
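Both kinds of cross-check can be sketched in a few lines. In this hypothetical Python example, the in-module check compares the bounding box from an AI detector with the one from a conventional computer-vision algorithm using intersection-over-union (IoU), and the cross-sensor check is a simple 2-out-of-3 majority vote over per-sensor labels. IoU, the 0.5 threshold, and majority voting are illustrative choices, not a prescribed method; a real system would also weigh each sensor by its strengths.

```python
from collections import Counter
from typing import Optional

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 to 1.0)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detectors_agree(ai_box: Box, cv_box: Box, min_iou: float = 0.5) -> bool:
    """In-module cross-check: the AI and conventional CV detectors should
    localize the same object (threshold is an assumed value)."""
    return iou(ai_box, cv_box) >= min_iou


def vote(labels: dict[str, Optional[str]]) -> Optional[str]:
    """Cross-sensor 2-out-of-3 vote over per-sensor classifications.

    Returns the label reported by at least two sensors, or None when
    there is no consensus (None entries mean "no detection").
    """
    counts = Counter(label for label in labels.values() if label is not None)
    if not counts:
        return None
    label, n = counts.most_common(1)[0]
    return label if n >= 2 else None


if __name__ == "__main__":
    print(detectors_agree((0, 0, 10, 10), (1, 1, 11, 11)))
    print(vote({"camera": "pedestrian", "radar": "pedestrian", "lidar": "cyclist"}))
```

When the vote returns `None` or the in-module check fails, the system would treat the detection as suspect, which is exactly where redundancy comes in.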

My net take on all of this? Staking out market share remains a powerful factor and may drive technical/cost/safety progress faster than seems possible today. But the surest path to success is likely to be incremental introduction of Level 3 features in personal vehicles and more bounded/measured introduction of Level 4/5 capabilities in commercial vehicles such as driverless buses, food delivery vehicles, and maybe last-mile taxis. However this evolves, there clearly will be huge opportunities for sensors, AI components behind the sensors, and centralized AI subsystems in delivering the driverless promise.
