By Rick Merritt, Silicon Valley Bureau Chief, EE Times

If you are looking to take your first steps into the forest of deep learning, you are not alone, and there are plenty of resources to help.

Deep neural networks are essentially a new way of computing. Instead of writing a program to run on a processor that spits out data, you stream data through an algorithmic model that filters out results.

The approach started getting attention after the 2012 ImageNet contest, when some algorithms delivered better results identifying pictures than a human. Computer vision was the first field to feel a big boost.

Since then, web giants such as Amazon, Google, and Facebook have started applying deep learning to video, speech, translation — anywhere they had big data sets that they could comb to find new insights. Recently, Google CEO Sundar Pichai said that the techniques are as fundamental as the discovery of electricity or fire.

The fire has caught on quickly. Last year, more than 300 million smartphones shipped with some form of neural-networking capability; this year, 800,000 artificial intelligence (AI) accelerators will ship to data centers; and 700 million people now use some form of smart personal assistant, such as an Amazon Echo or Apple’s Siri, every day, said C.C. Wei, the co-chief executive of chipmaker TSMC. He called AI and 5G the two drivers of the semiconductor industry today.

So if you are considering your first board or SoC design for AI, you have plenty of company. And there’s plenty of help.

As many as 50 companies are already said to be selling or preparing some form of silicon AI accelerators. Some are IP blocks for SoCs, some are chips, and a few are systems.

But there are a few important steps before you start sorting through what Jeff Bier, founder of the Embedded Vision Alliance, calls “a Cambrian explosion” of new AI products.

First, gauge where your application sits on the wide spectrum of AI performance requirements. This will help you quickly eliminate many silicon and software options and zero in on the few best suited to your tasks.

At one end, self-driving cars need to sort through feeds from multiple cameras, radars, lidars, and other sensors to make driving decisions in real time. They need a network of dedicated accelerators.

At the other end, sensor networks in a farm field might only need to detect and report a significant change in soil moisture, throwing away hourly, or even daily, dribbles of data until a change occurs. A gateway with an Arm Cortex-M microcontroller running its CMSIS-DSP library may suffice here, said Bier.
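The report-on-change behavior described above is simple enough to sketch. The example below is in Python for readability; on a Cortex-M gateway it would typically be written in C, and it does not use CMSIS-DSP. The function name, threshold, and sample readings are hypothetical:

```python
from typing import Iterable, List, Optional, Tuple


def significant_changes(readings: Iterable[float],
                        threshold: float) -> List[Tuple[int, float]]:
    """Return (index, value) pairs for readings that differ from the last
    *reported* value by at least `threshold`; all other readings are dropped."""
    reported: List[Tuple[int, float]] = []
    last_reported: Optional[float] = None
    for i, value in enumerate(readings):
        # Always report the first reading so the receiver has a baseline.
        if last_reported is None or abs(value - last_reported) >= threshold:
            reported.append((i, value))
            last_reported = value
    return reported


if __name__ == "__main__":
    # Hourly soil-moisture readings in percent; illustrative data only.
    hourly = [31.0, 31.2, 30.9, 31.1, 26.5, 26.4, 26.6, 33.0]
    print(significant_changes(hourly, threshold=3.0))
    # [(0, 31.0), (4, 26.5), (7, 33.0)]
```

Only three of the eight readings are passed on; the rest are discarded locally, which is the point of filtering at the edge.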

Jeff Bier is the founder of the Embedded Vision Alliance.


The next step is selecting and training the right neural-network model from an alphabet soup of options. Two of the most popular are convolutional neural nets (CNNs), generally used for imaging, and recurrent neural nets (RNNs), typically used for voice and audio. However, data scientists are rolling out new variants and hybrids almost daily as use cases expand.
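To make the CNN/RNN distinction concrete, here is a minimal pure-Python sketch of the core operation in each: a 2-D convolution that slides a small kernel over an image, and one step of a simple (Elman-style) recurrent cell whose hidden state carries information forward from earlier inputs. Function names and example values are illustrative; real frameworks implement these with heavily optimized kernels:

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution as used in CNN layers. Like most deep-learning
    frameworks, this is actually cross-correlation: the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            out[r][c] = acc
    return out


def rnn_step(x: List[float], h: List[float],
             w_xh: Matrix, w_hh: Matrix, b: List[float]) -> List[float]:
    """One step of a simple recurrent cell: h_new = tanh(W_xh x + W_hh h + b).
    The hidden state h carries context from earlier samples in a sequence."""
    h_new = []
    for k in range(len(b)):
        s = b[k]
        s += sum(w_xh[k][i] * x[i] for i in range(len(x)))
        s += sum(w_hh[k][i] * h[i] for i in range(len(h)))
        h_new.append(math.tanh(s))
    return h_new


if __name__ == "__main__":
    # A vertical-edge kernel responds only where dark pixels meet bright ones.
    image = [[0, 0, 1, 1],
             [0, 0, 1, 1],
             [0, 0, 1, 1]]
    edge_kernel = [[-1, 1],
                   [-1, 1]]
    print(conv2d_valid(image, edge_kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]

    # Feed a short sequence through a 1-unit RNN; the state persists between steps.
    h = [0.0]
    for sample in [1.0, 0.0, 0.0]:
        h = rnn_step([sample], h, w_xh=[[0.5]], w_hh=[[0.9]], b=[0.0])
        print(h)
```

The convolution looks at a local spatial neighborhood, which suits images; the recurrent cell keeps a nonzero state after the input returns to zero, which is why this family of models suits time-ordered signals such as speech.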

The good news is that researchers often make their latest algorithms freely available through technical papers, hoping that they gain traction. Models can also be found through the AI software frameworks that web giants maintain and promote, such as Amazon’s MXNet, Google’s TensorFlow, Facebook’s Caffe2, and Microsoft’s CNTK 2.0.

Next, you need to find appropriate data sets for your app, label them, and use them to train the algorithmic model that you have selected. Some data sets are available in the public domain, but for best results, you may need to create, or at least tailor, one for your needs.
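Training, at its smallest scale, means adjusting a model's parameters until its outputs match labeled examples. The sketch below fits a one-feature logistic-regression classifier with plain gradient descent; it stands in for the far larger networks and frameworks mentioned above, and the data set, learning rate, and epoch count are arbitrary assumptions:

```python
import math
from typing import List, Tuple


def train_logistic(data: List[Tuple[float, int]],
                   lr: float = 0.1, epochs: int = 2000) -> Tuple[float, float]:
    """Fit p(y=1 | x) = sigmoid(w*x + b) to labeled (x, y) pairs using
    full-batch gradient descent on the cross-entropy loss."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            # For sigmoid + cross-entropy, d(loss)/d(w*x + b) is simply (p - y).
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w: float, b: float, x: float) -> int:
    """Classify x as 1 when the model's logit is positive (p > 0.5)."""
    return 1 if w * x + b > 0 else 0


if __name__ == "__main__":
    # A tiny labeled data set: negative readings are class 0, positive ones class 1.
    labeled = [(-3.0, 0), (-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1), (3.0, 1)]
    w, b = train_logistic(labeled)
    print(f"w={w:.3f} b={b:.3f}")
    print([predict(w, b, x) for x, _ in labeled])
```

The labels are what make training possible: every gradient step is driven by the gap between the model's prediction and the labeled answer, which is why curating and labeling data sets takes so much effort.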

Service companies such as Samasource and iMerit are springing up to help with the grunt work of curating and labeling large data sets. Web giants such as Amazon, Google, and Microsoft also have tools to get you started in hopes that you will use their cloud services to train and run your models.

You can burrow into the details in live and online education programs. For example, Bier’s annual Embedded Vision Summit runs a one-day training course on TensorFlow, one of the most popular AI frameworks. Startup Fast.ai also runs a series of online courses.

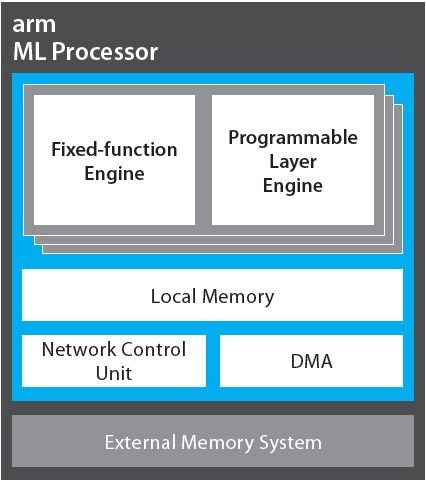

Arm is expected to ship its generically named ML core and release details about it this summer. Image source: Arm.

Choosing the right silicon

Many applications may not require any special silicon if you get the software right. Existing chips such as Qualcomm’s Snapdragon can deliver “tremendous performance on AI jobs if you know what you are doing,” said Bier.

In April, Qualcomm rolled out its QCS603 and QCS605 chips and related software, tailored to bring AI capabilities to the IoT. They are essentially variations of Snapdragon SoCs, with some versions supporting extended lifetimes for industrial users.

In January, NXP demoed its i.MX SoCs running deep-learning apps on a prototype smart microwave and refrigerator. The FoodNet demo showed the chips running up to 20 classifiers and handling inference operations in 8 to 66 milliseconds using a mix of existing GPU blocks and Arm Cortex-A and -M cores.

That said, NXP, like many embedded chip vendors, expects to partner with third-party accelerator makers soon. Eventually, it aims to offer its own AI accelerator blocks.

QuickLogic is a step ahead. On May 4, it announced its Quick AI platform, which pairs its EOS S3 chips, used in smart speakers, with an AI accelerator chip from Nepes Corp. Software from two third parties helps customize algorithms and train them in the field for uses such as factory vision systems, predictive maintenance, and drones.

Since 2016, established and startup chip and IP vendors have been announcing AI accelerators, and many of their parts are available now. In general, they have added integer units to GPU blocks or extended SIMD units in DSP cores, said Linley Gwennap of The Linley Group.
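Integer units of this kind typically serve quantized inference: weights and activations are scaled to 8-bit integers, multiplied and accumulated in integer arithmetic, and rescaled once at the end. This is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several; it is not any particular vendor's implementation, and the function names and values are illustrative:

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|v|, +max|v|] onto [-127, 127].
    Returns the integer codes and the scale needed to convert them back."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(a: List[float], b: List[float]) -> float:
    """Dot product computed with integer multiply-accumulates on int8 codes."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Integer-only inner loop. Python ints are unbounded; hardware would use an
    # accumulator wider than the 8-bit operands (often 32 bits) to avoid overflow.
    acc = 0
    for x, y in zip(qa, qb):
        acc += x * y
    # A single floating-point rescale converts the integer sum back to real units.
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.9, -1.0, 0.3, 0.7]
    activations = [0.4, 0.8, -1.0, 0.2]
    exact = sum(w * x for w, x in zip(weights, activations))
    print(quantize_int8(weights)[0])
    print(exact, int8_dot(weights, activations))
```

The quantized result (about -0.604) lands close to the floating-point answer (-0.6) even though every multiply in the loop is an integer multiply, which is what lets cheap integer hardware stand in for floating-point units during inference.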

More recently, Google’s in-house TPU made clear that deep learning needs accelerators for linear algebra, typically in the form of large multiply-accumulate (MAC) arrays with lots of memory. Some devices are adding hardware for specific aspects of neural nets, such as activations and pooling, said Gwennap.
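The linear algebra at the heart of a neural-network layer is matrix multiplication, which decomposes into multiply-accumulate (MAC) operations; a hardware MAC array performs many of them in parallel on each clock. The sketch below writes the MACs out explicitly and counts them, then adds the ReLU activation and 2x2 max-pooling steps that some accelerators implement in dedicated hardware. Names are illustrative:

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul_mac(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """C = A @ B written as explicit multiply-accumulate (MAC) operations.
    Returns the result and the number of MACs performed (M * N * K)."""
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    c = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for t in range(k):
                acc += a[i][t] * b[t][j]  # one MAC
                macs += 1
            c[i][j] = acc
    return c, macs


def relu(x: Matrix) -> Matrix:
    """Activation: clamp negative values to zero."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool_2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling (assumes even height and width)."""
    return [
        [max(x[r][c], x[r][c + 1], x[r + 1][c], x[r + 1][c + 1])
         for c in range(0, len(x[0]), 2)]
        for r in range(0, len(x), 2)
    ]


if __name__ == "__main__":
    a = [[1.0, -2.0], [3.0, 4.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    c, macs = matmul_mac(a, identity)
    print(c, macs)
    print(relu(c))
    print(max_pool_2x2([[1, 5, 2, 0], [3, 4, 7, 1]]))
```

Multiplying an M x K matrix by a K x N matrix takes M * N * K MACs, so MAC count grows quickly with layer size; that is why accelerators are built around large MAC arrays and why throughput is often quoted in MACs or operations per second.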

Many made-in-China options

EE Times has tracked more than 20 companies working on client AI accelerators to date. There are many more. In a July 2017 report from China, where AI startups are in vogue, serial entrepreneur Chris Rowen reported on several that we had not yet heard of, including DeepGlint, Emotibot, Megvii, Intellifusion, Minieye, Momenta, MorphX, Rokid, SenseTime, and Zero Zero Robotics in vision and AISpeech, Mobvoi, and Unisound in audio.

A handful of companies in China are well worth considering.

Horizon Robotics is among the most interesting. Founded by a handful of AI experts from Baidu and Facebook, it is already shipping two 40-nm merchant chips as well as cameras and ADAS subsystems using them. It has pulled in more than $100 million in venture capital to fuel a roadmap that includes 28- and 16-nm chips.

Bier said that he “saw some very impressive demos” from the “two-year-old company that is moving really fast,” making it one of four AI chip startups that he recommends.

Another favorite of Bier’s is NovuMind, in part because its founder, Ren Wu, is one of the few technologists with a long background in both AI and processor design. Its 28-nm NovuTensor aims to deliver 15 tera operations per second (TOPS) at less than 5 W, and a 16-nm follow-on is in the works.
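As a rough comparison point, NovuMind's stated figures can be converted into the TOPS/W efficiency metric that other vendors, such as Cambricon, quote. Because the power is given only as an upper bound, the result is a lower bound, and it assumes the 15-TOPS figure and the power figure describe the same operating point:

```latex
\eta_{\text{NovuTensor}} > \frac{15\ \text{TOPS}}{5\ \text{W}} = 3\ \text{TOPS/W}
```

Vendors often quote TOPS at different numeric precisions (for example, 8-bit integer versus 16-bit floating point), so such comparisons are approximate.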

Two Chinese startups have gotten their AI cores designed into smartphones from handset giants.

An AI block from Cambricon powers Huawei’s Kirin 970 smartphone SoC. In early May, the company announced its 1M core, which promises up to 5 TOPS/W for handsets, smart speakers, cameras, and cars. It also announced the MLU100, a data center accelerator that consumes 110 W.

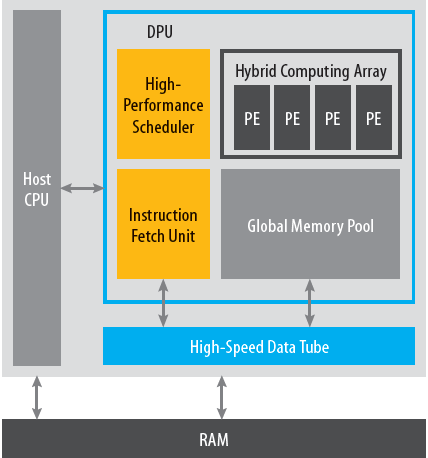

DeePhi’s Aristotle chip uses a hybrid CPU-/GPU-like architecture. Image source: DeePhi.

DeePhi of Beijing has an AI core designed into Samsung’s Exynos 9810 and the Galaxy S9 handset. The startup released its Aristotle chip for CNNs and Descartes chip for RNNs along with boards tailored for cameras, cars, and servers. Rowen considers them “one of the most advanced and impressive of all the deep-learning startups” in China.

Chris Rowen is a serial entrepreneur burrowing into AI.

Rowen also recommends Beijing-based Megvii. The startup’s Face++ face-recognition technology draws on the Chinese government’s face database, which Alibaba’s AliPay also uses. Megvii is also using its billion-dollar valuation to help migrate its cloud-based technology to embedded devices, said Rowen.

Heated competition in cores

Back in the U.S., Intel has been working hard to stay at the leading edge of AI silicon. Movidius, which Intel acquired in 2016, targets client systems, and its chips already appear in multiple DJI drones and in security cameras. Intel has released multiple generations of Movidius chips, and Gwennap expects the design to be shrunk to a core and appear in PC chipsets within the next few years.

If you are designing your own SoC, there are plenty of AI cores available. Ironically, Arm, which dominates processor IP, will be one of the last to enter the AI field when it releases its ML core this summer, but Project Trillium, which Arm announced in February, suggests that its offering will be broad and deep.

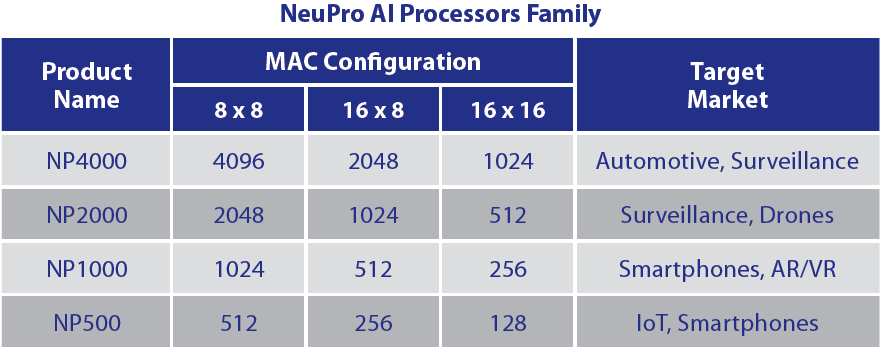

Cadence , Ceva, Imagination, Synopsys , and VeriSilicon have multiple AI cores available — in some cases, for nearly two years. They have made the space highly competitive and rich with options at different performance levels using diverse architectures.

Ceva provides a set of four AI cores for different use cases. Image source: Ceva.

Nvidia is a dark horse here. It dominates the market for cloud-based training with its massive Volta V100 GPUs, announced in May 2017. But it also wants to get into self-driving cars with its Xavier chip, coming later this year.

In an effort to leapfrog the stiff competition, Nvidia open-sourced the deep-learning accelerator from Xavier under the name NVDLA. Multiple chips are being designed with the IP, but none have been announced, an Nvidia executive said in March.

The automotive space is especially competitive. Intel acquired Mobileye for AI chips in cars and is working closely with OEMs, including BMW. A host of startups are focused on the sector, including AImotive, which is currently designing a test chip to run in its own fleet of cars.

Another half-dozen startups

For those with the stomach to work with a startup, there are plenty of other hungry ones out there.

GreenWaves is leveraging the RISC-V and PULP open-source projects to deliver GAP8, a 55-nm chip that was announced in 2016 and is expected to deliver 12 GOPS at 20 mW and 400 MHz. The company aims to lead in low power consumption for IoT devices but does not expect volume production until the end of the year.
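The GAP8 targets can be turned into two figures of merit: energy efficiency and work per clock cycle. The small helpers below (hypothetical names, not from any GreenWaves tool) do the arithmetic, assuming the 12 GOPS, 20 mW, and 400 MHz figures all describe the same operating point, which the announcement may not guarantee:

```python
def tops_per_watt(ops_per_second: float, watts: float) -> float:
    """Energy efficiency in tera-operations per second per watt."""
    return ops_per_second / watts / 1e12


def ops_per_cycle(ops_per_second: float, clock_hz: float) -> float:
    """Average number of operations completed per clock cycle."""
    return ops_per_second / clock_hz


if __name__ == "__main__":
    # GAP8 targets from the text, assumed to describe one operating point.
    gap8_ops_per_s = 12e9   # 12 GOPS
    gap8_watts = 20e-3      # 20 mW
    gap8_clock_hz = 400e6   # 400 MHz
    print(f"{tops_per_watt(gap8_ops_per_s, gap8_watts):.2f} TOPS/W")
    print(f"{ops_per_cycle(gap8_ops_per_s, gap8_clock_hz):.1f} ops/cycle")
```

Under those assumptions, GAP8 would reach about 0.6 TOPS/W and complete about 30 operations per clock cycle, so it must do many operations in parallel on each clock rather than relying on clock speed.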

Videantis of Germany is licensing an AI core for vision systems. At 16 nm, it aims to deliver from 0.1 tera multiply-accumulates per second (TMAC/s) for ultra-low-cost devices to 36 TMAC/s for high-performance devices, using a multicore VLIW/SIMD DSP architecture.
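Videantis quotes throughput in multiply-accumulates rather than operations. A common convention, assumed here rather than stated by Videantis, counts each MAC as two operations (one multiply and one add), which puts the range in the TOPS units used elsewhere in this article:

```latex
1\ \text{MAC} = 2\ \text{ops}
\;\Rightarrow\;
0.1\ \text{TMAC/s} \approx 0.2\ \text{TOPS},
\qquad
36\ \text{TMAC/s} \approx 72\ \text{TOPS}
```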

ThinCI detailed its Graph Streaming Processor for vision and ADAS systems at Hot Chips last August but said that it had not yet taped it out. The company is already working with investor and partner Denso on a system that it hopes will be integrated into 2020-model cars.

Bier says that another of his favorites is Mythic, which is applying a decade-old processor-in-memory architecture to AI. It promises a big leap in performance/watt, but parts are not expected to be in production until late 2019.

A group of ex-Google silicon engineers formed startup Groq, whose website claims an inference processor capable of 400 TOPS at 8 TOPS/W that will ship sometime this year. So far, the company has not granted any interviews.
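Dividing Groq's claimed peak throughput by its claimed efficiency gives a rough implied power draw. This assumes both figures describe the same operating point, which the website does not state:

```latex
P \approx \frac{400\ \text{TOPS}}{8\ \text{TOPS/W}} = 50\ \text{W}
```

If that estimate holds, the part sits closer to a data-center card than to the sub-5-W embedded parts described above.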

Esperanto announced in November plans to take a clean sheet of paper to the AI challenge using RISC-V cores. Like many startups, it includes a team of veteran microprocessor engineers eager to take on a historic challenge, but it is providing no timeframe for delivering silicon.

Two closely watched startups plan to design chips but sell systems, probably targeting businesses that want to run AI jobs in their private clouds.

The dataflow architecture of Wave Computing is a natural match for AI algorithms, said Bier. However, it does not appear to address embedded systems, especially given its chip’s use of power-hungry HBM2 memory and its plans for 3U-sized Linux appliances.
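In a dataflow machine, an operation fires as soon as its operands arrive rather than when a program counter reaches it, and a neural network is naturally expressed as such a graph. The Python sketch below models that idea in software for a tiny, hypothetical graph computing relu(w*x + b); it illustrates the general concept only and does not describe Wave Computing's actual architecture:

```python
from typing import Callable, Dict, List, Tuple

# Each node maps a name to (function, names of its input operands).
Graph = Dict[str, Tuple[Callable[..., float], List[str]]]


def run_dataflow(graph: Graph, inputs: Dict[str, float]) -> Dict[str, float]:
    """Fire every node whose operands are all available, repeating until the
    graph is exhausted. Data availability alone drives execution order."""
    values = dict(inputs)
    pending = dict(graph)
    while pending:
        ready = [name for name, (_, deps) in pending.items()
                 if all(d in values for d in deps)]
        if not ready:
            raise ValueError("graph has a cycle or a missing input")
        # In hardware, all ready nodes could fire in the same step, in parallel.
        for name in ready:
            fn, deps = pending.pop(name)
            values[name] = fn(*(values[d] for d in deps))
    return values


if __name__ == "__main__":
    neuron: Graph = {
        "mul": (lambda w, x: w * x, ["w", "x"]),
        "add": (lambda m, b: m + b, ["mul", "b"]),
        "relu": (lambda s: max(0.0, s), ["add"]),
    }
    print(run_dataflow(neuron, {"w": 2.0, "x": 3.0, "b": -1.0})["relu"])
```

Nothing in the loop specifies an instruction order; the order falls out of the data dependencies, which is the property that makes dataflow designs attractive for neural-network graphs.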

Likewise, SambaNova came out of stealth mode in March with a team of two Stanford technologists and a former SPARC processor designer from Sun Microsystems. While its plans are still sketchy, an April talk by its CEO suggested that the company will compete with Wave to deliver AI appliances for business users.

Both companies ultimately could spin chips or license technology for embedded systems, too, so they are well worth watching.
