By Majeed Ahmad, contributing writer

There is a growing demand for high-performance hardware accelerators in chips powering data centers that employ artificial intelligence technology in applications such as deep learning, image classification, object detection and recognition, and natural-language processing. These hardware accelerator-based chips are rapidly taking over from traditional CPUs and GPUs because they can process AI tasks faster at lower power consumption.

Hardware accelerators — specialized devices that perform specific tasks such as cloud-based training, data analytics, genomics, and search rankings — are embedded into CPUs, GPUs, FPGAs, and ASICs to serve massively parallel AI workloads. There also are AI acceleration cards, such as Xilinx’s Alveo U50 and Intel’s D5005, that handle more specialized and compute-intensive workloads.

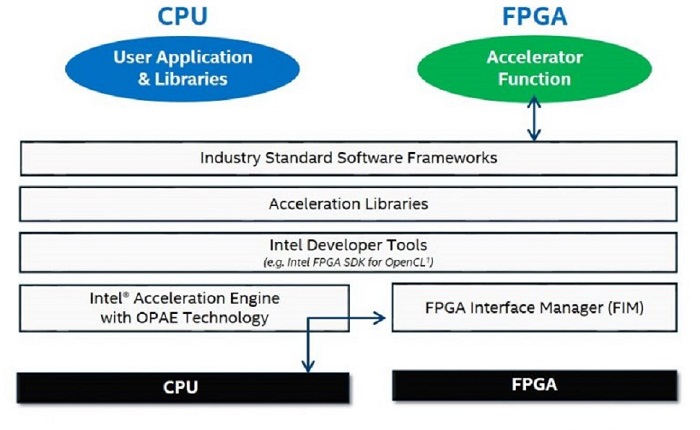

An FPGA provides workload acceleration to a CPU in a data center server design. (Image: Intel)

So for engineers engaged in AI-centric data center and cloud computing environments, what are the basic design considerations relating to hardware accelerators? This article delves into some key issues worth paying attention to.

Power efficiency

The AI chips and accelerator cards are designed and built to prioritize two key real-world considerations in data centers: performing training and inference tasks as fast as possible and doing it within a given power budget. The AI chips serve power-hungry workloads for data center applications such as video content streaming and large-scale simulations, so it’s imperative that hardware accelerators in these AI chips ensure energy efficiency while processing complex workloads.
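The trade-off described above, maximum throughput within a fixed power budget, can be illustrated with a small sketch. The card names, throughput figures, and wattages below are hypothetical placeholders rather than measurements of any real product, and the `Accelerator` class and `best_within_budget` helper are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Accelerator:
    name: str
    throughput: float  # inferences per second (hypothetical figure)
    power_w: float     # board power in watts (hypothetical figure)

    @property
    def perf_per_watt(self) -> float:
        """Energy efficiency: inferences per second per watt."""
        return self.throughput / self.power_w


def best_within_budget(
    candidates: Iterable[Accelerator], budget_w: float
) -> Optional[Accelerator]:
    """Return the highest-throughput candidate that fits the power budget.

    Returns None when no candidate fits.
    """
    eligible = [c for c in candidates if c.power_w <= budget_w]
    return max(eligible, key=lambda c: c.throughput, default=None)


# Illustrative data only; not taken from any vendor datasheet.
candidates = [
    Accelerator("card_a", throughput=4000.0, power_w=75.0),
    Accelerator("card_b", throughput=9000.0, power_w=225.0),
    Accelerator("card_c", throughput=6000.0, power_w=150.0),
]

if __name__ == "__main__":
    for card in candidates:
        print(f"{card.name}: {card.perf_per_watt:.1f} inferences/s per watt")
    choice = best_within_budget(candidates, budget_w=160.0)
    print("Fastest card within 160 W:", choice.name if choice else "none")
```

In this sketch the fastest card overall is excluded because it exceeds the budget, which is the kind of constraint a data center operator would weigh alongside raw speed.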

When Shanghai, China-based Enflame Technology announced its deep-learning solution for data center training in collaboration with GlobalFoundries (GF), power-efficient data processing for cloud-based AI training platforms was mentioned as a key value proposition. The company’s Deep Thinking Unit (DTU) accelerator chip is based on GF’s 12LP FinFET platform with 2.5D packaging.

Data centers are bulging at the seams, and operators are looking for new ways to accelerate a host of data-driven workloads ranging from deep learning to natural language processing. We take an in-depth look at the agile and hyperconverged data center architectures accelerating model training and inference, data analytics and other distributed applications in our upcoming Data Center Special Project.

Hardware accelerator architecture

Beyond power efficiency, itself a major issue in data center environments, AI chip designers must determine what to accelerate, how to accelerate it, and how to run accelerators on various neural networks (CNNs, DNNs, and RNNs) and across a wide range of data types. That, in turn, underscores the importance of how multiple hardware accelerators are architected on an AI chip.

The AI designers are slicing the algorithms finer and finer while they add more hardware accelerators to address the constantly evolving demands of processing and analyzing huge datasets for modern data center workloads. That makes hardware architecture crucial in handling machine vision, deep learning, and other AI workloads.

Nvidia’s acquisition of Mellanox is a case in point. Mellanox’s interconnect technology could help the leading AI chipmaker create a more holistic architecture and better support data-center-scale workloads that span tens of thousands of compute nodes. An efficient interconnect technology boosts the speed and accuracy of neural network training and reduces data center power consumption.

Programmable accelerators

Another acquisition points to an additional critical requirement for AI accelerators: programmability. When Intel acquired Habana Labs, the Israel-based developer of deep-learning accelerators, for approximately $2 billion, it was mainly the programmability of Habana’s accelerators for data centers that made headlines. Subsequently, Intel stopped the development of its in-house Nervana neural network processor line.

The programmability features allow AI designers to cater to a variety of workloads and neural network topologies. That is especially important when software algorithms are changing faster than AI chips can be developed, which risks leaving fixed-function hardware accelerators behind.

Here, programmability enables AI accelerators to adapt to evolving data center design needs. For instance, flexible architecture with programmability features can help AI designers manage shifting workloads, new standards, and updated algorithms.

AI design ecosystem

The two acquisitions mentioned above also signal an effort on the part of AI chipmakers to cobble together a broad mix of technologies that includes both hardware and software. Companies like Intel and Nvidia are aiming to provide offerings that range from AI processors to AI software toolkits.

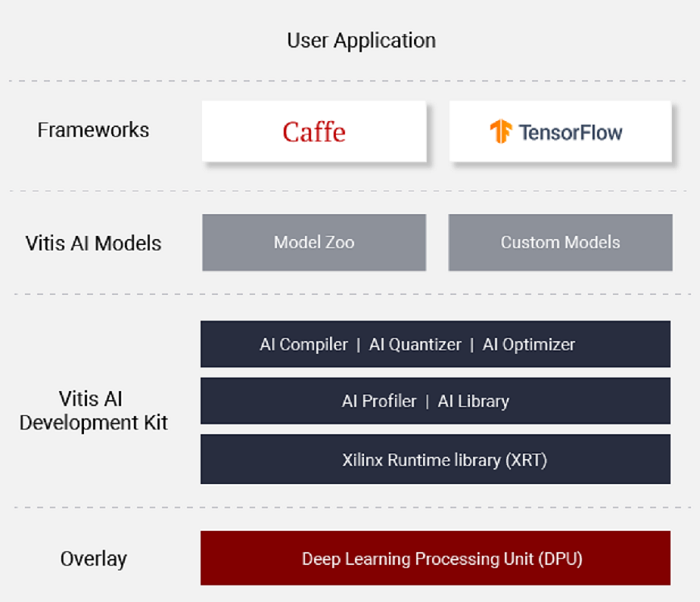

A view of how development kits facilitate AI acceleration for data center workloads (Image: Xilinx)

Therefore, AI designers must carefully review the availability of development tools for model creation, chip evaluation, and proof-of-concept designs. It’s also worth checking which AI frameworks, such as Caffe, PyTorch, and TensorFlow, are supported by the hardware accelerators.

Then there are software development kits that treat AI frameworks such as TensorFlow as the training environment and provide data conversion tools that handle trained models as well as inference processing.
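As a rough first pass at the framework check mentioned above, the sketch below reports which of the named frameworks are importable in the current Python environment. The mapping of framework names to module names (`caffe`, `torch`, `tensorflow`) reflects their usual import names, and the `installed_frameworks` helper is hypothetical. It only confirms that a framework is installed on the host; whether a given accelerator's toolchain accepts models from that framework still has to be confirmed in the vendor's documentation.

```python
import importlib.util
from typing import Dict, Mapping

# Assumed mapping from framework name to its usual top-level import name.
FRAMEWORK_MODULES: Dict[str, str] = {
    "Caffe": "caffe",
    "PyTorch": "torch",
    "TensorFlow": "tensorflow",
}


def installed_frameworks(
    modules: Mapping[str, str] = FRAMEWORK_MODULES,
) -> Dict[str, bool]:
    """Map each framework name to True if its module can be located.

    find_spec() looks the module up without importing it, so the check
    stays fast even for large frameworks.
    """
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in modules.items()
    }


if __name__ == "__main__":
    for name, present in installed_frameworks().items():
        print(f"{name}: {'installed' if present else 'not found'}")
```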

Hardware accelerator IPs

The AI accelerators, incorporated into chips, are also available as hardware IPs. Several semiconductor firms provide AI accelerators for use in custom chips through an IP licensing model.

Take the example of Milpitas, California-based Gyrfalcon Technology Inc. (GTI), which designs AI chips for data centers and provides the Lightspeeur 2803 accelerator IP for data center chips. Gyrfalcon provides licensees with USB 3.0 dongles that AI chip designers can use on Windows and Linux PCs as well as on hardware development kits such as Raspberry Pi.

Learn more about Electronic Products Magazine