In 1968, author Philip K. Dick asked whether androids dream of electric sheep. Now, 47 years later, Google offers a potential answer through a series of fantastical images autonomously created by the company’s image-recognition neural network, an advanced machine-vision system that was taught to identify the characteristics of animals, buildings, and other objects within pictures.

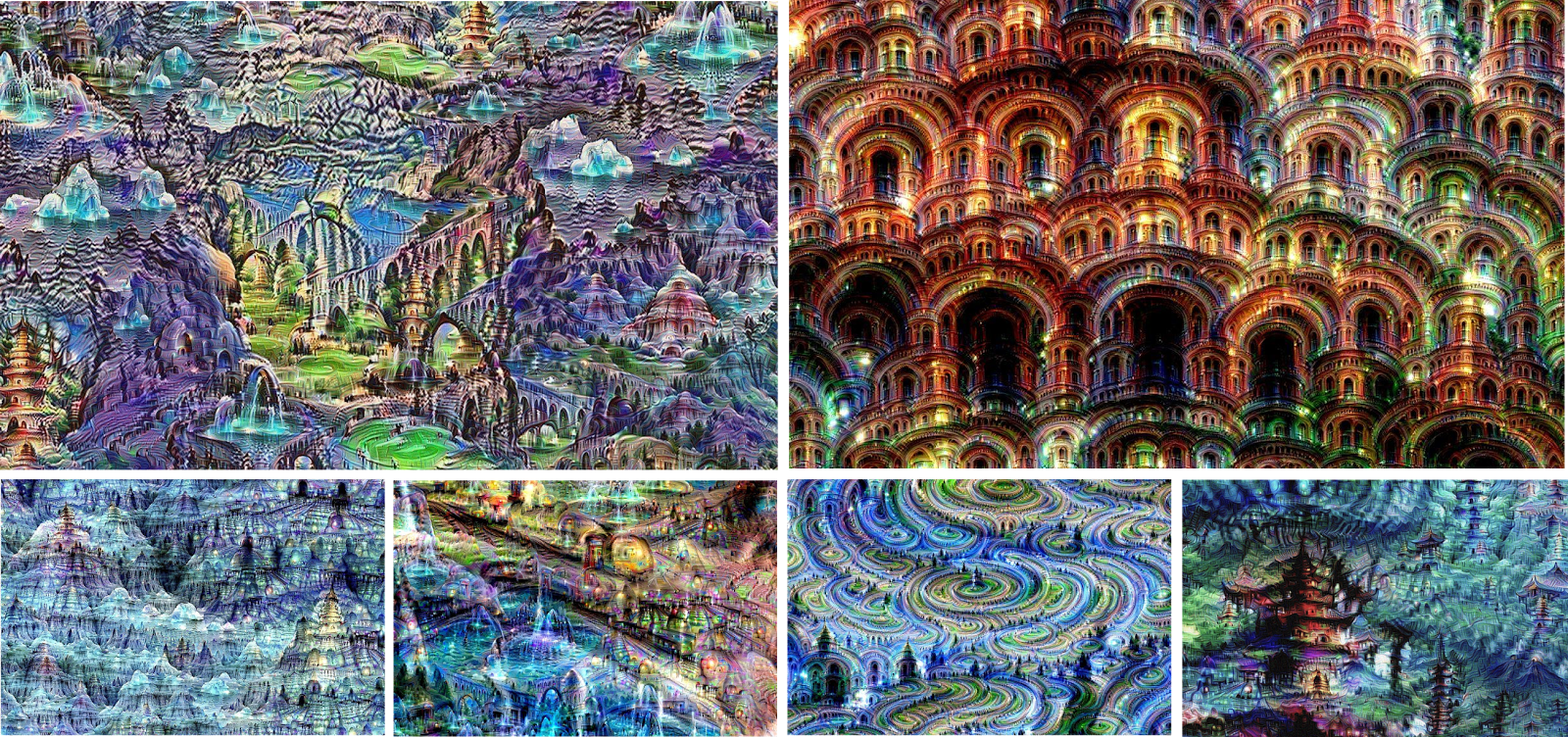

The end results range from horrifying to serene, often resembling an acid trip from the mind of Salvador Dalí.

Fabricating such imagery first required that Google “train” the artificial neural network by showing it millions of examples of an intended subject in the hope that it would extract the subject’s essential characteristics (e.g., the shape and color of a banana) while ignoring unimportant ones (the size of the banana, say). Over time, the network’s parameters are gradually adjusted to emphasize the traits it recognizes; the image is then resubmitted, and the process repeats until the network produces the intended classification.
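The adjust-and-resubmit cycle described above can be sketched with a single artificial neuron. This is a toy illustration, not Google’s training code: the three features (yellowness, elongation, size), the six examples, and the function names are all invented for the example, and a real image network learns from raw pixels with millions of parameters.

```python
# Toy training loop: one artificial neuron learns "banana" vs. "not banana".
# Features and data are invented for illustration: (yellowness, elongation, size).

def predict(weights, bias, features):
    """Return 1 ("banana") if the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0

def train(examples, learning_rate=0.1, max_epochs=100):
    """Nudge the parameters after each mistake, then show the examples again,
    until every example receives the intended classification."""
    weights = [0.0, 0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified as intended
            break
    return weights, bias

# Size varies freely within both classes, so it cannot decide the label.
EXAMPLES = [
    ((0.9, 0.8, 0.2), 1),
    ((0.8, 0.9, 0.9), 1),
    ((0.9, 0.7, 0.5), 1),
    ((0.2, 0.1, 0.3), 0),
    ((0.1, 0.3, 0.8), 0),
    ((0.3, 0.2, 0.6), 0),
]

weights, bias = train(EXAMPLES)
predictions = [predict(weights, bias, features) for features, _ in EXAMPLES]
print(predictions)
```

The update rule here is the classic perceptron rule, chosen only because it is short; large networks are trained with gradient-based methods instead.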

Structurally, the network consists of roughly 10 to 30 stacked layers of artificial neurons. An image fed into the first layer is passed on to the next, with each layer progressively extracting higher-level features as the image makes its way toward the “output” layer, which delivers the answer.
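As a rough sketch of that layer-to-layer flow, the snippet below passes a tiny “image” through three fully connected layers and records what each layer produces. The layer sizes, random weights, and function names are assumptions made for illustration; real image networks typically use convolutional layers and, as noted above, many more of them.

```python
import random

def relu(values):
    """Keep positive signals, silence negative ones."""
    return [max(0.0, v) for v in values]

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs, then ReLU."""
    sums = [b + sum(w * x for w, x in zip(row, inputs))
            for row, b in zip(weights, biases)]
    return relu(sums)

def make_layer(n_in, n_out, rng):
    """Random, untrained weights: enough to show the data flow."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(pixels, layers):
    """Pass the input through every layer, keeping each layer's activations."""
    activations = [pixels]
    for weights, biases in layers:
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations

rng = random.Random(0)
sizes = [16, 12, 8, 4]  # input pixels -> two hidden layers -> output layer
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
pixels = [rng.random() for _ in range(sizes[0])]

activations = forward(pixels, layers)
layer_widths = [len(a) for a in activations]
print(layer_widths)
```

Keeping the intermediate activations, rather than only the final answer, is what makes it possible to ask what an individual layer “sees”.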

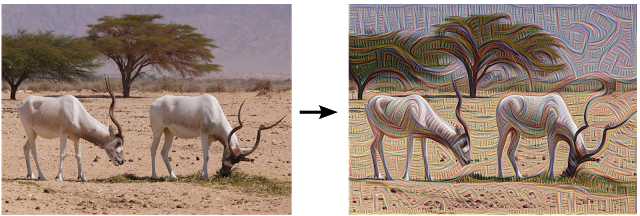

When the network is permitted to decide for itself which features to amplify, some rather bizarre things occur. Bear in mind that each layer in the network deals with features at a different level of complexity; lower layers focus on strokes, lines, and ornament-like patterns because they are sensitive to fundamental features such as edges and their orientations.

Higher-level layers produce far more peculiar results, sorting order from chaos in much the same way that we see familiar shapes in clouds. To demonstrate, when the network was fed a painting of a knight on horseback and ordered to identify animals, it began to detect dogs’ heads embedded in the very fabric of the painting’s reality.

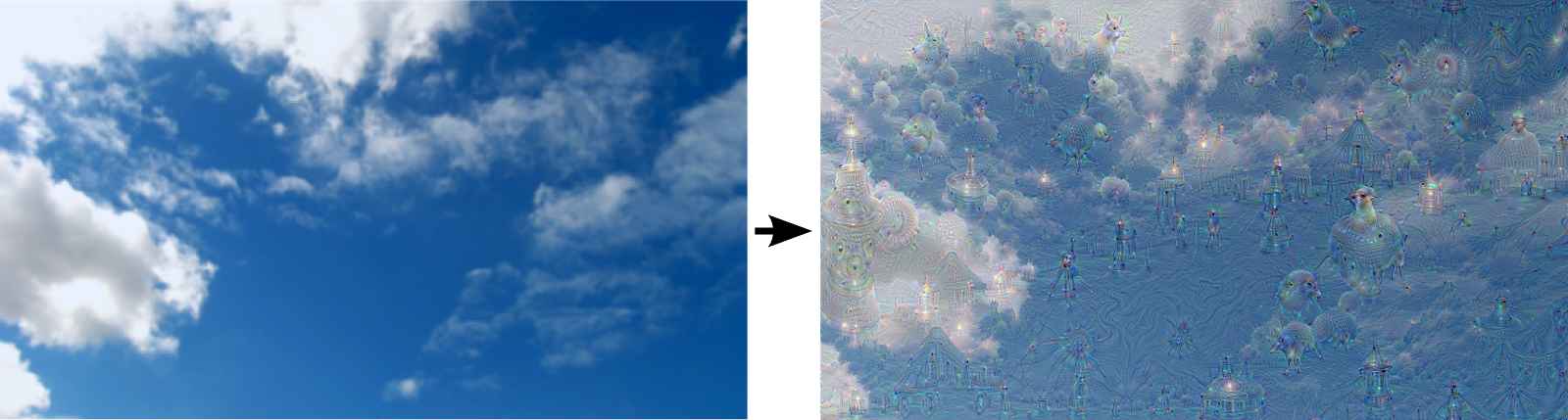

And as far as clouds are concerned, asking the network’s higher-level layers to detect animals in an image of clouds against a blue sky yields some rather surreal results.

These intriguing interpretations arise because the neural network triggers a feedback loop once it detects the faintest beginnings of what it has been taught to identify (in this case, mostly animals). The network continues to make the clouds look more and more animal-like by imposing additional “bird and dog” characteristics on top of the existing results until a highly detailed animal emerges.
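One way to picture this feedback loop is as repeated gradient ascent on the image itself: whatever a layer already responds to, however faintly, is pushed a little further each round. The sketch below is a simplified stand-in, not Google’s implementation. The four-pixel “image”, the three detector patterns, and the objective (half the sum of squared responses) are assumptions chosen to keep the arithmetic easy to follow.

```python
# Hypothetical feature detectors for a 4-pixel "image" (illustrative values only).
# The patterns are unit length and mutually orthogonal to keep the arithmetic simple.
DETECTORS = {
    "dog":  [0.5,  0.5,  0.5,  0.5],
    "bird": [0.5, -0.5,  0.5, -0.5],
    "fish": [0.5,  0.5, -0.5, -0.5],
}

def detect(image):
    """How strongly each detector responds to the image (a dot product)."""
    return {name: sum(w * p for w, p in zip(pattern, image))
            for name, pattern in DETECTORS.items()}

def dream_step(image, step_size=0.5):
    """Gradient ascent on 0.5 * sum(response**2): push the image toward
    each pattern in proportion to how strongly it is already detected."""
    responses = detect(image)
    updated = list(image)
    for name, pattern in DETECTORS.items():
        for i, w in enumerate(pattern):
            updated[i] += step_size * responses[name] * w
    return updated

# A "cloud" (pixel values centered on zero) with a faint dog hint (0.2),
# a fainter bird hint (0.1), and no fish at all.
image = [0.65, -0.45, -0.35, 0.55]
before = detect(image)
for _ in range(5):
    image = dream_step(image)
after = detect(image)

for name in DETECTORS:
    print(name, round(before[name], 3), "->", round(after[name], 3))
```

With these assumed patterns, each round multiplies every existing response by 1.5, so the faint dog and bird hints keep growing while the absent fish stays absent: the loop amplifies only what the detectors already found.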

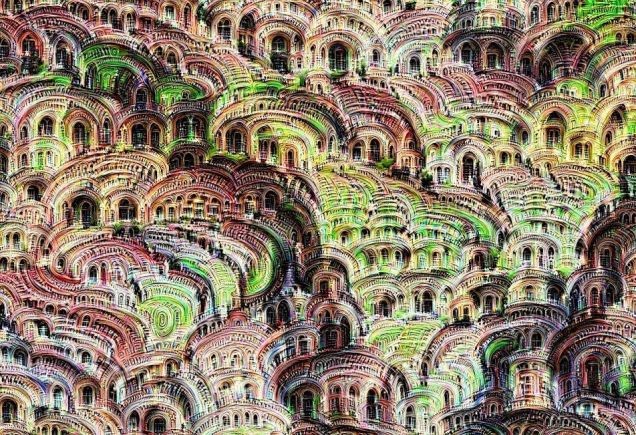

Google has coined the term “Inceptionism” to designate this phenomenon and has released a detailed blog post outlining the results of its machine-vision experimentation. Applying this principle to a random-noise image and programming the network to create its own interpretation based on a preset target, such as arches in architecture, produced the most abstract images in the series, whose surreal characteristics grew more pronounced with each round through the feedback loop.
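The random-noise variant can be sketched the same way: start from noise and repeatedly nudge the pixels toward whatever raises the score for one chosen target. Everything below is hypothetical. The 5×5 “arch” template stands in for a trained detector, and the linear score is far simpler than anything a real network computes.

```python
import random

# Hypothetical 5x5 "arch" template: 1 marks pixels the detector wants bright.
ARCH = [
    [0, 1, 1, 1, 0],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
]

def arch_score(image):
    """Linear detector: reward bright arch pixels, penalize bright background."""
    return sum((1 if ARCH[r][c] else -1) * image[r][c]
               for r in range(5) for c in range(5))

def refine(image, step_size=0.1):
    """One gradient-ascent step on arch_score, keeping pixels within [0, 1]."""
    return [[min(1.0, max(0.0, image[r][c] + step_size * (1 if ARCH[r][c] else -1)))
             for c in range(5)] for r in range(5)]

rng = random.Random(0)
image = [[rng.random() for _ in range(5)] for _ in range(5)]  # random noise
scores = [arch_score(image)]
for _ in range(20):  # each round through the loop sharpens the target
    image = refine(image)
    scores.append(arch_score(image))

for row in image:
    print("".join("#" if pixel > 0.5 else "." for pixel in row))
```

Because the score only ever rewards the target pattern, each pass makes the arch more pronounced until the pixels saturate, loosely mirroring how the generated images grew more extreme with every round.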

Although this type of experimentation is crucial to furthering our understanding of machine vision, Google, surprisingly, understands very little about why certain models work and others do not.

Source: Google
