By Gary Hilson, contributing editor

There’s never been more pressure on memory to meet the demands of new applications — everything from edge computing and the internet of things (IoT) to increasingly smarter phones and smart cars. There’s also artificial intelligence (AI) and machine learning, both of which are becoming a big part of next-generation platforms being developed by the major hyperscale players — the Googles, Facebooks, and Amazons of the world.

All of them are expecting a great deal of innovation from the broad electronics industry and the memory makers, whether it’s further improvements to incumbent memories such as DRAM and NAND flash or making emerging memories that incorporate novel materials commercially viable as part memory devices for new computing architectures. But despite their deep pockets, it’s unlikely that any of the companies will ever invest in manufacturing equipment to make their own memory devices, and they’re not interested in paying a premium price. If DRAM still does the job, they’re not going to pay $5 more per device for an emerging memory, because at this scale, it adds up quickly.

Jim Handy, Principal Analyst of Objective Analysis, said that the clout that hyperscalers have today is unprecedented; the closest historical analogy that he could think of was the “enormous” buying power that Apple had 15 to 20 years ago. However, the company was looking for only a minor change to a conventional computing architecture — one pin changed, for example — and expected that change for no extra charge. “They were more into taking existing computer architectures and then delivering them in a prettier way or more friendly way to their customers.”

What hyperscalers are looking for are wholesale architectural changes, said Handy. “Their motivation is actually very different because they look at what it costs them to buy something, and then they also look at how much power it’s going to take to run it.” The hyperscalers expect the industry to solve that problem — they’re not going to go out and cover the costs of new capital equipment.

Nor are they going to start building their own memory devices, particularly DRAM, said Stephen Pawlowski, Micron Technology’s Vice President of Advanced Computing Solutions, and aside from its volatility, there’s nothing available that has the reliability, speed, and endurance of DRAM. NAND and some of the newer storage-class memories, meanwhile, are complicated from a materials perspective, as well as understanding how they work under temperature, multiple cycles, and different workloads — so a memory maker, such as Micron, is in no danger of becoming irrelevant. “It takes a lot of creativity and ingenuity to use those devices,” he said. “When it comes down to what do we need to do for the memory and storage subsystem in terms of improving capacity and performance efficiency, the collaboration seems to be pretty good.”

Pawlowski sees the hyperscalers as having taken over as the canary in the coal mine from the OEMs who played the role in the mid-2000s. OEMs drove innovation around moving storage closer to the CPU, while the hyperscalers are trying to push network bandwidth in a way that nobody has before, and that means a lot of power being consumed to move data around. “When we look at how we’re going to improve the efficiency of our data centers, we really need to make sure we can get the latency of the transferred information between the compute system and the memory storage subsystem down as much as possible.”

Martin Mason, GlobalFoundries’ Senior Director of Embedded Memory, said that there’s interest in both MRAM and ReRAM being deployed in the data center’s compute-intensive applications, including mainstream AI processing being done in a server farm, where a key challenge is power and memory bandwidth. “You are starting to see the emergence of novel memory technologies being deployed in that space. I don’t think any of them have really been truly commercially exploited at this point, but both MRAM and ReRAM are being looked at as various high-density memory technologies to replace SRAM in those applications.”

This trend reflects the evolution of hyperscalers over the past five years, said Martin. “They’ve migrated from being predominantly software-based companies to increasingly becoming more vertically integrated in both the solutions that they provide in terms of the enterprise infrastructure and, now, the silicon to go into those solutions.” They see silicon components helping them in two different ways, he said. The first is fundamental differentiation from all of the commoditized enterprise hardware, and the second is economic. By vertically integrating and taking their designs directly to the foundry, they end up with a more cost-effective solution that scales faster and more cost-effectively.

A good example of these hyperscalers going beyond just software is Google’s Tensor Processing Unit (TPU) for its own AI workloads, a technology normally expected from a company like Intel, said Mahendra Pakala, Managing Director, Memory Group, Advanced Process Technology Development at Applied Materials. Right now, these companies are using only what’s available to realize their AI accelerators, “but once you start designing and building your accelerators, you do see shortcomings.” He believes that accelerators will drive the adoption of emerging memories, too, as well as the overall memory roadmap because of their requirements.

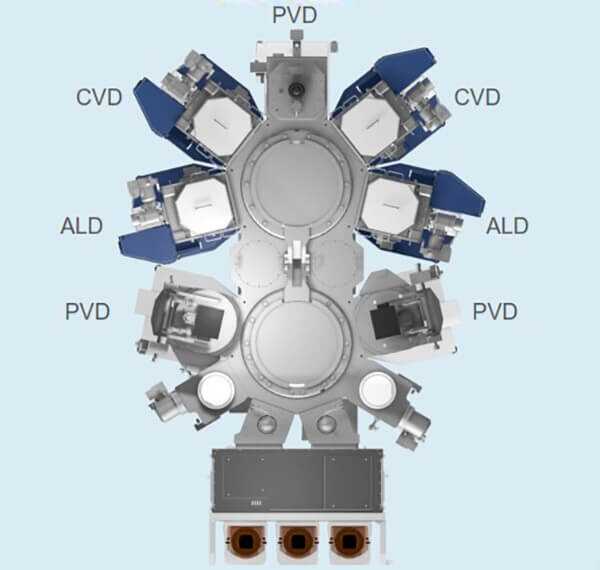

Applied Materials has evolved its Endura platform from a single-process system to an integrated process system as part of its materials engineering foundation for emerging memories. (Image: Applied Materials)

One established memory getting a lot of attention for AI applications is High Bandwidth Memory 2.0 (HBM2), which has traditionally been used for high-end graphics and high-performance computing, but Pakala noted that while it’s matured from a manufacturing perspective, it’s still relatively expensive. When it comes to emerging memories, PCRAM in the form of 3D XPoint has seen some commercial adoption, as has MRAM, he said. “We do see both of them maturing, and the name of the game is reducing the cost per bit. We do see a pathway to reduce the price.”

Ultimately, Applied sees materials engineering as the foundation for moving forward so that PCRAM, ReRAM, and MRAM can be cost-effectively manufactured to meet emerging use cases, including AI. Its latest Endura platforms, for example, focus on enabling the novel materials that are key to these new memories to be deposited with atomic-level precision. In the case of MRAM, which is seen as an excellent candidate for storing AI algorithms, Applied just announced a 300-mm MRAM system for high-volume manufacturing, made up of nine unique wafer-processing chambers all integrated in high-vacuum conditions and capable of individually depositing up to five different materials per chamber.

As much as the foundries are exploring how they can work with hyperscalers on devices based on emerging memories, Handy doesn’t see hyperscale applications significantly driving demand for them, as they would rather pay less for a complicated setup made of DRAM and flash. GlobalFoundries’ Mason also sees a pragmatic camp, which is about making what’s available work today and getting the best possible incremental solution. But there’s also a disruptive and “knock-it-out-of-the-park” mentality, wherein if the price is right, there would be a willingness to invest in the development of something that is truly disruptive in the industry, he said. “That’s how I think some of them see major breakthroughs happening.”

Handy said that these companies using memories would like them to continue to follow Moore’s Law, and that sets the boundaries going forward. “There needs to be continued capital spending by the memory companies, but if the capital spending becomes too great, if they try moving too fast, then it pushes up the costs rather than pushing them down.”

This article was originally published on EE Times .

Check out all the stories inside this Special Project:

Hyperscalers Getting Competitive With their IC Suppliers

Internet platform giants are all interested in custom silicon. Impatient, and with deep pockets, they’re getting more prone to going the DIY route.

The FANGs and the Foundries

As the FANGs design more and more of their own custom chips for AI, they’re getting ahead of traditional semiconductor designers and manufacturers. Something’s got to give.

A New Chipmaking Playbook for the AI Era

Applied Materials’ CEO says that the innovation required to drive the IC industry will rely on greater collaboration across the entire ecosystem. For example, Applied introduced a new piece of equipment to fulfill a specific need by its customers’ customer. That’s new.

Tech Titans Beginning to Drive Process Technology Roadmap

Locked in an AI arms race and sitting on piles of cash, hyperscalers are beginning to set the agenda in semiconductor process technology.

Semiconductors Swim with Seven Whales

The data center giants — the hyperscale companies — are pulling chip designers in many different directions in the search for big markets for big chips.

How Much Is Enough for Hyperscale Companies?

The world’s biggest companies have upended businesses and altered cultures. Now they’re starting to remake the semiconductor industry. Everybody should be worried — everybody.

Hyperscale Customers Changing the IC Game

The world’s biggest companies, used to upending businesses and altering cultures, are starting to remake the semiconductor industry. What exactly are they doing that’s so unprecedented, why are they doing it, and what might be the consequences? This article is the introduction to the Special Project.

Advertisement

Learn more about Electronic Products Magazine