Thanks to advances in technology, computers can process enormous amounts of information. They can now even recognize objects much as a human can, but, like a human, a computer can also be fooled by optical illusions.

Jason Yosinski, a Cornell graduate student, and his colleagues at the University of Wyoming Evolving Artificial Intelligence Laboratory have created images that look like white noise or random geometric patterns to people but that computers perceive as common objects. The trickery raises security concerns and suggests that computer vision needs further research.

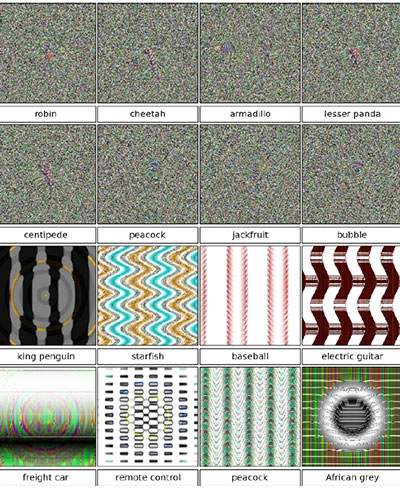

The images above were recognized by a computer, with high confidence, as common objects. (The white-noise versions and the pattern versions were created by different methods.)

“We think our results are important for two reasons. First, they highlight the extent to which computer vision systems based on modern supervised machine learning may be fooled, which has security implications in many areas. Second, the methods used in the paper provide an important debugging tool to discover exactly which artifacts the networks are learning,” said Yosinski.

How computers are trained

Training a computer consists of showing it images and telling it the name of the object in each picture. Given many different views of the same object, the computer can assemble a model that associates those views with that name.
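The idea of learning from labeled examples can be illustrated, very loosely, with a nearest-centroid classifier: average the examples seen for each name into a "prototype," then label a new input with the name of the closest prototype. This is a minimal sketch under stated assumptions, not how the researchers' deep networks are trained (those learn millions of weights by gradient descent). The two-number "feature vectors" and the labels below are hypothetical stand-ins for real images.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the feature vectors seen for each label into one prototype per label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(model, features):
    """Return the label whose prototype is closest (Euclidean distance) to the input."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical toy data: each "image" is reduced to two made-up feature values.
examples = [
    ([0.9, 0.1], "cat"),
    ([0.8, 0.2], "cat"),
    ([0.1, 0.9], "school bus"),
    ([0.2, 0.8], "school bus"),
]
model = train(examples)
print(predict(model, [0.85, 0.15]))
```

Each new view of an object nudges its prototype, which mirrors the article's point that many different views end up associated with a single name.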

According to the Cornell researchers, computer scientists have achieved high accuracy in image recognition using deep neural networks (DNNs), which loosely simulate the synapses in a human brain by increasing the value of a location in memory each time it is activated. These “deep” networks work in layers: one layer recognizes that a picture shows a four-legged animal, another that it’s a cat, and another narrows it down to “Siamese.”
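The "layers" idea can be sketched as a forward pass: each layer computes weighted sums of the previous layer's outputs, a nonlinearity (here ReLU) is applied, and the final scores are turned into confidences with softmax. This is a minimal illustration assuming tiny fully connected layers with hand-picked, hypothetical weights; real image-recognition DNNs use many convolutional layers with millions of learned weights.

```python
import math


def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (the network's 'confidence')."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, layers):
    """Run the input through each hidden layer (with ReLU), then softmax the last layer."""
    activations = pixels
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))


# Hypothetical weights: a hidden layer of two "feature detectors" and an output layer
# scoring two classes, say "cat" and "dog".
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]),
]
probs = forward([0.9, 0.1], layers)
print(probs)
```

The probabilities from the last layer are what a system reports as its certainty, which is the number the fooling images in this story drive up.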

What the Cornell researchers found was that as advanced as the computers are, blobs of color and patterns of lines may be enough to fool them. For example, the computer might say “school bus” given just yellow and black stripes, or “computer keyboard” for a repeating array of roughly square shapes.
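One generic way to produce such fooling inputs is a search that starts from random noise, makes small random changes, and keeps only the changes that raise the classifier's confidence in a chosen label. The sketch below uses simple hill climbing against a hypothetical toy classifier whose "school bus" score rewards alternating bright and dark pixels, echoing the stripes example above. It is an illustration of the general idea under these assumptions, not the researchers' exact method or network.

```python
import math
import random

# Hypothetical toy classifier over an 8-pixel grayscale strip (values 0.0 to 1.0).
# The "school bus" score rewards alternating bright/dark pixels (a stripe detector);
# the competing "other" class has a fixed score of 0.
STRIPE_WEIGHTS = [3.0, -3.0] * 4


def bus_confidence(pixels):
    """Softmax probability of 'school bus' versus 'other' for the toy classifier."""
    bus_score = sum(w * p for w, p in zip(STRIPE_WEIGHTS, pixels))
    return 1.0 / (1.0 + math.exp(-bus_score))  # two-class softmax with "other" score 0


def fool(steps=2000, seed=0):
    """Hill-climb from random noise toward an input the classifier calls 'school bus'."""
    rng = random.Random(seed)
    image = [rng.random() for _ in STRIPE_WEIGHTS]  # start from white noise
    best = bus_confidence(image)
    for _ in range(steps):
        candidate = image[:]
        candidate[rng.randrange(len(candidate))] = rng.random()  # mutate one pixel
        score = bus_confidence(candidate)
        if score > best:  # keep the mutation only if confidence rises
            image, best = candidate, score
    return image, best


image, confidence = fool()
print([round(p, 2) for p in image], round(confidence, 4))
```

The search never checks whether the result resembles a bus to a person; it only chases the classifier's score, which is why such images can look meaningless to humans while the computer reports high certainty.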

Why this is dangerous

This trickery could lead to wrong answers from the image analysis that many web systems rely on to sift through large data sets. A web advertiser, for example, might not be able to decide which ad to show you on Facebook, and government agencies might not be able to determine whether certain web activity is suspicious.

Harmful web pages could include fake images that fool search engines or bypass safe-search filters. Even an abstract image might be able to pass a facial recognition system.

The researchers’ next steps involve retraining the computers and gaining a better understanding of what really goes on inside the neural networks.

Story via Cornell University.


Learn more about Electronic Products Magazine