Intel Corp. unveiled two processor families at its “AI Everywhere” event on Dec. 14: the Intel Core Ultra mobile processor, its most efficient client chip and the first built on the Intel 4 process technology with Foveros 3D advanced packaging, and the 5th Gen Xeon processor with AI acceleration. Intel says the new processors improve on the previous generation in CPU compute, graphics, power efficiency and cost, and add new AI features.

Intel CEO Pat Gelsinger also gave a quick peek at the Gaudi 3 AI accelerator for deep-learning and large-scale generative AI models, which is expected to be available next year. He also discussed the company’s AI footprint, from the cloud and enterprise to the edge, and its progress toward the goal of delivering five new process technology nodes in four years.

Intel’s on track to deliver five new process technology nodes in four years. (Source: Intel AI Everywhere)

“Intel is enabling AI everywhere, not only in applications but in every device, data center, cloud, the edge and the PC, and making it safe, secure, trusted, well-engineered and regulated appropriately,” Gelsinger said. And it has to be sustainable, he added.

“AI will dominate the edge workload, and that’s what we think about with the AI PC,” Gelsinger said. “We’ve been seeing this excitement of generative AI—the star of the show for 2023, but we think 2024 marks the AI PC and that will be the star of the show in this coming year, unleashing this power for every person.”

Gelsinger calls it a “Centrino moment,” when the platform drove Wi-Fi adoption and untethered laptops, where every coffee shop, hotel and business needed to be connected and internet-enabled. “We think of the AI PC as that kind of moment driving this next generation of the platform experience.

“Andy Grove called the PC the ultimate Darwinian device,” he added. “The next major evolutionary step of that device is underway today that’s called the AI PC.”

Leading that charge is the new Core Ultra processor family.

Michelle Johnston Holthaus, Intel’s general manager of the Client Computing Group, called the Core Ultra the company’s largest architectural shift in 40 years, launching the AI PC. “It was a radical change in how we manufacture and assemble our microchips, but it was absolutely worth it.

“AI is a huge inflection point, and we believe that computers infused with AI will permanently change the PC market and fundamentally reshape how we interact with our computer,” she said. “Over the last five years, Intel has nurtured this intersection of AI and PCs with a steady drumbeat of new machine-learning, inferencing and computer-vision capabilities all within our client portfolio, and it was to prepare for this moment, putting powerful AI tools into the hands of millions.”

Core Ultra for AI PC

The Core Ultra features Intel’s first client on-chip AI accelerator, the neural processing unit (NPU), called AI Boost, to enable a new level of power-efficient AI acceleration with 2.5× better power efficiency than the previous generation.

The Core Ultra features a new performance-core (P-core) architecture with improved instructions per cycle, new Efficient-cores (E-cores) and low-power Efficient-cores (LP E-cores), delivering up to 11% higher multi-threaded performance than the competition in ultra-thin PCs, according to Intel. The family offers up to 16 cores (six P-cores, eight E-cores, two LP E-cores), 22 threads and the next-generation Intel Thread Director for workload scheduling.
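The quoted core and thread counts are consistent if only the P-cores run two threads each. That per-core threading split is an assumption here, not something stated at the event. A minimal Python sketch of the arithmetic:

```python
# Core mix quoted for the top Core Ultra configuration.
cores = {"P": 6, "E": 8, "LP_E": 2}

# Assumption (not stated in the article): only P-cores are two-way
# multithreaded; E-cores and LP E-cores run one thread each.
threads_per_core = {"P": 2, "E": 1, "LP_E": 1}

total_cores = sum(cores.values())
total_threads = sum(cores[kind] * threads_per_core[kind] for kind in cores)

print(total_cores, total_threads)  # 16 22
```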

The Core Ultra also delivers a built-in Intel Arc GPU with up to eight Xe-cores, AI-based Xe Super Sampling (XeSS), DX12 Ultimate support and up to double the graphics performance of the previous generation. It includes support for hardware-accelerated ray tracing, mesh shading, AV1 encode and decode, HDMI 2.1 and DisplayPort 2.1 20G.

Other features include up to 5.1-GHz maximum turbo frequency, up to 64-GB LPDDR5/x and up to 96-GB DDR5 maximum memory capacity, and 40-Gbits/s connection speeds with Thunderbolt 4. Wireless features include integrated Intel Wi-Fi 6E (Gig+) and support for discrete Intel Wi-Fi 7 (5 Gig) for multi-gigabit speeds in more locations, wired-like responsiveness and extreme reliability.

Johnston Holthaus said the Core Ultra is the fastest processor for ultra-thin notebooks with a world-class GPU. In terms of energy efficiency compared with previous generations, it uses 40% less power in day-to-day activities, and when compared with the competition, it delivers up to 79% lower power in key metrics.

“It delivers Intel’s first on-chip AI accelerator,” she said. “It’s fantastic for offloading those long-running AI tasks that really allow us to improve battery life. The energy efficiency of the NPU is 2.5× better at executing the same code from our previous generation of products.

Michelle Johnston Holthaus, Intel’s general manager of the Client Computing Group, at the AI Everywhere event in New York. (Source: Intel AI Everywhere)

“With Core Ultra, we’re introducing the all-new NPU to enable low-power AI across the client,” Johnston Holthaus said. “But I think it’s important that in addition to the NPU, we architected our CPU and our GPU cores to deliver the most AI-capable and power-efficient client processor in Intel’s history.”

Because of the efficiency, Intel was able to expand the size of the GPU to deliver the next level of graphics performance, she said. “With the Intel Arc GPU, we’re seeing gaming and graphics performance that’s up to 2× our previous generation. More importantly, it’s the hardware and the software innovation combined that enables us to deliver this built-in GPU with world-class performance in this space.”

Intel also launched the AI PC Acceleration Program, Johnston Holthaus said. “This is a first-of-its-kind global initiative designed to increase and enable AI software development within the PC ecosystem, bringing those developers back to the heart of the PC. This is a multi-generation program where we’re providing hardware and software engineering to really create and dream that next great AI-powered application.”

Intel is partnering with more than 100 software vendors to bring several hundred AI-enabled applications to the PC market.

Intel also announced that Core Ultra–based AI PCs are available now from select U.S. retailers. The company expects the processor will be in more than 230 laptop and PC designs over the next year. AI PCs will account for 80% of the PC market by 2028, according to the Boston Consulting Group.

“Over the next two years, Intel is committed to shipping over 100 million client processors with dedicated AI,” Johnston Holthaus said. “That’s up to 5× more than all of our competitors combined. As I said, AI is a market changer.

“The power of AI is absolutely going to grow the PC industry,” she added. “All of this is driven by the seismic performance and experience improvements that we all know AI can offer.”

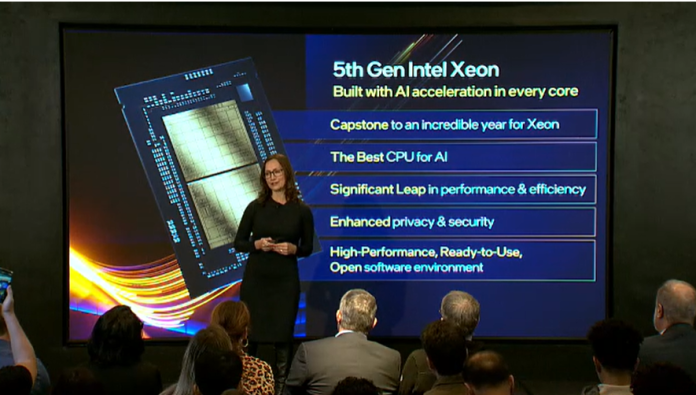

5th Gen Xeon processor

Intel also launched the AI-accelerated 5th Gen Intel Xeon processor family, delivering a 21% average performance improvement for general compute and enabling a 36% higher average performance per watt across a range of customer workloads, including AI, high-performance computing, networking, storage, database and security, compared with the previous generation. Intel said customers can reduce their total cost of ownership by up to 77% by upgrading from even older generations.

Designed for the data center, cloud, network and edge, the 5th Gen Xeon processors (code-named Emerald Rapids) support up to 64 cores per CPU and nearly 3× the maximum last-level cache of the previous generation. They offer eight channels of DDR5 per CPU, support DDR5 at up to 5,600 megatransfers per second (MT/s) and increase inter-socket bandwidth with Intel UPI 2.0, offering up to 20 gigatransfers per second (GT/s). They are pin-compatible with the previous generation.
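For a rough sense of what those memory figures imply, the theoretical peak bandwidth per CPU can be estimated from the channel count and transfer rate. The sketch below assumes each DDR5 channel moves 8 bytes (64 data bits) per transfer; that width is an assumption, not a figure from Intel's announcement, and sustained bandwidth in practice will be lower than this ceiling. The function name is hypothetical.

```python
def peak_bandwidth_gb_s(channels, mega_transfers_per_s, bytes_per_transfer=8):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    bytes_per_transfer=8 assumes a 64-bit data path per channel.
    """
    bytes_per_second = channels * mega_transfers_per_s * 1e6 * bytes_per_transfer
    return bytes_per_second / 1e9


# Eight channels of DDR5 at 5,600 MT/s, as quoted for 5th Gen Xeon.
per_channel = peak_bandwidth_gb_s(1, 5600)
per_cpu = peak_bandwidth_gb_s(8, 5600)

print(f"{per_channel:.1f} GB/s per channel, {per_cpu:.1f} GB/s per CPU")
```

Under that assumption the ceiling works out to 44.8 GB/s per channel and 358.4 GB/s per CPU.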

“Intel started building AI acceleration into our Xeon processors more than a decade ago, and today, Xeon has established itself as the industry standard for powering the AI workflow,” said Sandra Rivera, Intel’s executive vice president and general manager of the Data Center and AI Group.

In 4th Gen Xeon, Intel integrated its Advanced Matrix Extensions, which boost AI performance by up to 10×. Most of the world’s leading companies have adopted 4th Gen Xeon to accelerate their AI, including all of the top 10 hyperscalers, according to Rivera. It is also being adopted by cloud service providers.

Intel claims Xeon is the only mainstream data center processor with built-in AI acceleration, with the new 5th Gen Xeon delivering up to 42% higher inference and fine-tuning performance on models as large as 20 billion parameters.

Sandra Rivera, Intel’s executive vice president and general manager of the Data Center and AI Group, at the Intel AI Everywhere event. (Source: Intel AI Everywhere)

“This is the most power-efficient, highest-performance and security-enabled Xeon we’ve ever delivered, offering a 21% average performance gain over the previous generation,” Rivera said. “Privacy and control over data is paramount, and 5th Gen Xeon offers increased confidentiality and security with Trust Domain Extensions [Intel TDX], which will be generally available to all OEM and CSP solutions providers.”

In addition, AI accelerators are built into every one of the processor’s 64 cores, giving users up to 42% higher inference performance versus the prior generation, Rivera said. “This means enterprises can easily and cost-effectively use the CPU to run the latest generative AI models like GPT-J, Dolly and LLaMA.”

In the cloud, AI-powered natural-language–processing applications will operate up to 23% faster, and at the edge, objects can be classified up to 24% faster, she added.

The event also highlighted a few customer wins. For example, IBM announced that 5th Gen Intel Xeon processors achieved up to 2.7× better query throughput on its watsonx.data platform compared with previous-generation Xeon processors during testing, and Google Cloud will deploy 5th Gen Xeon next year.

Servers based on 5th Gen Xeon will be available starting in the first quarter of 2024 from leading providers. Major CSPs will announce the availability of 5th Gen Xeon processor-based instances throughout the year.

Intel said the Xeon processors due next year will deliver major advances in power efficiency and performance. Sierra Forest, with E-core efficiency and up to 288 cores, is expected in the first half of 2024, followed by Granite Rapids, with P-core performance.

For developers, Intel builds optimizations into AI frameworks like PyTorch and TensorFlow, offers foundational libraries (through oneAPI) to make software performant across different types of hardware and provides advanced developer tools, including Intel’s oneAPI and OpenVINO toolkit.
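As an illustration of how an application might target the NPU, GPU or CPU through the OpenVINO toolkit, the sketch below picks the first available device from a preference order. The `pick_device` helper and the preference order are hypothetical, not part of OpenVINO. The only OpenVINO call used is `Core().available_devices`, and the `import openvino as ov` form assumes a recent release (2023.1 or later). If OpenVINO is not installed, the sketch falls back to a CPU-only list so it still runs.

```python
def pick_device(available, preference=("NPU", "GPU", "CPU")):
    """Return the first available device that matches the preference order.

    Hypothetical helper for illustration; not an OpenVINO API.
    """
    for wanted in preference:
        for name in available:
            # Devices may be reported with an index, e.g. "GPU.0";
            # compare on the part before the dot.
            if name.split(".")[0] == wanted:
                return name
    raise RuntimeError("none of the preferred devices is available")


try:
    import openvino as ov  # optional; only needed to query a real machine

    devices = ov.Core().available_devices
except (ImportError, AttributeError):
    # Fallback so the sketch runs without OpenVINO (or with an older release).
    devices = ["CPU"]

print(pick_device(devices))
```

The returned name could then be passed as the device argument when compiling a model, so that long-running inference lands on the NPU when one is present and on the GPU or CPU otherwise.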
