By Gina Roos, editor-in-chief

Intel Corp. released more details on its upcoming high-performance artificial intelligence (AI) accelerators, the Intel Nervana neural network processors (the NNP-T for training and the NNP-I for inference), at Hot Chips 2019. The company also discussed its hybrid chip packaging technology, Intel Optane DC persistent memory, and TeraPHY chiplet technology for optical I/O.

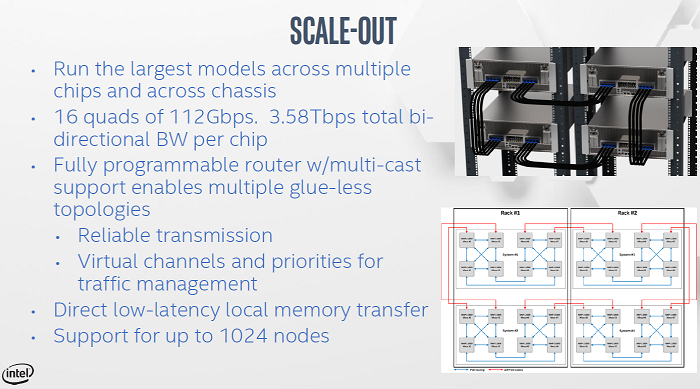

Intel’s dedicated accelerators, the Nervana NNPs, are built from the ground up with a focus on AI. The Intel Nervana NNP-T (code-named Spring Crest) is designed to train deep learning models at scale, focusing on two challenges: training a network as fast as possible and doing it within a given power budget.

(Image: Intel presentation)

The NNP-T builds in the features that large models need, without the overhead of supporting legacy technology. Because it is programmable, the chip can be tailored to a variety of existing and emerging workloads.

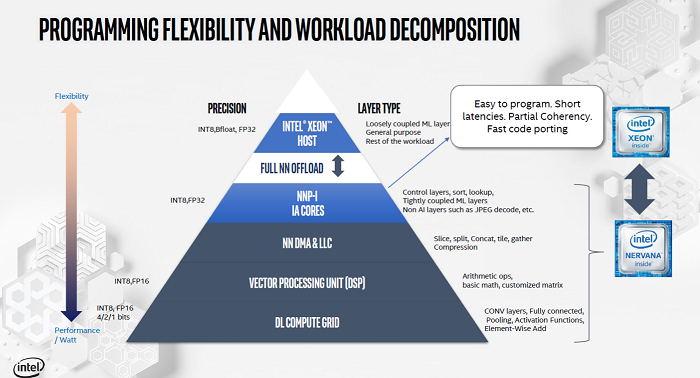

The Intel Nervana NNP-I (code-named Spring Hill) is developed for deep learning inference for datacenter workloads. This chip introduces specialized leading-edge deep learning acceleration, and leverages Intel’s 10-nm process technology with Ice Lake cores to deliver industry-leading performance per watt across major datacenter workloads, said Intel.

(Image: Intel presentation)

The chip is also programmable without sacrificing performance or power efficiency. Intel says the accelerator offers easy programming, low latency, and fast code porting, and it supports all major deep learning frameworks.

Together, the Nervana NNPs and Intel's packaging, memory, storage, and interconnect technologies are designed to meet the growing demand to turn the huge amounts of data generated by enterprises into actionable knowledge where that data is collected.

Learn more about Electronic Products Magazine