By Richard Quinnell, editor-in-chief

Even as CES 2018 introduced the latest in electronics for consumers and businesses, the next generation has already started to sprout. One of the germinating seeds is the i.MX8M family from NXP, also introduced at CES. The multi-core device provides all of the processing needed to implement high-resolution music, 4K video, and voice activation in a single chip, backed by AI and machine learning to give next-generation streaming media centers and other IoT apps the ability to personalize their behavior for individual users.

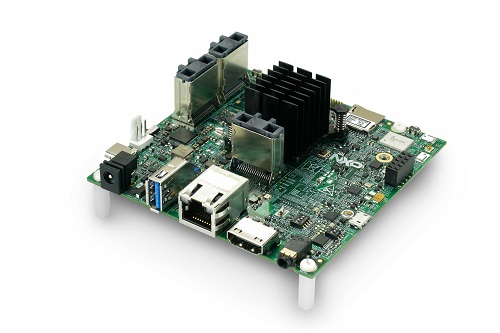

The i.MX8M offers a full package of media processing capabilities, aiming to turn machine interaction into a multi-sensory experience. It includes a graphics processing unit, video processing for both machine vision and streaming media decoding, a controller for dual 4K UHD displays, and audio processing for both high-definition playback and voice activation. In addition, the i.MX8M offers a quad-core Arm Cortex-A53 processor for compute-intensive operations and has a built-in Cortex-M4 microcontroller to offload simpler tasks such as listening for the wake word, reading sensor data, or issuing control signals. The combination allows developers to balance power and performance, keeping the larger processors asleep when they are not needed.
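To make the power-versus-performance split concrete, here is a minimal Python sketch of the idea: a low-power listener screens incoming audio and wakes the larger cores only when it hears the wake word. It is a simulation under stated assumptions, not NXP code. The class name, the wake phrase, and the use of text fragments in place of real audio frames are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PowerGatedAssistant:
    """Toy model: a small always-on core gates a larger, sleepy one."""

    wake_word: str = "hello device"  # hypothetical wake phrase
    big_cores_awake: bool = False
    wakeups: int = 0
    handled: list = field(default_factory=list)

    def on_audio(self, fragment: str) -> None:
        """Runs on the low-power core for every audio fragment.

        Real hardware would process audio samples; plain text stands in
        for them here so the control flow is easy to follow.
        """
        text = fragment.lower().strip()
        if not text.startswith(self.wake_word):
            return  # no wake word: the big cores stay asleep
        command = text[len(self.wake_word):].strip(" ,")
        self._wake_big_cores()
        self.handled.append(command)  # heavy processing would happen here
        self._sleep_big_cores()

    def _wake_big_cores(self) -> None:
        self.big_cores_awake = True
        self.wakeups += 1

    def _sleep_big_cores(self) -> None:
        self.big_cores_awake = False


assistant = PowerGatedAssistant()
for fragment in ["background chatter", "Hello device, play jazz", "more chatter"]:
    assistant.on_audio(fragment)
```

In this sketch only one of the three fragments triggers a wake-up, which is the behavior the architecture is meant to encourage: cheap screening all the time, expensive processing only on demand.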

NXP touts the i.MX8M as the most powerful processor it has moved into media, and one of the chip’s targets is to forestall a scalability problem looming on the horizon for voice-activated IoT. Currently, voice-activation systems use a fixed “wake word” for activation and then stream digitized audio to the cloud for speech recognition and interpretation. This approach presents two problems. First, the system requires a working internet connection to function. Lose the ’net and your voice-activation system becomes deaf to your commands. Second, even with a working connection, latency becomes an issue: as more and more systems place demands on the capacity of the network and the voice recognition engine, response times increase.

With the i.MX8M (M for media), there is enough processing power available to provide a measure of local voice recognition, Martyn Humphries, NXP vice president of consumer and industrial i.MX application processors, told Electronic Products in an interview. Having a local recognition ability lets such systems continue offering basic control functions even without an active internet connection and helps reduce traffic and latency when there is one. In addition to smart TVs and other entertainment systems, the processor family is suitable for IoT systems such as smart thermostats, locks, home security, and other home automation devices, where it can provide a more intuitive and responsive interface.
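The local-first approach described here can be sketched in a few lines of Python. This is an illustration only, assuming a small fixed command table on the device and an optional cloud recognizer passed in as a callable. The command strings, action names, and function names are hypothetical and do not come from NXP.

```python
from typing import Callable, Optional, Tuple

# Hypothetical set of basic commands the device can recognize on its own.
LOCAL_COMMANDS = {
    "lights on": "LIGHTS_ON",
    "lights off": "LIGHTS_OFF",
    "volume up": "VOLUME_UP",
}


def recognize_locally(utterance: str) -> Optional[str]:
    """Return an action for a known basic command, or None if unknown."""
    return LOCAL_COMMANDS.get(utterance.lower().strip())


def handle_utterance(
    utterance: str,
    cloud_recognizer: Optional[Callable[[str], str]] = None,
) -> Tuple[str, Optional[str]]:
    """Try on-device recognition first; use the cloud only when needed."""
    action = recognize_locally(utterance)
    if action is not None:
        return ("local", action)  # no network traffic, no round trip
    if cloud_recognizer is None:
        return ("unavailable", None)  # offline and not a basic command
    try:
        return ("cloud", cloud_recognizer(utterance))
    except ConnectionError:
        return ("unavailable", None)  # connection dropped mid-request


def offline_cloud(utterance: str) -> str:
    """Stand-in for a cloud service that cannot be reached."""
    raise ConnectionError("no internet connection")
```

Calling `handle_utterance("Lights On", offline_cloud)` still resolves on the device, while a request outside the local table, such as a weather query, is reported as unavailable until the connection returns. Basic control keeps working offline, and simple commands never add load to the network.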

The rise in AI and machine learning brings another capability to voice systems with the kind of processing power that the i.MX8M brings to bear: personalization. Such systems could be configured to give users the chance to define their own wake words rather than depending on something that the vendor has chosen. Furthermore, by interacting with the user, the AI can use machine learning to familiarize itself with individual voice and word usage patterns. The result would be a system that not only recognizes which user is speaking but also knows enough about that user’s speech patterns to interpret commands. The machine would learn to deal with the speaker’s manner of expression rather than forcing the user to speak in the stilted manner of machines.

One thing that the i.MX8M does not have built in is Wi-Fi connectivity; that will require a separate device. But there are other interfaces on-chip, including PCI Express, USB 3.0, and Ethernet, providing connectivity options when Wi-Fi is not needed.
