Engineers and scientists have long been monitoring and recording the physical and electrical world. The first data recording system, the telegraph, was invented in the mid-19th century by Samuel Morse. That system automatically recorded the dots and dashes of Morse code, which were inscribed on paper tape by a pen moved by an electromagnet. In the early 20th century, the first chart recorder was built for environmental monitoring. These early chart recorders, which were completely analog and largely mechanical, dragged an ink pen over paper to record changes in electrical signals. The space program then created digital, high-speed data acquisition systems for both analog and digital data.

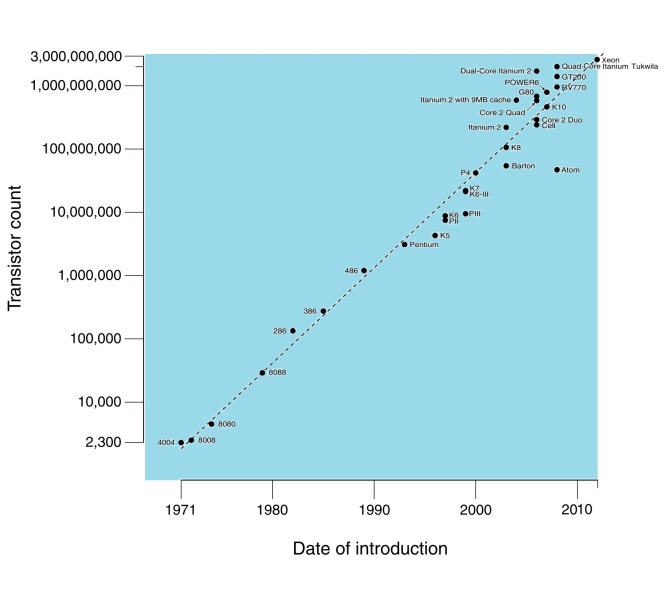

With our digital world growing more complex, the systems recording the physical and electrical phenomena of today and tomorrow need to meet new data acquisition and logging challenges. Today, chart recorders and data-logging systems are largely paperless and predominantly digital. They include digital processors, memory, and communications to link them to the ever-connected world. Over the past decade, digital storage capacity has increased almost exponentially while the corresponding cost has plummeted. As Moore's law continues to progress (Fig. 1), creating more powerful, less expensive, and smaller processors that use less energy, future data acquisition and logging systems will leverage this technology to grow more intelligent and feature-rich.

Fig. 1: Over the past four decades, Intel has been a key contributor to Moore's law with inventions from its 4004 and 8088 to its latest Xeon processors containing 2.6 billion transistors.

Next-generation data-logging systems

Over the past two decades, the intelligence of data-logging systems has become more decentralized, with processing elements moving closer to the sensor and signal. Because of this change, remote DAQ systems and loggers now take part in the decision-making process instead of just collecting data as they did in the past. As Mariano Kimbara, Senior Research Analyst at Frost & Sullivan, noted, “We foresee the need for DAQ systems that not only acquire data over a network, server, or PCs but also provide intelligence to help with the decision-making process.”

There are many current examples of high-performance logging systems that integrate the latest silicon and IP from companies like Intel, ARM, Xilinx, and more. Most of these systems use a processor-only architecture, while some use a heterogeneous computing architecture that combines a processor with programmable logic. Some examples of high-performance data-logging systems on the market today are:

- HBM QuantumX CX22W

- Graphtec GL900

- NI CompactRIO and stand-alone CompactDAQ system

- Yokogawa WE7000

With data-logging systems featuring more intelligence and processing, the software they are running will be a primary way for vendors to differentiate themselves. Traditional data-logging software consists of turnkey tools that engineers use to configure the system and get to the measurements quickly, like HBM's Catman or Yokogawa's DAQLOGGER. The downside of turnkey tools is that they tend to be less flexible; what you see is what you get. On the other end of the spectrum, engineers and scientists can take advantage of a text-based programming tool like Microsoft Visual Studio or a graphical programming approach like NI LabVIEW system design software to program the processors within these systems. Programming tools offer the most customization for these data-logging systems, including a wider range of signal processing and the ability to embed any type of intelligence, but they have a steeper learning curve compared to turnkey tools.

Pushing logging systems' limits

A variety of applications and industries need far more intelligence in their data-logging systems. Industries such as automotive, transportation, and electric utilities are already using high-performance data-logging systems. Table 1 illustrates some future capabilities of high-performance data-logging systems based on Moore's law and advancements in processing technologies.

Table 1: Future capabilities of high-performance data-logging systems based on Moore's law.

| Category | Future Features and Capabilities |
| --- | --- |
| Storage | |
| Processing | |
| I/O Rates and Timing | |
| Application Software | |
| Visualization | |
| Connectivity | |
| Size and Ruggedness | |

Vehicles being designed today include thousands of sensors and processors and millions of lines of code. With more intelligent vehicles come more parameters, both physical and electrical, to test and monitor. In addition, test engineers require the logging systems to be intelligent and rugged enough to use within the vehicles they're testing.

For instance, engineers at Integrated Test & Measurement (ITM) in the United States needed a high-performance and flexible in-vehicle test solution to determine the vibration levels of an on-highway vocational vehicle's exhaust system during operation. They built a high-speed vibration logging solution that provided a wireless interface from a laptop or mobile device to the stand-alone NI CompactDAQ system, which was programmed with NI LabVIEW system design software. The 1.33 GHz dual-core Intel Core i7 processor within the stand-alone NI CompactDAQ system enabled capabilities such as advanced signal processing, high-speed streaming at over 6 MB/s to nonvolatile storage for all 28 simultaneously sampled accelerometer inputs, and Wi-Fi connectivity. In addition, with the latest version of the Data Dashboard for LabVIEW, engineers at ITM can now build a custom user interface and directly interact with and control their vibration logging system on an iPad.
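To make the streaming figure concrete, the short Python sketch below relates channel count, sample rate, and sample width to the sustained write rate a logger must support. Only the 28 channels and the 6 MB/s figure come from the example above. The 4-byte sample width, the 51.2 kS/s sample rate, and the function names are illustrative assumptions, not details of ITM's system.

```python
def required_throughput(channels: int, sample_rate_hz: float, bytes_per_sample: int) -> float:
    """Sustained write rate in bytes per second for simultaneously sampled channels."""
    return channels * sample_rate_hz * bytes_per_sample


def max_sample_rate(budget_bytes_per_s: float, channels: int, bytes_per_sample: int) -> float:
    """Highest per-channel sample rate (Hz) that fits within a streaming budget."""
    return budget_bytes_per_s / (channels * bytes_per_sample)


if __name__ == "__main__":
    CHANNELS = 28               # from the ITM example
    BUDGET_BYTES_PER_S = 6e6    # 6 MB/s from the ITM example (decimal megabytes assumed)
    BYTES_PER_SAMPLE = 4        # assumption: each sample stored in a 32-bit word
    SAMPLE_RATE_HZ = 51_200     # assumption: a hypothetical accelerometer sample rate

    needed = required_throughput(CHANNELS, SAMPLE_RATE_HZ, BYTES_PER_SAMPLE)
    ceiling = max_sample_rate(BUDGET_BYTES_PER_S, CHANNELS, BYTES_PER_SAMPLE)
    print(f"Required throughput: {needed / 1e6:.2f} MB/s")
    print(f"Max per-channel rate within budget: {ceiling:.0f} S/s")
```

Under these assumed values the required rate is about 5.7 MB/s, which is in the same range as the streaming rate quoted for the system. Changing the sample width or rate shows how quickly storage bandwidth becomes the limiting factor as channels are added.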

Another industry pushing the limits of traditional data-logging systems is the utility industry. The electrical grid is changing greatly, and the utility industry is investing heavily to make it smarter. One way the grid is getting smarter is through the integration of more measurement systems and devices. One such device is a power quality analyzer. A typical power quality analyzer acquires and analyzes the three phase voltages of the power network to calculate voltage quality as defined in international standards. Voltage quality is described by frequency, voltage level variation, flicker, three-phase system unbalance, harmonic spectra, total harmonic distortion, and signaling voltage levels.
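As an illustration of one of these quantities, the sketch below estimates total harmonic distortion (THD) for a single sampled voltage: the root-sum-square of the harmonic amplitudes divided by the fundamental amplitude. It is a minimal, standard-library-only example and not the method used by any particular analyzer. The function names, the 50 Hz fundamental, the 10 kHz sample rate, the 40-harmonic limit, and the synthetic test signal are all assumptions, and the code assumes the record holds a whole number of fundamental cycles so that no windowing is needed.

```python
import math


def harmonic_amplitude(samples, sample_rate_hz, freq_hz):
    """Amplitude of one frequency component, found by correlating with sine and cosine."""
    n = len(samples)
    step = 2.0 * math.pi * freq_hz / sample_rate_hz
    re = sum(s * math.cos(step * i) for i, s in enumerate(samples))
    im = sum(s * math.sin(step * i) for i, s in enumerate(samples))
    return 2.0 * math.hypot(re, im) / n


def total_harmonic_distortion(samples, sample_rate_hz, fundamental_hz, max_harmonic=40):
    """THD as a ratio: RSS of harmonics 2..max_harmonic over the fundamental amplitude."""
    fundamental = harmonic_amplitude(samples, sample_rate_hz, fundamental_hz)
    harmonics = (
        harmonic_amplitude(samples, sample_rate_hz, k * fundamental_hz)
        for k in range(2, max_harmonic + 1)
    )
    return math.sqrt(sum(a * a for a in harmonics)) / fundamental


def synthetic_voltage(sample_rate_hz=10_000, fundamental_hz=50.0, cycles=10):
    """Hypothetical test signal: fundamental plus a 10% 5th and a 5% 7th harmonic."""
    n = int(sample_rate_hz * cycles / fundamental_hz)
    w = 2.0 * math.pi * fundamental_hz / sample_rate_hz
    return [
        math.sin(w * i) + 0.10 * math.sin(5 * w * i) + 0.05 * math.sin(7 * w * i)
        for i in range(n)
    ]


if __name__ == "__main__":
    signal = synthetic_voltage()
    thd = total_harmonic_distortion(signal, 10_000, 50.0)
    print(f"THD = {100 * thd:.2f} %")
```

A production analyzer would typically use an FFT and follow the measurement windows and harmonic grouping defined in the applicable standards. The direct correlation used here trades speed for readability.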

Given the amount of analysis and the high-speed measurements this application requires, a traditional logging system would not provide the processing power needed. Engineers at ELCOM in India used LabVIEW and NI CompactRIO, an embedded acquisition system featuring an embedded processor and an FPGA, to create a flexible, high-performance power quality analyzer. Within this system, the processor handled tasks such as advanced floating-point processing, high-speed streaming to disk, and network connectivity. The FPGA within CompactRIO served as an additional processing unit, performing custom I/O timing and synchronization and any high-speed digital processing the application needed.

Smarter future logging systems

As the world we live in becomes more complex, the systems monitoring and logging electrical and physical data from future machines, infrastructure, the grid, and vehicles need to keep up. The silicon and IP vendors seem to be doing their job by improving the performance, power, and cost of processing components. Now it's up to the data acquisition companies to follow suit with higher-performance logging systems that are intuitive, flexible, and smart enough to capture any and all types of data. With smarter data-logging systems, we should be able to get more intelligent data from any source and improve the performance, quality, and maintenance of the systems being built.
