At the GPU Technology Conference in San Jose, NVIDIA introduced the Tesla P100, a graphics processor targeting deep learning. Built on the NVIDIA Pascal architecture in a 16 nm FinFET process, it delivers 10.6 Tflops of 32-bit floating-point performance. The GPU die holds 15.3 billion transistors (NVIDIA quoted about 150 billion for the full package, including its stacked memory). With a die area of roughly 600 mm², it is the largest FinFET chip ever made.
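The 10.6 Tflops figure is a theoretical peak: FP32 cores × clock rate × operations per core per cycle. The sketch below reproduces it. It assumes the SXM2 module's published 3,584 FP32 CUDA cores and 1,480 MHz boost clock (neither appears in this article), counts a fused multiply-add as two operations, and treats half precision as running at twice the FP32 rate.

```python
# Back-of-envelope peak throughput for one Tesla P100 (SXM2 module).
# ASSUMED published specs, not taken from the article above:
CUDA_CORES_FP32 = 3584      # 56 SMs x 64 FP32 cores each
BOOST_CLOCK_HZ = 1.480e9    # 1,480 MHz boost clock
OPS_PER_FMA = 2             # one fused multiply-add = a multiply plus an add


def peak_tflops(cores: int, clock_hz: float, ops_per_cycle: int) -> float:
    """Theoretical peak in Tflops: cores x clock x operations per core per cycle."""
    return cores * clock_hz * ops_per_cycle / 1e12


fp32 = peak_tflops(CUDA_CORES_FP32, BOOST_CLOCK_HZ, OPS_PER_FMA)
# Assumption: each FP32 lane processes two packed FP16 values, doubling the rate.
fp16 = 2 * fp32

print(f"FP32 peak: {fp32:.1f} Tflops")
print(f"FP16 peak: {fp16:.1f} Tflops")
```

Real workloads rarely sustain these peaks. Memory bandwidth and occupancy usually set the practical ceiling.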

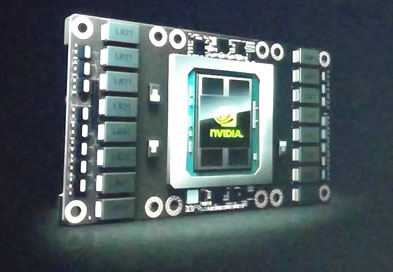

The Tesla P100 GPU

NVIDIA also introduced the DGX-1 Deep Learning Supercomputer, a single-box system built with eight Tesla P100 GPUs. The GPUs are linked by NVIDIA's new NVLink high-speed interconnect and use Chip on Wafer on Substrate (CoWoS) packaging with HBM2 memory. Each P100 is said to deliver more than 21 Tflops of peak half-precision (FP16) performance for deep learning, or about 170 Tflops across the system. NVIDIA says the DGX-1 trains networks 12x faster than its four-way Maxwell-based solution from only a year earlier.

The system includes a complete suite of optimized deep learning software that lets researchers and data scientists train deep neural networks quickly and easily. It will sell for $129,000. Early units have already been delivered, and general availability is planned for this summer.
