BY LARRY CHISVIN

Vice President of Strategic Initiatives

PLX Technology, www.plxtech.com

PCI Express (PCIe) has established itself as the universal interconnection pathway between electronic subsystems. If you peel back the cover from almost any storage, server, communication, embedded, or consumer box deployed in the past five years, you’ll find that PCIe is right there, guiding the high-speed signals between the components like a reliable sheepdog. Virtually every industrial design has at least one PCIe switching device as part of the mix. This is because PCIe is a modern, serial, point-to-point interconnect: every connection between components needs its own separate pathway, and PCIe switches provide those pathways.

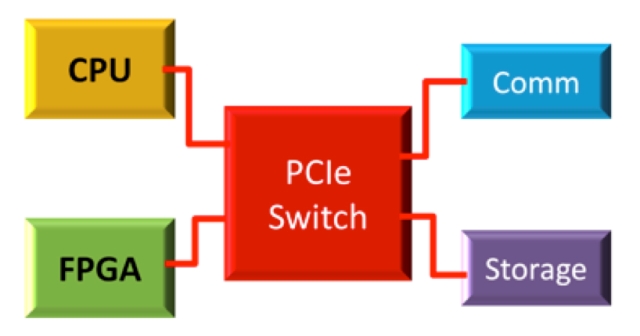

While it’s theoretically possible to build enough connections into the components themselves, this is rarely done, since it’s expensive and complicated to add switching to devices that have a range of expansion needs. It’s much easier, and less expensive overall, to add an appropriately sized dedicated switch to a system based on the specific need (see Fig. 1).

Fig. 1: Using PCIe switches to connect devices

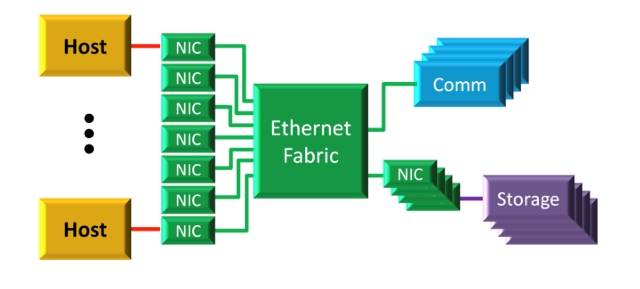

In certain markets, primarily the storage and server boxes used throughout today’s data centers, PCIe is well represented on the modules or blades that do all the work. But the interconnect that hooks up the blades to each other is usually some other technology, often 10G Ethernet. As seen in Fig. 2, this approach isn’t particularly efficient; the subsystem on the blade is made up almost entirely of PCIe. That subsystem needs to be bridged to something else, sent across a backplane or through a cable, and then translated back to PCIe for use in a similar blade.

Fig. 2: An Ethernet-based fabric complicates interconnection.

This counterintuitive approach is primarily historical, rather than practical. Ethernet has become the most popular way to connect servers and storage boxes together, and it was convenient to treat the blades as separate local area networks. While robust and flexible, this approach is also slow, expensive and power-hungry.

Now, however, there is a better way.

With some standards-based extensions to PCIe, it’s a straightforward task to eliminate bridging and just connect up the blades in their more natural form. A new generation of switching devices from vendors such as PLX Technology is enabling PCIe to be used in this way. For example, ExpressFabric by PLX does just that; with it, a data center system designer can create low-latency, high-performance solutions.

One reason Ethernet has maintained its dominance, even through radical changes in the underlying hardware, is that it has continually offered a way to migrate from one generation to the next. The software — always the key ingredient in any migration — has remained compatible for a relatively smooth upgrade. Furthermore, the end user has been able to make use of existing hardware and add the new, faster (though usually more expensive) devices as necessary.

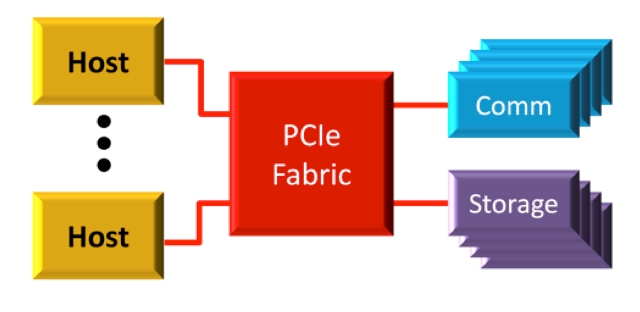

A system using a PCIe-based fabric, such as that illustrated in Fig. 3, offers this same advantage, with a few additional bonuses not available with Ethernet or, for that matter, any other interconnect. Ethernet-based applications will run on the new platform without change, except that they will operate at lower power and reduced cost. Also, there’s an opportunity for substantial performance improvement through the use of RDMA (discussed later) on a PCIe-fabric-based system. Again, this is achieved without adding the expensive, complicated, and higher-power components this function would require in an Ethernet-based system.

Fig. 3: A PCIe-based fabric greatly simplifies interconnection.

With software compatibility essentially a non-issue, hardware compatibility is even more straightforward. While Ethernet is the dominant connection between servers, many devices that form the data center don’t even have an Ethernet connection — at least not of the 10G or higher variety. But virtually all those devices connect up to PCIe. In fact, an Ethernet NIC has Ethernet on one side — and PCIe on the other! So it is easier to create a blade-based backplane using PCIe than it is using Ethernet.

Creating a fabric requires several functions beyond the fundamental ability to connect up the components. The first is the need to share devices among a variety of hosts (shared I/O). The most common way to share devices is through a standard called Single Root I/O Virtualization, or SR-IOV. This enables virtual machines (VMs) within a single host to share devices, each VM believing that it has its own device. A PCIe-based fabric can extend this capability across multiple hosts, so that VMs running on different hosts can share the same devices on the fabric. This allows the reuse of both the SR-IOV devices and their software drivers, but extends their capability to the entire system.
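The sharing idea can be sketched as simple bookkeeping. The Python model below is only an illustration, assuming a device that exposes a fixed number of virtual functions (the SR-IOV term for the per-VM device instances); the class name `SharedDevice`, its methods, and the host and VM labels are hypothetical and do not correspond to any real driver or ExpressFabric API.

```python
from dataclasses import dataclass, field


@dataclass
class SharedDevice:
    """Toy model of one SR-IOV device shared across a fabric (hypothetical)."""
    name: str
    num_vfs: int  # number of virtual functions the device exposes (assumed)
    assignments: dict = field(default_factory=dict)  # VF index -> (host, vm)

    def assign(self, host: str, vm: str) -> int:
        """Give the next free virtual function to a VM on any host."""
        for vf in range(self.num_vfs):
            if vf not in self.assignments:
                self.assignments[vf] = (host, vm)
                return vf
        raise RuntimeError(f"{self.name}: no free virtual functions")

    def hosts(self) -> set:
        """Return the set of hosts that currently share this device."""
        return {host for host, _vm in self.assignments.values()}


# Within a single host, every VM must live on that one host.
# With a fabric, VMs on different hosts can draw from the same pool.
nic = SharedDevice(name="nic0", num_vfs=4)
nic.assign("hostA", "vm1")
nic.assign("hostB", "vm1")
print(sorted(nic.hosts()))
```

Each VM still sees what looks like its own device; the only thing the model changes between the single-host and fabric cases is which hosts are allowed to request a virtual function.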

Another major capability that a fabric has to offer is communication between hosts. There are two typical mechanisms for accomplishing this in current systems: direct memory access (DMA) and remote DMA (RDMA). DMA is usually offered in Ethernet-based systems, and it is a universal and reliable way to allow hosts to interact. The biggest drawback to a DMA system is that it entails copying the data quite a few times within the hosts, which, while providing a measure of security, makes the system very slow and inefficient. RDMA delivers a much faster way to do this, sending data from the application memory on one host to the application memory on another. When this type of transfer is necessary, the system normally doesn’t even use Ethernet.
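To make the copy overhead concrete, the following Python sketch counts how many times a payload is duplicated on its way from one application to another. It is a toy model, not a measurement: the buffer names and the number of stages in each path are assumptions chosen for illustration, and real systems vary.

```python
def copy_through(payload: bytes, stages: list) -> tuple:
    """Copy the payload into each named buffer in turn.

    Returns the data as it arrives in the last buffer and the number
    of copies that were made along the way.
    """
    data = payload
    copies = 0
    for _stage in stages:
        data = bytes(bytearray(data))  # force a real copy into the next buffer
        copies += 1
    return data, copies


# Assumed buffered path: the data is staged in intermediate buffers
# on both the sending and the receiving host.
BUFFERED_PATH = [
    "sender kernel buffer",
    "sender adapter buffer",
    "receiver kernel buffer",
    "receiver application memory",
]

# Assumed RDMA-style path: the data lands directly in the
# application memory of the remote host.
RDMA_PATH = ["receiver application memory"]

payload = b"example block of application data"
_, buffered_copies = copy_through(payload, BUFFERED_PATH)
_, rdma_copies = copy_through(payload, RDMA_PATH)
print(f"buffered path copies: {buffered_copies}, RDMA-style copies: {rdma_copies}")
```

Both paths deliver identical data; what differs is the number of intermediate copies, and that difference is where the latency and host overhead described above come from.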

PCIe-based fabrics such as ExpressFabric allow both DMA and RDMA to be used, without needing bridging devices, and using the same application software that runs on Ethernet or InfiniBand platforms.

Expect to see new developments in fabrics based on PCIe. This emerging approach to a more efficient, highly reliable interconnect will free data centers from the legacy solutions that exact cost and power tolls, and will allow system engineers (and their sheepdogs) to sleep well at night!
