By Patrick Mannion, contributing editor

A revival in the AMD versus Intel rivalry has been good news for data center or workstation designers looking for low-cost, low-power, high-performance processors. However, that does not overshadow a massive shift in emphasis toward dedicated or heterogeneous artificial intelligence processing approaches and the traction of the open-source RISC-V architecture. The latter comes in the face of widespread skepticism.

Designers have good reason to be skeptical of a new architecture such as RISC-V: they need to carefully manage risk, ensure long-term support and second sourcing, and have a reliable and relatively intuitive tool workflow. All of these questions surrounded the emergence of the open-source RISC-V architecture, along with the reality of strong incumbents, including ARM, Intel, and AMD. Customer wins looked unlikely. As one observer put it, “It’s a beautiful concept and architecture, but the doors are already shut for the foreseeable future.”

That observer was Loring Wirbel, an independent consultant and analyst, who added that it may be the current hobbyists or young engineers using Arduino that bring RISC-V into the mainstream, but this will take time.

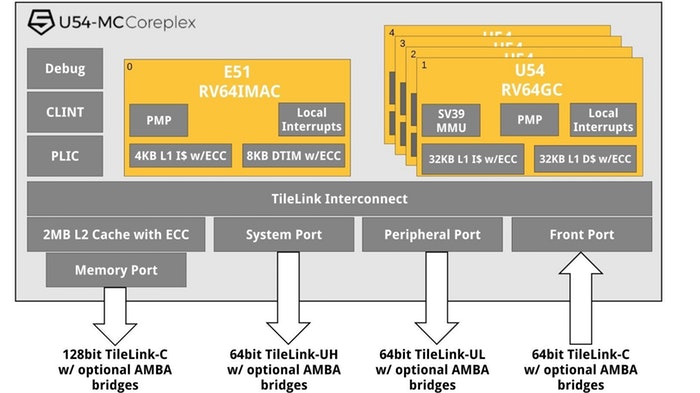

That said, SiFive, the main proponent of RISC-V IP, is making progress. It recently announced the availability of its U54-MC Coreplex IP, the first RISC-V-based, 64-bit, quad-core, real-time application processor for Linux (Fig. 1). Electronic Products took the opportunity to put these questions directly to Jack Kang, VP of product and business development at SiFive.

Fig. 1: SiFive’s U54-MC Coreplex IP is the first RISC-V-based, 64-bit, quad core, real-time application processor for Linux.

Regarding tool support, Kang said that there are many open-source tools already available for RISC-V. For example, Binutils and GCC are stable and mainlined, while LLVM and Linux are in the process of being upstreamed. “Glibc and newlib have already been upstreamed and will be in the next release, while GDB and OpenOCD support for RISC-V also exist,” he said.

SiFive itself offers Freedom Studio, an Eclipse-based integrated development environment (IDE), which integrates all of these tools into an easy-to-use environment.

On the commercial side, said Kang, vendors such as UltraSoC and Segger have announced support for RISC-V. “Multiple other leading commercial vendors for debug and trace will be announcing support later in 2017.”

In the face of incumbents Intel and AMD, Kang said that the opportunity for RISC-V lies in new areas of compute and demand, including edge computing, the IoT, and machine learning.

“These are markets that are not well-served by the monolithic companies and singular-style designs that the semiconductor industry tries to offer,” he said. “For SiFive and the RISC-V ecosystem, the ability to customize the chip and the core are key advantages.” The applications that Kang has in mind for SiFive’s technology will need thousands of different types of designs. “That is simply not something that traditional vendors have the ability to offer.”

As for RISC-V needing time for its users to mature and migrate from Arduino-type hobbyist applications, Kang was dismissive. “This sounds like the criticism that the commercial industry lobbied on open-source software when that concept first came out,” he said. “To think that innovation can exist only in the hands of multibillion-dollar corporations is a mistake.” It’s worth noting that Microsemi is SiFive’s other announced customer, along with Arduino.

Enough semiconductor startups have tried and failed to make it clear that starting a hardware company or building your own chip is far more difficult and expensive than starting a software company.

“SiFive is out to change that by dramatically lowering the barrier to custom silicon,” said Kang. “Once we do so, we will see plenty of new ideas and innovations.”

AI and deep learning are the new hardware frontiers

SiFive’s emphasis on the IoT, edge computing, and machine learning is in line with the industry-wide shift toward finding the right processing mix for IoT-based, fog-computing-type analysis from the edge to the cloud, as well as for artificial intelligence (AI). While the actual development of AI is very much a function of programming, the efficiency and speed with which data analysis and decision-making are performed depend heavily on the underlying hardware.

With that in mind, every vendor is looking for the right ingredients to complement its own silicon. Intel has already bought Altera to add the dataplane processing speed of FPGAs to its CPUs. It also bought Nervana for its deep-learning computational hardware engine. Nvidia has moved from being a GPU vendor to being an AI, machine-learning, and deep-learning solutions provider. As it does so, it is also partnering with others that have complementary silicon to optimize for efficiency and speed.

But what is that right mix? Deddy Lavid, chief technology officer at Presenso, a Haifa, Israel-based developer of machine-learning software, took a stab at answering that. He said that the required processors and processing architectures to meet the needs of deep machine learning are:

- Several parallel high-speed pre-processing units (for data feeding and crunching)

- Several parallel processing units (for each neuron-per-hidden-layer calculation)

- Several parallel, very large, and fast registers (for fast storing and extracting of data)

- High-speed network bandwidth

- CPUs that are at least 10 times less power-hungry

Lavid noted that such AI-specific processors are under intensive development from various companies, with Nvidia dominating this market with its customized GPUs.
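To see why Lavid's list calls for parallel processing units for each neuron-per-hidden-layer calculation, consider the minimal sketch below. It is an illustration only, not Presenso's or any vendor's implementation; the function names, the sigmoid activation, and the weights are all assumptions chosen for the example. The point is that every neuron in a layer reads the same inputs but uses its own weights, so no neuron has to wait for another.

```python
import math

def neuron_output(weights, bias, inputs):
    """One neuron: weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def hidden_layer(weight_rows, biases, inputs):
    """Evaluate every neuron in one hidden layer.

    Each call to neuron_output depends only on the shared inputs and that
    neuron's own weights, so the calls are independent of one another.
    Here they run one after another; dedicated hardware could run them
    side by side.
    """
    return [neuron_output(w, b, inputs) for w, b in zip(weight_rows, biases)]

# Hypothetical example values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weight_rows = [
    [0.0, 0.0, 0.0],    # neuron 0
    [1.0, 1.0, 0.25],   # neuron 1
]
biases = [0.0, 0.0]

outputs = hidden_layer(weight_rows, biases, inputs)
print(outputs)
```

Because the per-neuron work is independent, the number of neurons that can be evaluated at once is limited by how many processing units are available and how quickly the shared inputs and weights can be fetched, which is where the list's large, fast registers and high bandwidth come in.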

CPU horsepower not the gating factor anymore

This effort to find the right mix of processing elements for AI and deep learning is a specific example of a broader struggle to find the right mix of elements in a post-horsepower era, when processing speed and power consumption are important but aren’t the deciding factors. In the data center, for example, scalability, memory bandwidth, I/O, and security are major considerations as raw processing for big data mixes with virtualization.

This was highlighted when the AMD-versus-Intel war began anew earlier in the year with AMD’s announcement in June of its Epyc 7000 system-on-chip (SoC) based on its Zen x86 cores (Fig. 2). The SoC comes with up to 32 cores, 64 threads, 8 memory channels with up to 2 terabytes of memory per socket, and 128 PCIe Gen 3 lanes. It also has hardware-embedded x86 security. It was introduced at a price ranging from $400 to $4,000.

Fig. 2: AMD’s Epyc SoC for the data center has up to 32 x86 Zen cores and 128 PCIe Gen 3 lanes.

To show the SoC’s memory controller performance, AMD compared Epyc directly to Intel’s Broadwell, using a memory-bound high-performance-computing (HPC) application. This serves as a proxy for applications such as virtual reality and 3D processing, in which data is moved back and forth from main memory into a local cache and back to main memory again.

It’s not surprising that AMD’s Epyc comes out on top in its own comparison, with a 78% improvement over Intel’s Broadwell, but the over-arching point is valid: CPU horsepower can be choked by memory bandwidth. The two need to be in the right ratio for the application. This applies to processor-to-processor communications, too, which is why AMD replaced PCIe with its own Infinity Fabric for faster GPU communication.
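The idea that compute can be choked by memory bandwidth can be made concrete with the roofline model, a common back-of-the-envelope estimate. It is not the benchmark AMD ran. In the sketch below, the function name and all of the numbers are hypothetical and do not describe Epyc, Broadwell, or any real part. Attainable throughput is taken as the smaller of the peak compute rate and the memory bandwidth multiplied by the work done per byte moved.

```python
def attainable_gflops(peak_gflops, mem_bandwidth_gbs, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    peak_gflops       -- peak compute rate in GFLOP/s
    mem_bandwidth_gbs -- sustained memory bandwidth in GB/s
    flops_per_byte    -- arithmetic intensity of the application
    """
    return min(peak_gflops, mem_bandwidth_gbs * flops_per_byte)

# Hypothetical machine, for illustration only.
PEAK_GFLOPS = 1000.0
BANDWIDTH_GBS = 100.0

# A memory-bound workload does little work per byte fetched.
memory_bound = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, 0.5)

# A compute-bound workload reuses each byte many times from cache.
compute_bound = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, 20.0)

print(memory_bound, compute_bound)
```

With these assumed figures, the memory-bound case reaches only 50 GFLOP/s of the 1,000 GFLOP/s peak, so adding cores would not help it, whereas adding memory channels would. The compute-bound case hits the 1,000 GFLOP/s ceiling and gains nothing from extra bandwidth.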

The Epyc-Broadwell comparison became moot when Intel leapfrogged AMD with its Skylake-SP (Scalable Processor) SoC a few weeks later. Intel gave it a 65% improvement over its Broadwell line and introduced it in 50 versions ranging in price from $400 to $9,000.

Notably, Skylake-SP tops out at 28 cores versus Epyc’s 32 and has only 48 PCIe Gen 3 lanes versus Epyc’s 128 lanes. However, designers will have to weigh other factors, too, including which part has the best mix of features for the application and the number of sockets available. Both straddle two sockets, though Epyc’s I/O makes it competitive (with Broadwell) as a single-socket solution.

Edge virtualization and fog computing

As distributed, fog-computing-type concepts take hold for IoT analytics, the same principles are being applied to virtualization, moving it out from the data center and closer to the edge.

This was part of the reasoning behind NXP’s release of the LX2160A SoC with 16 ARM Cortex-A72 cores, 100-Gbit/s Ethernet, and a single PCIe Gen 4 interconnect (Fig. 3). Following the principles of fog computing, which aim to reduce latency and overall power consumption while improving reliability, the SoC could be used to add a layer of both virtualization and analysis capability between the sensor and the cloud for IoT data analysis.

Fig. 3: NXP’s LX2160A SoC uses 16 ARM Cortex-A72 cores and comes with 100-Gbit/s Ethernet and a single PCIe Gen 4 interconnect.
