By Majeed Ahmad, contributing writer

Just as robotic designs are entering the commercial arena to serve the manufacturing, logistics, and service industries, it’s crucial to outline the key stumbling blocks that still hamper the wider adoption of robots.

Hardware and software in robot systems have improved dramatically, and their fast-moving design trajectory shows how much work is under way to make these machines more useful and intelligent in applications that include agriculture, warehousing, delivery and inspection services, and smart manufacturing.

Simply put, a robot takes input from sensors and cameras, locates itself, and starts perceiving its environment. Next, it recognizes nearby objects, predicts their motion, and then plans its own move while ensuring the mutual safety of itself and those objects. All of these actions entail a lot of processing operations and power consumption.
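The perceive, predict, and plan sequence described above can be sketched in a few lines of Python. This is only a minimal illustration under simplifying assumptions, not how any particular robot stack works: the `Obstacle` class, the constant-velocity prediction, and the `plan_step` function with its `safe_dist` parameter are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    """A nearby object with a position (m) and velocity (m/s). Hypothetical type."""
    x: float
    y: float
    vx: float
    vy: float


def predict(obstacle, dt):
    """Assume constant velocity and return the obstacle position after dt seconds."""
    return (obstacle.x + obstacle.vx * dt, obstacle.y + obstacle.vy * dt)


def plan_step(pose, goal, obstacles, dt=0.5, speed=1.0, safe_dist=1.0):
    """Step straight toward the goal, or stay put if the step would end
    closer than safe_dist to any obstacle's predicted position."""
    dx, dy = goal[0] - pose[0], goal[1] - pose[1]
    dist = (dx ** 2 + dy ** 2) ** 0.5
    if dist == 0:
        return pose  # already at the goal
    step = min(speed * dt, dist)
    nxt = (pose[0] + step * dx / dist, pose[1] + step * dy / dist)
    for ob in obstacles:
        px, py = predict(ob, dt)
        if ((nxt[0] - px) ** 2 + (nxt[1] - py) ** 2) ** 0.5 < safe_dist:
            return pose  # wait in place to preserve the safety margin
    return nxt


if __name__ == "__main__":
    oncoming = Obstacle(x=2.0, y=0.0, vx=-2.0, vy=0.0)
    print(plan_step((0.0, 0.0), (10.0, 0.0), []))          # free path: robot advances
    print(plan_step((0.0, 0.0), (10.0, 0.0), [oncoming]))  # oncoming object: robot waits
```

Even this toy loop has to run every control cycle for every tracked object, which hints at why the real versions of these steps are demanding in both computation and power.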

There are three primary consumers of power in robot systems: the motors and controllers that drive or steer robots, the sensing systems, and the processing platforms. A new breed of smarter and more power-efficient sensors is required to quickly and accurately ascertain the orientation and position of the robot body at lower cost and with lower energy consumption. It’s also worth mentioning that robots don’t move quickly, so they generally don’t require cutting-edge processors operating at multi-gigahertz speeds.

Here, at this technology crossroads, the requirements and design challenges in taking robots to mass deployment all lead to a key building block: the system-on-chip (SoC). It runs diverse sensing systems as well as powerful artificial intelligence (AI) algorithms to enable a new generation of commercial robots.

Call for new SoCs

A dozen algorithms are usually processed concurrently and in real time to run robotic operations that encompass odometry, path planning, vision, and perception. That calls for a new crop of SoCs that can take integration to a whole new level. These SoCs are required to address specialized applications such as sparse coding, path planning, and simultaneous localization and mapping (SLAM).
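Odometry, the first item on that list, is a good example of a small computation that has to run continuously alongside the heavier workloads. The sketch below assumes a differential-drive robot with wheel encoders, which the article does not specify; `update_pose` and its parameters are hypothetical names chosen for illustration.

```python
import math


def update_pose(x, y, theta, d_left, d_right, wheel_base):
    """Dead-reckoning update for an assumed differential-drive robot.

    d_left / d_right: distance each wheel travelled since the last update (m)
    wheel_base:       distance between the two wheels (m)
    Returns the new (x, y, theta), with theta wrapped to [-pi, pi).
    """
    d_center = (d_left + d_right) / 2.0       # distance travelled by the robot center
    d_theta = (d_right - d_left) / wheel_base  # change in heading (rad)

    # Advance along the average heading over the interval (midpoint approximation).
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)

    # Wrap the heading so it does not grow without bound.
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    # Drive straight for 1 m, then turn in place by a quarter turn.
    pose = update_pose(*pose, d_left=1.0, d_right=1.0, wheel_base=0.5)
    pose = update_pose(*pose, d_left=-math.pi / 8, d_right=math.pi / 8, wheel_base=0.5)
    print(pose)
```

Dead reckoning of this kind accumulates error over time, which is one reason it is typically combined with techniques such as SLAM rather than used alone.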

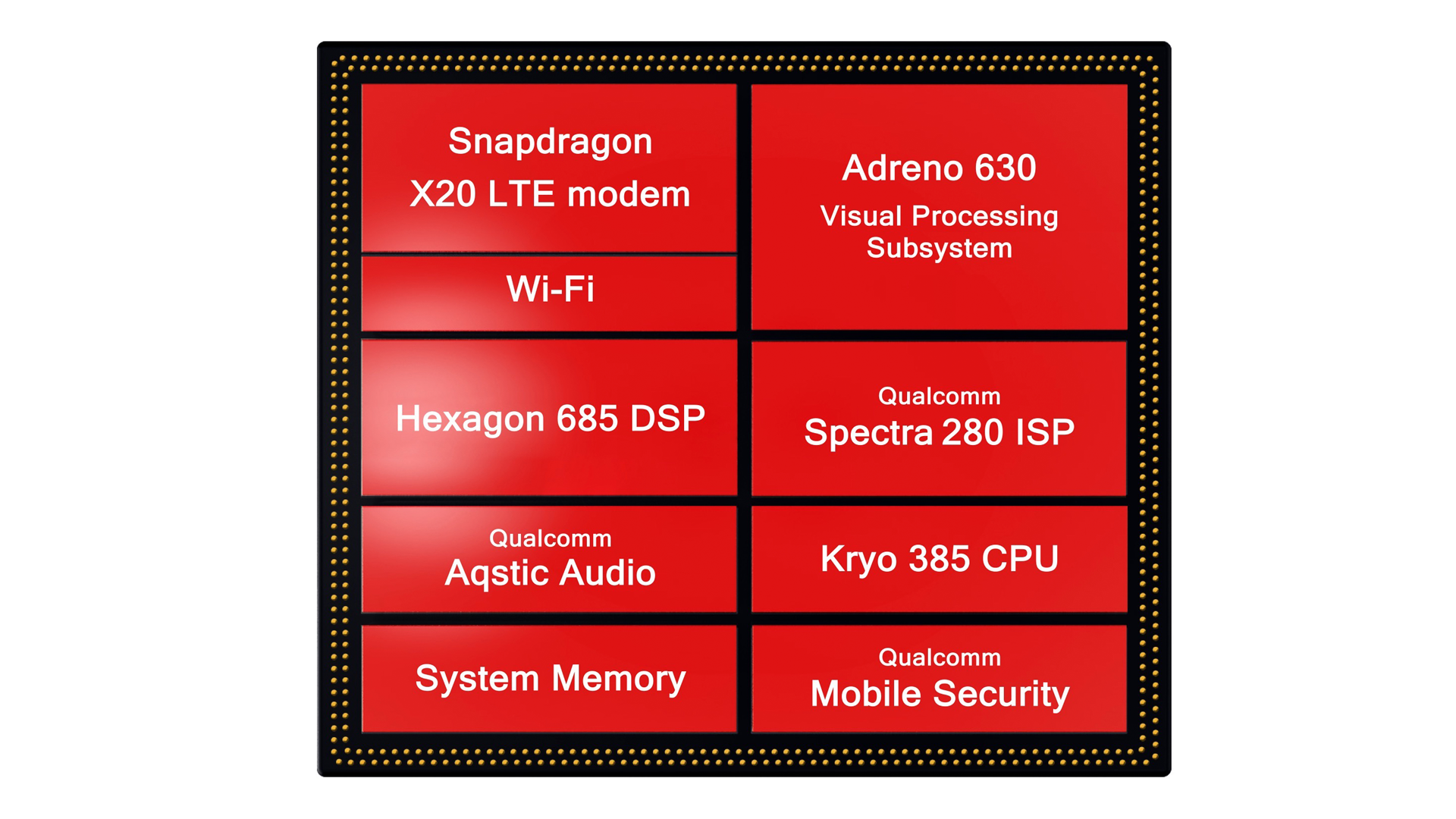

Qualcomm’s SDA/SDM845 chip (Fig. 1) highlights that new level of integration. Besides an octa-core Kryo CPU running at 2.8 GHz, it features a Hexagon 685 DSP for on-device AI processing and mobile-optimized computer vision for perception, navigation, and manipulation. A dual 14-bit Spectra 280 image signal processor (ISP) supports up to 32-megapixel (MP) cameras and up to 4K video capture at 60 frames per second.

Fig. 1: The architectural building blocks of the Qualcomm SDM845 chip for robotic designs (Image: Qualcomm)

The SoC platform also features a secure processing unit (SPU) to provide security capabilities such as secure boot, cryptographic accelerators, and a trusted execution environment (TEE). For connectivity, it supports Wi-Fi links while aiming to add 5G to enable low latency and high throughput for industrial robots.

Qualcomm has also introduced the Robotics RB3 Platform that is built around the SDA/SDM845 chip. It’s accompanied by the DragonBoard 845c development board and kit for prototyping robot designs.

The hyper-integration drive is also apparent in embedded modules like Nvidia’s Jetson Xavier (Fig. 2), aimed at delivery and logistics robots. The robotic compute platform comprises 9 billion transistors and delivers over 30 trillion operations per second (TOPS). It features six processors: an eight-core ARM64 CPU, a Volta Tensor Core GPU, dual NVIDIA deep-learning accelerators (NVDLAs), an image processor, a vision processor, and a video processor.

As the above design examples show, AI accelerators are a key building block in SoCs and modules for robotic designs. A closer look also demonstrates how AI is working in tandem with sensors and actuators to perform tasks such as perception, localization, mapping, and navigation.

AI integration: a work in progress

When it comes to increasing the quality and accuracy of a robot’s response to a given situation or task, the role of AI technology is becoming critical, especially in object-detection and -recognition operations.

AI takes robots beyond the automation provided by rigid programming models and allows them to interact with their surroundings more naturally and with greater precision. Here, AI components work hand in hand with the robot’s image-processing function to automate tasks previously performed by humans.

However, robotic designers must add more AI features without increasing the component size and power consumption. Besides power constraints in robotic designs, the commercial adoption of robots is also hampered by large device form factors.

Fig. 2: The 80 × 87-mm Jetson Xavier module claims workstation-level compute performance at 1/10 the size of a workstation processing device. (Image: Nvidia)

Another critical issue is support for a variety of AI frameworks now that industrial and service robots are starting to implement inference models for orientation detection and position estimation.

Smart sensors wanted

Robot systems like vacuum cleaners and hoverboards demand highly stable, high-performance sensors capable of operating in high-vibration environments. The high-precision processing of sensing elements poses additional challenges for designers. For instance, if they use software to control motion sensors like accelerometers and gyros, that increases both cost and software development time.

That’s why robot systems require more integrated sensing solutions. For the Qualcomm Robotics RB3 Platform mentioned earlier, InvenSense, now a TDK company, offers a number of sensors and microphones that feature low power, tight sensitivity matching, and high acoustic overload point (AOP).

The RB3 platform employs InvenSense’s six-axis inertial measurement units (IMUs), consisting of a three-axis gyroscope and a three-axis accelerometer, along with a capacitive barometric pressure sensor and multi-mode digital microphones. The IMUs rely on an external real-time clock input to keep their measurements precise, while the pressure sensor offers a relative accuracy of 10 cm in elevation difference.
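To put the 10-cm figure in perspective, the relationship between air pressure and height can be worked through with the standard isothermal barometric formula. The sketch below is a back-of-the-envelope illustration only; it assumes dry air at 15°C and sea-level pressure, and it says nothing about how the InvenSense sensor computes elevation internally. The function names are hypothetical.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol*K)
M = 0.0289644    # molar mass of dry air, kg/mol
G = 9.80665      # standard gravity, m/s^2


def elevation_change(p_ref_pa, p_now_pa, temp_k=288.15):
    """Height gained (m) when pressure falls from p_ref_pa to p_now_pa,
    assuming an isothermal column of dry air at temp_k."""
    return (R * temp_k) / (M * G) * math.log(p_ref_pa / p_now_pa)


def pressure_step_for(height_m, p_ref_pa=101325.0, temp_k=288.15):
    """Pressure drop (Pa) that corresponds to climbing height_m from p_ref_pa,
    under the same isothermal assumption."""
    return p_ref_pa * (1.0 - math.exp(-height_m * M * G / (R * temp_k)))


if __name__ == "__main__":
    dp = pressure_step_for(0.10)
    print(f"Pressure drop for a 10-cm climb: {dp:.2f} Pa")
    print(f"Recovered height: {elevation_change(101325.0, 101325.0 - dp):.3f} m")
```

Under these assumptions, a 10-cm change in elevation near sea level corresponds to a pressure change of roughly 1.2 Pa against a background of about 101,325 Pa, which gives a sense of the resolution such a sensor has to achieve.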

Besides motion sensors, robots are increasingly employing smart sensor and camera solutions equipped with SLAM-based navigation systems that enable the robots to meet challenging requirements in real-life environments. Moreover, these sensors and cameras are incorporating machine-learning capabilities to run 3D-vision systems in robots.

However, developers must ensure small form factors and low power usage while integrating these high-resolution sensors in their robot systems. Additionally, these sensors and cameras should feature easy integration with robot controllers over standard digital interfaces.

Like AI, smart sensors and cameras are critical ingredients in the robot design recipe, and like AI, they are still in their infancy. The year 2020 is expected to bring greater maturity and more viable commercial sensing solutions that can serve robot systems at a lower cost and with greater accuracy. That’s when robots will move beyond their transformational role in warehouses and factories and become a collaborative tool in larger consumer and industrial landscapes instead of working merely as standalone intelligent objects.
