Call it 21st-century sorcery or call it science: MIT researchers have broken reality down into a complex series of charts and equations, manipulating the underlying mathematics of light to remove reflections from digital photographs shot through glass. The best part is that they used a commercially available Microsoft Kinect.

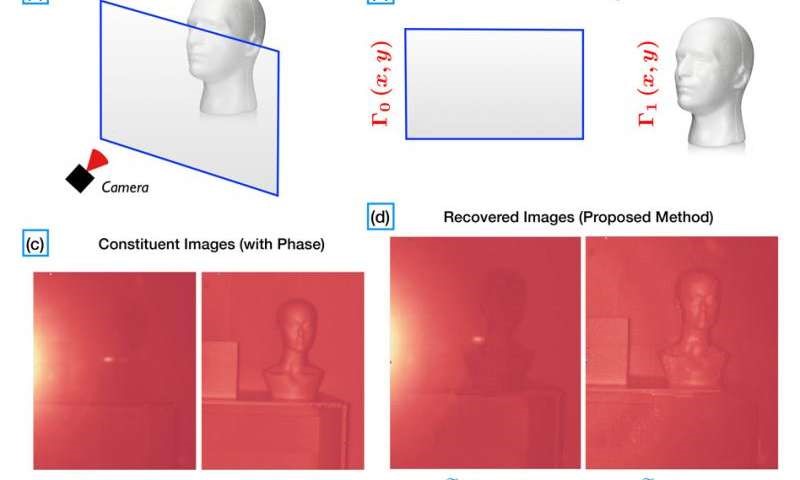

Computer scientists from the MIT Media Lab's Camera Culture group devised an approach to image separation that slices the incoming light into its individual reflections. The technique measures the arrival time of light reflected off nearby objects as well as light reflected off distant objects, then calculates the interval of time during which the reflection is present.

Typically, off-the-shelf light sensors are too slow to distinguish between multiple light sources. By applying the Fourier transform, a standard signal-processing technique, the team was able to decompose the returning light into its constituent frequencies and determine each arrival time by measuring differences in phase.

This works because each frequency in a Fourier decomposition is characterized by two properties: amplitude and phase. Amplitude is the height of the wave's crest and describes how much that frequency contributes to the overall composite signal, while phase describes the offset of the wave's troughs and crests. When light from different sources is superimposed, the components at nearby frequencies often fall out of phase alignment, and that misalignment signifies a difference in arrival time. Unfortunately, conventional light sensors can only measure intensity, not phase.
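To make the link between phase and arrival time concrete, here is a minimal Python sketch. It is not the MIT team's code: the sample rate, the 20 MHz modulation frequency, the 10 ns delay, and the helper names `amplitude_and_phase` and `delay_from_phase` are all assumptions chosen for illustration. It also assumes the waveform can be sampled directly, which, as noted above, conventional sensors cannot do.

```python
import numpy as np

def amplitude_and_phase(signal, freq_hz, sample_rate_hz):
    """Return (amplitude, phase in radians) of one frequency component."""
    n = len(signal)
    k = int(round(freq_hz * n / sample_rate_hz))  # FFT bin that holds freq_hz
    coeff = np.fft.rfft(signal)[k]
    return 2.0 * np.abs(coeff) / n, np.angle(coeff)

def delay_from_phase(phase_ref, phase_sig, freq_hz):
    """Delay implied by a phase lag; unambiguous only within one period."""
    lag = (phase_ref - phase_sig) % (2.0 * np.pi)
    return lag / (2.0 * np.pi * freq_hz)

# Illustrative values only (assumptions, not figures from the research).
sample_rate_hz = 1e9   # 1 GHz sampling
freq_hz = 20e6         # 20 MHz modulation, so one period lasts 50 ns
true_delay_s = 10e-9   # the reflection arrives 10 ns late
t = np.arange(1000) / sample_rate_hz  # exactly 20 full periods

emitted = np.cos(2 * np.pi * freq_hz * t)
returned = 0.5 * np.cos(2 * np.pi * freq_hz * (t - true_delay_s))

amp_ref, phase_ref = amplitude_and_phase(emitted, freq_hz, sample_rate_hz)
amp_sig, phase_sig = amplitude_and_phase(returned, freq_hz, sample_rate_hz)
estimated_delay_s = delay_from_phase(phase_ref, phase_sig, freq_hz)
print(amp_sig, estimated_delay_s)
```

The amplitude tells how strong the returning wave is, and the phase lag divided by the angular frequency gives the delay. Because phase wraps around every full cycle, a single frequency can only pin down delays shorter than one period.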

“You physically cannot make a camera that picks out multiple reflections,” says Ayush Bhandari, a PhD student in the MIT Media Lab and first author on the new paper. “That would mean that you take time slices so fast that [the camera] actually starts to operate at the speed of light, which is technically impossible. So what's the trick? We use the Fourier transform.”

Bhandari and his team reassembled the complete phase information by feeding a few targeted measurements into a specialized algorithm adapted from an X-ray crystallography technique known as phase retrieval. Teaming up with researchers at Microsoft, the group created a special camera that emits light at specific frequencies and gauges the intensity of the reflection. This information is then combined with data obtained from multiple reflectors positioned between the camera and the object being photographed, and passed through an algorithm that reconstructs the phase of the returning light while separating signals from multiple depths.

The number of light frequencies emitted by the camera depends on the number of reflectors; for example, two frequencies are required when removing reflections from a single glass pane separating the subject and the camera. In practice, the emitted frequencies are not pure, so an additional filter is needed to remove the noise.
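Why more reflectors call for more frequencies can be illustrated with a simplified forward model. In the Python sketch below, each reflection contributes one phasor (an amplitude and a delay), and the measurement at a given frequency is their sum. This is an assumption-laden illustration rather than the team's algorithm: it pretends the camera reads out amplitude and phase directly, whereas the real sensor records intensity only, and the scene values and the function names `measure` and `single_path_fit` are hypothetical.

```python
import numpy as np

def measure(freq_hz, amplitudes, delays_s):
    """Complex response at one frequency: one phasor per reflection, summed."""
    a = np.asarray(amplitudes, dtype=float)
    d = np.asarray(delays_s, dtype=float)
    return np.sum(a * np.exp(-2j * np.pi * freq_hz * d))

def single_path_fit(freq_hz, m):
    """Amplitude and delay of the ONE reflection that would explain m."""
    amp = np.abs(m)
    delay = (-np.angle(m) % (2.0 * np.pi)) / (2.0 * np.pi * freq_hz)
    return amp, delay

# Hypothetical scene: a glass pane at 5 ns and the subject at 12 ns.
amps, delays = [0.4, 1.0], [5e-9, 12e-9]
f1, f2 = 20e6, 35e6  # two illustrative modulation frequencies

# At f1 alone, a single fake reflection explains the data perfectly.
m1 = measure(f1, amps, delays)
fake_amp, fake_delay = single_path_fit(f1, m1)
residual_f1 = abs(measure(f1, [fake_amp], [fake_delay]) - m1)

# At f2, the same fake reflection no longer matches the true measurement.
m2 = measure(f2, amps, delays)
residual_f2 = abs(measure(f2, [fake_amp], [fake_delay]) - m2)
print(residual_f1, residual_f2)
```

With one frequency, the pane and the subject collapse into a single apparent reflection at an in-between depth. The second frequency exposes the mismatch, which is the extra information a separation algorithm needs to tell the two layers apart.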

Perfectly separating an object from its reflection took a full minute, as the camera combed through 45 frequencies.

Source: Techxplore
