By Brian Santo, contributing writer

The image of your face can now be reconstructed by performing a brain scan on anyone who has ever looked at you.

The capability has been demonstrated only in monkeys, but dollars to bananas your brain isn’t notably more complex than a monkey’s, so take note: there may be some interesting innovations in police sketches in the not-too-distant future.

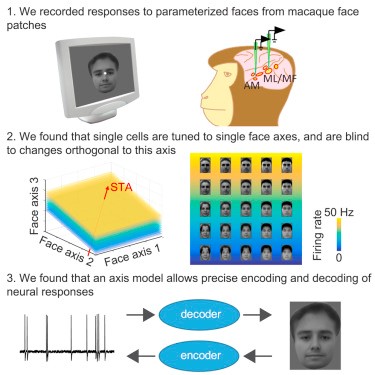

Scientists at Caltech have figured out how the brain encodes the images of faces, and they have used that knowledge to scan the brain of a macaque and render extraordinarily accurate recreations of faces that the macaque has seen. The result suggests that the way primate brains represent faces is far simpler than originally thought.

Meanwhile, some neural networks, and the artificial intelligence built on them, have become so sophisticated that many AI researchers are coming to believe that humans may no longer be able to understand what AIs are thinking. The Caltech research suggests that such understanding might be possible after all.

The lead researcher on the Caltech project is Doris Tsao. By 2013, Tsao and her team had isolated a set of six regions of brain cells in the inferior temporal cortex (the “IT cortex”) used in facial recognition. They called these regions face patches. Their initial hypothesis was that specific sets of cells were dedicated to storing the images of specific faces. For example, one set of X-number of cells held the image of your spouse; another set of cells held your boss’ dog; the third set was your Aunt Edith; and so on.

But further research suggested that this might not be the case.

Tsao and her team discovered that one specific set of cells responded only when the monkeys were shown images of hair, another set responded solely to irises, a third responded only to noses, and so on, with different sets correlating to various facial features. Tsao discussed these findings during a 2013 TEDxCaltech talk. At the time, she said that the next step was to try to map the responses.

The Caltech researchers went a step further than that: they not only mapped the responses but also learned how to interpret them, as they explain in a paper titled “The Code for Facial Identity in the Primate Brain,” published in the journal Cell.

Tsao and her team said that they discovered there is “an extraordinarily simple transformation between faces and responses of cells in face patches. By formatting faces as points in a high-dimensional linear space, we discovered that each face cell’s firing rate is proportional to the projection of an incoming face stimulus onto a single axis in this space, allowing a face cell ensemble to encode the location of any face in the space.” The paper discusses how they used the code to reconstruct facial images with astonishing accuracy. The authors add that their work “suggests that other objects could be encoded by analogous metric coordinate systems.”
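To make the idea of “projection onto a single axis” concrete, here is a minimal sketch in Python. It is a toy illustration and not the researchers’ method or data: the three-dimensional face space, the axis values, and the function names `encode` and `decode` are all assumptions made up for this example, and the axes are chosen to be orthonormal so that the face can be rebuilt with a simple weighted sum.

```python
# Toy illustration only: a 3-dimensional "face space" and three model cells.
# All numbers here are invented for the example, not taken from the paper.

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

# Each hypothetical cell has one preferred axis in face space.
# The axes are orthonormal (an assumption) so decoding is a simple sum.
CELL_AXES = [
    (0.6, 0.8, 0.0),
    (-0.8, 0.6, 0.0),
    (0.0, 0.0, 1.0),
]

def encode(face):
    """Model response of each cell: the projection of the face onto its axis."""
    return [dot(axis, face) for axis in CELL_AXES]

def decode(responses):
    """Rebuild face coordinates from the population response.

    Valid only because CELL_AXES is orthonormal in this toy setup.
    """
    dims = len(CELL_AXES[0])
    return [
        sum(r * axis[i] for r, axis in zip(responses, CELL_AXES))
        for i in range(dims)
    ]

face = (1.0, 2.0, -0.5)          # a made-up point in face space
responses = encode(face)         # one number per model cell
reconstructed = decode(responses)
print(responses)
print(reconstructed)
```

In this sketch no single cell “stores” the face; the face is recoverable only from the pattern of responses across all three cells. Real firing rates cannot be negative and real cells are noisy, so an actual analysis would need an offset, a gain, and a statistical fit, none of which are modeled here.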

The code for faces that’s used by the brain turns out to be closely analogous to how colors are coded for electronic displays, using red, green, and blue (RGB) light, Tsao explained in an audio interview accompanying the summary of her paper.

One way to represent colors is to assign a unique, unrelated code to each color. There’s a more efficient way to do it, however: Give each color a combination code that specifies levels of intensity for R, G, and B. In HTML, for example, hot pink is #FF69B4 (which translates to 255 red, 105 green, 180 blue) and rebeccapurple is #663399 (102 R, 51 G, 153 B).
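The hex arithmetic above can be checked with a few lines of Python. This is a small sketch; the helper names `hex_to_rgb` and `rgb_to_hex` are made up for this example and are not part of any HTML or browser API.

```python
def hex_to_rgb(code):
    """Split a six-digit hex color such as '#FF69B4' into (red, green, blue)."""
    digits = code.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hex digits, got %r" % code)
    # Each pair of hex digits is one channel, from 0 to 255.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))

def rgb_to_hex(red, green, blue):
    """Combine three 0-255 channel values into a '#RRGGBB' string."""
    return "#{:02X}{:02X}{:02X}".format(red, green, blue)

print(hex_to_rgb("#FF69B4"))      # (255, 105, 180)
print(rgb_to_hex(102, 51, 153))   # #663399
```

Three numbers per color are enough to cover every color the display can show, which is the efficiency the combination code buys over a separate entry per color.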

“The face cells turn out to do the same thing as RGB code, except they're doing it in a much higher dimensional space,” said Tsao. “It’s not three-dimensional anymore; it’s approximately 50-dimensional. The same set of cells are coding all the different faces, and it’s the pattern of activity across these cells that distinguish the different things. The same way that, with RGB, you have the same cells coding all the different colors, but it just depends on the relative activation of RGB.”

Tsao said that now that we understand how face coding works, Caltech plans to examine how the brain models faces. The process might be more complex when the brain has to track the three-dimensionality of a face as a person moves. The team also wants to explore how top-down models work: How does it work if you dream a face, or imagine a face? Another avenue that Caltech will explore is how other objects are coded.
