By Patrick Mannion, contributing editor

As the interface between the physical and digital worlds, sensors and transducers have moved from a technological backwater to the forefront of automotive safety, security, healthcare, Internet of Things (IoT), and artificial intelligence (AI) enablement. As such, they have undergone revolutionary changes in terms of fundamental physical and electrical capabilities such as size, power consumption, and sensitivity, while at the same time triggering new thinking in sensor integration, ranging from sensor fusion to AI-based generation of sensor processing algorithms applied in a fog-computing-like architecture.

The drivers behind many of these innovations are the miniaturization and low-power requirements of the IoT, including smart consumer devices, wearables and the industrial IoT (IIoT), advanced driver assistance systems (ADAS) and the excitement around autonomous vehicles, drones, security systems, robotics, and environmental monitoring, just to mention a few. All told, MarketsandMarkets expects the market for smart sensors, which include some level of signal processing and connectivity capability, to grow from what was $18.58 billion in 2015 to $57.77 billion by 2022, corresponding to a CAGR of 18.1%.

Many of the sensors and innovations behind them were on full display at the recent CES 2018 in Las Vegas, where attendees were treated to an intoxicating mixture of what is available now and what is yet to come. At the show, Bosch Sensortec (BST) went right to the heart of what designers need right now: a lower-power accelerometer for wearables and IoT, as well as a high-performance inertial measurement unit for drones and robotics.

Both of BST’s devices are based on microelectromechanical systems (MEMS), a technology that has come a long way since it was first adopted for airbags in the 1990s. Since then, it has gone through at least two more stages of development, moving quickly into consumer electronics, gaming, and smartphones, and the industry is now in the IoT stage. This is what Marcellino Gemelli, BST’s director of global business development, calls “the third wave.”

The two devices that Gemelli and his team were showing at CES targeted this third wave. The BMA400 accelerometer has the same 2.0 x 2.0-mm footprint as previous devices, but it consumes one-tenth of the energy. That saving is critical enough that the device received the CES 2018 Innovation Award.

According to Gemelli, the BMA400 team designed the device from the ground up with the goal of lowering power, and for this, it needed to think about the actual application. It quickly became clear that the 2-kHz sample rate used by typical accelerometers was not required for step counters and motion sensing for security systems. Realizing this, the team reduced the sample rate to 800 Hz. Along with other, more proprietary changes to the design of the MEMS sensor and associated ASIC, this means that the BMA400 now consumes only 1 µA when it sends an interrupt signal to the host microcontroller (MCU) in response to an event, compared to the 10 µA that would typically be used.

The other IoT MEMS device that BST announced at the show was the BMI088, an inertial measurement unit (IMU) designed for drones and other vibration-prone systems. It is interesting for its ability both to suppress the system’s vibrations and to filter out and reject them as noise. Measuring 3.0 x 4.5 mm (Fig. 1), the BMI088 has a measurement range of ±3 g to ±24 g for its accelerometer and ±125°/s to ±2,000°/s for its gyroscope.

Fig. 1: The BMI088 IMU reduces and filters out vibration in applications ranging from drones to washing machines.

According to Gemelli, the BMI088 design team first suppressed vibration by setting the MEMS sensor to the substrate with a different glue formulation, “but it was a more holistic approach than just that. If the sensor generates garbage, it’s no good to anyone.” With that in mind, the team also modified the sensor structure and the software running on the ASIC used to make sense of the signal.

Another key feature with regard to stability is the thermal coefficient of offset, or TCO, which is specified as 15 mdps/K. Other key features include a bias stability of less than 2°/h and a spectral noise of 230 μg/√Hz in the widest measurement range of ±24 g.
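To give a feel for what those figures mean in practice, the short Python sketch below converts them into a gyroscope offset shift over a temperature swing and an accelerometer RMS noise over a bandwidth. The 40-K temperature swing and 100-Hz bandwidth are illustrative assumptions, not BMI088 specifications, and the noise estimate assumes white noise through an ideal filter.

```python
import math


def gyro_offset_drift_dps(tco_mdps_per_k: float, delta_t_k: float) -> float:
    """Offset shift in degrees/second for a temperature change of delta_t_k kelvin.

    tco_mdps_per_k is the thermal coefficient of offset in mdps/K.
    """
    return tco_mdps_per_k * delta_t_k / 1000.0


def accel_rms_noise_mg(density_ug_per_rthz: float, bandwidth_hz: float) -> float:
    """RMS noise in mg, assuming white noise and an ideal (brick-wall) filter.

    density_ug_per_rthz is the spectral noise density in micro-g per root hertz.
    """
    return density_ug_per_rthz * math.sqrt(bandwidth_hz) / 1000.0


if __name__ == "__main__":
    # Assumed operating conditions, chosen only for illustration.
    drift = gyro_offset_drift_dps(tco_mdps_per_k=15.0, delta_t_k=40.0)
    noise = accel_rms_noise_mg(density_ug_per_rthz=230.0, bandwidth_hz=100.0)
    print(f"Gyro offset drift over 40 K: {drift:.2f} deg/s")
    print(f"Accel RMS noise in 100 Hz:   {noise:.2f} mg")
```

Under these assumptions, the quoted TCO works out to roughly 0.6°/s of offset shift, and the quoted noise density to about 2.3 mg RMS.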

MEMS speakers emerge

While sensors garner much of the attention as data-gathering tools for the IoT, transducers haven’t shared in the spotlight. However, USound is changing that through a partnership with STMicroelectronics that saw it announce the first advanced MEMS-based microspeaker, which it also demoed at CES (Fig. 2).

Fig. 2: The microspeakers from USound and STMicroelectronics replace bulky, lossy, electromechanical drivers with small, efficient, MEMS-based technology with negligible heat loss.

The microspeakers use STM’s thin-film piezoelectric transducer (PεTra) technology and USound’s patented concepts for speaker design. The devices eliminate the need for an electromechanical driver and its associated size and inefficiency, with much of that driver’s energy going to heat generation in the coils.

Instead, the microspeaker uses silicon MEMS and is expected to be the world’s thinnest while weighing half as much as conventional speakers. The design applications include earphones, over-the-ear headphones, and augmented reality and virtual reality (AR/VR) headgear. Along with small size comes lower power and negligible heat (see Fig. 2).

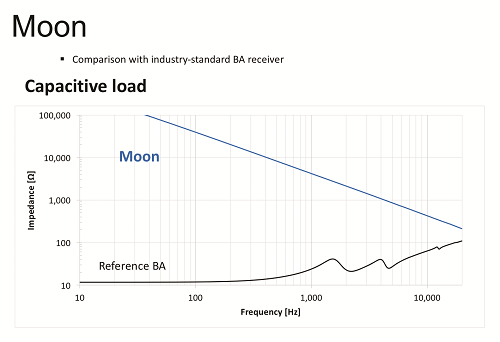

However, with small size comes the inevitable tradeoff in sound pressure level, so STMicroelectronics provided a graph of the MEMS speaker compared to a reference balanced armature speaker, showing a flat response out to 1 kHz (Fig. 3).

Fig. 3: USound’s microspeakers (Moon) use STMicroelectronics’ thin-film piezoelectric transducer (PεTra) technology to produce MEMS speakers with a flat SPL response out to 1 kHz. BA = balanced armature.

Chirp makes sensors out of transducers

There are many ways for designers to incorporate proximity and motion sensing into their IoT designs for either presence sensing or user interfaces or both. Ultrasound has been an option for many years, with sonar being a good example. However, Chirp Microsystems has taken soundwave-based sensing to the IoT with its CH-101 and CH-201 ultrasonic sensors.

Taking advantage of ultrasound’s wide 180° dispersion, the sensors use a speaker (transducer) to generate an ultrasound pulse and then measure the time that the echo takes to return to the microphone pickup (sensor) to determine the range. Along with wide dispersion, ultrasound has the advantages of low power (15 µW in wait mode), low cost, and range resolution down to 1 mm.
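The time-of-flight principle described above can be sketched in a few lines of Python. This is a generic pulse-echo calculation, not Chirp’s firmware; the function names are hypothetical, and the speed of sound is assumed to be 343 m/s (dry air at about 20°C).

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C


def range_from_echo(round_trip_s: float,
                    speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """One-way distance in meters from a round-trip echo time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the path length.
    return speed_m_s * round_trip_s / 2.0


def echo_time_for_range(distance_m: float,
                        speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Round-trip echo time in seconds for a target at distance_m meters."""
    return 2.0 * distance_m / speed_m_s


if __name__ == "__main__":
    print(f"2-ms echo -> {range_from_echo(0.002):.3f} m")
    # Timing resolution needed to tell apart targets 1 mm apart in range.
    print(f"1 mm of range -> {echo_time_for_range(0.001) * 1e6:.2f} us")
```

With the assumed speed of sound, a 1-mm change in range corresponds to a change of roughly 6 µs in round-trip time, which indicates the timing precision such a sensor has to achieve.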

Beyond sensing range and proximity, designers can develop gesture-based IoT device interfaces by taking advantage of Chirp’s patent-pending gesture classification library (GCL) that is based on machine learning and neural network algorithms. For gesture sensing, however, at least three sensors are required, along with Chirp’s IC with a trilateration algorithm to determine a hand’s location, direction, and velocity in 3D space.
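Chirp’s own trilateration algorithm is not described here, but the textbook version shows why three sensors are enough. The Python sketch below assumes that all three sensors lie in the device plane (z = 0), with sensor 1 at the origin, sensor 2 at (d, 0, 0), and sensor 3 at (i, j, 0), and that each reports its own range to the hand. The function names and geometry are illustrative assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def trilaterate(r1: float, r2: float, r3: float,
                d: float, i: float, j: float) -> Vec3:
    """Locate a point from its ranges to three coplanar sensors.

    Sensors sit at (0, 0, 0), (d, 0, 0), and (i, j, 0). Three ranges leave
    two mirror-image solutions; the one in front of the device (z >= 0)
    is returned.
    """
    x = (r1**2 - r2**2 + d**2) / (2.0 * d)
    y = (r1**2 - r3**2 + i**2 + j**2 - 2.0 * i * x) / (2.0 * j)
    z_squared = r1**2 - x**2 - y**2
    if z_squared < 0:
        raise ValueError("ranges are inconsistent with the sensor geometry")
    return (x, y, math.sqrt(z_squared))


def velocity(p_prev: Vec3, p_now: Vec3, dt_s: float) -> Vec3:
    """Finite-difference velocity between two successive position fixes."""
    return tuple((b - a) / dt_s for a, b in zip(p_prev, p_now))


if __name__ == "__main__":
    # Hypothetical layout: sensors 30 mm apart along the x and y axes.
    sensors = [(0.0, 0.0, 0.0), (0.03, 0.0, 0.0), (0.0, 0.03, 0.0)]
    hand = (0.01, 0.02, 0.05)
    ranges = [math.dist(hand, s) for s in sensors]
    print(trilaterate(*ranges, d=0.03, i=0.0, j=0.03))
```

Successive position fixes passed to `velocity` give the direction and speed of the hand, which is the kind of input that a gesture classifier would work from.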

The sensors combine gesture, presence, range, and motion sensing with low cost, low power, small size (3.5 x 3.5 mm, including the processor), and a simple I2C serial output, all operating from a 1.8-V supply.

Sensor fusion of LiDAR and cameras

There was a time, not so long ago, when it was assumed that light detection and ranging (LiDAR) alone was the way forward for autonomous vehicles. More recently, it has become clear that even though LiDAR has advanced significantly, safety requirements demand the use of multiple technologies for accurate and intelligent ambient sensing at high speed. With this in mind, AEye developed its intelligent detection and ranging (iDAR) technology.

A form of augmented LiDAR, iDAR overlays 2D collocated camera pixels onto the 3D voxels and then uses its proprietary software to analyze both on a per-frame basis. This overcomes the visual acuity limitations of LiDAR by using the camera overlay to detect features such as color and signs. It can then focus in on objects of interest (Fig. 4).
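AEye’s software is proprietary, so the following Python sketch only illustrates the general idea of pairing 3D points with 2D camera pixels, using the standard pinhole camera model. It assumes that the points are already expressed in the camera’s coordinate frame (plausible for collocated sensors), and the intrinsic parameters and function names are hypothetical.

```python
from typing import List, NamedTuple, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Color = Tuple[int, int, int]


class Intrinsics(NamedTuple):
    """Pinhole camera parameters in pixels (hypothetical values in the demo)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def project_point(point: Point3, cam: Intrinsics) -> Optional[Tuple[int, int]]:
    """Return the (column, row) pixel that a 3D point lands on, or None.

    None means the point is behind the camera or outside the image.
    """
    x, y, z = point
    if z <= 0:
        return None
    col = int(round(cam.fx * x / z + cam.cx))
    row = int(round(cam.fy * y / z + cam.cy))
    if 0 <= col < cam.width and 0 <= row < cam.height:
        return (col, row)
    return None


def colorize(points: Sequence[Point3],
             image: Sequence[Sequence[Color]],
             cam: Intrinsics) -> List[Tuple[Point3, Optional[Color]]]:
    """Attach the camera color (if any) to each 3D point."""
    result = []
    for p in points:
        pixel = project_point(p, cam)
        color = image[pixel[1]][pixel[0]] if pixel is not None else None
        result.append((p, color))
    return result


if __name__ == "__main__":
    cam = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
                     width=1280, height=720)
    print(project_point((1.0, 0.5, 10.0), cam))
```

Once each point carries a color, downstream software can use cues that range data alone cannot provide, such as the color of a sign or a signal.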

Fig. 4: AEye’s iDAR technology overlays 2D camera pixels on LiDAR’s 3D voxels so objects of particular interest can be identified and brought to the fore.

While AEye’s technology is data- and processing-intensive, it does allow the assignment of resources on a dynamic basis to customize data collection and analysis based on such parameters as speed and location.

Fusing sensors with AI and fog computing

According to Bosch Sensortec’s Gemelli, the next step is to rethink how sensors are designed and applied. Instead of designing a sensor and its associated algorithms from the ground up, Gemelli suggests that the time has come to start applying AI techniques to automatically generate new algorithms for sensor use based on analysis of the acquired data and the application over time. For instance, a suite of different sensors may be performing specific functions well, but with AI monitoring, it may turn out that those sensors could be used to track a parameter that they were never intended for, or they may also be used more efficiently.

The concept is gaining traction, said Gemelli. It also dovetails with fog computing architectures, where the goal is to minimize the amount of data that must pass between sensors and the cloud. Instead, by applying AI, more processing can be done at the sensor itself, over time, using the larger network and the cloud only as needed.
