By Majeed Ahmad, contributing writer

Sensor fusion, which enables data analysis through the combination of several sensors, is being rapidly adopted in mobile designs such as smartphones, wearables, and internet of things (IoT) devices. Sensor-fusion platforms are also breathing new life into emerging categories like augmented-reality (AR) and virtual-reality (VR) gadgets.

For starters, it’s important to understand the difference between sensor fusion and sensor hubs. Sensor fusion gathers data from all of the sensors and uses software algorithms to cross-reference these multiple sources of information into a coherent picture. For example, it combines data from an accelerometer and a gyroscope to deliver the motion context for a fitness-tracking wearable device.
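One common way to combine an accelerometer and a gyroscope is a complementary filter. The sketch below is illustrative only and is not taken from any product mentioned here: the function name, the sample layout, and the blend factor `alpha` are all assumptions. It trusts the gyroscope over short intervals and lets the accelerometer's gravity reading correct long-term drift.

```python
import math

def complementary_filter(samples, dt, alpha=0.98):
    """Estimate pitch (radians) from gyroscope and accelerometer samples.

    samples: iterable of (gyro_rate_rad_s, accel_x_g, accel_z_g) tuples.
    dt: sample period in seconds.
    alpha: hypothetical blend factor; closer to 1 trusts the gyroscope more.
    """
    pitch = 0.0
    history = []
    for gyro_rate, accel_x, accel_z in samples:
        # Short term: integrate the angular rate reported by the gyroscope.
        gyro_pitch = pitch + gyro_rate * dt
        # Long term: infer tilt from the direction of gravity.
        accel_pitch = math.atan2(accel_x, accel_z)
        # Blend the two so gyro drift is pulled back toward the accel angle.
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
        history.append(pitch)
    return history
```

With a stationary device tilted at 30 degrees and a small constant gyroscope bias, the estimate settles close to 30 degrees, whereas integrating the gyroscope alone would keep drifting.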

A sensor hub, on the other hand, takes the information furnished by sensor fusion and turns it into meaningful context. It’s usually a microcontroller (MCU) or microprocessor (MPU) that performs a specialized task like counting steps in a pedometer.
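To make the pedometer example concrete, here is a minimal sketch of step counting by threshold crossing on the acceleration magnitude. The threshold and the minimum gap between steps are hypothetical values chosen for illustration; production pedometers typically use more elaborate filtering.

```python
import math

def count_steps(accel_samples, threshold=1.2, min_gap=10):
    """Count steps from (x, y, z) accelerometer samples given in g.

    A step is registered when the acceleration magnitude rises through
    `threshold`, provided at least `min_gap` samples have passed since
    the previous step. Both parameters are illustrative assumptions.
    """
    steps = 0
    last_step = -min_gap
    above = False
    for i, (x, y, z) in enumerate(accel_samples):
        magnitude = math.sqrt(x * x + y * y + z * z)
        # Count only the upward crossing, not every sample above threshold.
        if magnitude > threshold and not above and i - last_step >= min_gap:
            steps += 1
            last_step = i
        above = magnitude > threshold
    return steps
```

A device at rest reads roughly 1 g and never crosses the threshold, so it registers no steps.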

Sensor-fusion technology, along with sensor hubs, has transformed smartphone, tablet, wearable, gaming, and IoT designs in recent years. That makes it a fundamental building block in optimizing sensor architectures that strive to craft new experiences for mobile users.

It all began when smartphones started to collate data from sensors like accelerometers and gyroscopes for apps catering to indoor navigation and activity monitoring in the post-iPhone era. Since then, sensor fusion has been trying to coordinate different sensor combinations on multiple mobile platforms and thus create new user experiences.

Smartphones and beyond

Almost all high-end Android phones now feature sensor fusion as a connectivity hub for accelerometers, gyroscopes, and other sensors. To begin with, smartphone manufacturers are trying to improve location tracking inside buildings, where the GPS signal is either unavailable or very poor.

Here, sensor fusion can help in tracking the exact location of the device by collating data from the accelerometer, gyroscope, and other sensors. The integration of a barometric pressure sensor, for instance, can enhance smartphone functionality with weather forecasting, altitude sensing, and other location-centric features.
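As an illustration of altitude sensing from a barometric pressure sensor, the sketch below uses a widely used standard-atmosphere approximation. The function name and default sea-level pressure are assumptions for this example; real devices typically calibrate the reference pressure against local weather data.

```python
def pressure_to_altitude(pressure_hpa, sea_level_hpa=1013.25):
    """Approximate altitude in meters from barometric pressure in hPa.

    Uses a standard-atmosphere approximation; `sea_level_hpa` is the
    assumed reference pressure at sea level.
    """
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))
```

Near sea level, a drop of 1 hPa corresponds to a gain of roughly 8 m, which is why pressure differences can help tell one floor of a building from another.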

Not surprisingly, therefore, most of the new smartphone designs are equipped with multiple sensors to obtain accurate indoor positioning results.

In the mobile realm, the next frontier for sensor-fusion technology is the growing domain of wearable devices. Here, sensor fusion is becoming a key differentiator among wearable devices spanning the fitness, health-care, and lifestyle markets.

Take, for instance, Qualcomm’s Snapdragon Wear 2500 chip especially designed for children’s smartwatches. It employs sensor-fusion technology to deliver location tracking instead of relying on a standalone GPS device. The chip features a built-in sensor hub and pre-integrated sensor algorithms, and it allows wearable device manufacturers to implement additional sensors.

Power consumption, a key issue in compact wearable designs, continues to drive the development of power-savvy algorithms. At the same time, however, sensor-fusion algorithms are playing a vital role in facilitating an unprecedented level of precision and accuracy in wearables serving active sports, clinical trials, and AR and VR gadgets.

Software complement

Algorithms, which maintain position, orientation, and situational awareness in sensor-fusion designs, play a vital role in setting the stage for sophisticated data analysis. For instance, algorithms for localization and tracking can generate inferences from incomplete data, introduce redundancy and fault tolerance, and extrapolate human-like contextual information.
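To show how a tracking algorithm can keep producing estimates from incomplete data, here is a minimal alpha-beta tracker sketch. It is a simple stand-in for the more sophisticated filters used in practice, and the gains `alpha` and `beta` are assumed values. When a measurement is missing (`None`), the tracker coasts on its predicted position.

```python
def alpha_beta_track(measurements, dt=1.0, alpha=0.5, beta=0.1):
    """Track position from measurements that may contain gaps (None).

    Returns one position estimate per time step. The gains are
    illustrative assumptions, not tuned values.
    """
    position, velocity = 0.0, 0.0
    estimates = []
    for z in measurements:
        # Predict: move forward using the current velocity estimate.
        position += velocity * dt
        if z is not None:
            # Update: correct position and velocity with the residual.
            residual = z - position
            position += alpha * residual
            velocity += (beta / dt) * residual
        # With no measurement, the prediction itself is the estimate.
        estimates.append(position)
    return estimates
```

For a target moving at constant speed, the tracker learns the velocity from the early measurements and then bridges a run of dropped samples with little error.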

It’s worth mentioning here that algorithm designers working on tracking and navigation systems usually create in-house tools that are difficult to maintain and reuse. So companies like MathWorks are offering toolsets that allow engineers to design, simulate, and analyze systems that fuse data from multiple sensors.

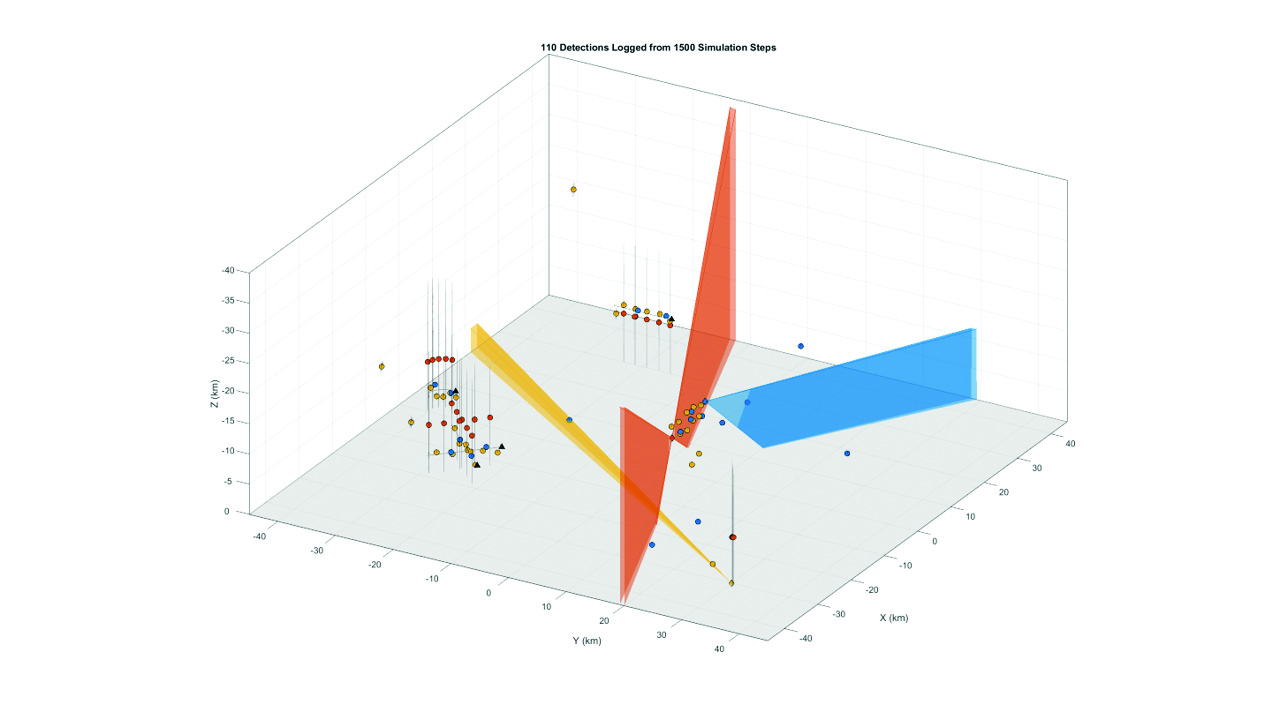

The Sensor Fusion and Tracking Toolbox from MathWorks enables engineers to explore multiple designs without having to write custom libraries. That facilitates data-association algorithms, which can evaluate fusion architectures using real and synthetic data. Then there are multi-object trackers, sensor-fusion filters, and motion and sensor models that complement the toolset.

Multi-platform radar detection generation capabilities in MathWorks’ Sensor Fusion and Tracking Toolbox. (Image: MathWorks)

The toolset facilitates synthetic data generation for active and passive sensors — including RF, acoustic, infrared, GPS, and inertial measurement unit (IMU) sensors — and it includes scenario- and trajectory-generation tools. It also extends MATLAB-based workflows to help engineers develop an accurate perception algorithm for sensor-fusion systems.

Sensor chip suppliers like InvenSense, a TDK company, also provide sensor-fusion algorithms and run-time calibration firmware. That, in turn, eliminates the need for discrete components and guarantees that calibration procedures complement the sensor-fusion algorithms to provide precise and absolute positioning.

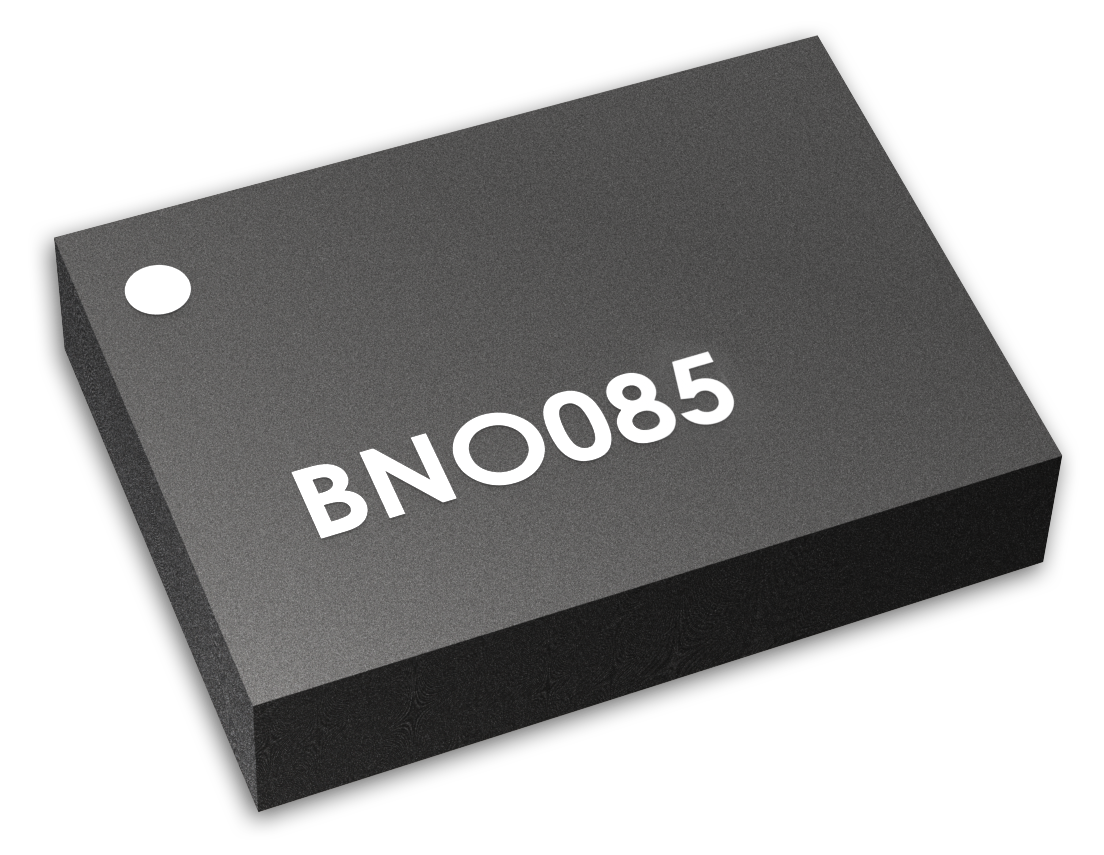

Likewise, sensor supplier Bosch Sensortec has joined hands with software house Hillcrest Labs, a subsidiary of InterDigital, Inc. , to provide turnkey sensor-fusion solutions. Bosch’s BNO080 and BNO085 modules, for example, integrate a triaxial accelerometer, a triaxial gyroscope, and a magnetometer with a 32-bit Arm Cortex-M0+ microcontroller that runs on Hillcrest’s SH-2 firmware.

Bosch’s BNO085 SiP module runs on Hillcrest’s SH-2 firmware. (Image: Hillcrest Labs)

The MotionEngine software in SH-2 provides sophisticated signal-processing algorithms that process sensor data and deliver precise real-time 3D orientation, heading, calibrated acceleration, and calibrated angular velocity. It employs advanced calibration and sensor fusion to turn individual sensor data into motion applications such as activity tracking, context awareness, advanced gaming, and head tracking.

Innovation hotbed

Sensors are nearly everywhere, and that makes sensor fusion a crucial component in the mobile design recipe. Therefore, sensor-fusion technology will continue to evolve as newer applications land on smartphone, wearable, and other mobile platforms.

It’s been more than a decade since sensor fusion found a home in smartphones and other mobile designs, but as it turns out, sensor fusion can still do a lot more. For instance, sensor-fusion technology has a fundamental role in improving the often noisy and inaccurate data returned from sensors. That is especially valuable as voice-activated platforms join the sensor bandwagon, where cleaner input data can dramatically reduce processing time. Then there are Bluetooth-enabled hearable and wearable devices that are adding end-to-end support for Alexa Voice Service.
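The point about cleaning up noisy sensor data can be illustrated with inverse-variance weighting, a textbook way to merge independent readings of the same quantity. This is a sketch under stated assumptions: the function name is hypothetical, and each sensor's noise variance is assumed to be known in advance.

```python
def fuse_estimates(readings):
    """Fuse independent readings of one quantity.

    readings: list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance). Less noisy sensors get
    proportionally more weight.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    return fused, 1.0 / total
```

The fused variance is always smaller than that of the best individual sensor, which is the basic reason combining several imperfect sensors can outperform any one of them.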

Sensor fusion will also play a critical role in preventing battery drain and ensuring trigger-word efficiency in these always-on and always-listening voice applications. At the same time, new location-based services and motion-based gaming environments will continue to push sensor-fusion technology toward a new era of innovation.
