Layers are good things in many instances. Thin strips of wood, for example, are layered to form plywood, which exhibits a strength the individual strips could not achieve on their own. In engineering, a top-down hierarchical design approach is, at its core, a layered approach: complex processes are broken down into smaller, more manageable chunks, and a pipeline is established to deliver the real-time data that the final stages need at the moment they need it.

Each stage takes its turn and needs time to do its work, which adds lag between input and output. Once a pipeline is full and streaming, the data coming out the other side is contiguous relative to itself, even though it is delayed from the source. This is acceptable for one-way data transmission but can introduce problems on bi-directional communications channels. We experience this in our personal lives as the digital delays inherent in IP-based telephony.
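To make the lag concrete, here is a minimal sketch assuming a hypothetical four-stage pipeline with made-up per-stage delays. It separates the two effects described above: one-way latency is the sum of every stage's delay, a request/response exchange pays that latency in both directions (assuming the return path crosses identical stages), and once the pipeline is full, throughput is set only by the slowest stage.

```python
# Hypothetical per-stage processing delays in microseconds (assumed values, not measured).
STAGE_DELAYS_US = [12.0, 30.0, 8.0, 20.0]


def one_way_latency(delays):
    """Time for one item to pass through every stage in order."""
    return sum(delays)


def round_trip_latency(delays):
    """Request and response each cross the pipeline once (symmetric path assumed)."""
    return 2 * one_way_latency(delays)


def steady_state_throughput(delays):
    """Once the pipeline is full, items leave at the pace of the slowest stage."""
    return 1.0 / max(delays)  # items per microsecond


if __name__ == "__main__":
    print(f"one-way latency: {one_way_latency(STAGE_DELAYS_US):.1f} us")
    print(f"round trip:      {round_trip_latency(STAGE_DELAYS_US):.1f} us")
    rate = steady_state_throughput(STAGE_DELAYS_US) * 1e6
    print(f"throughput:      {rate:.0f} items/s")
```

With these numbers the stream runs at roughly 33,000 items per second, yet every item still arrives 70 µs late, and a conversation waits 140 µs for each reply. Streaming one-way traffic tolerates the first figure; interactive traffic feels the second.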

As more data and functionality move into cloud-based operations, bandwidth and traffic density are increasing to the point where data centers can be overwhelmed, especially if they are oversubscribed. As fiber deployment continues to tackle last-mile connectivity, each endpoint becomes a data center unto itself. If data must pass through multiple servers, routers, switches and layers at every hop along its route, this can and does introduce unacceptable slowdowns in performance and delivery, degrading users' experience and satisfaction.
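Oversubscription is usually expressed as a ratio of the bandwidth a switch can accept from its servers to the bandwidth it can forward upstream. The sketch below computes that ratio for a hypothetical rack switch; the port counts and speeds are illustrative assumptions, not figures from any particular product.

```python
import math


def oversubscription_ratio(server_ports, server_gbps, uplink_ports, uplink_gbps):
    """Server-facing (downlink) capacity divided by uplink capacity.

    A ratio of 1.0 means every server can transmit at line rate simultaneously;
    3.0 means the uplinks can carry only a third of the worst-case offered load.
    """
    downlink = server_ports * server_gbps
    uplink = uplink_ports * uplink_gbps
    return downlink / uplink


if __name__ == "__main__":
    # Hypothetical rack: 48 servers at 10G behind four uplinks.
    before = oversubscription_ratio(48, 10, 4, 40)   # uplinks at 40G
    after = oversubscription_ratio(48, 10, 4, 100)   # same uplink ports at 100G
    print(f"40G uplinks:  {before:.1f}:1")
    print(f"100G uplinks: {after:.1f}:1")
```

In this example, raising only the uplink rate, with no change to the port count, drops the ratio from 3:1 to 1.2:1.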

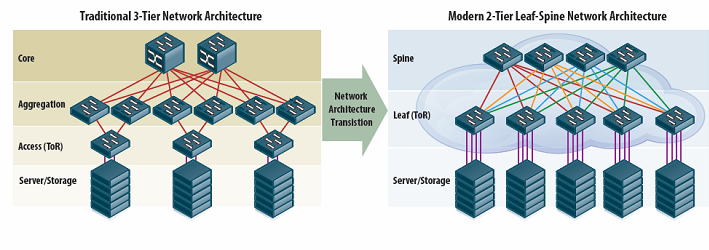

Top-of-Rack (ToR) topologies help aggregate higher-density traffic in local data clusters by flattening the network and eliminating an entire layer. That is exactly what the two-tier leaf-spine architecture proposes to do: it replaces the incumbent three-tier structure with a flatter two-tier structure (Figure 1).

Figure 1: By moving to Top-of-Rack topologies, data centers can eliminate an entire layer and provide higher traffic density and faster response. (Source: Avago Technologies)
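One way to see what flattening buys is to count the switches a frame crosses between two racks. The sketch below runs a breadth-first search over two small hypothetical fabrics: a simplified three-tier tree (access, aggregation, core, with the redundant links real designs use left out) and a leaf-spine fabric in which every leaf (ToR) switch links to every spine. The switch names and sizes are assumptions chosen for illustration.

```python
from collections import deque
from itertools import combinations


def build_graph(edges):
    """Undirected adjacency map from a list of (switch, switch) links."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def switches_on_path(graph, src, dst):
    """Switches crossed on the shortest path from src to dst, found by BFS."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return dist[node] + 1  # links traversed + 1 = switches touched
        for nbr in graph[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    raise ValueError(f"{dst} is unreachable from {src}")


def worst_case_switches(graph, edge_switches):
    """Longest shortest path between any two server-facing switches."""
    return max(switches_on_path(graph, a, b) for a, b in combinations(edge_switches, 2))


# Hypothetical three-tier tree: 4 access switches, 2 aggregation switches, 1 core.
ACCESS = ["acc0", "acc1", "acc2", "acc3"]
THREE_TIER = build_graph([
    ("acc0", "agg0"), ("acc1", "agg0"),
    ("acc2", "agg1"), ("acc3", "agg1"),
    ("agg0", "core"), ("agg1", "core"),
])

# Hypothetical two-tier leaf-spine fabric: every leaf connects to every spine.
LEAVES = ["leaf0", "leaf1", "leaf2", "leaf3"]
SPINES = ["spine0", "spine1"]
LEAF_SPINE = build_graph([(leaf, spine) for leaf in LEAVES for spine in SPINES])

if __name__ == "__main__":
    print("three-tier worst case:", worst_case_switches(THREE_TIER, ACCESS), "switches")
    print("leaf-spine worst case:", worst_case_switches(LEAF_SPINE, LEAVES), "switches")
```

In the three-tier tree, traffic between racks under different aggregation switches crosses five switches. In the leaf-spine fabric, every rack-to-rack path is leaf, spine, leaf: three switches, and the same for every pair, which keeps latency predictable.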

The key to making this feasible is a new generation of transceivers that can be retrofitted into older facilities. Hot-swappable, faster-data-rate ports in existing switch, router and server boxes extend the service life of equipment already in service, and they also let the network scale to higher data rates.

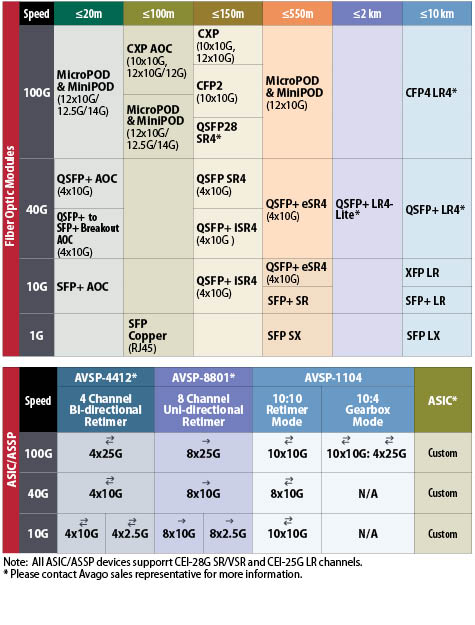

This is exactly the tactic Avago is employing as it rounds out its portfolio of fiber-optic transceivers, transmitters and receiver modules (Figure 2). Existing 1G slots can be upgraded to 10G, 10G ports to 40G, and 40G ports to 100G and beyond, at distances from 20 meters to 10 kilometers. Even copper transceivers have been updated to allow chip-to-chip and chip-to-module connectivity at 10G, 25G and 28G. Designers can expect even higher rates as this trend continues.

Figure 2: Form factor-compatible replacement modules designed for higher data rate ToR topologies can give new life to old racks and scale down the network. (Source: Avago Technologies)
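Part of what a faster port buys is lower serialization delay, the time needed to clock a packet onto the link. The sketch below compares that with propagation delay over the 20 m to 10 km reach quoted above. The 1,500-byte packet size (framing overhead ignored) and the roughly 5 ns per meter propagation figure for light in glass fiber are assumptions for illustration.

```python
PACKET_BYTES = 1500    # assumed full-size packet; preamble and inter-frame gap ignored
FIBER_NS_PER_M = 5.0   # approximate propagation delay in glass fiber (about 2e8 m/s)


def serialization_ns(rate_gbps, packet_bytes=PACKET_BYTES):
    """Time to clock one packet onto the wire; bits divided by Gbit/s gives ns."""
    return packet_bytes * 8 / rate_gbps


def propagation_ns(distance_m):
    """Time for the signal to travel the given length of fiber."""
    return distance_m * FIBER_NS_PER_M


if __name__ == "__main__":
    for rate in (1, 10, 40, 100):
        print(f"{rate:>3}G serialization: {serialization_ns(rate):>8.0f} ns")
    for meters in (20, 10_000):
        print(f"{meters:>6} m propagation: {propagation_ns(meters):>8.0f} ns")
```

Under these assumptions, moving from 1G to 100G cuts per-hop serialization from 12 µs to 120 ns. Over a 10 km link, the roughly 50 µs of propagation then dominates, which is one reason removing hops matters as much as raising port speed.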

But designers should also expect to do more burst processing. As traffic density increases, bursts of traffic can overwhelm older Layer 2 switches and Layer 3 routers, and those older boxes may not support the increased data throughput. As a result, these newer-generation parts are ideal choices as the baseline for next-generation designs. Those designs may also adopt next-generation protocols such as Transparent Interconnection of Lots of Links (TRILL), in which connectivity information is distributed to all routing bridges (RBridges) through a separate link-state protocol (IS-IS).
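Why bursts hurt older boxes can be illustrated with a simple tick-based queue model: packets arrive, a finite buffer holds what it can, and the port drains a fixed number per tick. This is a minimal sketch, not a model of any real switch; the traffic pattern, buffer sizes and drain rates are all hypothetical.

```python
def simulate_burst(arrivals, drain_per_tick, buffer_capacity):
    """FIFO port model. Each tick, packets arrive, excess beyond the buffer is
    dropped, then up to drain_per_tick packets are transmitted.

    Returns (delivered, dropped, peak_occupancy).
    """
    queue = delivered = dropped = peak = 0
    for arriving in arrivals:
        accepted = min(arriving, buffer_capacity - queue)
        dropped += arriving - accepted
        queue += accepted
        peak = max(peak, queue)
        sent = min(queue, drain_per_tick)
        queue -= sent
        delivered += sent
    while queue:  # flush whatever is still buffered after the burst
        sent = min(queue, drain_per_tick)
        queue -= sent
        delivered += sent
    return delivered, dropped, peak


# Hypothetical traffic: light load, a three-tick burst, then light load again.
TRAFFIC = [2, 2, 20, 20, 20, 2, 2]

if __name__ == "__main__":
    older = simulate_burst(TRAFFIC, drain_per_tick=10, buffer_capacity=16)
    newer = simulate_burst(TRAFFIC, drain_per_tick=25, buffer_capacity=32)
    print("older port (delivered, dropped, peak):", older)
    print("newer port (delivered, dropped, peak):", newer)
```

Both ports see the same 68 packets. The slower, shallower port drops 24 of them during the burst, while the faster port with a larger buffer absorbs the burst without loss.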

Knowing is half the battle, and online resources such as Avago's Product Solutions Guide for Data Centers are a great place to start. An overall Selection Guide also showcases an array of solutions.

By: Jon Gabay
