By Gina Roos, editor-in-chief

MIT researchers have developed a sub-terahertz receiving array that could complement the infrared-based LiDAR imaging systems used in autonomous vehicles. Light-based image sensors often struggle in harsh environmental conditions such as fog, said MIT, while sub-terahertz wavelengths, which lie between microwave and infrared radiation on the electromagnetic spectrum, can be detected through fog and dust clouds.

Here’s how a sub-terahertz imaging system works: “To detect objects, a sub-terahertz imaging system sends an initial signal through a transmitter; a receiver then measures the absorption and reflection of the rebounding sub-terahertz wavelengths. That sends a signal to a processor that recreates an image of the object.”

However, several challenges stand in the way of implementing sub-terahertz sensors in vehicles, said MIT. One is the need for a strong baseband output signal from the receiver to the processor. The problem is that many existing discrete-component solutions are large and expensive, while smaller on-chip sensor arrays produce weak signals.

In a paper published in the IEEE Journal of Solid-State Circuits, the MIT researchers describe a “two-dimensional, sub-terahertz receiving array on a chip that’s orders of magnitude more sensitive,” which allows it to capture and interpret sub-terahertz wavelengths in environments with a lot of signal noise.

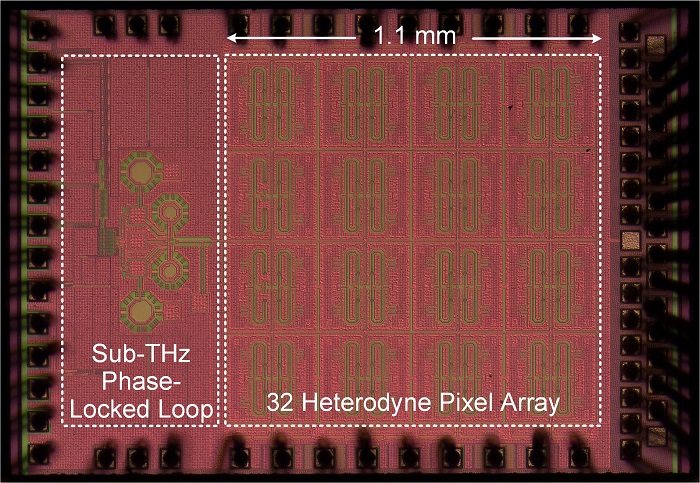

Image: MIT.

The authors of the paper are Ruonan Han, an associate professor of electrical engineering and computer science and director of the Terahertz Integrated Electronics Group in the MIT Microsystems Technology Laboratories (MTL), and Zhi Hu and Cheng Wang, both Ph.D. students in the Department of Electrical Engineering and Computer Science working in Han’s research group.

“A big motivation for this work is having better ‘electric eyes’ for autonomous vehicles and drones,” said Han in a press release. “Our low-cost, on-chip sub-terahertz sensors will play a complementary role to LiDAR for when the environment is rough.”

The receiving array is based on heterodyne detectors, a scheme of independent signal-mixing pixels that are typically difficult to integrate densely on a chip, said MIT. The researchers, however, shrank the detectors enough to fit many of them on one chip.

According to the paper, “heterodyne sensors, which mix the input signal with a strong local oscillator (LO) signal, are able to generate much higher baseband output and sensitivity compared to square-law detectors,” which addresses the need for a strong baseband output signal.
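Why a strong LO helps can be seen from an idealized textbook model. If the mixer responds to the square of the total field, the signal and LO fields add before squaring, and the cross term produces a beat whose amplitude is proportional to 2·sqrt(P_sig·P_LO), whereas a square-law detector alone outputs something proportional to P_sig. The sketch below compares the two under that assumption; the powers and function names are hypothetical, and real pixels add noise, losses, and conversion factors the model ignores.

```python
import math


def square_law_output(p_sig_w: float) -> float:
    """Idealized square-law detector: output proportional to signal power."""
    return p_sig_w


def heterodyne_beat_amplitude(p_sig_w: float, p_lo_w: float) -> float:
    """Idealized mixer: squaring (E_sig + E_lo) gives a cross term 2*E_sig*E_lo.

    With power proportional to field squared, its amplitude is 2*sqrt(P_sig*P_lo).
    """
    return 2.0 * math.sqrt(p_sig_w * p_lo_w)


P_LO_W = 1e-3  # hypothetical strong local oscillator power, watts

for p_sig in (1e-6, 1e-9, 1e-12):  # hypothetical echo powers, strong to weak
    ratio = heterodyne_beat_amplitude(p_sig, P_LO_W) / square_law_output(p_sig)
    print(f"P_sig = {p_sig:.0e} W: heterodyne/square-law output = {ratio:,.0f}")
```

In this model the advantage grows as the echo gets weaker, which is why mixing with a strong LO matters most for faint reflections.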

Central to the design is the single “heterodyne” pixel, which mixes the incoming sub-terahertz signal with a local oscillation (LO), an electrical signal used to shift the frequency of the input, and outputs the difference between the two frequencies, said MIT. This down-mixing is what produces a signal in the megahertz range that a baseband processor can interpret.
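The down-mixing step can be illustrated with an ideal multiplier: multiplying two cosines yields components at the difference and the sum of their frequencies, and the low difference term is what gets passed on for baseband processing. This minimal sketch uses scaled-down, hypothetical frequencies (1,000 Hz and 990 Hz instead of sub-terahertz values) so it runs quickly in plain Python; the helper names are illustrative, not taken from the paper.

```python
import math


def mix(f_rf, f_lo, fs, n):
    """Ideal mixer: multiply an RF tone by an LO tone, sample by sample."""
    return [
        math.cos(2 * math.pi * f_rf * k / fs) * math.cos(2 * math.pi * f_lo * k / fs)
        for k in range(n)
    ]


def tone_amplitude(x, f, fs):
    """Amplitude of the component of x at frequency f (single-bin DFT)."""
    re = sum(v * math.cos(2 * math.pi * f * k / fs) for k, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * f * k / fs) for k, v in enumerate(x))
    return 2 * math.hypot(re, im) / len(x)


# Scaled-down stand-ins (Hz) for a sub-terahertz echo and a nearby LO.
FS, N_SAMPLES = 10_000, 10_000  # 1 s of samples: every tone below fits whole cycles
F_RF, F_LO = 1_000, 990
f_if = abs(F_RF - F_LO)         # difference (intermediate) frequency

y = mix(F_RF, F_LO, FS, N_SAMPLES)
print("difference term:", f_if, "Hz, amplitude", round(tone_amplitude(y, f_if, FS), 3))
print("sum term:", F_RF + F_LO, "Hz, amplitude", round(tone_amplitude(y, F_RF + F_LO, FS), 3))
print("original RF:", F_RF, "Hz, amplitude", round(tone_amplitude(y, F_RF, FS), 3))
```

The product contains only the difference and sum tones, each at half amplitude; in a real receiver a low-pass filter would keep the difference term and discard the sum.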

The output signal can be used to calculate the distance of objects, similar to how LiDAR works, and combining the output signals of an array of pixels is what enables high-resolution images of a scene for detecting and recognizing objects.
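The article does not say how the receiver turns its output into distance. For illustration, this sketch assumes plain time-of-flight ranging, as in pulsed LiDAR, where distance is half the round-trip delay times the speed of light, and applies it per pixel to build a tiny depth map. The delays and function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_round_trip(t_s: float) -> float:
    """Distance to a reflector from an echo's round-trip delay: d = c * t / 2."""
    return C * t_s / 2.0


def round_trip_from_range(d_m: float) -> float:
    """Inverse relation: the delay a reflector at d_m metres would produce."""
    return 2.0 * d_m / C


# Hypothetical per-pixel echo delays (seconds) for a 2x2 array.
delays = [[200e-9, 210e-9],
          [400e-9, 66.7e-9]]

# One range value per pixel gives a simple depth image of the scene.
depth_map = [[range_from_round_trip(t) for t in row] for row in delays]

for row in depth_map:
    print(" ".join(f"{d:6.2f} m" for d in row))
```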

The prototype, a 32-pixel array integrated on a 1.2-mm² device, is about 4,300 times more sensitive than the pixels in today’s on-chip sub-terahertz array sensors, said MIT.
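A quick back-of-the-envelope check puts the reported figures in other units. The decibel conversion assumes the 4,300× factor is a power ratio, which the article does not specify, and the per-pixel area is simply the die area divided by 32, so it includes any shared circuitry on the chip.

```python
import math

SENSITIVITY_GAIN = 4_300  # reported improvement factor
CHIP_AREA_MM2 = 1.2       # reported device area
PIXELS = 32               # reported pixel count

# Assumption: treat the improvement as a power ratio when converting to dB.
gain_db = 10 * math.log10(SENSITIVITY_GAIN)

# Average die area per pixel, including any shared circuitry.
area_per_pixel_mm2 = CHIP_AREA_MM2 / PIXELS

print(f"{gain_db:.1f} dB, {area_per_pixel_mm2:.4f} mm^2 per pixel")
```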

Heterodyne pixel arrays work only when the local oscillation signals of all pixels are synchronized, said MIT. Sharing one local oscillation signal among all pixels, however, creates a scalability problem: as the array scales up, the power shared by each pixel decreases, and because the output baseband signal strength depends on that power, the signal generated by each pixel becomes weak, resulting in low sensitivity. This led the designers to a decentralized design that solves the scalability issue.
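The scaling problem can be sketched with the same idealized heterodyne model, in which output amplitude grows with sqrt(P_LO). Splitting one hub’s LO power evenly among N pixels then shrinks each pixel’s output by 1/sqrt(N), while a pixel with its own oscillator keeps its LO power as the array grows. All powers are hypothetical, distribution losses are ignored, and the per-pixel LO power is set equal to the hub’s total only to make the comparison easy to read.

```python
import math


def if_amplitude(p_sig_w: float, p_lo_w: float) -> float:
    """Idealized heterodyne output amplitude, proportional to sqrt(P_sig * P_LO)."""
    return 2.0 * math.sqrt(p_sig_w * p_lo_w)


def centralized_lo_per_pixel(p_hub_w: float, n_pixels: int) -> float:
    """One LO hub split evenly among all pixels (distribution losses ignored)."""
    return p_hub_w / n_pixels


P_SIG_W = 1e-9    # hypothetical received power per pixel
P_HUB_W = 1e-3    # hypothetical total LO power of a single central hub
P_LOCAL_W = 1e-3  # hypothetical LO power each pixel generates for itself


def output_ratio(n_pixels: int) -> float:
    """Centralized-to-decentralized output ratio for an array of n_pixels."""
    central = if_amplitude(P_SIG_W, centralized_lo_per_pixel(P_HUB_W, n_pixels))
    local = if_amplitude(P_SIG_W, P_LOCAL_W)
    return central / local


for n in (1, 4, 16, 32):
    print(f"{n:>2} pixels: centralized/decentralized output = {output_ratio(n):.3f}")
```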

In a decentralized design, each pixel generates its own local oscillation signal for receiving and down-mixing the incoming signal. An integrated coupler synchronizes each pixel’s local oscillation with those of neighboring pixels, and because the signal no longer comes from a shared hub, each pixel gets more output power. As a result, each pixel offers high sensitivity.
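How coupling can pull slightly mismatched oscillators into step can be illustrated with a generic phase-oscillator model of the Kuramoto/Adler type, in which each oscillator’s phase is nudged toward its neighbors’. This is a swapped-in textbook model, not the chip’s coupler circuit: the chain topology, the coupling strength `k`, and the frequency mismatch are all hypothetical.

```python
import math


def simulate_chain(omegas, k, dt=0.01, steps=30_000):
    """Euler-integrate a chain of nearest-neighbour coupled phase oscillators.

    dtheta_i/dt = omega_i + k * sum over neighbours j of sin(theta_j - theta_i)
    Returns each oscillator's instantaneous frequency (rad/s) at the end.
    """
    n = len(omegas)
    theta = [0.0] * n  # all oscillators start in phase

    def rates(th):
        out = []
        for i in range(n):
            r = omegas[i]
            if i > 0:
                r += k * math.sin(th[i - 1] - th[i])
            if i < n - 1:
                r += k * math.sin(th[i + 1] - th[i])
            out.append(r)
        return out

    for _ in range(steps):
        r = rates(theta)
        theta = [t + dt * ri for t, ri in zip(theta, r)]
    return rates(theta)


# Hypothetical free-running frequencies (rad/s) with a small pixel-to-pixel spread.
N_OSC = 8
omegas = [1.0 + 0.01 * (i - (N_OSC - 1) / 2) for i in range(N_OSC)]

uncoupled = simulate_chain(omegas, k=0.0, steps=1)
coupled = simulate_chain(omegas, k=1.0)

print(f"frequency spread, uncoupled: {max(uncoupled) - min(uncoupled):.4f}")
print(f"frequency spread, coupled:   {max(coupled) - min(coupled):.2e}")
```

Without coupling, each oscillator keeps its own frequency; with coupling strong enough relative to the mismatch, all eight settle to one common frequency (the mean of the free-running ones) with fixed phase offsets.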

The drawback is that each pixel’s footprint is much larger, which is a challenge for a high-density array. So the researchers combined the antenna, down-mixer, oscillator, and coupler into a single multifunctional component in each pixel, enabling a “decentralized design of 32 pixels,” said MIT. A phase-locked loop, locked to a 75-MHz reference, keeps the 32 local oscillation signals stable.
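A phase-locked loop in its simplest integer-N form drives its oscillator to an integer multiple of the reference, f_out = N × f_ref, so the output inherits the reference’s stability in fractional terms. The sketch below computes N for a hypothetical LO target using the 75-MHz reference from the article, and shows that a 1-ppm reference error becomes a 1-ppm output error. The target frequency and the direct-multiplication architecture are assumptions; the article does not describe the chip’s PLL at that level of detail.

```python
F_REF_HZ = 75_000_000  # 75-MHz reference, as reported for the chip's PLL


def integer_n_lock(target_hz: int, ref_hz: int = F_REF_HZ) -> tuple[int, int]:
    """Pick the nearest integer multiplier N; a locked loop outputs N * f_ref."""
    n = (target_hz + ref_hz // 2) // ref_hz  # round to the nearest integer
    return n, n * ref_hz


# Hypothetical LO target, for illustration only.
TARGET_HZ = 240_100_000_000
n, f_locked = integer_n_lock(TARGET_HZ)

# A locked output inherits the reference's fractional error: a 1-ppm (75 Hz)
# reference offset shifts the output by N * 75 Hz, which is also 1 ppm.
ref_error_hz = F_REF_HZ // 1_000_000
drift_hz = n * ref_error_hz

print(f"N = {n}, locked LO = {f_locked} Hz, 1-ppm reference error -> {drift_hz} Hz")
```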

“We designed a multifunctional component for a [decentralized] design on a chip and combined a few discrete structures to shrink the size of each pixel,” Hu stated. “Even though each pixel performs complicated operations, it keeps its compactness, so we can still have a large-scale dense array.”
