Efficient data compression and transmission are crucial in space missions because resources such as bandwidth and storage capacity are limited. Missions therefore need compression methods that preserve critical information while reducing data size. Tests show that space-grade processors used in satellite payloads can now handle resource-hungry applications such as data compression, at a performance level similar to that of a laptop computer.

For Earth-observation satellites, the payload application handles large amounts of data from image sensors, video cameras or even binary files. Data compression is therefore a key feature for storage and downlink transmission. Before transmission to Earth, data must be stored and compressed on board, which requires significant computational capability.

Teledyne e2v's multi-core processors, designed for space applications, are well suited to this type of application. Real-time data-compression algorithms can be optimized to run on several cores, which substantially increases compression speed.

This article presents a performance test and evaluation of data compression for space applications using Teledyne e2v space processors in on-board computing satellite payloads. The study examines the LS1046 and LX2160 processors and compares them with similar industrial CPUs that are not designed for usage in space.

LS1046 and LX2160 processors

The LS1046 and LX2160 processors (Figures 1 and 2) are designed for space applications that demand high computational power and radiation tolerance. They can also execute deep-learning AI algorithms to process space imagery. These devices enable information to be pre-processed at the edge, which reduces the downlink bandwidth required to transmit data to the ground.

Typical applications include:

- Communication satellites/constellations, embedding AI/security

- Landing and avionics space systems, robotics and mechanical-arm control

- Human mission exploration, science missions

- Early warning, observation satellites, security/automated situation detection and awareness/AI

- Defense in space

- High-bandwidth space observation

- Meteorological satellites

The LS1046 is the first Arm processor developed by Teledyne e2v. It is a quad-core Arm Cortex-A72 processor operating at a maximum frequency of 1.8 GHz, with a computing capacity of 30k DMIPS and ECC-protected L1 and L2 caches. It also offers a wide range of peripherals, such as packet-processing acceleration, 1/10 Gigabit Ethernet (GbE), PCIe Gen 3, SPI, I²C and UART. It is certified for space up to Level 1 according to the standards NASA EEE-INST-002 – Section M4–PEMs and ECSS-Q-ST-60-13C.

The LX2160, which is currently under development, is the most recent iteration: a 16-core, 64-bit Arm Cortex-A72-based processor with a computing capability of up to 200k DMIPS and a maximum clock speed of 2.2 GHz. It is equipped with the wire-rate I/O processor (WRIOP) functionality, which allows it to manage high-speed peripherals efficiently. These include 100 GbE, multiple PCIe Gen 3 interfaces, hardware L2 switching, DPAA2 with 100-Gbits/s compression/decompression and a 50-Gbits/s encryption engine. It also provides a dual CAN interface, as well as UART, SPI and I²C.

Test setup

To evaluate the efficiency of Teledyne e2v’s space processors, the test used a hardware configuration that included a QLS1046-Space development kit and an LX2160ARDB development board from NXP Semiconductors.

The development kit contains the QLS1046-Space module, which has 4 GB of DDR4 memory and operates at 1.6 GHz. The LX2160 processor includes a compression/decompression acceleration engine that can handle rates of up to 100 Gbits/s, but the test evaluated only the computing capabilities of the cores. Enabling this engine would significantly enhance the compression performance of the LX2160. The benchmark was run on several processors, using files that are common in many applications.

Type of compression

The goal is to use a compression method that offers a good tradeoff between speed and compression performance. The two main types of compression are described below.

Lossless compression

This technique minimizes the file size while ensuring that no data is compromised. It operates by detecting and removing redundant information from the dataset. Lempel-Ziv-Welch (LZW) compression, run-length encoding (RLE) and Huffman coding are all prevalent lossless compression algorithms.

These methods are well suited to situations in which every part of the file must remain intact, as is the case with photographs and images captured in space. The principal benefits of lossless compression are the absence of quality degradation and reversibility (i.e., the ability to restore data to its original state).
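Run-length encoding, one of the algorithms listed above, shows the reversibility property in a few lines. The following is a minimal illustrative sketch rather than the benchmark's code. It stores runs as Python `(value, count)` tuples instead of a packed byte format, and the function names `rle_encode` and `rle_decode` are hypothetical.

```python
def rle_encode(data: bytes) -> list[tuple[int, int]]:
    """Encode bytes as (value, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for byte in data:
        if runs and runs[-1][0] == byte:
            value, count = runs[-1]
            runs[-1] = (value, count + 1)  # extend the current run
        else:
            runs.append((byte, 1))         # start a new run
    return runs


def rle_decode(runs: list[tuple[int, int]]) -> bytes:
    """Expand (value, run_length) pairs back into the original bytes."""
    return b"".join(bytes([value]) * count for value, count in runs)


if __name__ == "__main__":
    row = bytes([0] * 12 + [255] * 4 + [0] * 8)  # e.g. a mostly dark image row
    encoded = rle_encode(row)
    print(encoded)                     # [(0, 12), (255, 4), (0, 8)]
    assert rle_decode(encoded) == row  # lossless: exact reconstruction
```

RLE only pays off on long runs of identical values. On data that changes at every byte, the pairs are larger than the input.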

Huffman coding is one example of a lossless method. It exploits redundancy in the file by assigning shorter codes to more frequent symbols. The algorithm can also be parallelized across the processor's cores.
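The sketch below builds a Huffman code table for a byte string. It is illustrative only and assumes a few simplifications. The encoded output is a string of '0'/'1' characters rather than packed bits, and the code table, which a real format would also have to store or transmit, is simply kept in memory. The function names are hypothetical.

```python
import heapq
from collections import Counter


def huffman_code(data: bytes) -> dict[int, str]:
    """Build a prefix-free code table in which frequent bytes get shorter codes."""
    freq = Counter(data)
    if not freq:
        return {}
    if len(freq) == 1:  # a single distinct symbol still needs a 1-bit code
        return {next(iter(freq)): "0"}
    # Heap entries: (frequency, tie-breaker, {symbol: code suffix built so far}).
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)   # the two least frequent subtrees
        f2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, tie, merged))
        tie += 1
    return heap[0][2]


def huffman_encode(data: bytes, table: dict[int, str]) -> str:
    """Concatenate the code of every byte (a bit string, for readability)."""
    return "".join(table[b] for b in data)


def huffman_decode(bits: str, table: dict[int, str]) -> bytes:
    """Walk the bit string; because the code is prefix-free, the first match is correct."""
    reverse = {code: sym for sym, code in table.items()}
    out, current = bytearray(), ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return bytes(out)


if __name__ == "__main__":
    data = b"aaaaaaabbbc"
    table = huffman_code(data)
    bits = huffman_encode(data, table)
    assert huffman_decode(bits, table) == data
    print(len(bits), "bits instead of", 8 * len(data))  # 15 bits instead of 88
```

One way to parallelize the method is to split the input into chunks and give each chunk its own table on its own core. This costs a little compression ratio because each chunk carries its own table.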

Lossy compression

This method discards part of the file data to attain higher compression ratios. The file becomes smaller, but its quality is also reduced. Widely used lossy compression formats include JPEG and MPEG.

The main benefit of lossy compression is a much smaller file. The tradeoff is an image that differs from the original, ideally only marginally.
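Quantization is the simplest way to see where the "lossy" part comes from. The sketch below keeps only the 4 most significant bits of each 8-bit sample, which halves the raw size, and then reconstructs each value at the centre of its bin. This technique is only a stand-in for illustration. Real codecs such as JPEG quantize transform coefficients rather than raw pixels and add entropy coding on top. The sample values and function names are hypothetical.

```python
def quantize(samples: list[int], bits: int = 4) -> list[int]:
    """Keep only the `bits` most significant bits of each 8-bit sample (lossy)."""
    shift = 8 - bits
    return [s >> shift for s in samples]


def dequantize(codes: list[int], bits: int = 4) -> list[int]:
    """Map each code back to the centre of its quantization bin."""
    shift = 8 - bits
    if shift == 0:
        return list(codes)
    return [(c << shift) + (1 << (shift - 1)) for c in codes]


if __name__ == "__main__":
    pixels = [12, 13, 200, 201, 202]  # hypothetical 8-bit pixel values
    codes = quantize(pixels)          # each code now fits in 4 bits
    restored = dequantize(codes)
    print(codes, restored)            # [0, 0, 12, 12, 12] [8, 8, 200, 200, 200]
    assert restored != pixels         # the discarded bits cannot be recovered
```

With 4-bit codes, the reconstruction error of any sample is at most 8. The size saving comes precisely from information that can never be restored.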

Power consumption is a critical factor in space applications, particularly satellites, because electrical power on board is limited and costly to provide. In this regard, processors outperform FPGAs in terms of computing efficiency per watt. Where lossy compression is acceptable, on-board compression using processors may be the optimal solution.

The primary benefit of benchmarking with a lossless compression algorithm is that the complete original file can be recovered, which is impossible with a lossy algorithm.

LZ4 plugin

The benchmark uses the LZ4 plugin to compress files. LZ4 is a lossless data-compression technique that prioritizes fast compression and decompression at the cost of a relatively low compression ratio. It is especially suited to situations in which rapid data processing is crucial. The name “LZ4” is derived from “Lempel-Ziv 4,” which reflects its association with the Lempel-Ziv family of compression techniques.
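The core idea behind LZ4 is dictionary matching from the LZ77 family. The encoder looks up each 4-byte sequence it has already seen and replaces repeats with a back-reference (offset, length). The sketch below is a simplified greedy parser built on that idea, not an LZ4 implementation. It emits Python tuples instead of the LZ4 block format and uses a dictionary instead of LZ4's fixed-size hash table. Its only ties to LZ4 are the 4-byte minimum match and the 16-bit maximum offset. The function names are hypothetical, and real deployments would use the reference LZ4 library.

```python
MIN_MATCH = 4        # LZ4 also uses a 4-byte minimum match
MAX_OFFSET = 65535   # LZ4 back-references are limited to a 16-bit offset


def lz_compress(data: bytes) -> list[tuple[bytes, int, int]]:
    """Greedy LZ77-style parse into (literals, offset, match_length) tokens."""
    tokens = []
    last_seen: dict[bytes, int] = {}  # 4-byte sequence -> most recent position
    i = anchor = 0                    # anchor marks the start of pending literals
    while i + MIN_MATCH <= len(data):
        key = data[i:i + MIN_MATCH]
        candidate = last_seen.get(key)
        last_seen[key] = i
        if candidate is not None and i - candidate <= MAX_OFFSET:
            length = MIN_MATCH
            # Extend the match as far as the bytes keep agreeing.
            while i + length < len(data) and data[candidate + length] == data[i + length]:
                length += 1
            tokens.append((data[anchor:i], i - candidate, length))
            i += length
            anchor = i
        else:
            i += 1
    tokens.append((data[anchor:], 0, 0))  # trailing literals with no match
    return tokens


def lz_decompress(tokens: list[tuple[bytes, int, int]]) -> bytes:
    """Replay literals, then copy `length` bytes from `offset` bytes back."""
    out = bytearray()
    for literals, offset, length in tokens:
        out += literals
        start = len(out) - offset
        for k in range(length):  # byte-by-byte copy supports overlapping matches
            out.append(out[start + k])
    return bytes(out)


if __name__ == "__main__":
    sample = b"abcdabcdabcdabcd"
    tokens = lz_compress(sample)
    print(tokens)  # [(b'abcd', 4, 12), (b'', 0, 0)]
    assert lz_decompress(tokens) == sample
```

The decoder only copies bytes and never searches, which is why this family of algorithms decompresses so quickly.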

The LZ4 method is commonly employed in applications that require high-speed performance with little delay, such as real-time data processing and communication systems.

Tested files

The benchmark used a variety of file types. All of them are commonly compressed in many applications, which makes them particularly relevant to space missions. Text files are commonly used for drafting and managing notes, procedures and reports. Among image formats, JPEG and RAW are of particular interest for compression, especially when a large number of photos must be sent to Earth quickly or in near-real time. Executable files could be used to update software on a satellite or to integrate AI plugins. The characteristics of the tested files are shown in Table 1.

The benchmark (Figure 3) compresses files in parallel to ensure maximum performance and fast results. It measures the time taken by each compression and calculates the compression speed in megabits per second. It also measures the time taken by all threads together and calculates the total compression speed in megabits per second across all threads. The benchmark runs in an endless loop, enabling continuous file compression.
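A benchmark of this kind could be structured as in the sketch below, under several stated assumptions. Python's standard library has no LZ4, so `zlib` at level 1, its fastest setting, stands in for it. Each file gets its own thread and its own buffer, so no data is shared. The loop runs for a fixed number of rounds instead of forever. The function names and sample inputs are hypothetical. CPython's `zlib` generally releases the GIL while compressing, so threads can overlap. A native implementation (for example C with one pthread per core) would be closer to the setup described here.

```python
import os
import time
import zlib
from concurrent.futures import ThreadPoolExecutor


def compress_timed(payload: bytes, level: int = 1) -> float:
    """Compress one buffer and return its throughput in Mbit/s."""
    start = time.perf_counter()
    zlib.compress(payload, level)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid dividing by zero
    return len(payload) * 8 / elapsed / 1e6


def worker(payload: bytes, rounds: int) -> list[float]:
    """One thread per file: compress the same independent buffer `rounds` times."""
    return [compress_timed(payload) for _ in range(rounds)]


def run_benchmark(files: list[bytes], rounds: int = 3) -> tuple[list[float], float]:
    """Return (average Mbit/s per file, aggregate Mbit/s across all threads)."""
    if not files or rounds < 1:
        raise ValueError("need at least one file and one round")
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(files)) as pool:
        # Bounded rounds instead of an endless loop, so the sketch terminates.
        per_file = list(pool.map(worker, files, [rounds] * len(files)))
    wall = max(time.perf_counter() - wall_start, 1e-9)
    total_bits = rounds * 8 * sum(len(f) for f in files)
    averages = [sum(speeds) / len(speeds) for speeds in per_file]
    return averages, total_bits / wall / 1e6


if __name__ == "__main__":
    # Hypothetical inputs: one highly redundant buffer, one high-entropy buffer.
    samples = [b"telemetry " * 100_000, os.urandom(1_000_000)]
    per_file, aggregate = run_benchmark(samples)
    for i, mbps in enumerate(per_file):
        print(f"file {i}: {mbps:.0f} Mbit/s")
    print(f"aggregate: {aggregate:.0f} Mbit/s")
```

The aggregate figure divides the total number of bits processed by the wall-clock time of the whole pool. That total is what grows with the number of cores.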

The choice of compression algorithm is crucial for optimizing programs and can differ from one application to another. In lossy compression, several quite different stages can be parallelized. The processor's architecture (in particular, whether vector processing is available) and its number of cores both influence the choice of algorithm.

The same considerations apply to lossless compression algorithms. This case is less complex, however, because the algorithm requires less computational power. Power consumption and thermal management are additional parameters to consider, especially in space applications, where multi-core processors are an attractive solution.
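A single large file can also be spread across cores by splitting it into fixed-size chunks and compressing each chunk independently. The sketch below shows this pattern. It uses `zlib` again as a stand-in for LZ4, and the 1 MiB chunk size and function names are hypothetical tuning choices. Because the chunks share no state, they need no locking. The price is a slightly lower ratio, since matches cannot cross chunk boundaries.

```python
import os
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Optional

CHUNK = 1 << 20  # 1 MiB per chunk: a hypothetical tuning value


def parallel_compress(data: bytes, workers: Optional[int] = None,
                      chunk: int = CHUNK) -> list[bytes]:
    """Split `data` into fixed-size chunks and compress them concurrently.

    Each chunk is compressed independently, so the threads share no state.
    """
    chunks = [data[i:i + chunk] for i in range(0, len(data), chunk)] or [b""]
    with ThreadPoolExecutor(max_workers=workers or os.cpu_count() or 1) as pool:
        return list(pool.map(zlib.compress, chunks))  # map preserves chunk order


def parallel_decompress(blocks: list[bytes]) -> bytes:
    """Decompress each block and concatenate them in their original order."""
    return b"".join(zlib.decompress(b) for b in blocks)


if __name__ == "__main__":
    payload = bytes(range(256)) * 20_000  # about 5 MB of synthetic data
    blocks = parallel_compress(payload, workers=4)
    assert parallel_decompress(blocks) == payload
    print(len(blocks), "chunks,", sum(map(len, blocks)), "compressed bytes")
```

Smaller chunks expose more parallelism but lose more cross-chunk redundancy. Larger chunks do the opposite, so the chunk size is worth tuning per application.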

AI algorithms could play a useful role in data and image compression, especially in decision-making to filter and prioritize tasks. On-board computing performance is always limited, and AI algorithms can both reduce the amount of processing and schedule tasks intelligently.

Experimental results

The benchmarks were run on the LS1046 and LX2160 processors in similar hardware environments to minimize sources of error. The program was designed to minimize memory contention between cores, which was one of the most challenging aspects.

Cache coherency was not exercised in the test, because each thread processes its own independent data. The processor's MMUs and L1/L2 memory systems usually maintain cache coherency unless the application manages it explicitly.

Results are presented for two main aspects: compression ratio and speed of compression.

Compression ratio

The compression ratio was considered only to emphasize how important it is to understand the compression process and the differences between file types. The compression ratio depends strictly on the file type. For JPEG, which is already compressed, the ratio is minimal or non-existent, whereas for RAW the ratio is significant. Only a few files were tested, but the ratios were very consistent from one run to the next.
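The dependence of ratio on content can be reproduced with a short experiment. In the sketch below, the compression ratio is the original size divided by the compressed size. `zlib` stands in for LZ4, so absolute numbers will differ from the benchmark's. Two synthetic buffers act as proxies for real files: a highly repetitive buffer in place of redundant raw data, and random bytes in place of already-compressed JPEG data. Both proxies are assumptions, not the tested files.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by compressed size (higher means more compressible)."""
    return len(data) / len(zlib.compress(data, level))


if __name__ == "__main__":
    redundant = b"0000 0000 0000 0000\n" * 50_000  # stands in for redundant RAW-like data
    high_entropy = os.urandom(1_000_000)           # stands in for already-compressed JPEG data
    print(f"redundant:    {compression_ratio(redundant):.1f}:1")
    print(f"high entropy: {compression_ratio(high_entropy):.3f}:1")  # about 1, or slightly below
```

High-entropy input can even grow slightly, because the compressor adds its own framing overhead and finds no redundancy to remove.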

Other benchmarks appear to align with this outcome. The NCI files appear to be more easily compressible, likely because of their internal structure that facilitates redundancy. Text and executable files have a similar compression ratio.

To understand a compression application, one must be familiar with how the algorithm operates and with the target compressed-file format. Once the differences between files and compression solutions are well understood, it becomes simpler to select the most suitable algorithm for each scenario and to compare its speed with that of other processors.

Speed of compression

Computing speed is a crucial aspect to consider when comparing processors. This benchmark made it possible to evaluate compression performance on the files used in a customer application and to compare the results with those of other processors.

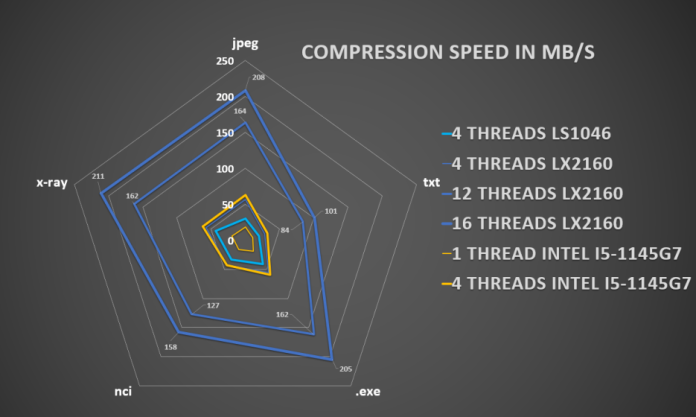

As shown in Figure 4, the LX2160 demonstrates remarkable compression speed compared with the Intel Core i5-1145G7. In addition, the figure demonstrates that the LX2160 is 4× faster than both the LS1046 and an Intel i5. Based on the algorithm, it appears that the number of cores has a direct impact on speed.

Figure 4: Compression speed on tested files with Teledyne e2v’s LS1046 and LX2160 processors (Source: Teledyne e2v)
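A back-of-the-envelope check can be made under a simplifying assumption: throughput scales linearly with core count, and each core contributes equally. Under that assumption, the core counts given earlier predict the speedup of the LX2160 over the LS1046:

$$
S_{\text{ideal}} = \frac{N_{\text{cores, LX2160}}}{N_{\text{cores, LS1046}}} = \frac{16}{4} = 4
$$

This estimate ignores differences in clock frequency (the tested LS1046 module ran at 1.6 GHz, while the LX2160 runs at up to 2.2 GHz) and in memory bandwidth. The agreement with the measured factor is therefore indicative rather than proof of linear scaling.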

Based on the compression speed test results of these space-grade processors, managing the vast amounts of data generated during flight is now achievable. This is particularly crucial as image sensors continue to improve in resolution and capture rate.

About the author

Manuel Blanco is an application engineer at Teledyne e2v, specializing in the development of demonstrations and research on the applications of digital products, such as processors and space-grade memory systems. He is currently completing an apprenticeship program that alternates between his studies at INP Phelma, where he focuses on microelectronics and telecommunications, and his work at Teledyne e2v, focusing on processor and memory product applications in space systems.