Editor’s note: EE Times’ Special Project on neuromorphic computing covers everything you need to know about neuromorphic computers, from the basics (in the article below) to whether the technology is a bridge to quantum computing, and everything in between.

How much intelligence can we build into machines? We’ve come a long way in recent decades, but assistants like Siri and Alexa, image-recognition apps, and even recommendation engines tell us as much about how far we have to go as how far we have come. For deep-learning tasks like medical diagnosis, where there is an abundance of computing power and plenty of well-tagged data, the progress has been astonishing.

But what about the rest?

Our aspirations for AI go well beyond data science. We want our wearable biosensor systems to warn us instantly of anomalies that could indicate a serious health event, self-driving cars that can react in real time to the most challenging driving conditions, and autonomous robots that can act with minimal direction. How do we get there?

For now, it may seem like at least our mobile devices understand us (after a fashion), but they really don’t. They simply relay our voices, to be decoded into words, then requests, and into actions or answers. This is a computation-intensive process.

A 2013 projection showed that “people searching by voice for three minutes a day using speech recognition would double the data centers’ computation demands, which would be very expensive using conventional CPUs.” This led to Norman Jouppi and his colleagues at Google developing the tensor processing unit (TPU), focused on optimizing hardware for matrix multiplication. That offers the promise of improving power efficiency of neural network queries by 95%.

Those processors are already making a big impact in server-based applications, but the approach concentrates all the memory and processing at the data center. It therefore puts huge reliance on the communication infrastructure and is potentially wasteful, as time and energy are spent communicating information — much of it irrelevant.

Enter edge computing: How can we meet the speed, power, area, and weight requirements of mobile and standalone applications?

Selectively copying biology

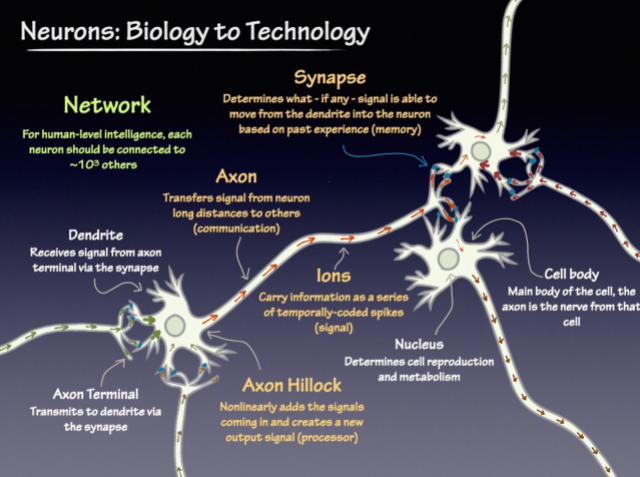

Neural networks used for machine intelligence are inspired by biology (Fig. 1). Hence, neuromorphic engineers try to imitate that same biology to create hardware that will run those networks optimally. That approach gives engineers a large menu to choose from.

Fig. 1: The goal of neuromorphic engineering is to learn as many lessons from biology as possible in an attempt to achieve the low power and high functionality of the brain. The design choices that engineers make in implementing neural processing, memory, and communication will determine how efficiently an artificial brain performs a given task.

One strategy stops partitioning chip architectures into processor and memory, instead breaking them into neurons (which perform both functions).

Next, large many-to-many neuron connectivity is preferred because it makes the networks more powerful. Sensor-processor pipelines that maintain the geometry of incoming signals (such as images) can be helpful because they allow for efficient neighbor-neighbor interactions during processing, as in the human retina. Keeping signal values in the analog domain is beneficial because everything can be processed simultaneously rather than split up into complicated actions on different bits.
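As a rough illustration of a neighbor-neighbor interaction on a signal that keeps its geometry, the sketch below applies a simple lateral-inhibition rule to a row of sensor values: each cell subtracts the average of its two immediate neighbors. The rule, the function name `lateral_inhibition`, and the sample values are assumptions chosen for illustration, not a description of any particular chip or of the retina itself.

```python
def lateral_inhibition(row, strength=1.0):
    """Each cell subtracts the mean of its left and right neighbors.

    At the two ends, the missing neighbor is replaced by the cell's own value.
    """
    out = []
    n = len(row)
    for i, value in enumerate(row):
        left = row[i - 1] if i > 0 else value
        right = row[i + 1] if i < n - 1 else value
        out.append(value - strength * (left + right) / 2.0)
    return out


# Hypothetical input: two flat regions separated by a step edge.
signal = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
response = lateral_inhibition(signal)
```

Flat regions cancel to zero and only the cells on either side of the edge respond, which is the kind of local computation that is cheap when each cell is physically next to its neighbors.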

Finally, relating the timing of communications to neural behavior — not an arbitrary clock — means that signals inherently contain more information: Brain-like spikes that arrive together are often related to the same events.

This is why the term “neuromorphic engineering” or “neuromorphic computing” is a bit slippery. The term was coined by Caltech Professor Carver Mead in the late 1980s. Mead’s projects and others over the following decades were particularly focused on the benefits of using analog computation. An example is a system that replicates a set of fly’s-eye motion-detector circuits: Analog signals are detected by multiple receptors, then propagate sideways through nearest-neighbor interaction. This system was very fast and very low-power (Reid Harrison demonstrated just a few microwatts in the late 1990s) and showed the advantages of both retaining signal geometry and analog processing.

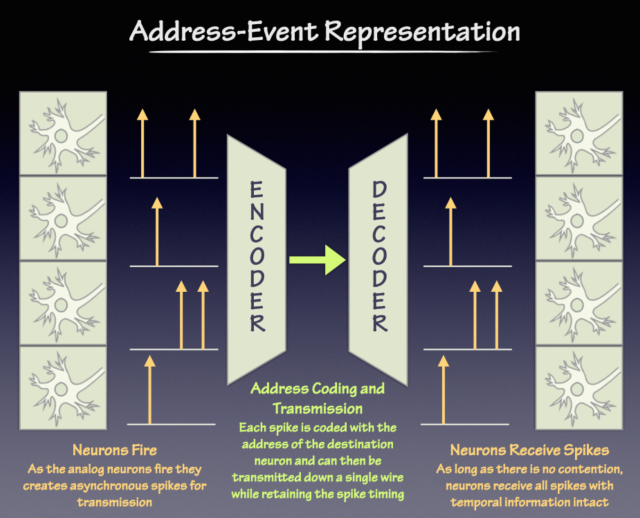

Another characteristic of “pure” neuromorphic systems is their frequent use of address-event representation, or AER. This communication system has many of the advantages of conventional networks, providing many-to-many communication while retaining spike timing.

Using AER, a neuron sends out a spike whenever it needs to, depending on its learning, behavior, and the input just received. The spike is transmitted to all other neurons in the network, but only the neurons that should receive it do; all others ignore it. What makes this network different is that the gaps between the spikes are relatively large, so spikes that arrive from different neurons at roughly the same time can be considered correlated (Fig. 2).
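The idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the protocol of any real AER chip: the class `AERBus`, the routing table, the microsecond timestamps, and the helper `coincident_sources` are all hypothetical names introduced for illustration.

```python
from collections import defaultdict


class AERBus:
    """Toy shared bus: each spike travels as a (time, source_address) event."""

    def __init__(self):
        # Routing table: source address -> set of destination neuron ids.
        self.routes = defaultdict(set)
        # Per-destination log of the (time, source) events it accepted.
        self.inbox = defaultdict(list)

    def connect(self, source, destination):
        self.routes[source].add(destination)

    def send(self, time_us, source):
        # The event is broadcast; only neurons listed for this address accept it.
        for destination in self.routes[source]:
            self.inbox[destination].append((time_us, source))


def coincident_sources(events, window_us):
    """Group sources whose spikes follow the previous spike within window_us."""
    groups, current = [], []
    for time_us, source in sorted(events):
        if current and time_us - current[-1][0] > window_us:
            groups.append([s for _, s in current])
            current = []
        current.append((time_us, source))
    if current:
        groups.append([s for _, s in current])
    return groups


bus = AERBus()
for src in (1, 2, 3):
    bus.connect(src, 10)
bus.connect(3, 11)

bus.send(100, 1)
bus.send(105, 2)
bus.send(900, 3)

groups = coincident_sources(bus.inbox[10], window_us=20)
```

Here neuron 10 treats the spikes from sources 1 and 2 as one correlated group and the later spike from source 3 as a separate one, while neuron 11 only ever sees source 3. No neuron is wired directly to another; the address carried by each event does the work of the wire.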

Fig. 2: Address-event representation is one way of maintaining the timing of spikes that neurons use to communicate — without each neuron directly wired to hundreds or thousands of others. It works so long as the likelihood of a spike is sufficiently low so that there is little contention among spikes arriving simultaneously at the encoder.
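To get a feel for the "sufficiently low" condition, the following sketch estimates how often a spike would share its bus slot with another spike. It assumes that neurons fire independently as Poisson processes and that the bus handles one event per fixed time slot; both assumptions, along with the function name and the example numbers, are illustrative and do not come from a specific device.

```python
import math


def contention_probability(n_neurons, rate_hz, slot_s):
    """Chance that a given spike shares its bus slot with at least one other spike.

    Under the Poisson assumption, the number of spikes from the other
    neurons in one slot has mean (n_neurons - 1) * rate_hz * slot_s.
    """
    expected_others = (n_neurons - 1) * rate_hz * slot_s
    return 1.0 - math.exp(-expected_others)


# Hypothetical figures: 1,000 neurons sharing a bus with 1-microsecond slots.
sparse = contention_probability(1000, 10.0, 1e-6)    # 10 spikes/s per neuron
busy = contention_probability(1000, 1000.0, 1e-6)    # 1,000 spikes/s per neuron
```

With these made-up numbers, sparse firing gives a contention chance of roughly 1%, while the busy case rises to well over half, which is why AER relies on spikes being relatively rare.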

Many neuromorphic systems use AER, including those from France’s Prophesee and the Swiss firm aiCTX (AI cortex), which focus on sensory processing. The approach is both elegant and practical, providing the benefits of hard-wired connections between neurons without all the wiring. It also means that information from an incoming signal can simply flow through the processor in real time, with irrelevant information being discarded and the remainder further processed in the neural pipeline.

Tradeoffs

However attractive this “classic” neuromorphic technology is, downsides remain. For instance, all that power and speed doesn’t come without a penalty. Digital electronics are more power-hungry than analog precisely because devices are constantly correcting errors: forcing the signal to either one or zero. With analog circuitry, this doesn’t happen, so errors and drift caused by changes in temperature, variability in devices, and other factors don’t disappear. The result may not be exactly wrong but simply shifted or mangled.
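The difference can be illustrated with a small simulation. In this sketch, a value of 1.0 passes through a chain of noisy stages; the "digital" version snaps the value back to 0 or 1 after every stage, while the "analog" version lets the error accumulate. The stage count, noise level, and function name are assumptions, and the sketch models only error accumulation, not the power cost of restoration.

```python
import random


def propagate(value, stages, noise, restore):
    """Pass a value through noisy stages; optionally snap to 0 or 1 after each."""
    for _ in range(stages):
        value += random.gauss(0.0, noise)
        if restore:
            value = 1.0 if value >= 0.5 else 0.0
    return value


random.seed(0)
trials = 1000

# Mean absolute deviation from the intended value of 1.0 after 50 stages.
analog_error = sum(
    abs(propagate(1.0, 50, 0.05, restore=False) - 1.0) for _ in range(trials)
) / trials
digital_error = sum(
    abs(propagate(1.0, 50, 0.05, restore=True) - 1.0) for _ in range(trials)
) / trials
```

With noise this small relative to the threshold, the restored signal stays at exactly 1.0, whereas the unrestored signal wanders away from it as the stages add up.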

Making matters worse is the reality that electronics manufacturing is far from perfect. Thankfully, that matters less with digital technology because circuits can be tested. If they fail, they can be discarded.

Still, a set of neural weights that process perfectly in one analog system might work very poorly on another. If you are trying to learn centrally and then clone that behavior into many different machines, there’s a price to pay. The penalty could be accepting lower reliability or, more likely, designing in more redundancy to overcome these problems.
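A minimal sketch of that tradeoff follows. It assumes that device variation acts as a random gain error on each weight and that redundancy means averaging several independently mismatched copies of the same neuron; both are simplifying assumptions, and the names, weights, and the 10% mismatch figure are hypothetical.

```python
import random


def neuron_output(weights, inputs, mismatch, rng):
    """Weighted sum where each weight is scaled by a device-specific random gain."""
    return sum(
        w * (1.0 + rng.gauss(0.0, mismatch)) * x for w, x in zip(weights, inputs)
    )


def redundant_output(weights, inputs, mismatch, copies, rng):
    """Average several independently mismatched copies of the same neuron."""
    total = sum(neuron_output(weights, inputs, mismatch, rng) for _ in range(copies))
    return total / copies


rng = random.Random(1)
weights = [0.5, -0.3, 0.8, 0.1]   # learned centrally (hypothetical values)
inputs = [1.0, 2.0, 0.5, 4.0]
ideal = sum(w * x for w, x in zip(weights, inputs))


def mean_abs_error(copies, devices=2000):
    """Average deviation from the ideal output across many simulated devices."""
    errors = (
        abs(redundant_output(weights, inputs, 0.1, copies, rng) - ideal)
        for _ in range(devices)
    )
    return sum(errors) / devices


single_error = mean_abs_error(copies=1)
redundant_error = mean_abs_error(copies=8)
```

The same weights give a different answer on every simulated device. Averaging eight copies shrinks the typical error, but at the cost of eight times the circuitry, which is the price referred to above.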

Luckily, this is not an insurmountable problem, but it is worth noting as we move toward emerging technologies such as memristors. These elegant devices can be used in neural networks, as they are memories that can be embedded within core neural circuitry. Memristors have the advantage of allowing analog neurons to become even more compact and lower-power.

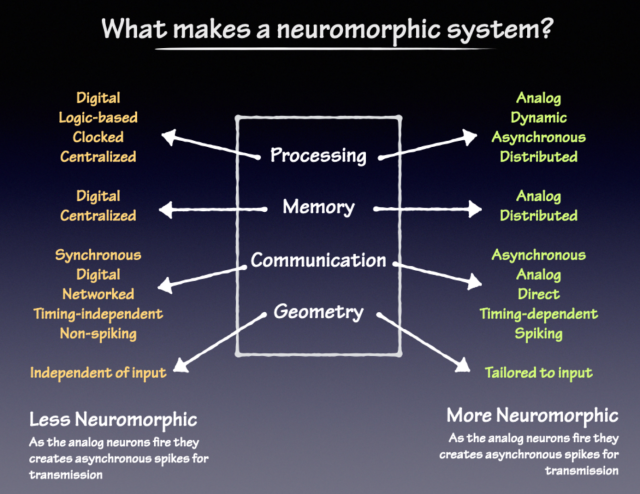

Another approach involves minimizing (or eliminating) analog circuitry while implementing architectural lessons based on nature, especially distributed, well-connected neurons. The resulting systems are only as neuromorphic as is expedient (Fig. 3).

Fig. 3: The line between what is and isn’t neuromorphic is blurry. Where engineers make compromises depends on the application and the criteria for success. If absolute repeatability is critical and power is not, less neuromorphic solutions are preferred. Where speed, power, area, and weight are the main concerns, and fuzzier behavior is more acceptable, neuromorphic computing becomes practical.

IBM’s TrueNorth, for instance, achieves very low power despite being digital because logic-based devices can be made using nanometer manufacturing processes; analog circuitry is much more difficult to implement at these small sizes.

Intel’s Loihi chip moves a step closer to analog, as it is asynchronous: Each neuron is allowed to fire at its own rate. Synchronization happens through a set of neighbor interactions, so that no process begins a new time step until the others have finished the current one, rather than through clock-driven operation.

Virtuous circle

All of this makes it appear that analog neuromorphic systems are dead ends, but that’s not necessarily the case. Loihi and TrueNorth are both general-purpose chips, intended to be used for generic learning tasks.

But biology is not generic; it optimizes based on specific tasks. In the short term, where speed, power, area, and weight really matter, engineers, too, will want to optimize. They will ultimately choose the most efficient design, even if a chip is more expensive.

If some niche applications for neuromorphic computing prove successful, such as keyword spotting or sensory processing, then that could create a virtuous circle of investment, development, innovation, and optimization. The end result could be a viable industry with its own version of Moore’s Law, one that is more tightly connected with the needs of cognitive and intelligence tasks.

Which leads to a logical conclusion: Neuromorphic processors could eventually overcome the bottlenecks currently faced in trying to build sophisticated intelligent machines. Among the goals are machines that can reason and recognize objects and situations, then adapt on the fly to different types of sensory data — akin to human brains.

That goal will require deep understanding of biological processes in the effort to make neuromorphic computing a practical reality.

Sunny Bains teaches at University College London, is author of “Explaining the Future: How to Research, Analyze, and Report on Emerging Technologies,” and writes EE Times’ column “Brains and Machines.”
