Content provided by AspenCore and IBM

When a patient visits a doctor, the session typically begins with the doctor asking a question like, “So why are you here today?” or “How have you been feeling?” In this initial phase, the doctor tries to gather patient-specific information about recent events that led the patient to notice a medical problem. Armed with this information, the doctor can begin an examination, using his experience and training to diagnose the cause of the patient’s complaints.

This opening exchange, a simple verbal back-and-forth between doctor and patient, is essential to quickly determining a course of action. How much easier and more productive would life be if people could do the same in other circumstances, and in particular if they could interact with machines as easily as a doctor interacts with a patient?

In a recent conversation, Amit Fisher, who is in charge of the Cognitive IoT offerings for the Watson IoT business unit at IBM, points out how different it is today for people trying to diagnose machine problems. “Let’s assume we are talking about a maintenance worker and this maintenance worker needs to go through some procedure [to fix a system with pumps]. One option is to ask someone; another option is to open the internet; the third option is to go to the literature and look for the manuals and the procedures and all the guidelines.”

All of these options are time-consuming, and none of them draws on the key information: the state of the specific machine in front of the worker. Amit suggests that the best option is to ask the system itself: What are the symptoms? What does the error code mean? How come I see this error code but I do not see this light turning on? Why does this red light continue to blink even after I replace the pumps? He says, “You cannot ask Google, and you cannot ask the internet. The reason you cannot is because you must have the context. … You need to understand what the current state is — what is going wrong, what was the error code, what [were] the history and the messages that the device sent over the last week or year — in order to understand and to provide intelligent answers to this interrogation.”

Adding cognition

Making the machine capable of answering these questions is the specialty of Amit and his group. The work hinges on two key concepts. The first is having machines outfitted with appropriate sensors and connected to networks, which is what the Internet of Things, or IoT, is all about. The second, and trickier, part is allowing machines and humans to interact orally and aurally; this is the realm of natural language processing and cognitive computing.

Natural language processing, or NLP, allows a person to give operating instructions to a computer not through a specialized programmatic interface (usually an API) but in everyday English, or Spanish, Chinese, or any other human language. The computer’s job is to interpret these natural-language statements and then use a knowledge base (a set of facts, assumptions, and rules, in machine-readable form, that relate to the tasks the machine is designed to perform) to solve the problem and either carry out the requested task or respond with an appropriate answer. This process is called cognitive computing.
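To make the idea of a knowledge base concrete, here is a deliberately tiny Python sketch of the maintenance scenario above. It assumes a hypothetical pump system whose current state (the error code and whether the pumps were replaced) is known; the error code `E42`, the rules, and all function names are invented for illustration. A regular expression stands in for real language understanding, so this shows only the shape of the idea (interpret the question, combine it with device context, apply rules), not how IBM's Watson services work.

```python
import re
from dataclasses import dataclass, replace
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class DeviceState:
    """Hypothetical snapshot of a pump system: the context a web search lacks."""
    error_code: str
    pumps_replaced: bool


# Hypothetical knowledge base: rules pairing a condition on the device state
# with the answer it justifies. More specific rules come first.
Rule = Tuple[Callable[[DeviceState], bool], str]
RULES: List[Rule] = [
    (lambda s: s.error_code == "E42" and s.pumps_replaced,
     "E42 persists after a pump swap: check the pressure-sensor wiring."),
    (lambda s: s.error_code == "E42",
     "E42 signals low outlet pressure: inspect or replace the pump."),
]


def extract_error_code(question: str) -> Optional[str]:
    """Naive 'understanding': find an error code such as E42 in the question."""
    match = re.search(r"\bE\d+\b", question.upper())
    return match.group(0) if match else None


def answer(question: str, state: DeviceState) -> str:
    """Interpret the question, merge it with device context, then apply rules."""
    code = extract_error_code(question) or state.error_code
    context = replace(state, error_code=code)
    for condition, response in RULES:
        if condition(context):
            return response
    return f"No rule covers {code}; escalate to a specialist."


state = DeviceState(error_code="E42", pumps_replaced=True)
print(answer("Why do I still see E42?", state))
# -> E42 persists after a pump swap: check the pressure-sensor wiring.
```

The same question yields a different answer when `pumps_replaced` is `False`, which is the point Amit makes: the answer depends on the machine's state, not just on the words of the question.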

Carried to their ultimate degree, these disciplines could in principle produce an artificially intelligent computer capable of understanding and reacting to anything. Building such a general-purpose machine would require a vast knowledge base, a great deal of computing power, and a very significant investment in programming and training.

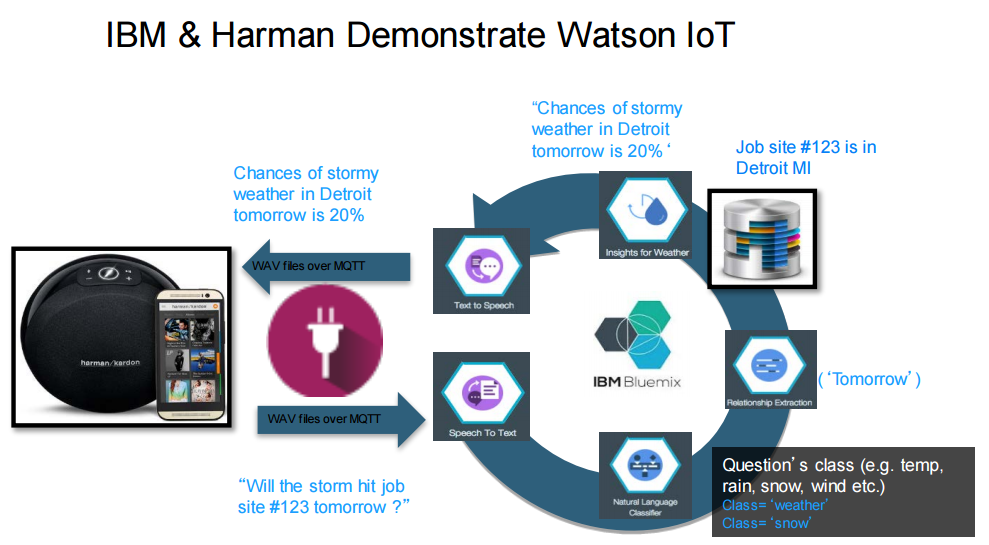

However, limiting the tasks to be performed, and hence the computing power and size of the knowledge base needed, can simplify the problem to the point at which practical systems can actually be made. Amit provides one example of such a system that was built with AV manufacturer Harman Kardon: “We basically took a regular speaker and made it a ‘smart speaker’ that you can interact with, ask questions of, and train to answer business-related questions.”

The project was not about advancing NLP itself; it was meant to show how much easier it has become to build such a system. Amit notes, “While we were able to build [such a] system two, three years ago, it would probably have required five, six, seven smart PhDs for months and months.” By contrast, the Harman Kardon project “took us literally one-and-a-half developers for two weeks to have a proper demo, which was pretty accurate.”

Available today

The success of the Harman Kardon project was due not only to the maturing of the technology but also to the fact that the API is, as Amit puts it, “ready for consumption from a programmer’s perspective. So a regular developer doesn’t have to have a PhD in text analytics and natural language processing and can still be very effective in this new domain. This is the big change that I see in the market.” IBM’s API embeds the essential elements of natural language processing, and programmers add a knowledge base for a particular task based on their understanding of the desired outcome.

The API can be used with any knowledge base, and Amit notes that “the beauty of it is that it can be trained.” Once the API has been tailored to a specific domain through the knowledge base, its precision improves over time as it is trained on the task, Amit explains. “This is why it’s called a cognitive system, because the system learns over time how to be more accurate and more specific to the domain.”

Creating a knowledge base isn’t as complex as it may sound at first. To understand the process, we first need to consider just what the cognitive process is. “What a cognitive system is trying to do,” Amit explains, “is to basically replicate what we are doing as human beings. Think about any decision that you are making, from a simple decision like whether to take the elevator or the stairs, or what is the next model of car you’re going to buy. The way we make the decision is by looking at the facts and evidence, understanding the alternatives. We are trying to interpret the implication of each decision through a set of values that we care about, a set of metrics that we care about. We have this value function, and we’re trying to evaluate the end result — how a specific decision will influence those metrics. And by maximizing or minimizing a specific value, we make a decision. That’s the natural process that we go through every day for every small decision, sometimes without even thinking about it.”
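The decision process Amit describes can be written down as a value function: predict the outcome of each alternative, score that outcome against the weighted metrics we care about, and pick the alternative with the highest score. The Python sketch below applies this to his elevator-or-stairs example. The metric names, predicted outcomes, and weights are all made up for illustration, and a weighted sum is only one simple choice of value function; this is not how Watson computes its decisions.

```python
from typing import Dict

Metrics = Dict[str, float]


def value(outcome: Metrics, weights: Metrics) -> float:
    """Value function: weighted sum of the metrics we care about."""
    return sum(w * outcome.get(metric, 0.0) for metric, w in weights.items())


def decide(alternatives: Dict[str, Metrics], weights: Metrics) -> str:
    """Choose the alternative whose predicted outcome maximizes the value function."""
    return max(alternatives, key=lambda name: value(alternatives[name], weights))


# Made-up predicted outcomes for each alternative (0..1, higher is better).
alternatives = {
    "elevator": {"time_saved": 0.8, "exercise": 0.0, "effort_avoided": 0.9},
    "stairs": {"time_saved": 0.4, "exercise": 0.9, "effort_avoided": 0.1},
}

# Two different sets of values lead to two different decisions.
in_a_hurry = {"time_saved": 1.0, "exercise": 0.2, "effort_avoided": 0.5}
health_first = {"time_saved": 0.2, "exercise": 1.0, "effort_avoided": 0.1}

print(decide(alternatives, in_a_hurry))    # -> elevator
print(decide(alternatives, health_first))  # -> stairs
```

The facts (predicted outcomes) stay the same in both calls; only the weights change, and with them the decision. That separation between evidence and values is what the quote above describes.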

To make a computer operate in this manner, you have to depart from the typical deterministic programming model with its “decision-tree like” analysis. “With [the computer system known as] Watson, we are trying to do the same thing as in a human cognitive process. We are trying to define the field of knowledge which is the domain.”

To do this, developers must gather the corpus of knowledge about a task and tell the machine which resources are valuable for the task and how those resources rank in order of importance. Next, the semantics of the task are created: the data is indexed to produce the metadata Watson needs to make sense of the data, which also serves as the basis for reasoning. The training phase can then begin, building a reasoning model that will be used for induction and deduction. Learning is iterative and continuous, with every outcome used to update the reasoning model.
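The steps above (gather a corpus, rank its resources, index it, then keep updating the model from outcomes) can be illustrated with a toy Python sketch. Everything here is a hypothetical stand-in: keyword overlap replaces real semantic analysis, and a simple multiplicative weight update replaces real training. It shows the feedback loop only and should not be read as a description of how Watson builds its reasoning models.

```python
import re
from collections import defaultdict
from typing import Dict, Optional, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for real semantic analysis."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class TinyReasoner:
    """Hypothetical toy: rank documents for a question, then learn from feedback."""

    def __init__(self, corpus: Dict[str, str], priority: Dict[str, float]):
        # Index step: keyword -> ids of documents containing it (the "metadata").
        self.index: Dict[str, Set[str]] = defaultdict(set)
        for doc_id, text in corpus.items():
            for word in tokenize(text):
                self.index[word].add(doc_id)
        # Developer-supplied importance ranking becomes the starting weights.
        self.weight = dict(priority)

    def best(self, question: str) -> Optional[str]:
        """Score each document by matched keywords times its current weight."""
        scores: Dict[str, float] = defaultdict(float)
        for word in tokenize(question):
            for doc_id in self.index.get(word, set()):
                scores[doc_id] += self.weight[doc_id]
        return max(scores, key=scores.get) if scores else None

    def feedback(self, doc_id: str, helpful: bool, rate: float = 0.5) -> None:
        """Learning step: every outcome nudges the model's weights."""
        self.weight[doc_id] *= (1 + rate) if helpful else (1 - rate)


reasoner = TinyReasoner(
    corpus={
        "manual": "Replace the pump if pressure is low.",
        "bulletin": "A blinking red light after pump replacement means a sensor fault.",
    },
    priority={"manual": 2.0, "bulletin": 1.0},
)
question = "Why is the red light blinking after I replace the pump?"
print(reasoner.best(question))  # -> manual (high initial priority wins)
reasoner.feedback("manual", helpful=False)
print(reasoner.best(question))  # -> bulletin (the model has adapted)
```

Initially the manual wins because the developer ranked it higher (score 8.0 versus 5.0). After one piece of negative feedback its weight halves, and the more specific bulletin comes out on top: a small-scale version of "every outcome being used to update the reasoning model."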

To simplify programming, IBM broke the entire process down into a set of small API services that developers can easily use. For example, such a system also needs a way to interact with a microphone and a speaker. Because these services are provided ready-made, creating a cognitive system becomes much simpler. To make sure that the solution architecture can be described, tested, and eventually deployed, IBM provides several capabilities. Perhaps the best known is Node-RED, an open-source environment that lets developers rapidly prototype and simulate interactions with the system.
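The benefit of breaking the system into small services is that each stage can be developed, tested, and swapped on its own. The Python sketch below chains three stages (speech in, reasoning, speech out) into one assistant. All three services are hypothetical stubs: the "speech" services merely decode and encode bytes, and the reasoning step is a one-line rule. In a real system each stub would be replaced by a call to an actual speech or reasoning service.

```python
from functools import reduce
from typing import Any, Callable

Service = Callable[[Any], Any]


def pipeline(*services: Service) -> Service:
    """Chain small services so each one's output feeds the next."""
    return lambda data: reduce(lambda acc, service: service(acc), services, data)


# Hypothetical stand-ins for real speech and reasoning services.
def speech_to_text(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio is already a transcript


def reason(text: str) -> str:
    return "Check the pump." if "pump" in text.lower() else "Please rephrase."


def text_to_speech(text: str) -> bytes:
    return text.encode("utf-8")  # pretend encoding the reply is speech synthesis


assistant = pipeline(speech_to_text, reason, text_to_speech)
print(assistant(b"My pump is making noise"))  # -> b'Check the pump.'
```

Because each stage only agrees on its input and output types, replacing the stub `reason` with a trained model would not require touching the speech stages, which is the kind of decoupling a flow tool such as Node-RED makes visual.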

More to come

IBM is continuing to advance its API tools in areas such as training and quality management, making it easier to create practical, useful systems. Consider, for instance, a wind farm outfitted with cognitive capabilities: when a problem occurs, a maintenance technician could query the system remotely and arrive on site ready to make the fix, instead of spending hours high in the air at the turbine troubleshooting the problem. The same case can be made for deploying the technology in factories and other industrial facilities.

Amit believes the future for the technology is very bright: “I think in the next two to three years, you will see a tremendous amount of innovation coming to the enterprise — automating and making an enterprise system, a cognitive system, an expert system, a reasoning system, you name it. … I think the pace and the acceleration will be like nothing we’ve seen before.”

