BY KURT SHULER

Vice President of Marketing, ArterisIP

www.arteris.com

Developing autonomous driving SoCs is equivalent to having three high-speed sports cars converging on the same intersection from different directions. Each car is on a mission-critical path, but can the drivers safely navigate the intersection without slowing down, stopping, or crashing? Now, instead of cars, imagine three conflicting design requirements converging at the same intersection on an SoC design:

1. Safety

2. Near-real-time embedded performance

3. Supercomputing complexity

Developing autonomous driving technology represents an exciting growth opportunity for the semiconductor industry, and it will attract many developers from both traditional and non-traditional SoC design teams. The stakes are high because the first-place winner will dominate the market. The second- and third-place finishers might be able to survive, but the companies that finish fourth through 20th place will not have much to celebrate.

The engineering challenge is to simultaneously integrate all three features — safety, performance, and complexity — into one device. These requirements may perplex system designers in ways that personal computer, mobile phone, and data center systems didn’t. Autonomous driving chips must be economically viable and meet the technology expectations of automotive OEMs and regulators.

Current trends and ISO 26262

The safety and supercomputing requirements in autonomous driving systems are extremely complex and make it hard to deliver near-real-time performance. Performance suffers further if functional safety mechanisms are added only in software.

Designers of advanced driver assistance systems (ADAS) once attempted to solve these challenges by creating more complex software. This approach was unsustainable for autonomous driving because it adversely affected system latency, processing bandwidth, and safety. Furthermore, once the software is deployed in the field, upgrading and maintaining the system creates more risk and cost.

Fortunately, the ISO 26262 specification offers guidance for the software and hardware development of ADAS designs, including adding safety mechanisms to the CPUs, memory controllers, and on-chip interconnects.

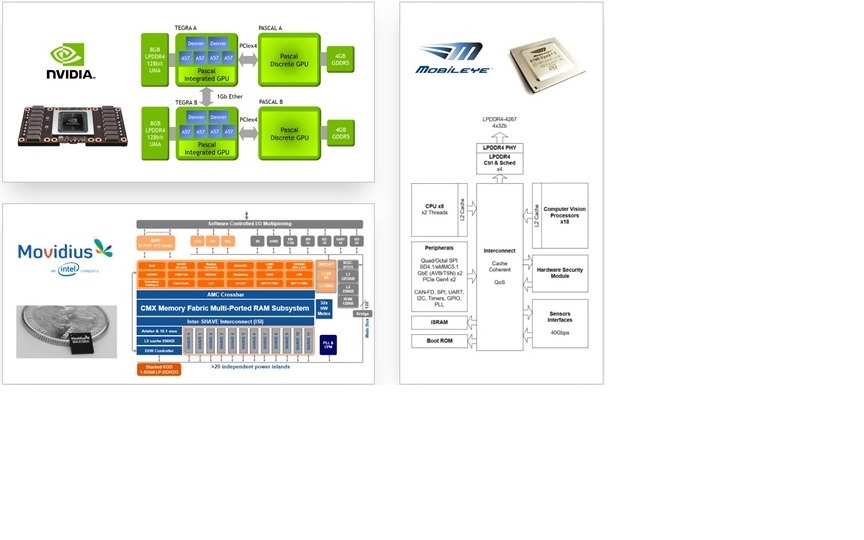

The ISO 26262 specification is enabling the development of even more complex autonomous driving SoCs. These systems implement neural networks with multiple heterogeneous hardware accelerators, which makes vision processing, sensor fusion, and autonomous driving functions more efficient.

Fig. 1: Current machine learning and ADAS chips split algorithmic processing over multiple hardware accelerator types to optimize processing efficiency.

Machine learning drives complexity

To understand the level of complexity, first consider the challenges that hardware engineers face when creating functionally safe supercomputing systems. For autonomous driving SoC designs, responding to changing conditions in the physical world is an essential requirement for meeting this challenge.

The good news is that the physical world exists on a microsecond timescale, while the computing world works in a nanosecond realm. The bad news is that, unlike the mobile and PC space, autonomous driving SoCs will have to perform a type of artificial intelligence known as deep-machine learning.

To avoid reliance on a pre-programmed algorithmic approach, which could never work in the complex physical world, a hardware architecture that implements neural networks is emerging as one of the best ways to achieve deep-machine learning.

Machine learning requires multiple calculations to compute a useful “answer” to the system. Simplifying, parallelizing, and hardware-accelerating these calculations are required to get an answer within the physical-world time budget.

Hardware acceleration

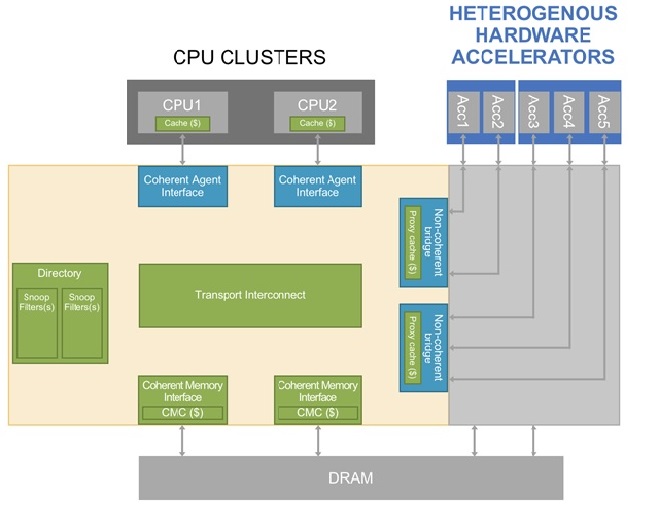

Autonomous driving hardware will drive machine learning by dividing an SoC into areas that perform specific algorithmic tasks, using optimized and connected processing nodes. These processing nodes are custom processing elements or hardware accelerators that act as neurons within the neural network.

Fig. 2: In modern machine-learning systems, a larger share of algorithmic processing is being provisioned on custom hardware accelerators.

To reduce computational latency, a larger share of machine-learning processing must be implemented in the SoC architecture via algorithm-specific hardware accelerators. This approach helps manage latency, bandwidth, and quality of service (QoS) on the communication paths between the processing nodes.

Achieving near-real-time performance for autonomous driving becomes increasingly difficult as the number of hardware accelerators increases. Therefore, the on-chip interconnect linking the accelerators becomes a critical component in achieving improved efficiency. In contrast, data center neural networks can withstand a fair amount of latency due to chip-to-chip or server-to-server communications.

Trend toward cache coherency

In addition to the low-latency requirements, the neural network and supercomputing operations require high-bandwidth communications between processing nodes to keep them efficiently fed. High bandwidth allows the computation and sharing of information to be done as quickly as possible.

One way to share information is to couple memories, usually in the form of internal SRAMs, to each processing element or subsystem. That memory is then used as an output mailbox whose contents feed subsequent computational steps. Managing this type of communication in software becomes harder to maintain and adds latency as the number of processing elements grows.
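The mailbox handoff described above can be sketched in a few lines. This is only an illustrative software model, not any vendor's implementation: the `Mailbox` class, its `full` flag, and `run_pipeline` are hypothetical names, and a real SoC would use memory-mapped SRAM plus interrupts or polling rather than Python objects.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class Mailbox:
    """Hypothetical stand-in for a small SRAM coupled to one processing element."""
    data: Optional[List[int]] = None
    full: bool = False  # software-managed handshake flag

    def post(self, values: List[int]) -> bool:
        # The producer may write only after the consumer has drained the mailbox.
        if self.full:
            return False
        self.data = list(values)
        self.full = True
        return True

    def take(self) -> Optional[List[int]]:
        # The consumer reads the data and releases the mailbox for the next write.
        if not self.full:
            return None
        values, self.data, self.full = self.data, None, False
        return values


def run_pipeline(
    stages: Sequence[Callable[[List[int]], List[int]]],
    first_input: List[int],
) -> Tuple[List[int], int]:
    """Pass data through a chain of stages, with one mailbox per hop."""
    handshakes = 0
    current = first_input
    for stage in stages:
        box = Mailbox()
        if not box.post(stage(current)):  # producer side of the handshake
            raise RuntimeError("mailbox was still full")
        current = box.take()              # consumer side of the handshake
        handshakes += 2
    return current, handshakes
```

Even in this toy model, every added stage brings two more software-managed handshake steps, which is the bookkeeping that grows with the number of processing elements.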

Autonomous driving SoC design teams who are on the cutting edge of innovation have been adopting heterogeneous cache coherency. It’s a more scalable approach for achieving high-bandwidth and low-latency on-chip communications as it simplifies software.

The race toward autonomous driving has officially kicked off a new era of prominence for hardware accelerators in SoC design. In the past, main CPUs or CPU clusters were the most significant hardware blocks on the chip.

Today, hardware accelerators are gaining prominence because SoC architects now innovate by dividing complex algorithms into smaller computations. And these computations can be more efficiently processed by hardware accelerators and by the architecture that best connects and feeds this processing network.

Functional safety

Functional safety adds another layer of complexity to autonomous driving SoC development. The SoC will execute all autonomous driving functions in a scheme that will detect and, in some cases, correct errors and failures. Detecting and correcting faults demands additional system logic, which can steal processing power from the supercomputing functionality, especially if it’s implemented in software.

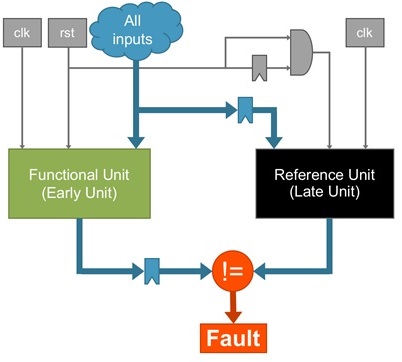

The key for design teams is to analyze their system and implement hardware-based fault detection and repair capabilities based upon the hazards and risks of various faults. These hardware mechanisms can also compensate for the complexity of a software-only fault detection approach.

Fig. 3: Functional safety duplicates certain logic and compares results to identify faults.
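The duplicate-and-compare idea in Fig. 3 can be modeled in software as a rough sketch. The function and parameter names here are hypothetical, and real designs do this comparison in hardware logic; the example only shows the principle that two copies of the same logic receive the same input and a mismatch signals a fault.

```python
from typing import Callable, Tuple


def duplicate_and_compare(
    unit: Callable[[int], int],
    checker: Callable[[int], int],
    value: int,
) -> Tuple[int, bool]:
    """Run the same input through two copies of a unit and compare the results.

    Returns the primary result and a flag that is True when the copies disagree.
    """
    primary = unit(value)
    shadow = checker(value)
    fault_detected = primary != shadow
    return primary, fault_detected


def healthy_unit(x: int) -> int:
    # Illustrative logic only: any deterministic function would do.
    return x + 1


def faulty_unit(x: int) -> int:
    # Same logic with an injected single-bit error on the output.
    return (x + 1) ^ 0x4
```

With two copies, a mismatch shows that a fault occurred but not which copy is wrong, so this sketch detects errors without correcting them.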

Addressing faults in hardware adds logic to the SoC design. This increases latency and die area, but it is often the only way to meet system QoS and power consumption requirements. For example, duplicating an SoC interconnect unit can add a few clock cycles of latency to an operation, which lengthens the task by only nanoseconds.

However, implementing an equivalent functional safety mechanism in software takes longer, in the millisecond range, and consumes more power, especially for any off-chip DRAM access.
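To put the nanosecond-versus-millisecond gap in perspective, here is a back-of-the-envelope calculation. The numbers are assumptions chosen for illustration and do not come from a measured design: 4 extra clock cycles, a 1-GHz interconnect clock, and a 1-ms software check.

```python
def cycles_to_ns(cycles: int, clock_hz: float) -> float:
    """Convert a number of clock cycles to nanoseconds at the given clock rate."""
    return cycles * 1e9 / clock_hz


def slowdown_ratio(software_ms: float, hardware_ns: float) -> float:
    """How many times longer the software path takes than the hardware path."""
    return software_ms * 1e6 / hardware_ns


# Assumed values for illustration only.
EXTRA_CYCLES = 4          # latency added by a duplicated interconnect unit
CLOCK_HZ = 1e9            # 1-GHz on-chip clock
SOFTWARE_CHECK_MS = 1.0   # software-based safety check

hardware_ns = cycles_to_ns(EXTRA_CYCLES, CLOCK_HZ)
ratio = slowdown_ratio(SOFTWARE_CHECK_MS, hardware_ns)
```

Under these assumptions, the hardware mechanism adds 4 ns while the software check takes 250,000 times longer. Actual figures depend on the clock rate, the design, and the software involved.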

Enable hardware development teams

To achieve these “oil-and-water” requirements simultaneously, companies will need expertise in functional safety, near-real-time embedded processing, and high-performance computing. Most companies may have knowledge in one or two areas, but not all three. Therefore, they must develop and hire in the areas where they lack experience.

Functional safety expertise is hard to obtain because engineers with that knowledge are in short supply. Many of the experts may not be proficient in the types of highly complex semiconductors that the automotive industry must create.

At this point, the road to autonomous driving SoCs passes through the executive office of the developer. There are simply not enough skilled people in each of these disciplines to hire away from other companies, and there are even fewer who are capable of putting it all together.

The software will get the media “buzz” in the autonomous driving era because it has lower barriers to entry than hardware development and is easier for investors to understand. However, it’s the semiconductor developers who are truly innovating by devising custom hardware finely tuned to execute complex software.

These teams have already created ADAS systems that provide a model for successfully integrating the three layers of complexity: safety, latency, and performance. These systems hint at what autonomous driving SoCs will look like: a tightly coupled hardware-software design optimized for efficient code execution; a cache-coherent SoC architecture supporting deep-machine learning; multiple heterogeneous processing elements; and an intelligent approach to implementing functional safety without sacrificing power efficiency, performance, or area.

Are you ready? Start your engines, and don’t be afraid to stop and ask for directions along the way.
