As system-on-chip (SoC) designs continue to grow in complexity and integration, there is a constant push to adopt new system design methodologies in order to meet product schedules. Devices have gotten more complex, but the time allotted for their development has not increased. If anything, schedules have compressed under the constant push to get new and better products into the marketplace as early as possible.

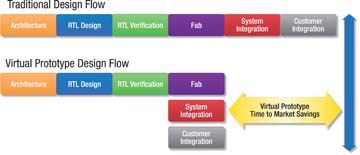

One of the most visible areas where this is happening is mobile, where ARM processors dominate. As ARM processors are adopted more widely in other market segments such as personal computing, servers, and tablets, the design approaches used to roll out mobile devices quickly are being adopted in these product areas as well. One of the key technologies seeing broader adoption is the virtual prototype (see Fig. 1).

Fig 1: Virtual prototypes are one of the key technologies seeing broader adoption.

Optimization starts at the beginning

Virtual prototypes can be used to optimize multiple parts of an SoC design, starting with the architecture of the chip itself. The performance of a design depends largely on the performance of the data path between the processor cores and the memory subsystem. Many highly configurable components in this path can affect performance, as can the interdependencies between their parameters. Increasing the throughput on one data path may hurt other components, and maximizing throughput on every path may produce a design that consumes too much power to be usable. Virtual prototypes play a crucial part in optimizing this vital subsystem.

Traditionally, this optimization has been done with a cycle-accurate model of the interconnect component(s) at the heart of the system, while traffic generators represent the other components. The traffic generators are then replaced by actual models of the processors and memory controller blocks to get a more accurate picture of real performance. Accuracy is critical when making these design decisions: numerous design projects have failed because of faulty architectural decisions based on models that were less than 100% accurate.
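
The traditional setup can be sketched in a few lines. This is a toy illustration, not a real cycle-accurate model: `InterconnectModel` is a hypothetical single-port queue standing in for the interconnect, `traffic_generator` is a hypothetical stateless generator, and the cycle counts are illustrative assumptions. It shows the kind of question such a setup answers, namely how latency changes as the injected traffic rate rises.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Transaction:
    issue_cycle: int  # cycle at which the master issues the request
    kind: str         # "read" or "write"
    addr: int


def traffic_generator(period: int, count: int, base: int = 0x8000_0000) -> Iterator[Transaction]:
    """Hypothetical stateless generator: alternates reads and writes every `period` cycles."""
    for i in range(count):
        kind = "read" if i % 2 == 0 else "write"
        yield Transaction(issue_cycle=i * period, kind=kind, addr=base + 64 * i)


class InterconnectModel:
    """Toy stand-in for a cycle-accurate interconnect: a single port that
    serves one transaction at a time for `service_cycles` cycles each."""

    def __init__(self, service_cycles: int) -> None:
        self.service_cycles = service_cycles
        self.port_free_at = 0

    def submit(self, txn: Transaction) -> int:
        start = max(txn.issue_cycle, self.port_free_at)  # stall while the port is busy
        self.port_free_at = start + self.service_cycles
        return self.port_free_at - txn.issue_cycle       # latency seen by the master


def average_latency(period: int, service_cycles: int, count: int = 100) -> float:
    interconnect = InterconnectModel(service_cycles)
    latencies = [interconnect.submit(t) for t in traffic_generator(period, count)]
    return sum(latencies) / len(latencies)


if __name__ == "__main__":
    # Sweep the injection rate: latency stays flat until requests arrive
    # faster than the port can serve them, then queueing delay grows.
    for period in (8, 4, 2):
        print(f"period={period}: avg latency={average_latency(period, service_cycles=4):.1f} cycles")
```

Replacing `traffic_generator` with real processor and memory-controller models is what turns this kind of sweep into a trustworthy architectural decision.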

The latest generation of coherency extensions that ARM has added to the AMBA bus has changed how interconnect optimization must be done. Whereas cache coherency used to be managed entirely in software, it can now be managed in hardware, which has increased the number of read and write transaction types from a handful to more than 20.

This means that maintaining coherent system interaction is beyond the reach of even the most complex traffic generators. Accordingly, a virtual prototype used to optimize the latest generation of interconnects can no longer be just an accurate interconnect model surrounded by traffic generators. Correct optimization is now possible only when the traffic generators are replaced with implementation-accurate models of all the coherent components.
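
To see why a stateless traffic generator cannot stand in for a coherent master, consider a minimal sketch of a snooping protocol. It assumes a simplified MSI scheme with two caches (the `Cache` and `SnoopingBus` classes are hypothetical), far simpler than the AMBA coherency extensions, but it shows the key point: the response to an identical read depends on state held in other masters' caches, which only a model of those masters can supply.

```python
from enum import Enum
from typing import Dict, List


class State(Enum):
    I = "Invalid"
    S = "Shared"
    M = "Modified"


class Cache:
    def __init__(self, name: str) -> None:
        self.name = name
        self.lines: Dict[int, State] = {}

    def state(self, addr: int) -> State:
        return self.lines.get(addr, State.I)


class SnoopingBus:
    """Simplified MSI snooping protocol (illustrative, not AMBA coherency)."""

    def __init__(self, caches: List[Cache]) -> None:
        self.caches = caches

    def read(self, requester: Cache, addr: int) -> str:
        """Return where the data came from: 'hit', 'memory', or another cache's name."""
        if requester.state(addr) is not State.I:
            return "hit"
        source = "memory"
        for other in self.caches:
            if other is not requester and other.state(addr) is State.M:
                other.lines[addr] = State.S  # owner supplies dirty data and downgrades
                source = other.name
        requester.lines[addr] = State.S
        return source

    def write(self, requester: Cache, addr: int) -> None:
        for other in self.caches:
            if other is not requester:
                other.lines[addr] = State.I  # invalidate every other copy
        requester.lines[addr] = State.M


if __name__ == "__main__":
    cpu0, cpu1 = Cache("cpu0"), Cache("cpu1")
    bus = SnoopingBus([cpu0, cpu1])
    print(bus.read(cpu1, 0x40))  # no other cache holds the line
    bus.write(cpu0, 0x40)        # cpu0 -> Modified, cpu1 -> Invalid
    print(bus.read(cpu1, 0x40))  # same request, now served by cpu0
```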

Enabling early software development

At the other end of the spectrum, virtual prototypes can enable early development of the software that will run on the SoC. Here the requirements are different. Whereas the system architect needs accuracy to make design decisions, the software developer needs execution speed far more than hardware accuracy. The virtual prototype used for software development must still be functionally accurate, but hardware details and timing behavior can be optimized away.
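
As a sketch of what "functionally accurate but untimed" means, here is a hypothetical memory-mapped UART modeled for software development. The register offsets, the `TX_READY` bit and the `uart_puts` driver routine are illustrative assumptions, not any real device's interface. Writes take effect immediately and the status register always reports ready, so software sees correct behavior without any simulated baud-rate timing.

```python
class UartFunctionalModel:
    """Hypothetical UART modeled for speed: no baud-rate or bus timing is simulated."""

    DATA = 0x00     # hypothetical register offsets
    STATUS = 0x04
    TX_READY = 0x1  # hypothetical status bit

    def __init__(self) -> None:
        self.output = bytearray()

    def write(self, offset: int, value: int) -> None:
        if offset == self.DATA:
            self.output.append(value & 0xFF)  # byte is "transmitted" instantly

    def read(self, offset: int) -> int:
        if offset == self.STATUS:
            return self.TX_READY              # transmitter is always ready
        return 0


def uart_puts(uart: UartFunctionalModel, text: str) -> None:
    """Driver-style routine: poll TX_READY, then write each byte."""
    for ch in text.encode("ascii"):
        while not (uart.read(uart.STATUS) & uart.TX_READY):
            pass
        uart.write(uart.DATA, ch)


if __name__ == "__main__":
    uart = UartFunctionalModel()
    uart_puts(uart, "boot ok")
    print(uart.output.decode("ascii"))
```

A timed model of the same UART would clear `TX_READY` while each byte is sent. The driver above would still work there, but a driver that skipped the status poll could pass on this functional model and fail only on an accurate one, which is why the lower layers of the stack need hardware accuracy.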

Freed from the need to represent the implementation accurately, these high-speed virtual prototypes are often available well before the device itself is implemented, and they enable a good portion of the application software to be developed before silicon is available. However, application software is only the top of the software stack. The lower levels of the stack must still be coded correctly before the product ships, and there the need for hardware accuracy arises again.

Tying together speed and accuracy

These middle levels of the software stack define the interface between hardware and software. Teams use different terms for these layers, but they typically comprise OS drivers, firmware, and diagnostics: in short, any software routine directly affected by the hardware it communicates with. This software determines how the system performs and how well it interacts with the hardware.

Virtual prototypes are often used in this critical area, but the use model varies. Software at this level needs both the speed of the higher-level virtual prototypes and the accuracy of the prototypes used by architecture teams. The payoff can be tremendous, because the design team can be confident that the software will work on silicon the first day it is back in the lab.

Getting to that endpoint can be challenging since there is a natural tradeoff between speed and accuracy. The more accurate a virtual prototype is, the slower it runs.

A variety of approaches can deliver a virtual prototype that is both fast and accurate. Numerous vendors are currently promoting hybrid solutions that combine the speed of high-level virtual prototypes with the accuracy of hardware prototypes such as emulators or FPGA boards. While these approaches bring together hardware accuracy and virtual speed, they do so by sacrificing much of the original value of the virtual prototype. Debug visibility is limited, and tying a low-cost virtual prototype to a hardware resource drives up its cost by orders of magnitude, which limits how widely it can be deployed and further reduces debug throughput.

Hybrid virtual prototypes, by contrast, solve the speed-versus-accuracy problem by combining fast and accurate models within a single virtual prototype. Multiple approaches can be used, depending on the needs of the design team and the availability of models. The simplest is to use high-speed models for components that do not require accuracy and accurate models for components that require more design detail.
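
The simplest hybrid approach can be sketched as a platform that routes each bus access to whichever model owns the address range. In this illustrative sketch, `FastMemory` is a functional model that charges no time and `AccurateBlock` is a placeholder for an implementation-accurate model, such as one compiled from RTL; the class names, address map and 12-cycle latency are all hypothetical.

```python
from typing import Dict, List, Protocol, Tuple


class Model(Protocol):
    def access(self, offset: int, write: bool, value: int = 0) -> Tuple[int, int]:
        """Return (read data, cycles consumed)."""
        ...


class FastMemory:
    """Functional model: correct data, no timing."""

    def __init__(self) -> None:
        self.mem: Dict[int, int] = {}

    def access(self, offset: int, write: bool, value: int = 0) -> Tuple[int, int]:
        if write:
            self.mem[offset] = value
            return 0, 0
        return self.mem.get(offset, 0), 0


class AccurateBlock:
    """Placeholder for an implementation-accurate model; here it simply
    charges a fixed, hypothetical latency per access."""

    def __init__(self, latency: int) -> None:
        self.latency = latency
        self.regs: Dict[int, int] = {}

    def access(self, offset: int, write: bool, value: int = 0) -> Tuple[int, int]:
        if write:
            self.regs[offset] = value
            return 0, self.latency
        return self.regs.get(offset, 0), self.latency


class HybridPlatform:
    """Routes each access to the model that owns the address range."""

    def __init__(self) -> None:
        self.regions: List[Tuple[int, int, Model]] = []
        self.cycles = 0  # only accurate models add to this count

    def map(self, base: int, size: int, model: Model) -> None:
        self.regions.append((base, size, model))

    def access(self, addr: int, write: bool = False, value: int = 0) -> int:
        for base, size, model in self.regions:
            if base <= addr < base + size:
                data, cycles = model.access(addr - base, write, value)
                self.cycles += cycles
                return data
        raise ValueError(f"unmapped address {addr:#x}")


if __name__ == "__main__":
    soc = HybridPlatform()
    soc.map(0x0000_0000, 0x1000_0000, FastMemory())          # fast region
    soc.map(0x4000_0000, 0x1000, AccurateBlock(latency=12))  # accurate region
    soc.access(0x100, write=True, value=7)
    print(soc.access(0x100), soc.cycles)   # fast accesses add no cycles
    soc.access(0x4000_0010, write=True, value=1)
    print(soc.cycles)                      # accurate access adds its latency
```

Charging zero cycles for the fast model is a simplification; loosely timed models often charge an approximate time instead.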

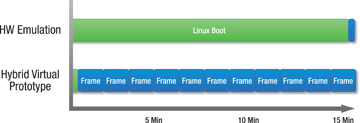

Fig 2: The hybrid virtual prototype boots Linux in just 15 to 20 seconds, versus 15 to 20 minutes for a hardware emulator.

Fig. 2 shows the performance of a graphics SoC containing a Cortex-A9 processor and a Mali-400 GPU. The virtual prototype for this system used ARM Fast Models for the Cortex-A9 and the memory subsystem, while the Mali GPU model was compiled from register transfer level (RTL) code. The runtime speed of the virtual prototype varied with the resources used during execution. The OS boot required little interaction between the processor and the GPU, so Linux booted in 15 to 20 seconds. Processing graphics frames slowed execution because the GPU model then dominated performance. The graph compares this with the same system running on an emulator, where the Linux boot took 15 to 20 minutes. In other words, the virtual prototype could boot Linux and process more than 10 graphics frames while the OS was still booting on the emulator.
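
As a back-of-the-envelope check on the boot times quoted above, matching the endpoints of the two ranges gives:

$$
\frac{15\ \text{min}}{15\ \text{s}} = \frac{900\ \text{s}}{15\ \text{s}} = 60,
\qquad
\frac{20\ \text{min}}{20\ \text{s}} = \frac{1200\ \text{s}}{20\ \text{s}} = 60
$$

So the hybrid virtual prototype boots roughly 60 times faster than the emulator. Pairing the extremes of the two ranges bounds the ratio between 900/20 = 45 and 1200/15 = 80.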

Another approach to the speed/accuracy tradeoff uses fast, functionally accurate models to run to a specific point of interest and then switches to a more accurate model to continue execution. This approach is especially useful for tasks such as system benchmarking. Accurate benchmark results require the entire system to be represented with 100% cycle accuracy, but reaching the benchmark depends on an OS boot or another long software process that doesn't need that level of accuracy. Swapping model representations lets virtual prototypes solve complex system problems efficiently without requiring access to expensive hardware resources.
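
The run-then-swap approach can be sketched with a toy program in which each instruction carries a hypothetical cycle cost. `FastModel` executes the boot portion functionally and ignores timing; at the point of interest (`stop_pc`), the shared `ArchState` is handed to `AccurateModel`, which counts cycles only for the benchmark region. The instruction set, the costs and all class names are illustrative assumptions. A real swap must also transfer or warm up microarchitectural state such as cache contents, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical toy program: each instruction is (opcode, operand, cycle_cost).
Program = List[Tuple[str, int, int]]


@dataclass
class ArchState:
    """Architectural state that both models understand and can hand off."""
    pc: int = 0
    acc: int = 0


def execute(instr: Tuple[str, int, int], state: ArchState) -> None:
    op, operand, _cost = instr
    if op == "add":
        state.acc += operand
    elif op == "mul":
        state.acc *= operand
    state.pc += 1


class FastModel:
    """Functionally accurate and untimed: runs up to the point of interest."""

    def run_until(self, program: Program, state: ArchState, stop_pc: int) -> None:
        while state.pc < stop_pc:
            execute(program[state.pc], state)


class AccurateModel:
    """Charges each instruction's (hypothetical) cycle cost."""

    def __init__(self) -> None:
        self.cycles = 0

    def run_to_end(self, program: Program, state: ArchState) -> None:
        while state.pc < len(program):
            self.cycles += program[state.pc][2]
            execute(program[state.pc], state)


if __name__ == "__main__":
    boot: Program = [("add", 1, 1)] * 1000    # long, timing-insensitive phase
    benchmark: Program = [("mul", 2, 3)] * 4  # region we want cycle counts for
    program = boot + benchmark

    state = ArchState()
    FastModel().run_until(program, state, stop_pc=len(boot))
    accurate = AccurateModel()
    accurate.run_to_end(program, state)       # continues from the handed-off state
    print(state.acc, accurate.cycles)
```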
